Haohao Hu

XD-MAP: Cross-Modal Domain Adaptation using Semantic Parametric Mapping

Jan 20, 2026

Abstract: Until open-world foundation models match the performance of specialized approaches, the effectiveness of deep learning models remains heavily dependent on dataset availability. Training data must align not only with the target object categories but also with the sensor characteristics and modalities. To bridge the gap between available datasets and deployment domains, domain adaptation strategies are widely used. In this work, we propose a novel approach to transferring sensor-specific knowledge from an image dataset to LiDAR, an entirely different sensing domain. Our method, XD-MAP, leverages detections from a neural network on camera images to create a semantic parametric map. The map elements are modeled to produce pseudo labels in the target domain without any manual annotation effort. Unlike previous domain transfer approaches, our method does not require direct overlap between sensors and enables extending the angular perception range from a front-view camera to a full 360-degree view. On our large-scale road feature dataset, XD-MAP outperforms single-shot baseline approaches by +19.5 mIoU for 2D semantic segmentation, +19.5 PQth for 2D panoptic segmentation, and +32.3 mIoU in 3D semantic segmentation. The results demonstrate the effectiveness of our approach, which achieves strong performance on LiDAR data without any manual labeling.
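For readers unfamiliar with the metric quoted in this abstract, the following is a minimal sketch of how mean intersection-over-union (mIoU) is commonly computed for semantic segmentation. The function name `mean_iou` and the flat label lists are illustrative assumptions and are not taken from XD-MAP; gains such as +19.5 mIoU are typically stated in percentage points of this quantity.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection-over-union across classes that occur in pred or target.

    pred and target are equal-length sequences of integer class labels
    (hypothetical flat layout; real pipelines usually work on label arrays).
    """
    ious = []
    for c in range(num_classes):
        # Elements labelled c in both prediction and ground truth.
        intersection = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        # Elements labelled c in either prediction or ground truth.
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # skip classes that never appear
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else float("nan")


if __name__ == "__main__":
    prediction = [0, 0, 1, 1]
    ground_truth = [0, 1, 1, 1]
    print(mean_iou(prediction, ground_truth, num_classes=2))
```

Classes absent from both prediction and ground truth are skipped here; other conventions exist, so reported numbers depend on which one an evaluation uses.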

Robust Self-Tuning Data Association for Geo-Referencing Using Lane Markings

Jul 28, 2022

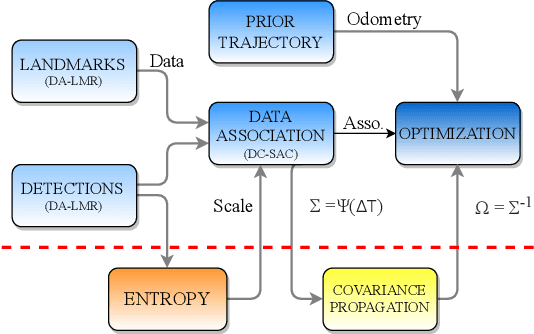

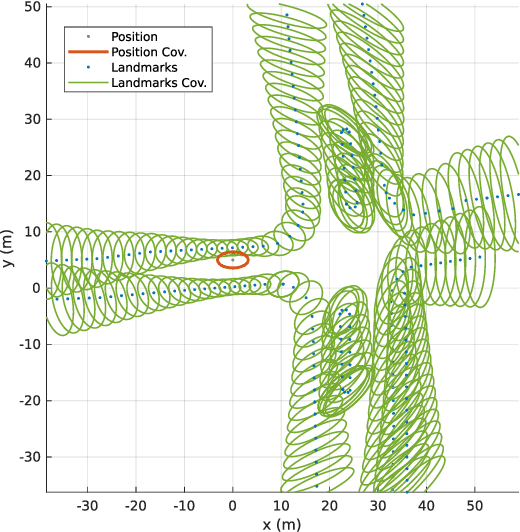

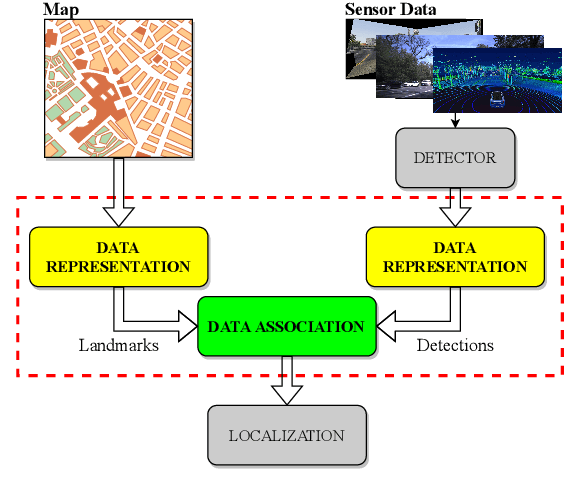

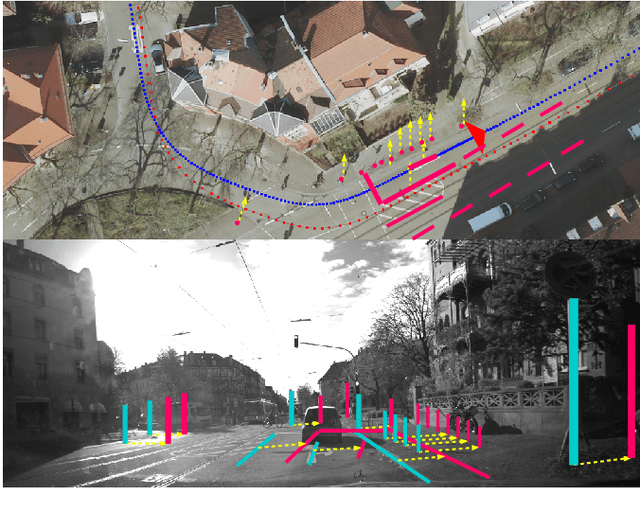

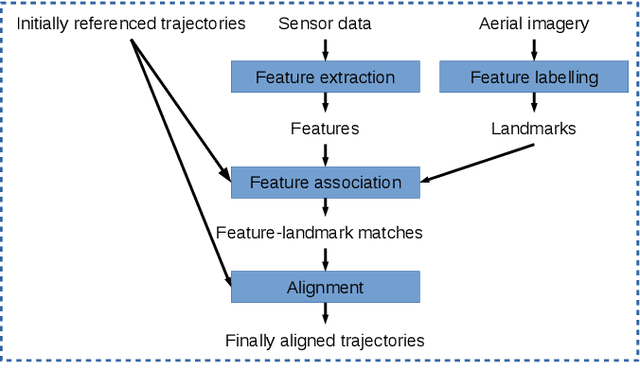

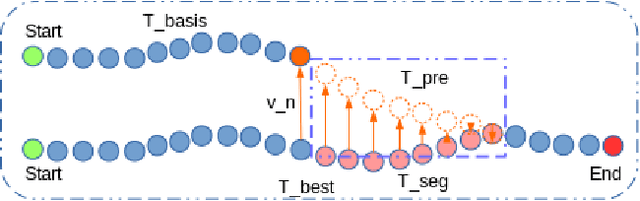

Abstract: Localization in aerial imagery-based maps offers many advantages, such as global consistency, geo-referenced maps, and the availability of publicly accessible data. However, the set of landmarks that can be observed from both aerial imagery and on-board sensors is limited. This leads to ambiguities or aliasing during data association. Building upon a highly informative representation that allows efficient data association, this paper presents a complete pipeline for resolving these ambiguities. Its core is a robust self-tuning data association that adapts the search area depending on the entropy of the measurements. Additionally, to smooth the final result, we adjust the information matrix for the associated data as a function of the relative transform produced by the data association process. We evaluate our method on real data from urban and rural scenarios around the city of Karlsruhe in Germany. We compare state-of-the-art outlier mitigation methods with our self-tuning approach, demonstrating a considerable improvement, especially for outer-urban scenarios.

Improving Lidar-Based Semantic Segmentation of Top-View Grid Maps by Learning Features in Complementary Representations

Mar 02, 2022

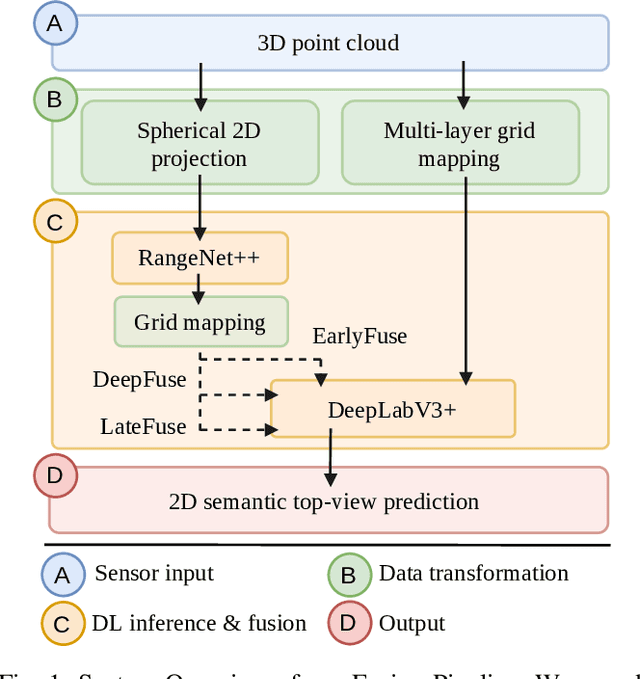

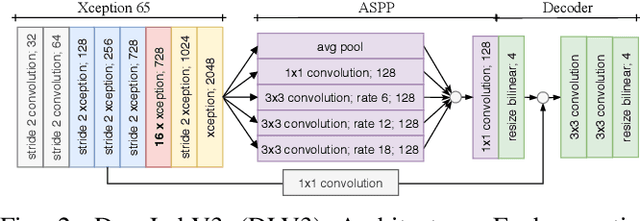

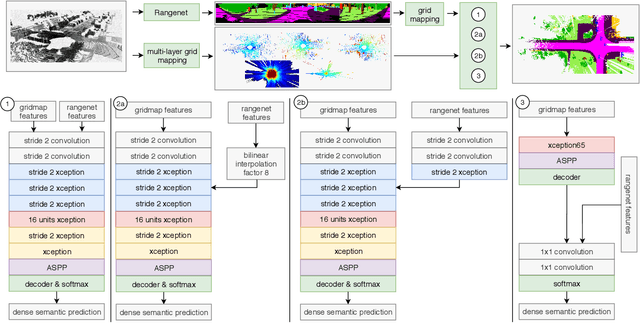

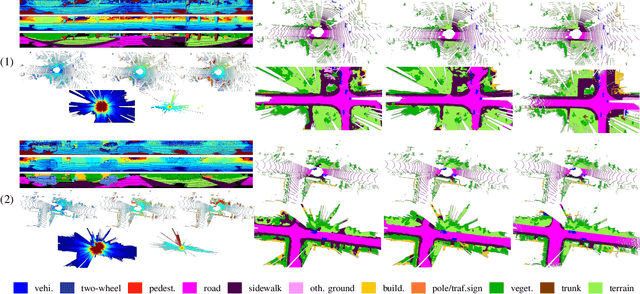

Abstract: In this paper, we introduce a novel way to predict semantic information from sparse, single-shot LiDAR measurements in the context of autonomous driving. In particular, we fuse learned features from complementary representations. The approach is aimed specifically at improving the semantic segmentation of top-view grid maps. Towards this goal, the 3D LiDAR point cloud is projected onto two orthogonal 2D representations. For each representation, a tailored deep learning architecture is developed to effectively extract semantic information, which is then fused by a superordinate deep neural network. The contribution of this work is threefold: (1) We examine different stages within the segmentation network for fusion. (2) We quantify the impact of embedding different features. (3) We use the findings of this survey to design a tailored deep neural network architecture leveraging the respective advantages of the different representations. Our method is evaluated using the SemanticKITTI dataset, which provides point-wise semantic annotations for more than 23,000 LiDAR measurements.
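The projection step can be illustrated with a small sketch. The abstract does not name the two representations, so the code below assumes a top-view grid and a spherical range image, which is one common pairing; the function names, field-of-view values, and image sizes are hypothetical and chosen only for illustration.

```python
import math


def top_view_cell(x, y, cell_size, x_min, y_min):
    """Map a point's ground-plane position to (column, row) grid indices."""
    col = int(math.floor((x - x_min) / cell_size))
    row = int(math.floor((y - y_min) / cell_size))
    return col, row


def range_image_pixel(x, y, z, width, height, fov_up_deg, fov_down_deg):
    """Map a 3D point to (row, col, range) in a spherical range image."""
    r = math.sqrt(x * x + y * y + z * z)
    yaw = math.atan2(y, x)       # horizontal angle around the sensor
    pitch = math.asin(z / r)     # vertical angle above the horizon
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    # Normalise both angles to image coordinates.
    u = 0.5 * (1.0 - yaw / math.pi) * width
    v = (1.0 - (pitch - fov_down) / (fov_up - fov_down)) * height
    # Clamp to valid pixel indices.
    col = min(width - 1, max(0, int(u)))
    row = min(height - 1, max(0, int(v)))
    return row, col, r


if __name__ == "__main__":
    # Assumed sensor layout: 64 rows, 1024 columns, +3 / -25 degree vertical FOV.
    print(top_view_cell(10.3, -2.1, cell_size=0.5, x_min=-50.0, y_min=-50.0))
    print(range_image_pixel(10.0, 0.0, 0.0, 1024, 64, 3.0, -25.0))
```

The top view preserves metric distances on the ground plane, while the range image keeps the dense neighbourhood structure of the scan; that difference is what makes the two views complementary.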

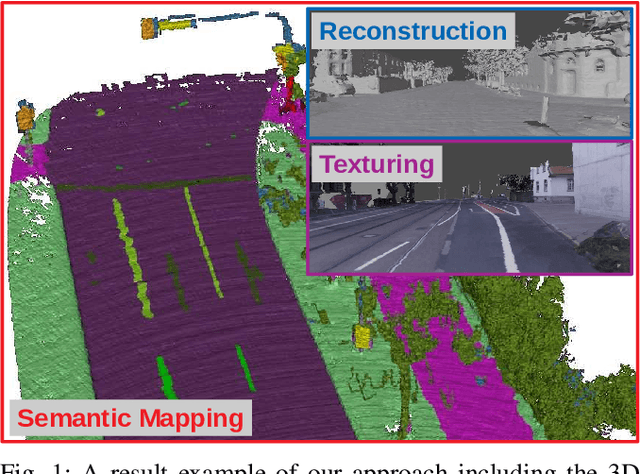

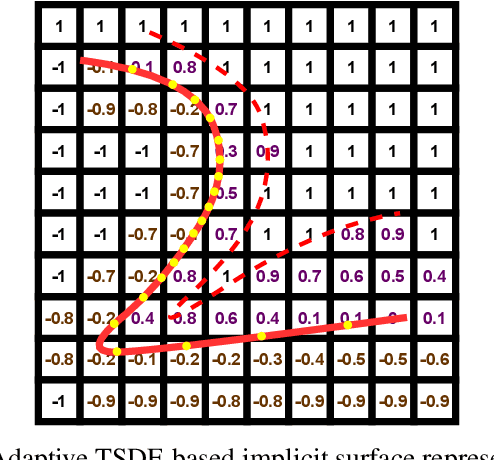

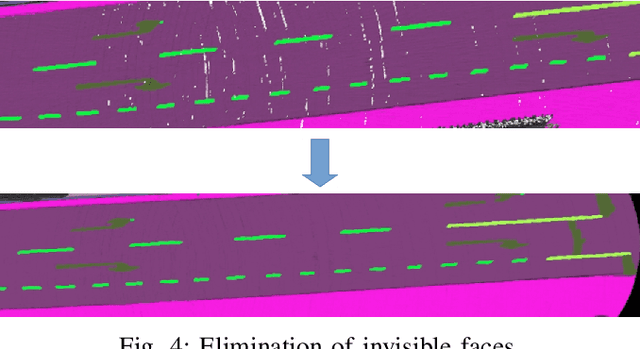

Large-Scale 3D Semantic Reconstruction for Automated Driving Vehicles with Adaptive Truncated Signed Distance Function

Feb 28, 2022

Abstract: Large-scale 3D reconstruction, texturing and semantic mapping are nowadays widely used for automated driving vehicles, virtual reality and automatic data generation. However, most approaches are developed for RGB-D cameras with colored dense point clouds and are not suitable for large-scale outdoor environments using sparse LiDAR point clouds. Since a 3D surface can usually be observed from multiple camera images with different view poses, selecting an optimal image patch for texturing and estimating an optimal semantic class for semantic mapping are still challenging. To address these problems, we propose a novel 3D reconstruction, texturing and semantic mapping system using LiDAR and camera sensors. An Adaptive Truncated Signed Distance Function is introduced to describe surfaces implicitly, which can deal with different LiDAR point sparsities and improve model quality. The triangle mesh map extracted from this implicit function is then textured from a series of registered camera images by applying an optimal image patch selection strategy. In addition, a Markov Random Field-based data fusion approach is proposed to estimate the optimal semantic class for each triangle mesh. Our approach is evaluated on a synthetic dataset, the KITTI dataset and a dataset recorded with our experimental vehicle. The results show that the 3D models generated using our approach are more accurate than those produced by other state-of-the-art approaches. The texturing and semantic mapping also achieve very promising results.
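As background for the implicit surface description, here is a minimal sketch of a standard (non-adaptive) truncated signed distance function with a running weighted average per voxel. The abstract does not say how the truncation is adapted to point sparsity, so that part is not modelled; `truncated_sdf` and `fuse_observation` are illustrative names, not the authors' API.

```python
def truncated_sdf(voxel_depth, surface_depth, truncation):
    """Signed distance from a voxel to the measured surface along one ray.

    Positive in front of the surface, negative behind it, scaled by the
    truncation distance and clamped to [-1, 1].
    """
    sdf = surface_depth - voxel_depth
    return max(-1.0, min(1.0, sdf / truncation))


def fuse_observation(tsdf, weight, new_tsdf, new_weight=1.0):
    """Fold a new TSDF observation into a voxel's running weighted average."""
    total = weight + new_weight
    fused = (tsdf * weight + new_tsdf * new_weight) / total
    return fused, total


if __name__ == "__main__":
    # A voxel 0.1 m in front of a surface measured at 10 m, truncation 0.5 m.
    first = truncated_sdf(9.9, 10.0, 0.5)
    # A second, slightly different range measurement along the same ray.
    second = truncated_sdf(9.9, 10.1, 0.5)
    print(fuse_observation(first, 1.0, second))
```

A fixed truncation distance that suits dense depth images can leave holes when LiDAR returns are sparse, which is presumably the motivation for making it adaptive.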

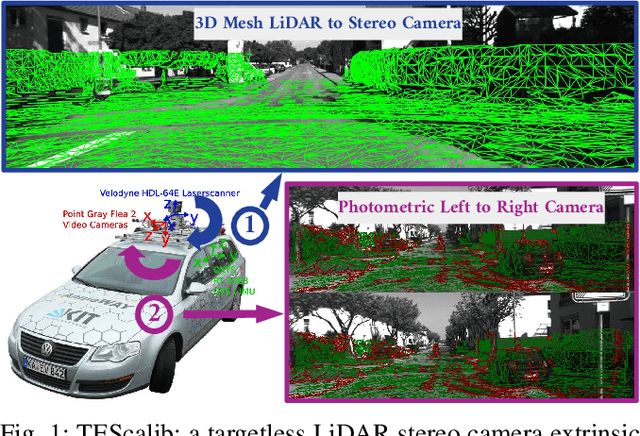

TEScalib: Targetless Extrinsic Self-Calibration of LiDAR and Stereo Camera for Automated Driving Vehicles with Uncertainty Analysis

Feb 28, 2022

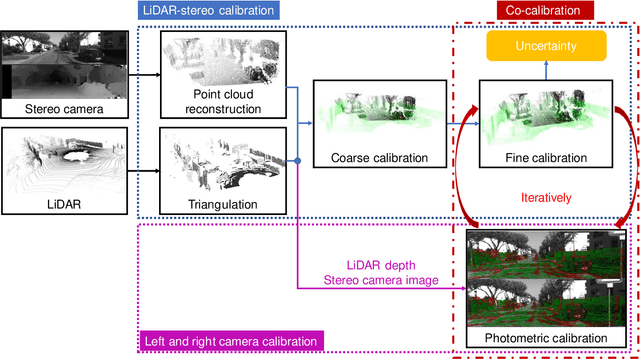

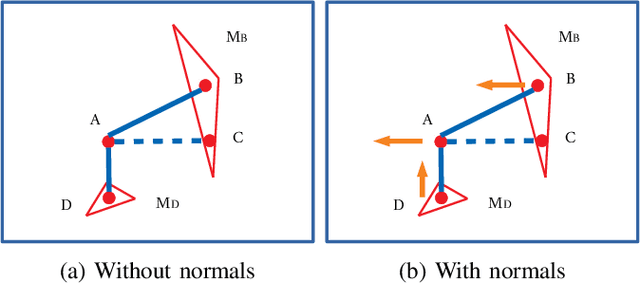

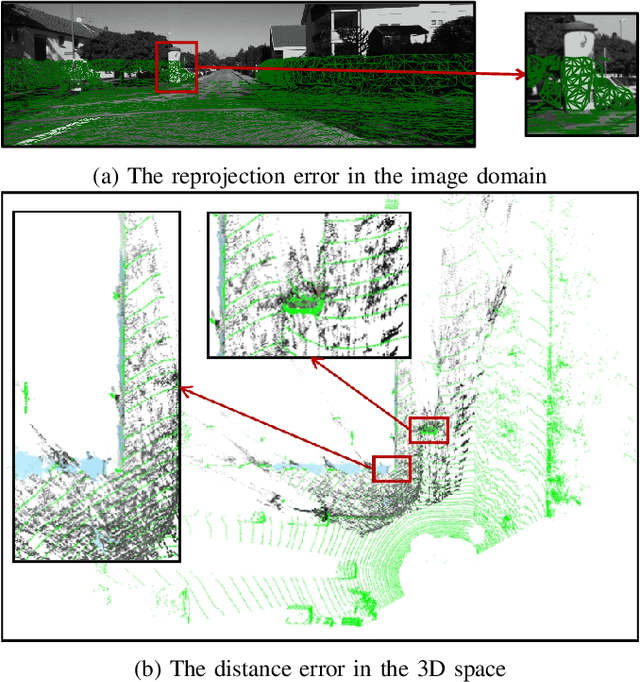

Abstract: In this paper, we present TEScalib, a novel extrinsic self-calibration approach for LiDAR and stereo cameras that uses the geometric and photometric information of the surrounding environment, without any calibration targets, for automated driving vehicles. Since LiDAR and stereo cameras are widely used for sensor data fusion on automated driving vehicles, their extrinsic calibration is highly important. However, most LiDAR and stereo camera calibration approaches are target-based and therefore time-consuming. Even the targetless approaches developed in recent years are either inaccurate or unsuitable for driving platforms. To address these problems, we introduce TEScalib. By applying a 3D mesh reconstruction-based point cloud registration, the geometric information is used to estimate the LiDAR-to-stereo-camera extrinsic parameters accurately and robustly. To calibrate the stereo camera, a photometric error function is built and the LiDAR depth is used to transform key points from one camera to the other. During driving, these two parts are processed iteratively. In addition, we propose an uncertainty analysis that reflects the reliability of the estimated extrinsic parameters. Evaluated on the KITTI dataset, our TEScalib approach achieves very promising results.

DA-LMR: A Robust Lane Markings Representation for Data Association Methods

Nov 17, 2021

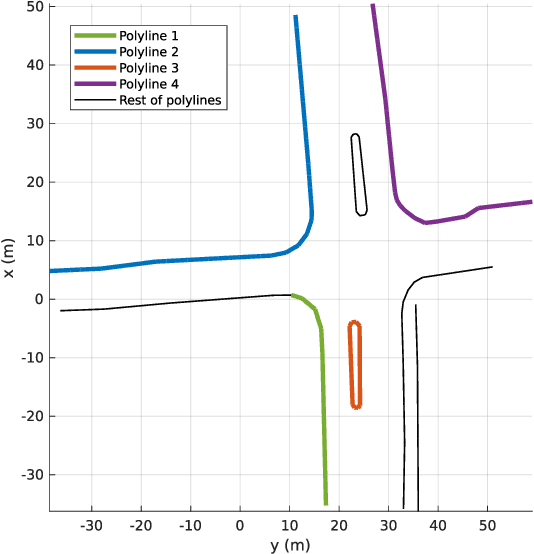

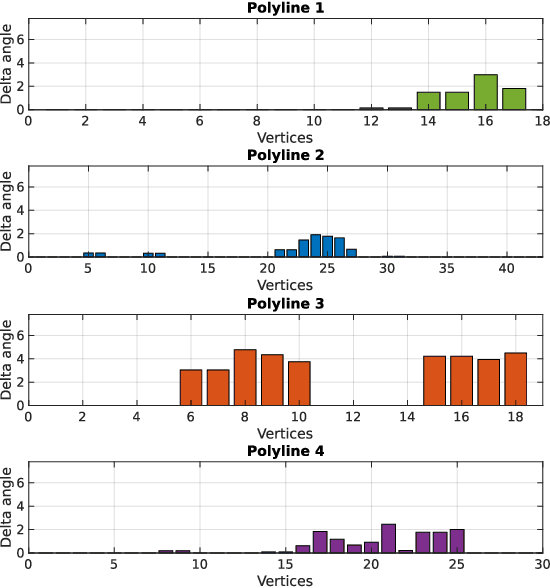

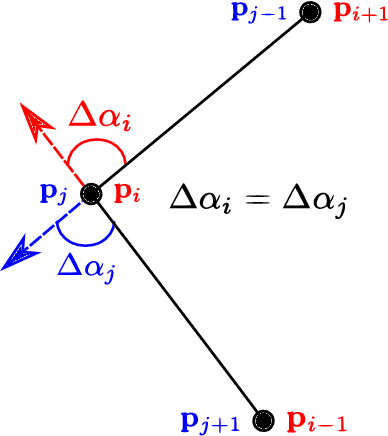

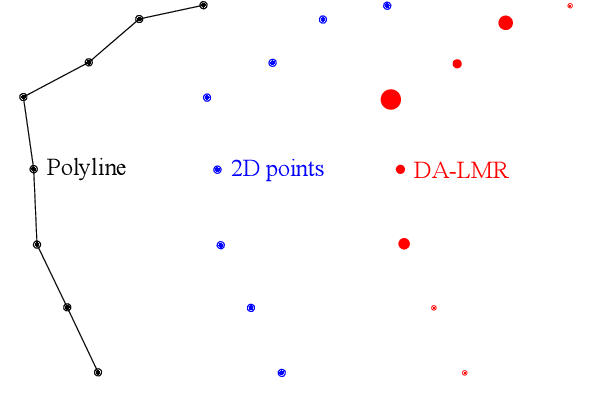

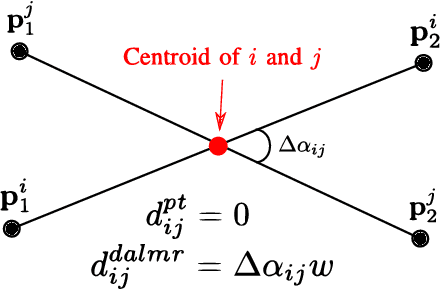

Abstract: While complete localization approaches are widely studied in the literature, their data association and data representation subprocesses usually go unnoticed. However, both are a key part of the final pose estimation. In this work, we present DA-LMR (Delta-Angle Lane Markings Representation), a robust data representation in the context of localization approaches. We propose a representation of lane markings that encodes how a curve changes at each point and includes this information in an additional dimension, thus providing a more detailed description of the geometric structure of the data. We also propose DC-SAC (Distance-Compatible Sample Consensus), a data association method. This is a heuristic version of RANSAC that dramatically reduces the hypothesis space through distance compatibility restrictions. We compare the presented methods with some state-of-the-art data representation and data association approaches in different noisy scenarios. DA-LMR and DC-SAC form the most promising combination among those compared, reaching 98.1% precision and 99.7% recall for noisy data with a standard deviation of 0.5 m.
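The two ideas in this abstract can be sketched as follows. This is only one plausible reading: the turning angle at each polyline vertex stands in for "how a curve changes at each point", and a pairwise length check stands in for the "distance compatibility restrictions". The exact definitions used by DA-LMR and DC-SAC are not given in the abstract, and both function names are hypothetical.

```python
import math


def delta_angle_representation(points):
    """Append the turning angle at each vertex as a third coordinate.

    points is a list of (x, y) tuples along a lane marking. End points have
    no defined turning angle and get 0.0 (an assumption of this sketch).
    """
    result = []
    last = len(points) - 1
    for i, (x, y) in enumerate(points):
        if 0 < i < last:
            px, py = points[i - 1]
            nx, ny = points[i + 1]
            heading_in = math.atan2(y - py, x - px)
            heading_out = math.atan2(ny - y, nx - x)
            delta = heading_out - heading_in
            # Wrap the difference into [-pi, pi].
            delta = math.atan2(math.sin(delta), math.cos(delta))
        else:
            delta = 0.0
        result.append((x, y, delta))
    return result


def distance_compatible(a1, a2, b1, b2, tolerance):
    """Check whether correspondences a1->b1 and a2->b2 preserve distance.

    A rigid transform keeps point-to-point distances, so a pair of matches
    whose distances disagree cannot both be correct and can be discarded
    before any hypothesis is evaluated.
    """
    return abs(math.dist(a1, a2) - math.dist(b1, b2)) <= tolerance


if __name__ == "__main__":
    print(delta_angle_representation([(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)]))
    print(distance_compatible((0, 0), (3, 4), (1, 1), (4, 5), tolerance=0.1))
```

Rejecting incompatible pairs before model fitting is what shrinks the hypothesis space relative to sampling correspondences uniformly at random.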

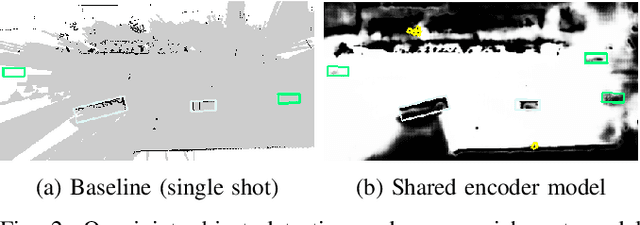

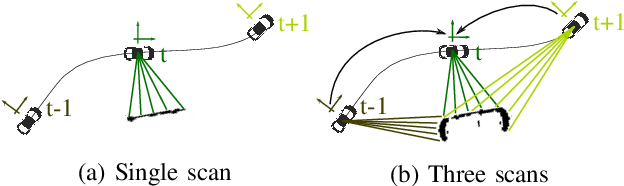

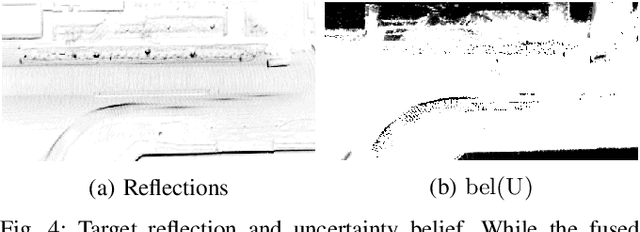

Learned Enrichment of Top-View Grid Maps Improves Object Detection

Mar 09, 2020

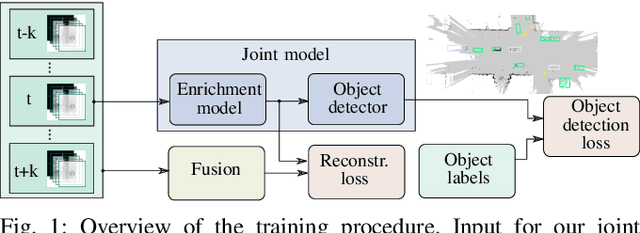

Abstract: We propose an object detector for top-view grid maps which is additionally trained to generate an enriched version of its input. Our goal in the joint model is to improve generalization by regularizing towards structural knowledge in the form of a map fused from multiple adjacent range sensor measurements. This training data can be generated automatically and thus does not require manual annotations. We present an evidential framework to generate training data, investigate different model architectures and show that predicting enriched inputs as an additional task can improve object detection performance.

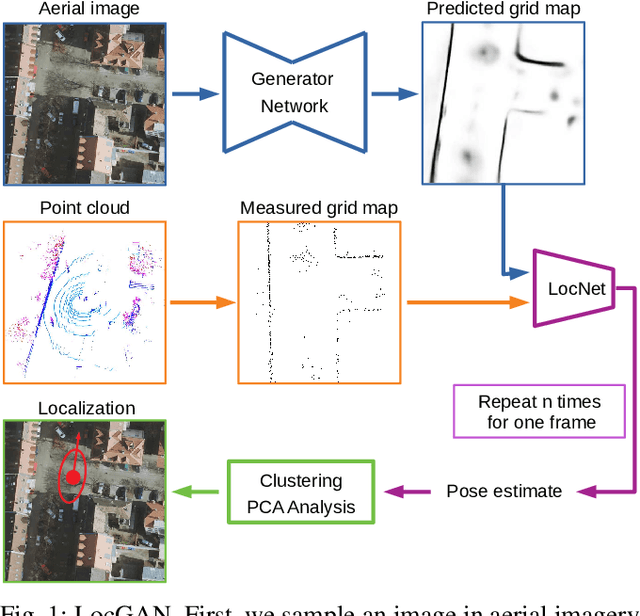

Localization in Aerial Imagery with Grid Maps using LocGAN

Jun 04, 2019

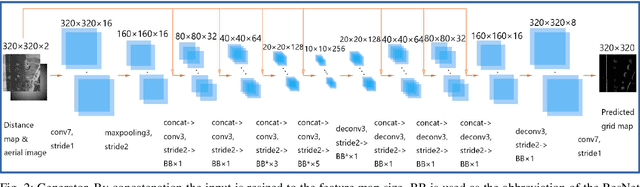

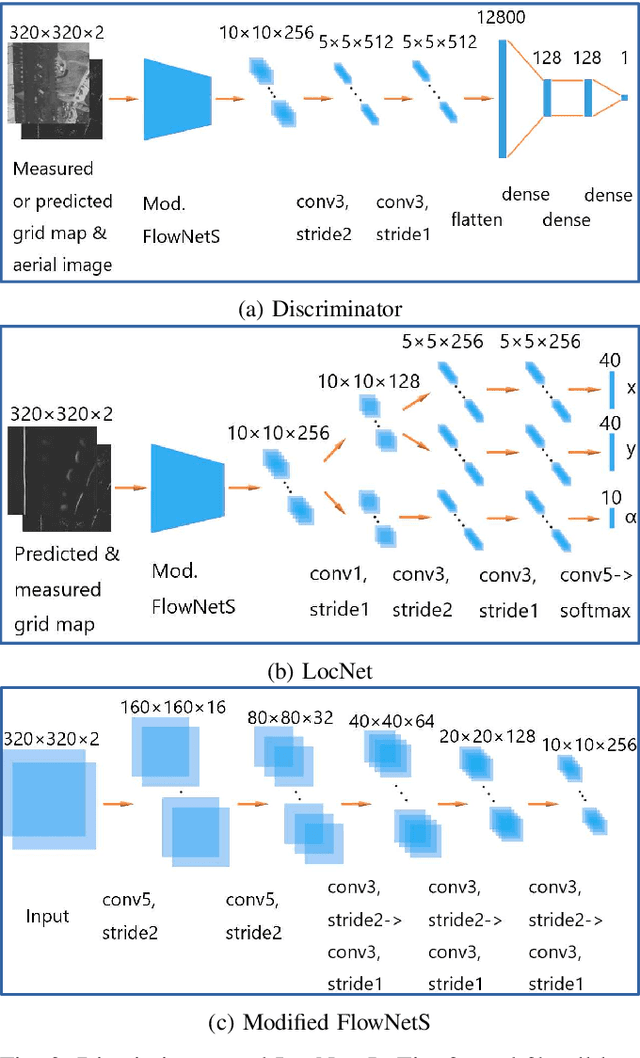

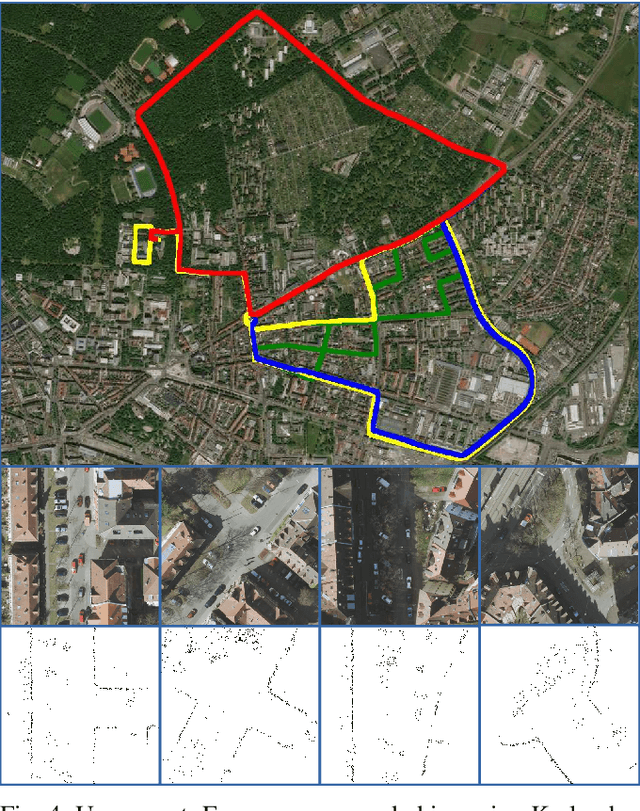

Abstract: In this work, we present LocGAN, our localization approach based on geo-referenced aerial imagery and LiDAR grid maps. Currently, most self-localization approaches relate the current sensor observations to a map generated from previously acquired data. Unfortunately, this data is not always available and the generated maps are usually specific to the sensor setup. Global Navigation Satellite Systems (GNSS) can overcome this problem. However, they are not always reliable, especially in urban areas, due to multi-path and shadowing effects. Since aerial imagery is usually available, we can use it as prior information. To match aerial images with grid maps, we use conditional Generative Adversarial Networks (cGANs), which transform aerial images to the grid map domain. The transformation between the predicted and measured grid map is estimated using a localization network (LocNet). Given the geo-referenced aerial image transformation, the vehicle pose can be estimated. Evaluations performed on data recorded in the Karlsruhe region, Germany, show that our LocGAN approach provides reliable global localization results.

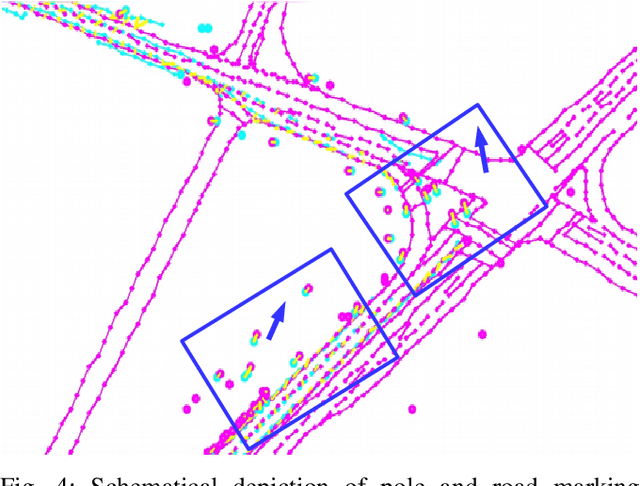

Accurate Global Trajectory Alignment using Poles and Road Markings

Mar 25, 2019

Abstract: Currently, digital maps are indispensable for automated driving. However, due to the low precision and reliability of GNSS, particularly in urban areas, fusing trajectories of independent recording sessions and different regions is a challenging task. To bypass the flaws of directly incorporating GNSS measurements for geo-referencing, the use of aerial imagery seems promising. Furthermore, more accurate geo-referencing improves the global map accuracy and allows the sensor calibration error to be estimated. In this paper, we present a novel geo-referencing approach to align trajectories to aerial imagery using poles and road markings. To robustly match features extracted from sensor observations to aerial imagery landmarks, a RANSAC-based matching approach is applied in a sliding window. For that, we assume that the trajectories are roughly referenced to the imagery, which can be achieved with coarse measurements from a low-cost GNSS receiver. Finally, we align the initial trajectories precisely to the aerial imagery by minimizing a geometric cost function comprising all determined matches. Evaluations performed on data recorded in Karlsruhe, Germany, show that our algorithm yields trajectories which are accurately referenced to the aerial imagery used.
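The matching step can be illustrated with a generic RANSAC sketch for a 2D rigid alignment. It assumes candidate matches are already given as index-aligned point lists, handles a single window rather than a sliding one, and uses a plain least-squares refit in place of the paper's geometric cost function; all function names are hypothetical.

```python
import math
import random


def fit_rigid_2d(src, dst):
    """Least-squares rotation and translation mapping src onto dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Cosine-like and sine-like sums over the centred point pairs.
    a = sum((p[0] - sx) * (q[0] - dx) + (p[1] - sy) * (q[1] - dy)
            for p, q in zip(src, dst))
    b = sum((p[0] - sx) * (q[1] - dy) - (p[1] - sy) * (q[0] - dx)
            for p, q in zip(src, dst))
    theta = math.atan2(b, a)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform(pose, p):
    """Apply a (theta, tx, ty) pose to a 2D point."""
    theta, tx, ty = pose
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def ransac_align(src, dst, threshold, iterations=200, seed=0):
    """Estimate a pose from candidate matches src[k] <-> dst[k] with outliers."""
    rng = random.Random(seed)
    indices = range(len(src))
    best = []
    for _ in range(iterations):
        # Two matches are the minimal sample for a 2D rigid transform.
        i, j = rng.sample(indices, 2)
        pose = fit_rigid_2d([src[i], src[j]], [dst[i], dst[j]])
        inliers = [k for k in indices
                   if math.dist(transform(pose, src[k]), dst[k]) <= threshold]
        if len(inliers) > len(best):
            best = inliers
    if len(best) < 2:
        raise ValueError("no consistent set of matches found")
    # Refit on all inliers of the best hypothesis.
    return fit_rigid_2d([src[k] for k in best], [dst[k] for k in best]), best


if __name__ == "__main__":
    truth = (0.1, 2.0, -1.0)
    features = [(0, 0), (10, 0), (10, 5), (0, 5), (5, 2), (3, 8)]
    landmarks = [transform(truth, p) for p in features]
    landmarks[5] = (landmarks[5][0] + 5.0, landmarks[5][1] + 5.0)  # one bad match
    print(ransac_align(features, landmarks, threshold=0.05))
```

The rough GNSS referencing mentioned in the abstract plays the role of restricting which candidate matches are proposed in the first place, which keeps the outlier ratio manageable.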

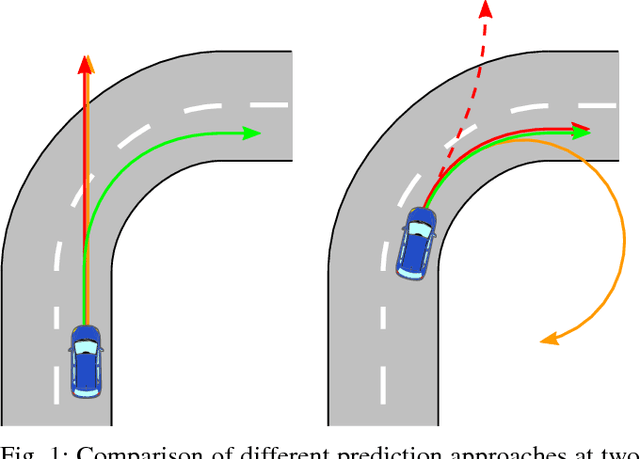

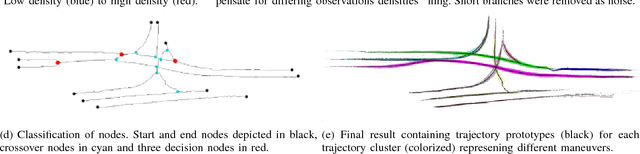

An Approach to Vehicle Trajectory Prediction Using Automatically Generated Traffic Maps

Jun 14, 2018

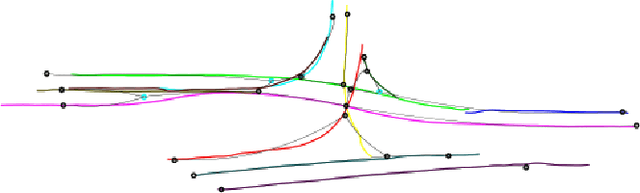

Abstract: Trajectory and intention prediction of traffic participants is an important task in automated driving and crucial for safe interaction with the environment. In this paper, we present a new approach to vehicle trajectory prediction based on automatically generated maps containing statistical information about the behavior of traffic participants in a given area. These maps are generated from trajectory observations using image processing and map matching techniques and contain all typical vehicle movements and their probabilities in the considered area. Our prediction approach matches an observed trajectory to a behavior contained in the map and uses this information to generate a prediction. We evaluated our approach on a dataset containing over 14,000 trajectories and found that it produces significantly more precise mid-term predictions than motion model-based prediction approaches.
