Fernando Torres

Automatics, Robotics and Artificial Vision Research Group, Dept. of Physics, System Engineering and Signal Theory, University of Alicante, Spain, Computer Science Research Institute, University of Alicante, Spain

ViKi-HyCo: A Hybrid-Control approach for complex car-like maneuvers

Nov 13, 2023

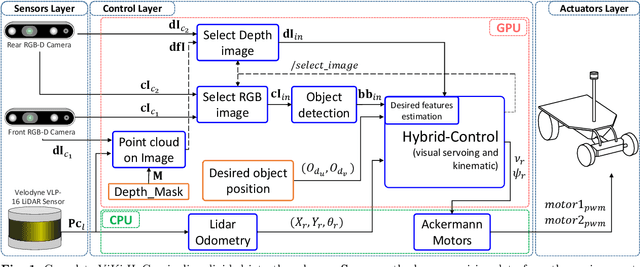

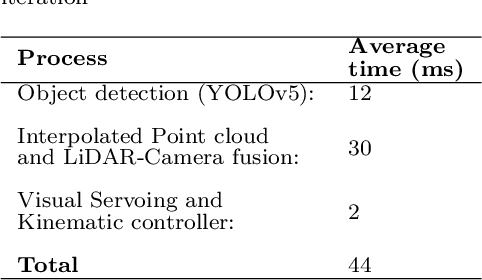

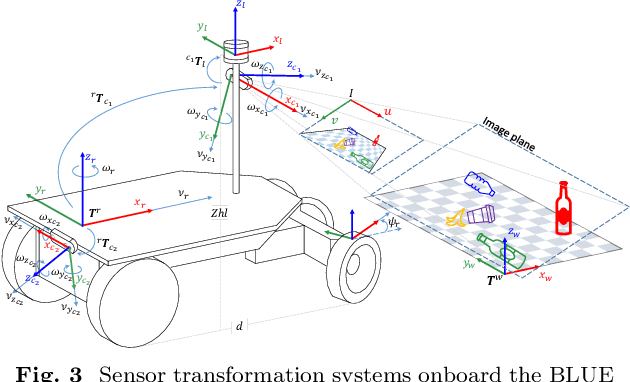

Abstract: This paper presents ViKi-HyCo (Visual servoing and Kinematic Hybrid-Controller), an approach that generates the maneuvers needed for the complex positioning of a non-holonomic mobile robot in outdoor environments. The robot is driven towards a target point obtained from object detection by combining an image-based visual servoing (IBVS) controller and a kinematic controller. The method avoids the problems the visual servoing controller faces when it loses the visual features of the detected object by switching to the kinematic controller. We also present object localization for outdoor environments that fuses LiDAR and RGB-D camera data. It estimates the spatial location of the target point for the kinematic controller and allows the desired bounding box of the detected object to be computed dynamically for the velocity calculation in the visual servoing controller. The presented approach does not require an object tracking algorithm and is applicable to any visual tracking task for a robot whose kinematic model is known. The Hybrid-Control presents an error of 0.0428 ± 0.0467 m in the X-axis and 0.0515 ± 0.0323 m in the Y-axis at the end of a complete positioning task.
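The switching idea in this abstract can be illustrated with a minimal sketch. It is not the authors' controller: the `Detection` type, the proportional control laws, the gains, and the desired image values are all hypothetical placeholders chosen only to show how a controller might fall back to a kinematic law when the detection is lost.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Detection:
    """Hypothetical detection: bounding-box centre (px) and width (px)."""
    cx: float
    width: float


def ibvs_command(det: Detection, desired_cx: float, desired_width: float,
                 gain: float = 0.5) -> Tuple[float, float]:
    # Placeholder proportional law on image-feature errors (assumption,
    # not the interaction-matrix formulation used in IBVS literature).
    v = gain * (desired_width - det.width) / desired_width  # forward speed
    w = gain * (desired_cx - det.cx) / desired_cx           # turn rate
    return v, w


def kinematic_command(target_xy: Tuple[float, float],
                      gain: float = 0.5) -> Tuple[float, float]:
    # Placeholder go-to-point law on a target expressed in the robot frame.
    x, y = target_xy
    return gain * math.hypot(x, y), gain * math.atan2(y, x)


def hybrid_command(det: Optional[Detection],
                   last_target_xy: Tuple[float, float],
                   desired_cx: float = 320.0,
                   desired_width: float = 100.0):
    # Use visual servoing while the object is detected; otherwise fall
    # back to the kinematic law driven by the last estimated target point.
    if det is not None:
        return "ibvs", ibvs_command(det, desired_cx, desired_width)
    return "kinematic", kinematic_command(last_target_xy)
```

Calling `hybrid_command(None, (3.0, 4.0))` selects the kinematic branch, while passing a `Detection` selects the visual servoing branch. In a real system the fallback target would come from the LiDAR and RGB-D localization described above.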

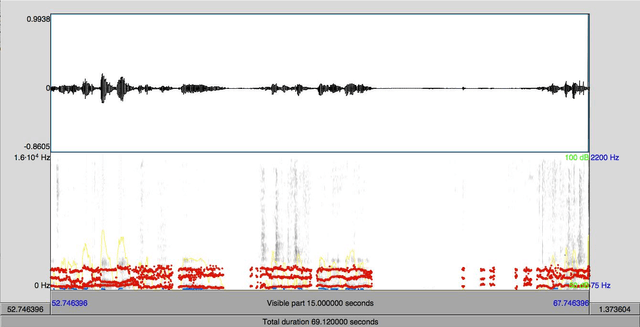

LiLO: Lightweight and low-bias LiDAR Odometry method based on spherical range image filtering

Nov 13, 2023

Abstract: In unstructured outdoor environments, robots require accurate odometry with low computation time. Existing low-bias LiDAR odometry methods are often computationally expensive. To address this problem, we present a lightweight LiDAR odometry method that converts unorganized point cloud data into a spherical range image (SRI) and filters out surface, edge, and ground features in the image plane. This substantially reduces the computation time and the number of features required for odometry estimation in LOAM-based algorithms. Our odometry estimation method does not rely on global maps or loop closure algorithms, which further reduces computational costs. Experiments yield translation and rotation errors of 0.86% and 0.0036°/m on the KITTI dataset, with an average runtime of 78 ms. In addition, we tested the method on our own data, obtaining an average closed-loop error of 0.8 m and a runtime of 27 ms over eight loops covering 3.5 km.
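As a rough illustration of the first step mentioned in this abstract, the sketch below projects an unorganized point cloud onto a spherical range image. It is a generic projection, not the LiLO implementation: the function name, image size, and vertical field of view are assumptions chosen for the example.

```python
import math


def to_spherical_range_image(points, rows=16, cols=360,
                             fov_up_deg=15.0, fov_down_deg=-15.0):
    """Project (x, y, z) points into a rows x cols range image.

    Each cell stores the range of the nearest point that falls into it;
    0.0 marks an empty cell. Image size and field of view are assumed.
    """
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    image = [[0.0] * cols for _ in range(rows)]
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue  # skip points at the sensor origin
        azimuth = math.atan2(y, x)        # horizontal angle in [-pi, pi]
        elevation = math.asin(z / r)      # vertical angle
        if not (fov_down <= elevation <= fov_up):
            continue  # outside the assumed vertical field of view
        col = int((azimuth + math.pi) / (2.0 * math.pi) * cols) % cols
        row = min(rows - 1,
                  int((fov_up - elevation) / (fov_up - fov_down) * rows))
        if image[row][col] == 0.0 or r < image[row][col]:
            image[row][col] = r           # keep the closest return
    return image
```

Once the cloud is organized this way, neighbouring pixels correspond to neighbouring beams, so feature filters can run as cheap 2D image operations instead of nearest-neighbour searches in 3D.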

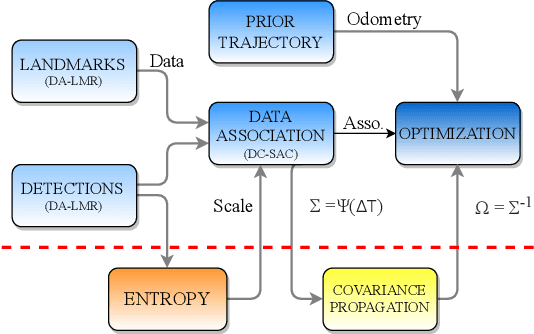

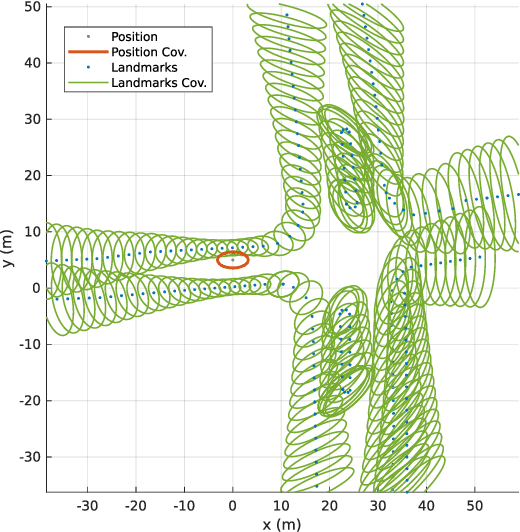

Dynamically Weighted Factor-Graph for Feature-based Geo-localization

Nov 13, 2023

Abstract: Feature-based geo-localization relies on associating features extracted from aerial imagery with those detected by the vehicle's sensors. This requires landmark types that are observable from both sources. The resulting lack of variety in feature types produces poor representations that lead to outliers and deviations, caused by ambiguities and missing detections respectively. To mitigate these drawbacks, we present a dynamically weighted factor graph model for estimating the vehicle's trajectory. The weight adjustment in this implementation depends on quantifying the information in the detections made with a LiDAR sensor. A prior (GNSS-based) error estimation is also included in the model. When the representation becomes ambiguous or sparse, the weights are dynamically adjusted to rely on the corrected prior trajectory, thereby mitigating outliers and deviations. We compare our method against state-of-the-art geo-localization methods in a challenging, ambiguous environment, where we also induce detection losses. We demonstrate mitigation of these drawbacks in cases where the other methods fail.

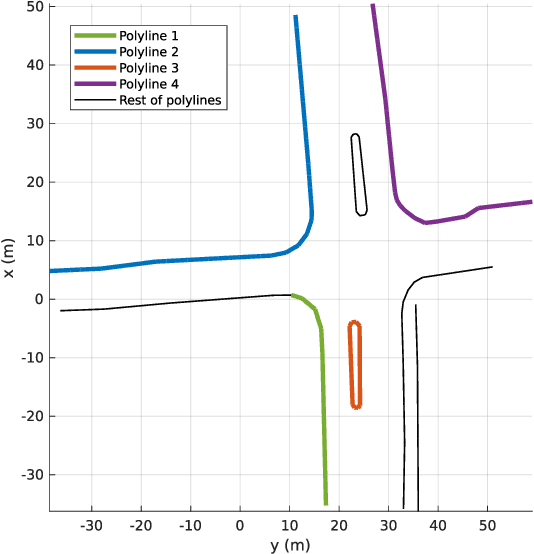

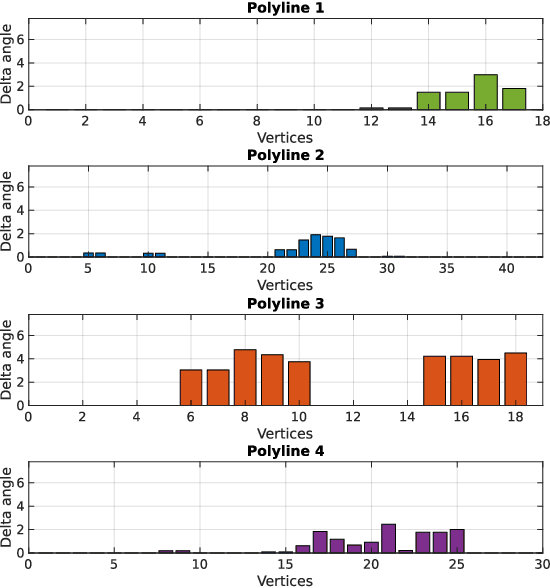

Robust Self-Tuning Data Association for Geo-Referencing Using Lane Markings

Jul 28, 2022

Abstract: Localization in aerial imagery-based maps offers many advantages, such as global consistency, geo-referenced maps, and the availability of publicly accessible data. However, the landmarks that can be observed from both aerial imagery and on-board sensors are limited. This leads to ambiguities or aliasing during data association. Building upon a highly informative representation (that allows efficient data association), this paper presents a complete pipeline for resolving these ambiguities. Its core is a robust self-tuning data association that adapts the search area depending on the entropy of the measurements. Additionally, to smooth the final result, we adjust the information matrix for the associated data as a function of the relative transform produced by the data association process. We evaluate our method on real data from urban and rural scenarios around the city of Karlsruhe in Germany. We compare state-of-the-art outlier mitigation methods with our self-tuning approach, demonstrating a considerable improvement, especially for outer-urban scenarios.

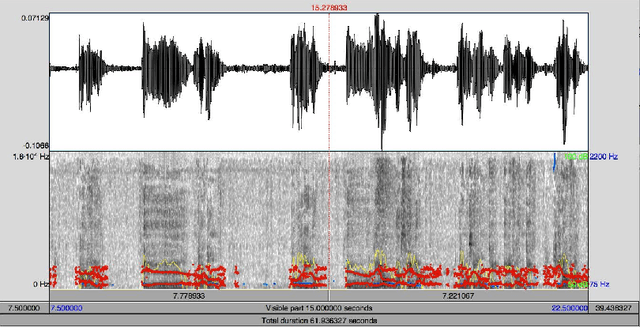

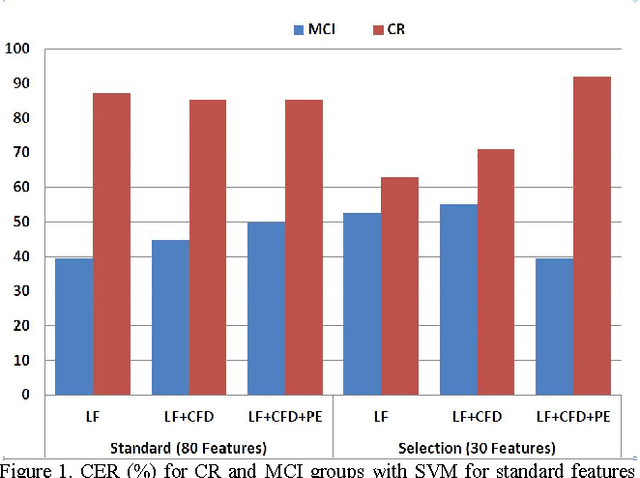

Automatic analysis of Categorical Verbal Fluency for Mild Cognitive Impairment detection: a non-linear language independent approach

Mar 18, 2022

Abstract: Alzheimer's disease (AD) is one of the main causes of dementia in the world, and patients develop severe disability and sometimes full dependence. In earlier stages, Mild Cognitive Impairment (MCI) produces cognitive loss that is not severe enough to interfere with daily life. This work, on the selection of biomarkers from speech for the detection of AD, is part of a wide-ranging cross study for the diagnosis of Alzheimer's disease. Specifically, this work uses a task for the detection of MCI. The task analyzes Categorical Verbal Fluency. The automatic classification is carried out by an SVM over classical linear features, the Castiglioni fractal dimension, and Permutation Entropy. Finally, the most relevant features are selected by an ANOVA test. The promising results are over 50% for MCI.

* 4 pages, published in 2015 4th International Work Conference on Bioinspired Intelligence (IWOBI), pp. 101-104
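Permutation Entropy, one of the non-linear features named in the abstract above, can be sketched as follows. This is the standard ordinal-pattern definition, not the authors' feature extraction code; the function name and the default `order` and `delay` values are assumptions for illustration.

```python
import math
from collections import Counter


def permutation_entropy(series, order=3, delay=1, normalize=True):
    """Shannon entropy (base 2) of the ordinal patterns in a 1D series."""
    n = len(series) - (order - 1) * delay
    if n <= 0:
        raise ValueError("series too short for the chosen order and delay")
    patterns = Counter()
    for i in range(n):
        window = [series[i + j * delay] for j in range(order)]
        # Ordinal pattern: the index order that sorts the window
        # (ties are resolved by position because sorted() is stable).
        pattern = tuple(sorted(range(order), key=window.__getitem__))
        patterns[pattern] += 1
    entropy = -sum((c / n) * math.log2(c / n) for c in patterns.values())
    if normalize:
        # Divide by the maximum entropy, log2(order!), to map into [0, 1].
        entropy /= math.log2(math.factorial(order))
    return entropy
```

For the series `[4, 7, 9, 10, 6, 11, 3]` with `order=2`, four of the six adjacent pairs are ascending and two are descending, which gives an entropy of about 0.918 bits. A strictly monotonic series contains a single pattern and therefore has zero entropy.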

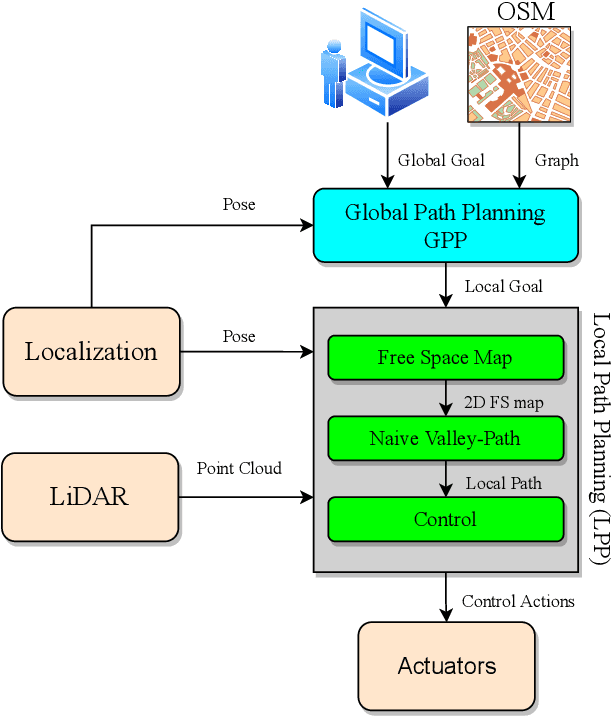

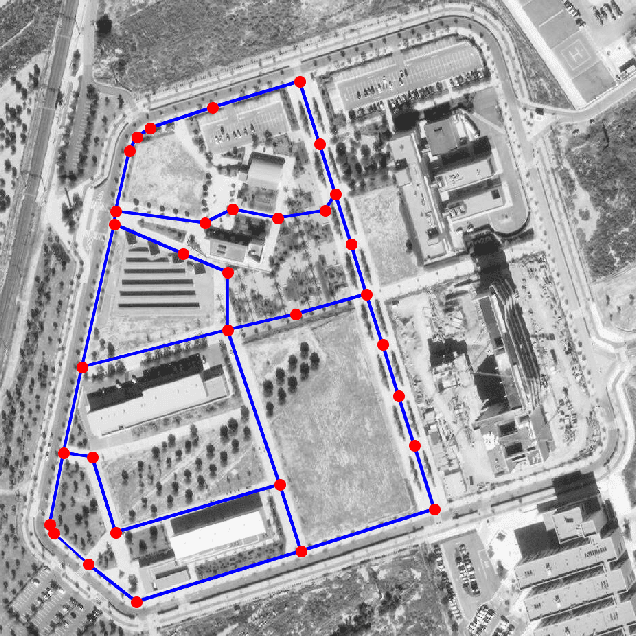

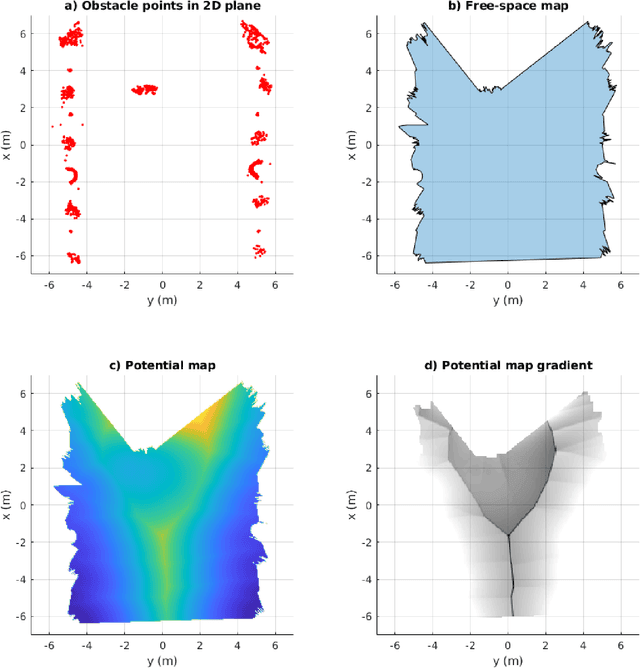

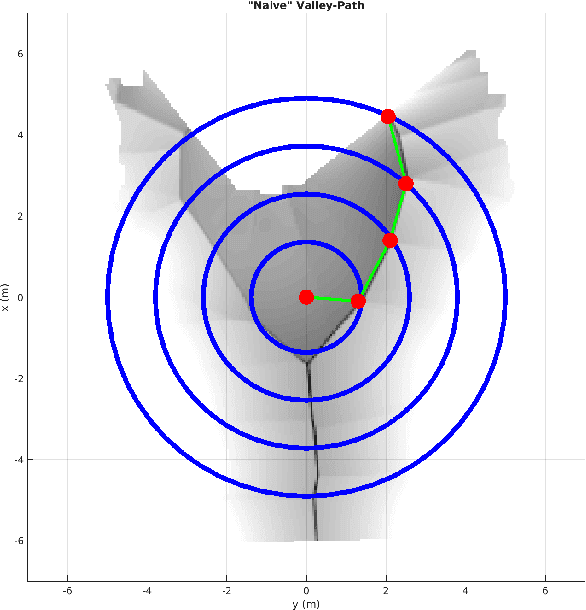

Path Planning With Naive-Valley-Path Obstacle Avoidance and Global Map-Free

Aug 20, 2021

Abstract: In this paper, we present a complete Path Planning approach divided into two main layers: Global Path Planning (GPP) and Local Path Planning (LPP). Unlike most other works, the GPP layer does not use complex and heavy maps. Instead, it uses road and intersection graphs obtained directly from internet applications like OpenStreetMap (OSM). This map-free GPP frees us from the common area-size restrictions. In the LPP layer, we use a novel Naive-Valley-Path (NVP) method to generate a local path that avoids obstacles in the road with an extremely low execution time. This approach exploits the concept of valley areas around local minima, i.e., the ones always away from obstacles. We demonstrate the robustness of the system on our research platform BLUE, driving autonomously across the University of Alicante Scientific Park for more than 20 km in a 12.33 ha area. Our vehicle avoids different static persistent and non-persistent obstacles in the road, and even dynamic ones such as vehicles and pedestrians. Code is available at https://github.com/AUROVA-LAB/lib_planning.

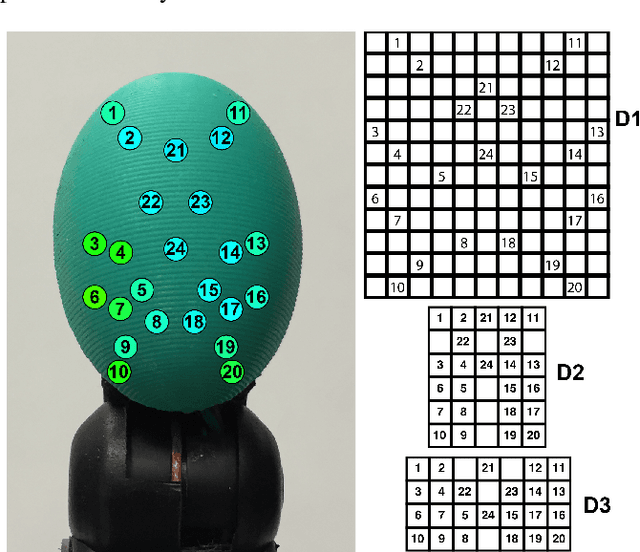

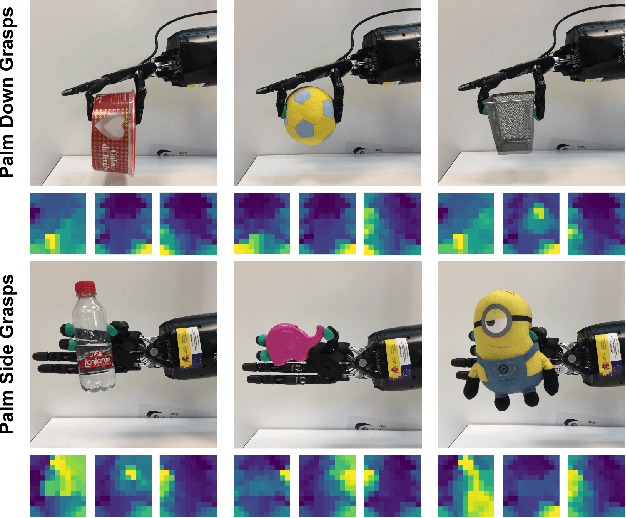

Non-Matrix Tactile Sensors: How Can Be Exploited Their Local Connectivity For Predicting Grasp Stability?

Sep 14, 2018

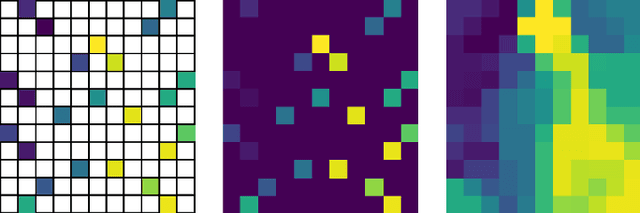

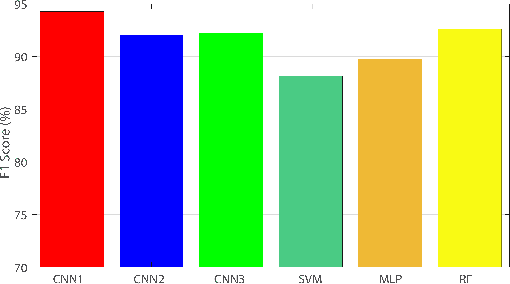

Abstract: Tactile sensors supply useful information during the interaction with an object that can be used for assessing the stability of a grasp. Most previous works on this topic processed tactile readings as signals by calculating hand-picked features. Some of them processed these readings as images, calculating characteristics on matrix-like sensors. In this work, we explore how readings from non-matrix sensors (sensors with taxels not arranged exactly in a matrix) can be processed as tactile images as well. In addition, we show that they can be used for predicting grasp stability by training a Convolutional Neural Network (CNN) with them. We captured over 2500 real three-fingered grasps on 41 everyday objects to train a CNN that exploited the local connectivity inherent in the non-matrix tactile sensors, achieving a 94.2% F1-score on predicting stability.
