Julie Stephany Berrio Perez

Spotting the Unexpected (STU): A 3D LiDAR Dataset for Anomaly Segmentation in Autonomous Driving

May 04, 2025

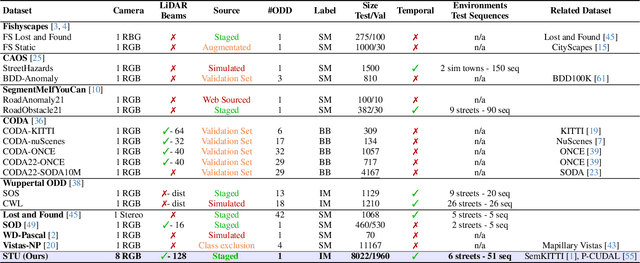

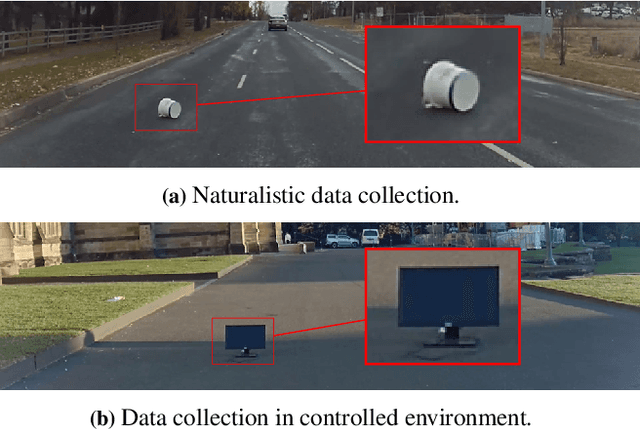

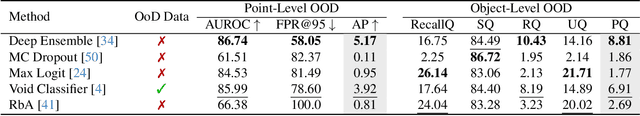

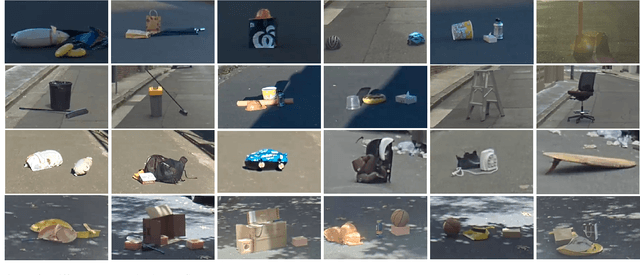

Abstract: To operate safely, autonomous vehicles (AVs) need to detect and handle unexpected objects or anomalies on the road. While significant research exists for anomaly detection and segmentation in 2D, research progress in 3D is underexplored. Existing datasets lack high-quality multimodal data that are typically found in AVs. This paper presents a novel dataset for anomaly segmentation in driving scenarios. To the best of our knowledge, it is the first publicly available dataset focused on road anomaly segmentation with dense 3D semantic labeling, incorporating both LiDAR and camera data, as well as sequential information to enable anomaly detection across various ranges. This capability is critical for the safe navigation of autonomous vehicles. We adapted and evaluated several baseline models for 3D segmentation, highlighting the challenges of 3D anomaly detection in driving environments. Our dataset and evaluation code will be openly available, facilitating the testing and performance comparison of different approaches.

Mixed Signals: A Diverse Point Cloud Dataset for Heterogeneous LiDAR V2X Collaboration

Feb 19, 2025

Abstract: Vehicle-to-everything (V2X) collaborative perception has emerged as a promising solution to address the limitations of single-vehicle perception systems. However, existing V2X datasets are limited in scope, diversity, and quality. To address these gaps, we present Mixed Signals, a comprehensive V2X dataset featuring 45.1k point clouds and 240.6k bounding boxes collected from three connected autonomous vehicles (CAVs) equipped with two different types of LiDAR sensors, plus a roadside unit with dual LiDARs. Our dataset provides precisely aligned point clouds and bounding box annotations across 10 classes, ensuring reliable data for perception training. We provide detailed statistical analysis on the quality of our dataset and extensively benchmark existing V2X methods on it. Mixed Signals V2X Dataset is one of the highest quality, large-scale datasets publicly available for V2X perception research. Details on the website https://mixedsignalsdataset.cs.cornell.edu/.

Robots in the Wild: Contextually-Adaptive Human-Robot Interactions in Urban Public Environments

Dec 10, 2024

Abstract: The increasing transition of human-robot interaction (HRI) context from controlled settings to dynamic, real-world public environments calls for enhanced adaptability in robotic systems. This can go beyond algorithmic navigation or traditional HRI strategies in structured settings, requiring the ability to navigate complex public urban systems containing multifaceted dynamics and various socio-technical needs. Therefore, our proposed workshop seeks to extend the boundaries of adaptive HRI research beyond predictable, semi-structured contexts and highlight opportunities for adaptable robot interactions in urban public environments. This half-day workshop aims to explore design opportunities and challenges in creating contextually-adaptive HRI within these spaces and establish a network of interested parties within the OzCHI research community. By fostering ongoing discussions, sharing of insights, and collaborations, we aim to catalyse future research that empowers robots to navigate the inherent uncertainties and complexities of real-world public interactions.

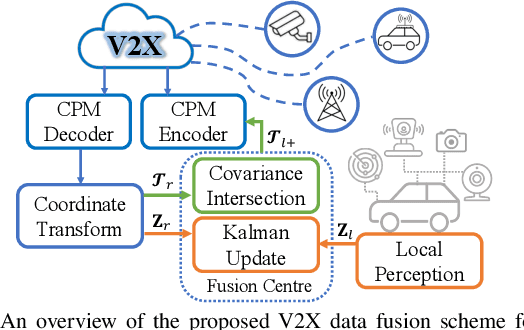

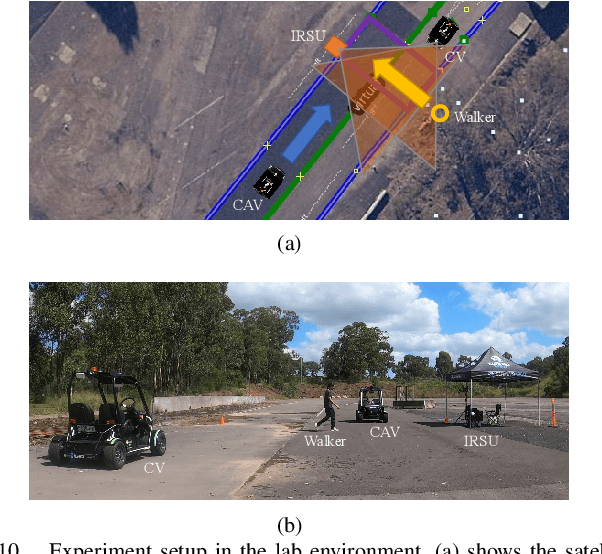

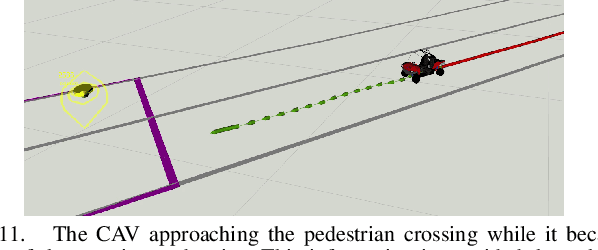

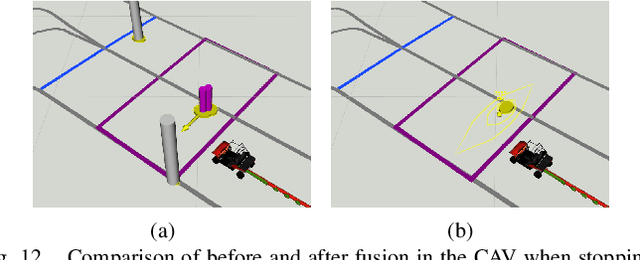

A Novel Probabilistic V2X Data Fusion Framework for Cooperative Perception

Mar 31, 2022

Abstract: The paper addresses the vehicle-to-X (V2X) data fusion for cooperative or collective perception (CP). This emerging and promising intelligent transportation systems (ITS) technology has enormous potential for improving efficiency and safety of road transportation. Recent advances in V2X communication primarily address the definition of V2X messages and data dissemination amongst ITS stations (ITS-Ss) in a traffic environment. Yet, a largely unsolved problem is how a connected vehicle (CV) can efficiently and consistently fuse its local perception information with the data received from other ITS-Ss. In this paper, we present a novel data fusion framework to fuse the local and V2X perception data for CP that considers the presence of cross-correlation. The proposed approach is validated through comprehensive results obtained from numerical simulation, CARLA simulation, and real-world experimentation that incorporates V2X-enabled intelligent platforms. The real-world experiment includes a CV, a connected and automated vehicle (CAV), and an intelligent roadside unit (IRSU) retrofitted with vision and lidar sensors. We also demonstrate how the fused CP information can improve the awareness of vulnerable road users (VRU) for CV/CAV, and how this information can be considered in path planning/decision making within the CAV to facilitate safe interactions.
