Jukka Heikkonen

Governance-as-a-Service: A Multi-Agent Framework for AI System Compliance and Policy Enforcement

Aug 27, 2025

Abstract:As AI systems evolve into distributed ecosystems with autonomous execution, asynchronous reasoning, and multi-agent coordination, the absence of scalable, decoupled governance poses a structural risk. Existing oversight mechanisms are reactive, brittle, and embedded within agent architectures, making them non-auditable and hard to generalize across heterogeneous deployments. We introduce Governance-as-a-Service (GaaS): a modular, policy-driven enforcement layer that regulates agent outputs at runtime without altering model internals or requiring agent cooperation. GaaS employs declarative rules and a Trust Factor mechanism that scores agents based on compliance and severity-weighted violations. It enables coercive, normative, and adaptive interventions, supporting graduated enforcement and dynamic trust modulation. To evaluate GaaS, we conduct three simulation regimes with open-source models (LLaMA3, Qwen3, DeepSeek-R1) across content generation and financial decision-making. In the baseline, agents act without governance; in the second, GaaS enforces policies; in the third, adversarial agents probe robustness. All actions are intercepted, evaluated, and logged for analysis. Results show that GaaS reliably blocks or redirects high-risk behaviors while preserving throughput. Trust scores track rule adherence, isolating and penalizing untrustworthy components in multi-agent systems. By positioning governance as a runtime service akin to compute or storage, GaaS establishes infrastructure-level alignment for interoperable agent ecosystems. It does not teach agents ethics; it enforces them.
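
The abstract describes a Trust Factor that scores agents from compliance and severity-weighted violations, but it does not give the formula. The following is only a minimal sketch of one way such a score could be kept: the class name `TrustLedger`, its fields, and the ratio used in `trust()` are all assumptions made for illustration, not the scoring rule from the paper.

```python
from dataclasses import dataclass


@dataclass
class TrustLedger:
    """Hypothetical per-agent record; not the GaaS implementation."""

    compliant: int = 0     # number of actions that passed every rule
    penalty: float = 0.0   # sum of severity weights over violations

    def record(self, violated: bool, severity: float = 0.0) -> None:
        # A violation adds its severity weight; a clean action adds one credit.
        if violated:
            self.penalty += severity
        else:
            self.compliant += 1

    def trust(self) -> float:
        # Assumed scoring rule: share of compliant credit in the total.
        # An agent with no history starts fully trusted.
        total = self.compliant + self.penalty
        return 1.0 if total == 0 else self.compliant / total
```

Under this assumed rule, a single high-severity violation lowers the score more than several low-severity ones, which is the qualitative behaviour the abstract attributes to severity weighting.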

Focus Your Attention: Towards Data-Intuitive Lightweight Vision Transformers

Jun 23, 2025

Abstract:The evolution of Vision Transformers has led to their widespread adaptation to different domains. Despite large-scale success, there remain significant challenges including their reliance on extensive computational and memory resources for pre-training on huge datasets as well as difficulties in task-specific transfer learning. These limitations coupled with energy inefficiencies mainly arise due to the computation-intensive self-attention mechanism. To address these issues, we propose a novel Super-Pixel Based Patch Pooling (SPPP) technique that generates context-aware, semantically rich, patch embeddings to effectively reduce the architectural complexity and improve efficiency. Additionally, we introduce the Light Latent Attention (LLA) module in our pipeline by integrating latent tokens into the attention mechanism allowing cross-attention operations to significantly reduce the time and space complexity of the attention module. By leveraging the data-intuitive patch embeddings coupled with dynamic positional encodings, our approach adaptively modulates the cross-attention process to focus on informative regions while maintaining the global semantic structure. This targeted attention improves training efficiency and accelerates convergence. Notably, the SPPP module is lightweight and can be easily integrated into existing transformer architectures. Extensive experiments demonstrate that our proposed architecture provides significant improvements in terms of computational efficiency while achieving comparable results with the state-of-the-art approaches, highlighting its potential for energy-efficient transformers suitable for edge deployment. (The code is available on our GitHub repository: https://github.com/zser092/Focused-Attention-ViT).

Sim-to-Real Transfer for Mobile Robots with Reinforcement Learning: from NVIDIA Isaac Sim to Gazebo and Real ROS 2 Robots

Jan 06, 2025

Abstract:Unprecedented agility and dexterous manipulation have been demonstrated with controllers based on deep reinforcement learning (RL), with a significant impact on legged and humanoid robots. Modern tooling and simulation platforms, such as NVIDIA Isaac Sim, have been enabling such advances. This article focuses on demonstrating the applications of Isaac in local planning and obstacle avoidance as one of the most fundamental ways in which a mobile robot interacts with its environment. Although there is extensive research on proprioception-based RL policies, the article highlights less standardized and reproducible approaches to exteroception. At the same time, the article aims to provide a base framework for end-to-end local navigation policies and to show how a custom robot can be trained in such a simulation environment. We benchmark end-to-end policies against the state-of-the-art Nav2, the navigation stack in the Robot Operating System (ROS). We also cover the sim-to-real transfer process by demonstrating zero-shot transferability of policies trained in the Isaac simulator to real-world robots. This is further evidenced by the tests with different simulated robots, which show the generalization of the learned policy. Finally, the benchmarks demonstrate comparable performance to Nav2, opening the door to quick deployment of state-of-the-art end-to-end local planners for custom robot platforms, but importantly furthering the possibilities by expanding the state and action spaces or task definitions for more complex missions. Overall, with this article we introduce the most important steps, and aspects to consider, in deploying RL policies for local path planning and obstacle avoidance with Isaac Sim training, Gazebo testing, and ROS 2 for real-time inference in real robots. The code is available at https://github.com/sahars93/RL-Navigation.

Super Level Sets and Exponential Decay: A Synergistic Approach to Stable Neural Network Training

Sep 25, 2024

Abstract:The objective of this paper is to enhance the optimization process for neural networks by developing a dynamic learning rate algorithm that effectively integrates exponential decay and advanced anti-overfitting strategies. Our primary contribution is the establishment of a theoretical framework where we demonstrate that the optimization landscape, under the influence of our algorithm, exhibits unique stability characteristics defined by Lyapunov stability principles. Specifically, we prove that the superlevel sets of the loss function, as influenced by our adaptive learning rate, are always connected, ensuring consistent training dynamics. Furthermore, we establish the "equiconnectedness" property of these superlevel sets, which maintains uniform stability across varying training conditions and epochs. This paper contributes to the theoretical understanding of dynamic learning rate mechanisms in neural networks and also paves the way for the development of more efficient and reliable neural optimization techniques. This study intends to formalize and validate the equiconnectedness of the superlevel sets of the loss function in the context of neural network training, opening new avenues for future research in adaptive machine learning algorithms. We leverage previous theoretical discoveries to propose training mechanisms that can effectively handle complex and high-dimensional data landscapes, particularly in applications requiring high precision and reliability.
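
The abstract refers to a dynamic learning rate built on exponential decay without stating the schedule. As a point of reference only, the sketch below shows the textbook exponential decay schedule, lr(t) = lr0 * exp(-k * t); the function name and parameter names are assumptions, and the paper's adaptive algorithm and anti-overfitting components are not reproduced here.

```python
import math


def exponential_decay_lr(initial_lr: float, decay_rate: float, epoch: int) -> float:
    """Textbook exponential decay; an illustration, not the paper's algorithm."""
    # The rate shrinks by a constant factor exp(-decay_rate) every epoch.
    return initial_lr * math.exp(-decay_rate * epoch)


# Example schedule over the first five epochs (values are illustrative).
schedule = [exponential_decay_lr(0.1, 0.5, epoch) for epoch in range(5)]
```

With a positive `decay_rate` the schedule is strictly decreasing and never reaches zero, so late epochs still make small updates.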

Cross-Vendor Reproducibility of Radiomics-based Machine Learning Models for Computer-aided Diagnosis

Jul 25, 2024

Abstract:Background: The reproducibility of machine-learning models in prostate cancer detection across different MRI vendors remains a significant challenge. Methods: This study investigates Support Vector Machines (SVM) and Random Forest (RF) models trained on radiomic features extracted from T2-weighted MRI images using Pyradiomics and MRCradiomics libraries. Feature selection was performed using the maximum relevance minimum redundancy (MRMR) technique. We aimed to enhance clinical decision support through multimodal learning and feature fusion. Results: Our SVM model, utilizing combined features from Pyradiomics and MRCradiomics, achieved an AUC of 0.74 on the Multi-Improd dataset (Siemens scanner) but decreased to 0.60 on the Philips test set. The RF model showed similar trends, with notable robustness for models using Pyradiomics features alone (AUC of 0.78 on Philips). Conclusions: These findings demonstrate the potential of multimodal feature integration to improve the robustness and generalizability of machine-learning models for clinical decision support in prostate cancer detection. This study marks a significant step towards developing reliable AI-driven diagnostic tools that maintain efficacy across various imaging platforms.

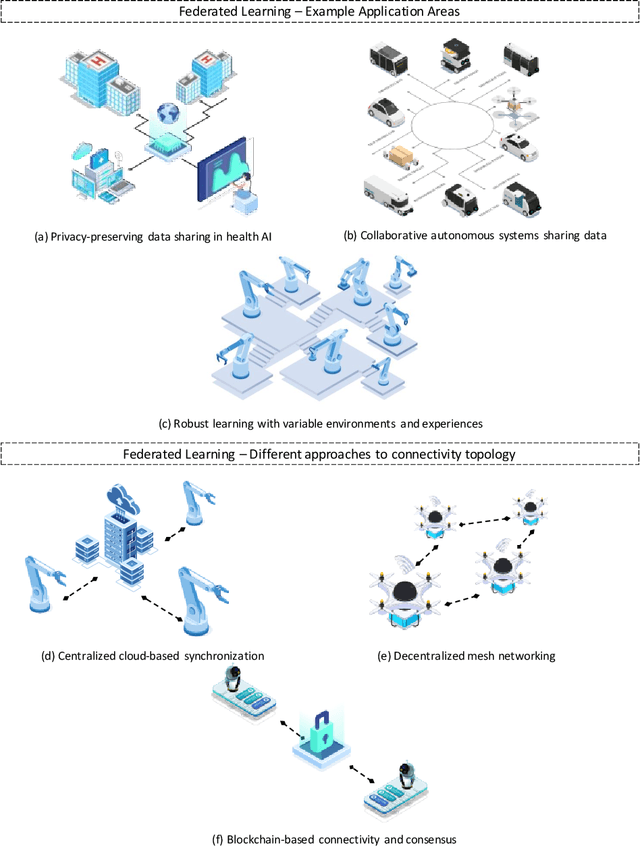

An Overview of Federated Learning at the Edge and Distributed Ledger Technologies for Robotic and Autonomous Systems

Apr 21, 2021

Abstract:Autonomous systems are becoming inherently ubiquitous with the advancements of computing and communication solutions enabling low-latency offloading and real-time collaboration of distributed devices. Decentralized technologies with blockchain and distributed ledger technologies (DLTs) are playing a key role. At the same time, advances in deep learning (DL) have significantly raised the degree of autonomy and level of intelligence of robotic and autonomous systems. While these technological revolutions were taking place, raising concerns in terms of data security and end-user privacy has become an inescapable research consideration. Federated learning (FL) is a promising solution to privacy-preserving DL at the edge, with an inherently distributed nature by learning on isolated data islands and communicating only model updates. However, FL by itself does not provide the levels of security and robustness required by today's standards in distributed autonomous systems. This survey covers applications of FL to autonomous robots, analyzes the role of DLT and FL for these systems, and introduces the key background concepts and considerations in current research.
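
The abstract notes that federated learning trains on isolated data and communicates only model updates. One common way a server can combine such updates is a sample-size-weighted average of client parameters (often called federated averaging). The sketch below assumes that aggregation rule and flat parameter lists; the function name and arguments are hypothetical, and the survey itself does not prescribe this particular scheme.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Weighted mean of client parameter vectors (assumed aggregation rule)."""
    total = sum(client_sizes)
    if total == 0:
        raise ValueError("at least one client must hold data")
    dim = len(client_weights[0])
    # Each client contributes in proportion to its local sample count;
    # raw training data never leaves the client.
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]
```

For example, `federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])` weights the second client three times as heavily as the first.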

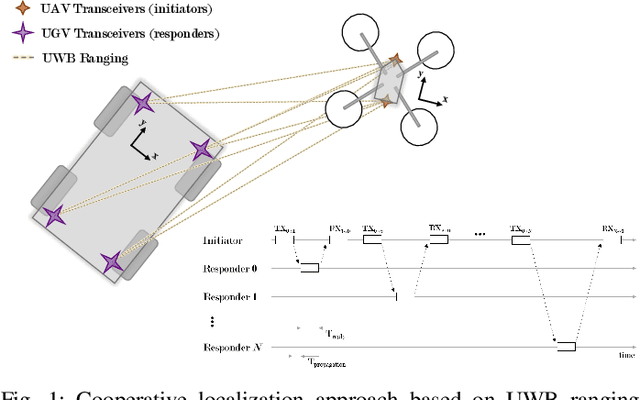

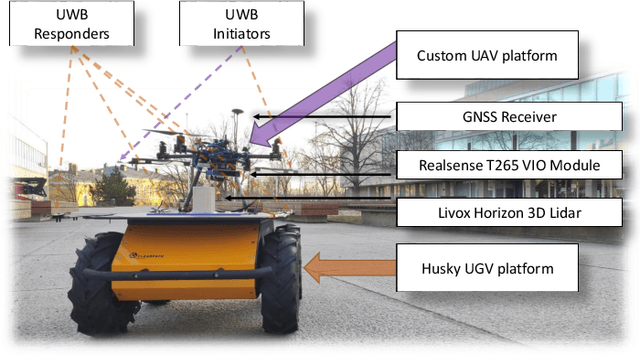

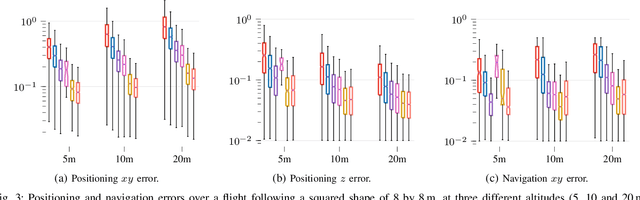

Cooperative UWB-Based Localization for Outdoors Positioning and Navigation of UAVs aided by Ground Robots

Apr 01, 2021

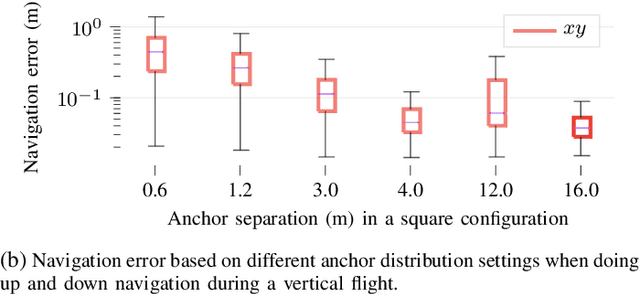

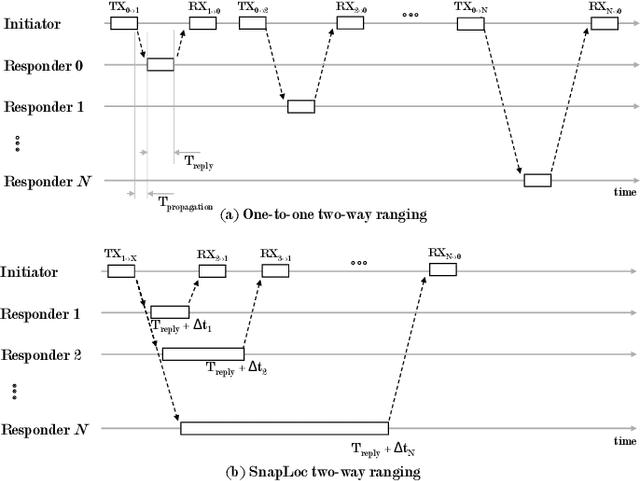

Abstract:Unmanned aerial vehicles (UAVs) are becoming largely ubiquitous with an increasing demand for aerial data. Accurate navigation and localization, required for precise data collection in many industrial applications, often rely on RTK GNSS. These systems, capable of centimeter-level accuracy, require a setup and calibration process and are relatively expensive. This paper addresses the problem of accurate positioning and navigation of UAVs through cooperative localization. Inexpensive ultra-wideband (UWB) transceivers installed on both the UAV and a support ground robot enable centimeter-level relative positioning. With fast deployment and wide setup flexibility, the proposed system is able to accommodate different environments and can also be utilized in GNSS-denied environments. Through extensive simulations and field tests, we evaluate the accuracy of the system and compare it to GNSS in urban environments where multipath transmission degrades accuracy. For completeness, we include visual-inertial odometry in the experiments and compare the performance with the UWB-based cooperative localization.

Applications of UWB Networks and Positioning to Autonomous Robots and Industrial Systems

Mar 28, 2021

Abstract:Ultra-wideband (UWB) technology is a mature technology that contested other wireless technologies in the advent of the IoT but did not achieve the same levels of widespread adoption. In recent years, however, with its potential as a wireless ranging and localization solution, it has regained momentum. Within the robotics field, UWB positioning systems are being increasingly adopted for localizing autonomous ground or aerial robots. In the Industrial IoT (IIoT) domain, its potential for ad-hoc networking and simultaneous positioning is also being explored. This survey overviews the state-of-the-art in UWB networking and localization for robotic and autonomous systems. We also cover novel techniques focusing on more scalable systems, collaborative approaches to localization, ad-hoc networking, and solutions involving machine learning to improve accuracy. This is, to the best of our knowledge, the first survey to put together the robotics and IIoT perspectives and to emphasize novel ranging and positioning modalities. We complete the survey with a discussion on current trends and open research problems.

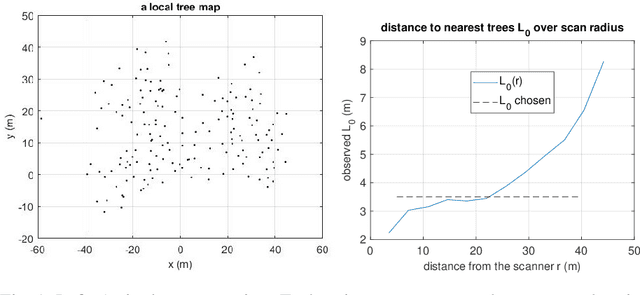

Long-Term Autonomy in Forest Environment using Self-Corrective SLAM

Jan 05, 2021

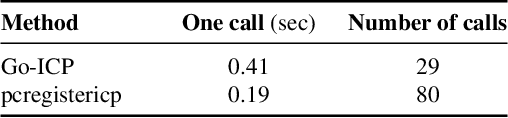

Abstract:Vehicles with prolonged autonomous missions have to maintain environment awareness by simultaneous localization and mapping (SLAM). Closed loop correction is substituted by interpolation in rigid body transformation space in order to systematically reduce the accumulated error over different scales. The computation is divided into an edge-computed lightweight SLAM and iterative corrections in the cloud environment. Tree locations in the forest environment are sent over a potentially bandwidth-limited communication link. Data from a real forest site is used in the verification of the proposed algorithm. The algorithm adds new iterative closest point (ICP) cases to the initial SLAM and measures the resulting map quality by the mean of the root mean squared error (RMSE) of individual tree clusters. Adding 4% more match cases yields a mean RMSE of 0.15 m on a large site with 180 m odometric distance.

Asynchronous Corner Tracking Algorithm based on Lifetime of Events for DAVIS Cameras

Oct 29, 2020

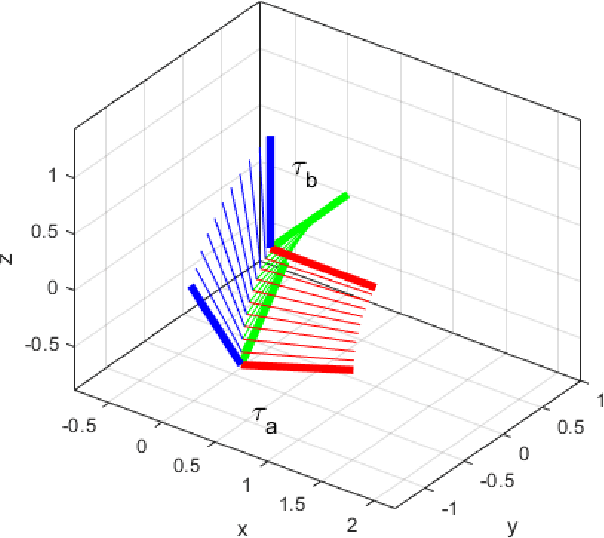

Abstract:Event cameras, such as the Dynamic and Active-pixel Vision Sensor (DAVIS), capture the intensity changes in the scene and generate a stream of events in an asynchronous fashion. The output rate of such cameras can reach up to 10 million events per second in high dynamic environments. DAVIS cameras use novel vision sensors that mimic human eyes. Their attractive attributes, such as high output rate, High Dynamic Range (HDR), and high pixel bandwidth, make them an ideal solution for applications that require high-frequency tracking. Moreover, applications that operate in challenging lighting scenarios can exploit the high dynamic range of event cameras, i.e., 140 dB compared to 60 dB of traditional cameras. In this paper, a novel asynchronous corner tracking method is proposed that uses both events and intensity images captured by a DAVIS camera. The Harris algorithm is used to extract features (frame-corners) from keyframes (intensity images). Afterward, a matching algorithm is used to extract event-corners from the stream of events. Events are solely used to perform asynchronous tracking until the next keyframe is captured. Neighboring events, within a window size of 5x5 pixels around the event-corner, are used to calculate the velocity and direction of extracted event-corners by fitting a 2D plane using a randomized Hough transform algorithm. Experimental evaluation showed that our approach is able to update the location of the extracted corners up to 100 times during the blind time of traditional cameras, i.e., between two consecutive intensity images.
