Tomi Westerlund

INDOOR-LiDAR: Bridging Simulation and Reality for Robot-Centric 360 degree Indoor LiDAR Perception -- A Robot-Centric Hybrid Dataset

Dec 13, 2025

Abstract:We present INDOOR-LIDAR, a comprehensive hybrid dataset of indoor 3D LiDAR point clouds designed to advance research in robot perception. Existing indoor LiDAR datasets often suffer from limited scale, inconsistent annotation formats, and human-induced variability during data collection. INDOOR-LIDAR addresses these limitations by integrating simulated environments with real-world scans acquired using autonomous ground robots, providing consistent coverage and realistic sensor behavior under controlled variations. Each sample consists of dense point cloud data enriched with intensity measurements and KITTI-style annotations. The annotation schema encompasses common indoor object categories within various scenes. The simulated subset enables flexible configuration of layouts, point densities, and occlusions, while the real-world subset captures authentic sensor noise, clutter, and domain-specific artifacts characteristic of real indoor settings. INDOOR-LIDAR supports a wide range of applications including 3D object detection, bird's-eye-view (BEV) perception, SLAM, semantic scene understanding, and domain adaptation between simulated and real indoor domains. By bridging the gap between synthetic and real-world data, INDOOR-LIDAR establishes a scalable, realistic, and reproducible benchmark for advancing robotic perception in complex indoor environments.
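
For readers unfamiliar with KITTI-style annotations, the following is a minimal sketch of parsing one label line. It assumes the standard 15-field KITTI object layout (type, truncation, occlusion, alpha, 2D box, dimensions, location, rotation_y). The dataset's actual class names, coordinate frame, and any extra fields are not given in the abstract, so `KittiLabel`, `parse_kitti_line`, and the `Chair` sample line are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class KittiLabel:
    cls: str            # object category, e.g. "Chair" (hypothetical class name)
    truncated: float    # 0.0 (fully visible) to 1.0 (fully truncated)
    occluded: int       # occlusion level as an integer code
    alpha: float        # observation angle in radians
    bbox: tuple         # 2D box (left, top, right, bottom); often zeros for lidar-only data
    dimensions: tuple   # (height, width, length) in metres
    location: tuple     # (x, y, z) of the box in the annotation frame (assumed)
    rotation_y: float   # yaw of the box in radians


def parse_kitti_line(line: str) -> KittiLabel:
    """Parse one whitespace-separated KITTI-style label line."""
    parts = line.split()
    if len(parts) < 15:
        raise ValueError(f"expected at least 15 fields, got {len(parts)}")
    v = [float(p) for p in parts[1:15]]
    return KittiLabel(
        cls=parts[0],
        truncated=v[0],
        occluded=int(v[1]),
        alpha=v[2],
        bbox=tuple(v[3:7]),
        dimensions=tuple(v[7:10]),
        location=tuple(v[10:13]),
        rotation_y=v[13],
    )


# Hypothetical sample line, not taken from the dataset.
sample = "Chair 0.00 0 -1.57 0 0 0 0 0.90 0.50 0.55 1.20 0.00 3.40 1.57"
label = parse_kitti_line(sample)
print(label.cls, label.dimensions, label.location)
```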

Seamless Outdoor-Indoor Pedestrian Positioning System with GNSS/UWB/IMU Fusion: A Comparison of EKF, FGO, and PF

Dec 11, 2025

Abstract:Accurate and continuous pedestrian positioning across outdoor-indoor environments remains challenging because GNSS, UWB, and inertial PDR are complementary yet individually fragile under signal blockage, multipath, and drift. This paper presents a unified GNSS/UWB/IMU fusion framework for seamless pedestrian localization and provides a controlled comparison of three probabilistic back-ends: an error-state extended Kalman filter, sliding-window factor graph optimization, and a particle filter. The system uses chest-mounted IMU-based PDR as the motion backbone and integrates absolute updates from GNSS outdoors and UWB indoors. To enhance transition robustness and mitigate urban GNSS degradation, we introduce a lightweight map-based feasibility constraint derived from OpenStreetMap building footprints, treating most building interiors as non-navigable while allowing motion inside a designated UWB-instrumented building. The framework is implemented in ROS 2 and runs in real time on a wearable platform, with visualization in Foxglove. We evaluate three scenarios: indoor (UWB+PDR), outdoor (GNSS+PDR), and seamless outdoor-indoor (GNSS+UWB+PDR). Results show that the ESKF provides the most consistent overall performance in our implementation.
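
As an illustration of the map-based feasibility idea, the sketch below shows one way such a constraint could enter a particle filter: particles that fall inside a non-navigable building footprint get zero weight. The abstract does not say how the constraint is applied in each back-end, so this is an assumption. The function names and the square footprint are hypothetical, and footprints are taken to be simple polygons in a local metric frame.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon (list of (x, y) vertices)."""
    inside = False
    n = len(polygon)
    for k in range(n):
        x1, y1 = polygon[k]
        x2, y2 = polygon[(k + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def apply_feasibility(particles, weights, blocked_footprints):
    """Zero the weight of particles inside blocked footprints, then renormalize."""
    new_weights = []
    for (x, y), w in zip(particles, weights):
        blocked = any(point_in_polygon(x, y, poly) for poly in blocked_footprints)
        new_weights.append(0.0 if blocked else w)
    total = sum(new_weights)
    if total == 0.0:
        # Every particle is infeasible: fall back to the unconstrained weights.
        return list(weights)
    return [w / total for w in new_weights]


# Hypothetical 10 m x 10 m building treated as non-navigable.
building = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
particles = [(5.0, 5.0), (15.0, 5.0)]
weights = apply_feasibility(particles, [0.5, 0.5], [building])
print(weights)
```

A designated UWB-instrumented building would simply be left out of `blocked_footprints`, so motion inside it stays feasible.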

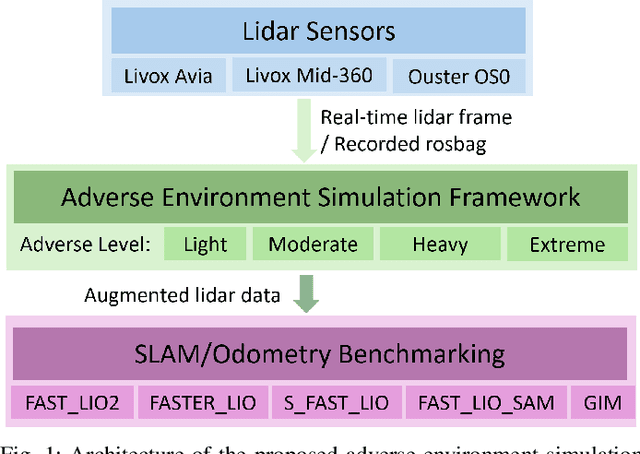

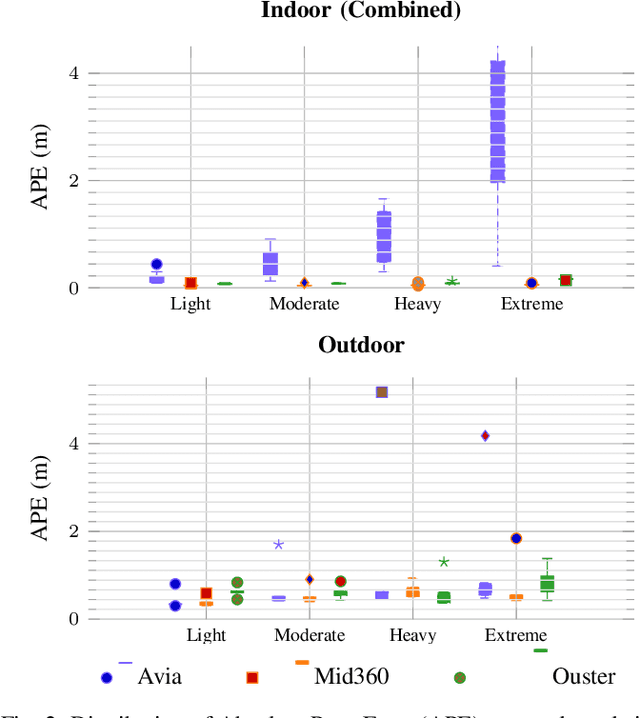

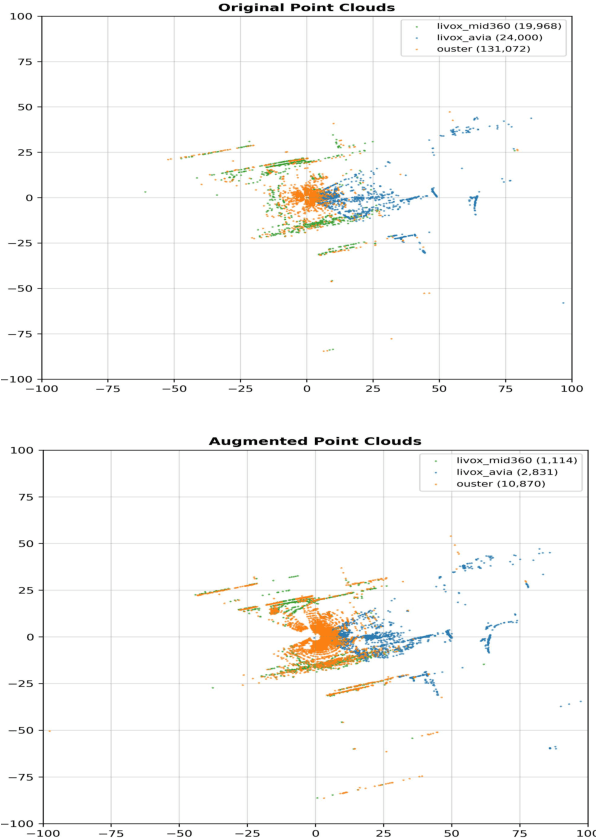

A Sensor-Aware Phenomenological Framework for Lidar Degradation Simulation and SLAM Robustness Evaluation

Dec 09, 2025

Abstract:Lidar-based SLAM systems are highly sensitive to adverse conditions such as occlusion, noise, and field-of-view (FoV) degradation, yet existing robustness evaluation methods either lack physical grounding or do not capture sensor-specific behavior. This paper presents a sensor-aware, phenomenological framework for simulating interpretable lidar degradations directly on real point clouds, enabling controlled and reproducible SLAM stress testing. Unlike image-derived corruption benchmarks (e.g., SemanticKITTI-C) or simulation-only approaches (e.g., lidarsim), the proposed system preserves per-point geometry, intensity, and temporal structure while applying structured dropout, FoV reduction, Gaussian noise, occlusion masking, sparsification, and motion distortion. The framework features autonomous topic and sensor detection, modular configuration with four severity tiers (light--extreme), and real-time performance (less than 20 ms per frame) compatible with ROS workflows. Experimental validation across three lidar architectures and five state-of-the-art SLAM systems reveals distinct robustness patterns shaped by sensor design and environmental context. The open-source implementation provides a practical foundation for benchmarking lidar-based SLAM under physically meaningful degradation scenarios.
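
To make the degradation types concrete, here is a minimal sketch of three of them (dropout, FoV reduction, and Gaussian range noise) applied to points stored as `(x, y, z, intensity)` tuples. It is not the paper's implementation: the dropout here is uniform rather than structured, and the function names and parameter values are hypothetical.

```python
import math
import random


def dropout(points, drop_prob, rng):
    """Remove each point independently with probability drop_prob (uniform, not structured)."""
    return [p for p in points if rng.random() >= drop_prob]


def reduce_fov(points, half_fov_deg):
    """Keep points whose azimuth lies within +/- half_fov_deg of the sensor's +x axis."""
    limit = math.radians(half_fov_deg)
    return [p for p in points if abs(math.atan2(p[1], p[0])) <= limit]


def add_range_noise(points, sigma, rng):
    """Perturb each point along its ray with zero-mean Gaussian noise; intensity is kept."""
    noisy = []
    for x, y, z, intensity in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            noisy.append((x, y, z, intensity))
            continue
        scale = (r + rng.gauss(0.0, sigma)) / r
        noisy.append((x * scale, y * scale, z * scale, intensity))
    return noisy


rng = random.Random(0)  # fixed seed so the stress test is reproducible
cloud = [(1.0, 0.0, 0.0, 10.0), (0.0, 1.0, 0.0, 20.0), (-1.0, 0.0, 0.0, 30.0)]
front_only = reduce_fov(cloud, half_fov_deg=45.0)
degraded = add_range_noise(dropout(front_only, 0.1, rng), sigma=0.02, rng=rng)
print(len(cloud), len(front_only), len(degraded))
```

Because every function returns points in the same tuple layout, the degradations compose in any order, which is one simple way to build severity tiers from a handful of parameters.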

Follow-Me in Micro-Mobility with End-to-End Imitation Learning

Nov 07, 2025

Abstract:Autonomous micro-mobility platforms face challenges from the perspective of the typical deployment environment: large indoor spaces or urban areas that are potentially crowded and highly dynamic. While social navigation algorithms have progressed significantly, optimizing user comfort and overall user experience over other typical metrics in robotics (e.g., time or distance traveled) is understudied. Yet comfort and user experience are critical in commercial applications. In this paper, we show how imitation learning delivers smoother and overall better controllers than previously used manually tuned controllers. We demonstrate how DAAV's autonomous wheelchair achieves state-of-the-art comfort in follow-me mode, in which it follows a human operator assisting persons with reduced mobility (PRM). This paper analyzes different neural network architectures for end-to-end control and demonstrates their usability in real-world production-level deployments.

Degradation-Aware Cooperative Multi-Modal GNSS-Denied Localization Leveraging LiDAR-Based Robot Detections

Oct 23, 2025

Abstract:Accurate long-term localization using onboard sensors is crucial for robots operating in Global Navigation Satellite System (GNSS)-denied environments. While complementary sensors mitigate individual degradations, carrying all the available sensor types on a single robot significantly increases the size, weight, and power demands. Distributing sensors across multiple robots enhances the deployability but introduces challenges in fusing asynchronous, multi-modal data from independently moving platforms. We propose a novel adaptive multi-modal multi-robot cooperative localization approach using a factor-graph formulation to fuse asynchronous Visual-Inertial Odometry (VIO), LiDAR-Inertial Odometry (LIO), and 3D inter-robot detections from distinct robots in a loosely-coupled fashion. The approach adapts to changing conditions, leveraging reliable data to assist robots affected by sensory degradations. A novel interpolation-based factor enables fusion of the unsynchronized measurements. LIO degradations are evaluated based on the approximate scan-matching Hessian. A novel approach of weighting odometry data proportionally to the Wasserstein distance between the consecutive VIO outputs is proposed. A theoretical analysis is provided, investigating the cooperative localization problem under various conditions, mainly in the presence of sensory degradations. The proposed method has been extensively evaluated on real-world data gathered with heterogeneous teams of an Unmanned Ground Vehicle (UGV) and Unmanned Aerial Vehicles (UAVs), showing that the approach provides significant improvements in localization accuracy in the presence of various sensory degradations.

IndoorBEV: Joint Detection and Footprint Completion of Objects via Mask-based Prediction in Indoor Scenarios for Bird's-Eye View Perception

Jul 23, 2025

Abstract:Detecting diverse objects within complex indoor 3D point clouds presents significant challenges for robotic perception, particularly with varied object shapes, clutter, and the co-existence of static and dynamic elements where traditional bounding box methods falter. To address these limitations, we propose IndoorBEV, a novel mask-based Bird's-Eye View (BEV) method for indoor mobile robots. In a BEV method, a 3D scene is projected into a 2D BEV grid, which naturally handles occlusions and provides a consistent top-down view that helps distinguish static obstacles from dynamic agents. The resulting 2D BEV output is directly usable by downstream robotic tasks like navigation, motion prediction, and planning. Our architecture utilizes an axis compact encoder and a window-based backbone to extract rich spatial features from this BEV map. A query-based decoder head then employs learned object queries to concurrently predict object classes and instance masks in the BEV space. This mask-centric formulation effectively captures the footprint of both static and dynamic objects regardless of their shape, offering a robust alternative to bounding box regression. We demonstrate the effectiveness of IndoorBEV on a custom indoor dataset featuring diverse object classes including static objects and dynamic elements like robots and miscellaneous items, showcasing its potential for robust indoor scene understanding.
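
The projection of a 3D scene into a 2D BEV grid can be sketched as a simple point-count rasterization. This is only an illustration of the general idea, not the IndoorBEV encoder: the function name, the grid extents, and the choice of a per-cell point count as the feature are assumptions.

```python
def points_to_bev(points, x_range, y_range, cell_size):
    """Rasterize (x, y, z) points into a 2D grid of per-cell point counts.

    Rows index y and columns index x; the height z is discarded by the projection.
    """
    cols = round((x_range[1] - x_range[0]) / cell_size)
    rows = round((y_range[1] - y_range[0]) / cell_size)
    grid = [[0] * cols for _ in range(rows)]
    for x, y, _z in points:
        # Skip points that fall outside the region covered by the grid.
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        col = min(int((x - x_range[0]) / cell_size), cols - 1)
        row = min(int((y - y_range[0]) / cell_size), rows - 1)
        grid[row][col] += 1
    return grid


# Hypothetical points in metres; the last one lies outside the 2 m x 2 m grid.
scene = [(0.2, 0.2, 0.0), (0.4, 0.3, 1.0), (1.5, 0.5, 0.2), (5.0, 5.0, 0.0)]
bev = points_to_bev(scene, x_range=(0.0, 2.0), y_range=(0.0, 2.0), cell_size=1.0)
for row in bev:
    print(row)
```

A mask-based method would then predict, for each object, which of these cells belong to its footprint, instead of regressing a box around them.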

Improved Brain Tumor Detection in MRI: Fuzzy Sigmoid Convolution in Deep Learning

May 08, 2025

Abstract:Early detection and accurate diagnosis are essential to improving patient outcomes. The use of convolutional neural networks (CNNs) for tumor detection has shown promise, but existing models often suffer from overparameterization, which limits their performance gains. In this study, fuzzy sigmoid convolution (FSC) is introduced along with two additional modules: top-of-the-funnel and middle-of-the-funnel. The proposed methodology significantly reduces the number of trainable parameters without compromising classification accuracy. A novel convolutional operator is central to this approach, effectively dilating the receptive field while preserving input data integrity. This enables efficient feature map reduction and enhances the model's tumor detection capability. In the FSC-based model, fuzzy sigmoid activation functions are incorporated within convolutional layers to improve feature extraction and classification. The inclusion of fuzzy logic into the architecture improves its adaptability and robustness. Extensive experiments on three benchmark datasets demonstrate the superior performance and efficiency of the proposed model. The FSC-based architecture achieved classification accuracies of 99.17%, 99.75%, and 99.89% on three different datasets. The model employs 100 times fewer parameters than large-scale transfer learning architectures, highlighting its computational efficiency and suitability for detecting brain tumors early. This research offers lightweight, high-performance deep-learning models for medical imaging applications.

Enhancing Lidar Point Cloud Sampling via Colorization and Super-Resolution of Lidar Imagery

May 04, 2025

Abstract:Recent advancements in lidar technology have led to improved point cloud resolution as well as the generation of 360-degree, low-resolution images by encoding depth, reflectivity, or near-infrared light within each pixel. These images enable the application of deep learning (DL) approaches, originally developed for RGB images from cameras, to lidar-only systems, eliminating additional effort such as lidar-camera calibration. Compared with conventional RGB images, lidar imagery demonstrates greater robustness in adverse environmental conditions, such as low light and foggy weather. Moreover, the imaging capability addresses the challenges in environments where the geometric information in point clouds may be degraded, such as long corridors, or where dense point clouds may be misleading, potentially leading to drift errors. Therefore, this paper proposes a novel framework that leverages DL-based colorization and super-resolution techniques on lidar imagery to extract reliable samples from lidar point clouds for odometry estimation. The enhanced lidar images, enriched with additional information, facilitate improved keypoint detection, which is subsequently employed for more effective point cloud downsampling. The proposed method enhances point cloud registration accuracy and mitigates mismatches arising from insufficient geometric information or misleading extra points. Experimental results indicate that our approach surpasses previous methods, achieving lower translation and rotation errors while using fewer points.

Sim-to-Real Transfer for Mobile Robots with Reinforcement Learning: from NVIDIA Isaac Sim to Gazebo and Real ROS 2 Robots

Jan 06, 2025

Abstract:Unprecedented agility and dexterous manipulation have been demonstrated with controllers based on deep reinforcement learning (RL), with a significant impact on legged and humanoid robots. Modern tooling and simulation platforms, such as NVIDIA Isaac Sim, have been enabling such advances. This article focuses on demonstrating the applications of Isaac in local planning and obstacle avoidance as one of the most fundamental ways in which a mobile robot interacts with its environment. Although there is extensive research on proprioception-based RL policies, the article highlights less standardized and reproducible approaches to exteroception. At the same time, the article aims to provide a base framework for end-to-end local navigation policies and to show how a custom robot can be trained in such a simulation environment. We benchmark end-to-end policies against Nav2, the state-of-the-art navigation stack in the Robot Operating System (ROS). We also cover the sim-to-real transfer process by demonstrating zero-shot transferability of policies trained in the Isaac simulator to real-world robots. This is further evidenced by the tests with different simulated robots, which show the generalization of the learned policy. Finally, the benchmarks demonstrate comparable performance to Nav2, opening the door to quick deployment of state-of-the-art end-to-end local planners for custom robot platforms, but importantly furthering the possibilities by expanding the state and action spaces or task definitions for more complex missions. Overall, with this article we introduce the most important steps, and aspects to consider, in deploying RL policies for local path planning and obstacle avoidance with Isaac Sim training, Gazebo testing, and ROS 2 for real-time inference in real robots. The code is available at https://github.com/sahars93/RL-Navigation.

Dual-Criterion Model Aggregation in Federated Learning: Balancing Data Quantity and Quality

Nov 12, 2024

Abstract:Federated learning (FL) has become one of the key methods for privacy-preserving collaborative learning, as it enables the transfer of models without requiring local data exchange. Within the FL framework, an aggregation algorithm is recognized as one of the most crucial components for ensuring the efficacy and security of the system. Existing average aggregation algorithms typically assume that all client-trained data holds equal value or that weights are based solely on the quantity of data contributed by each client. In contrast, alternative approaches involve training the model locally after aggregation to enhance adaptability. However, these approaches fundamentally ignore the inherent heterogeneity between different clients' data and the complexity of variations in data at the aggregation stage, which may lead to a suboptimal global model. To address these issues, this study proposes a novel dual-criterion weighted aggregation algorithm involving the quantity and quality of data from the client node. Specifically, we quantify the data used for training and perform multiple rounds of local model inference accuracy evaluation on a specialized dataset to assess the data quality of each client. These two factors are utilized as weights within the aggregation process, applied through a dynamically weighted summation of these two factors. This approach allows the algorithm to adaptively adjust the weights, ensuring that every client can contribute to the global model, regardless of their data's size or initial quality. Our experiments show that the proposed algorithm outperforms several existing state-of-the-art aggregation approaches on both a general-purpose open-source dataset, CIFAR-10, and a dataset specific to visual obstacle avoidance.
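
The dual-criterion idea can be sketched as a convex combination of a quantity share and a quality share per client. This is a simplified illustration rather than the paper's algorithm: the abstract describes a dynamically weighted summation, whereas the mixing factor `alpha` here is fixed, and the function names and sample numbers are hypothetical.

```python
def dual_criterion_weights(sample_counts, quality_scores, alpha=0.5):
    """Blend each client's share of the data with its share of the total quality score.

    alpha = 1.0 reduces to quantity-only weighting (as in plain FedAvg);
    alpha = 0.0 weights clients by quality only.
    """
    total_n = sum(sample_counts)
    total_q = sum(quality_scores)
    return [
        alpha * n / total_n + (1.0 - alpha) * q / total_q
        for n, q in zip(sample_counts, quality_scores)
    ]


def aggregate(client_params, weights):
    """Weighted sum of flat parameter vectors, one vector per client."""
    dim = len(client_params[0])
    return [
        sum(w * params[j] for w, params in zip(weights, client_params))
        for j in range(dim)
    ]


# Hypothetical round: client 0 has less data but scores higher on the evaluation set.
weights = dual_criterion_weights([100, 300], [0.9, 0.6], alpha=0.5)
global_params = aggregate([[1.0, 0.0], [0.0, 1.0]], weights)
print(weights, global_params)
```

Since both shares sum to one, the blended weights also sum to one for any `alpha` between 0 and 1, so the aggregate stays a weighted average of the client models.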
