Haizhou Zhang

Seamless Outdoor-Indoor Pedestrian Positioning System with GNSS/UWB/IMU Fusion: A Comparison of EKF, FGO, and PF

Dec 11, 2025

Abstract:Accurate and continuous pedestrian positioning across outdoor-indoor environments remains challenging because GNSS, UWB, and inertial pedestrian dead reckoning (PDR) are complementary yet individually fragile under signal blockage, multipath, and drift. This paper presents a unified GNSS/UWB/IMU fusion framework for seamless pedestrian localization and provides a controlled comparison of three probabilistic back-ends: an error-state extended Kalman filter (ESKF), sliding-window factor graph optimization (FGO), and a particle filter (PF). The system uses chest-mounted IMU-based PDR as the motion backbone and integrates absolute updates from GNSS outdoors and UWB indoors. To enhance transition robustness and mitigate urban GNSS degradation, we introduce a lightweight map-based feasibility constraint derived from OpenStreetMap building footprints, treating most building interiors as non-navigable while allowing motion inside a designated UWB-instrumented building. The framework is implemented in ROS 2 and runs in real time on a wearable platform, with visualization in Foxglove. We evaluate three scenarios: indoor (UWB+PDR), outdoor (GNSS+PDR), and seamless outdoor-indoor (GNSS+UWB+PDR). Results show that the ESKF provides the most consistent overall performance in our implementation.
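The abstract does not say how the map-based feasibility constraint enters each back-end. As one possible illustration, the sketch below applies it to particle-filter weights: particles that fall inside a non-navigable building footprint get zero weight, while the designated UWB-instrumented building stays allowed. The function names, the footprint dictionary layout, and the fallback when every particle is blocked are assumptions made for this example, not details from the paper.

```python
from typing import Dict, List, Sequence, Set, Tuple

Point = Tuple[float, float]


def point_in_polygon(x: float, y: float, polygon: Sequence[Point]) -> bool:
    """Ray-casting test: count edge crossings of a ray going in +x from (x, y)."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def apply_feasibility(
    particles: Sequence[Point],
    weights: Sequence[float],
    footprints: Dict[str, Sequence[Point]],
    allowed_ids: Set[str],
) -> List[float]:
    """Zero the weight of particles inside non-navigable footprints, then renormalize.

    `footprints` maps a (hypothetical) building id to its polygon in a local
    metric frame; `allowed_ids` holds the ids of buildings that may be entered.
    """
    masked = []
    for (x, y), w in zip(particles, weights):
        blocked = any(
            bid not in allowed_ids and point_in_polygon(x, y, poly)
            for bid, poly in footprints.items()
        )
        masked.append(0.0 if blocked else w)
    total = sum(masked)
    if total == 0.0:
        # Assumption: if every particle is infeasible, keep the original weights.
        return list(weights)
    return [w / total for w in masked]
```

In this sketch a hard zero weight is used for simplicity; a softer penalty would be an equally plausible design choice when footprints are imprecise.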

fastbmRAG: A Fast Graph-Based RAG Framework for Efficient Processing of Large-Scale Biomedical Literature

Nov 13, 2025

Abstract:Large language models (LLMs) are rapidly transforming various domains, including biomedicine and healthcare, and demonstrate remarkable potential from scientific research to new drug discovery. Graph-based retrieval-augmented generation (RAG) systems, as a useful application of LLMs, can improve contextual reasoning through structured entity and relationship identification from long-context knowledge, e.g., biomedical literature. Despite their many advantages over naive RAG systems, most graph-based RAG systems are computationally intensive, which limits their application to large-scale datasets. To address this issue, we introduce fastbmRAG, a fast graph-based RAG framework optimized for biomedical literature. Exploiting the well-organized structure of biomedical papers, fastbmRAG divides knowledge graph construction into two stages: first, drafting graphs from abstracts; and second, refining them using the main texts, guided by vector-based entity linking, which minimizes redundancy and computational load. Our evaluations demonstrate that fastbmRAG is over 10x faster than existing graph-RAG tools and achieves superior coverage and accuracy with respect to the input knowledge. FastbmRAG provides a fast solution for quickly understanding, summarizing, and answering questions about biomedical literature at large scale. FastbmRAG is publicly available at https://github.com/menggf/fastbmRAG.
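To make the idea of vector-based entity linking concrete, here is a minimal sketch assuming each entity in the draft graph has an embedding vector and a mention from the main text is linked to the most similar existing entity when the cosine similarity clears a threshold. The function names, the toy two-dimensional vectors, and the 0.8 threshold are illustrative assumptions; this is not the fastbmRAG API.

```python
import math
from typing import Dict, Optional, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def link_entity(
    mention_vec: Sequence[float],
    graph_vecs: Dict[str, Sequence[float]],
    threshold: float = 0.8,
) -> Optional[str]:
    """Return the best-matching draft-graph entity, or None if nothing is close enough."""
    best_name, best_sim = None, threshold
    for name, vec in graph_vecs.items():
        sim = cosine(mention_vec, vec)
        if sim >= best_sim:
            best_name, best_sim = name, sim
    return best_name


# Toy draft graph built from abstracts (embeddings are made up for illustration).
draft_graph = {"TP53": [1.0, 0.0], "BRCA1": [0.0, 1.0]}
linked = link_entity([0.9, 0.1], draft_graph)    # close to TP53
unlinked = link_entity([1.0, 1.0], draft_graph)  # ambiguous, below threshold
```

A mention that returns `None` would presumably become a new node, whereas a linked mention reuses the existing one, which is how linking can reduce redundancy in the refined graph.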

Dual-Criterion Model Aggregation in Federated Learning: Balancing Data Quantity and Quality

Nov 12, 2024

Abstract:Federated learning (FL) has become one of the key methods for privacy-preserving collaborative learning, as it enables the transfer of models without requiring local data exchange. Within the FL framework, an aggregation algorithm is recognized as one of the most crucial components for ensuring the efficacy and security of the system. Existing average aggregation algorithms typically assume that all client-trained data holds equal value or that weights are based solely on the quantity of data contributed by each client. In contrast, alternative approaches involve training the model locally after aggregation to enhance adaptability. However, these approaches fundamentally ignore the inherent heterogeneity between different clients' data and the complexity of variations in data at the aggregation stage, which may lead to a suboptimal global model. To address these issues, this study proposes a novel dual-criterion weighted aggregation algorithm involving the quantity and quality of data from the client node. Specifically, we quantify the data used for training and perform multiple rounds of local model inference accuracy evaluation on a specialized dataset to assess the data quality of each client. These two factors are utilized as weights within the aggregation process, applied through a dynamically weighted summation of these two factors. This approach allows the algorithm to adaptively adjust the weights, ensuring that every client can contribute to the global model, regardless of their data's size or initial quality. Our experiments show that the proposed algorithm outperforms several existing state-of-the-art aggregation approaches on both a general-purpose open-source dataset, CIFAR-10, and a dataset specific to visual obstacle avoidance.
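The abstract describes the aggregation weights as a dynamically weighted summation of a quantity factor and a quality factor, without giving the formula. The sketch below shows one plausible form under stated assumptions: each factor is normalized to sum to one across clients, and a mixing coefficient `alpha` (a hypothetical parameter, held fixed here rather than adapted over rounds) blends them. Model parameters are flattened to plain lists of floats for brevity.

```python
from typing import List, Sequence


def dual_criterion_weights(
    sample_counts: Sequence[float],
    quality_scores: Sequence[float],
    alpha: float = 0.5,
) -> List[float]:
    """Blend each client's share of data quantity and share of data quality.

    `quality_scores` stands in for the evaluation accuracy of each client's
    local model; `alpha` is an assumed mixing coefficient in [0, 1].
    """
    if not sample_counts or len(sample_counts) != len(quality_scores):
        raise ValueError("need one count and one quality score per client")
    total_n = sum(sample_counts)
    total_q = sum(quality_scores)
    if total_n <= 0 or total_q <= 0:
        raise ValueError("counts and quality scores must have positive sums")
    return [
        alpha * n / total_n + (1.0 - alpha) * q / total_q
        for n, q in zip(sample_counts, quality_scores)
    ]


def aggregate(client_params: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    """Weighted sum of flattened client parameter vectors."""
    dim = len(client_params[0])
    return [
        sum(w * params[j] for w, params in zip(weights, client_params))
        for j in range(dim)
    ]


# Two clients: the second has more data, the first has higher evaluation accuracy.
weights = dual_criterion_weights([100, 300], [0.9, 0.6], alpha=0.5)
global_params = aggregate([[1.0, 0.0], [0.0, 1.0]], weights)
```

Because both shares sum to one, the blended weights also sum to one for any `alpha` in [0, 1], so a client with little data but high quality still contributes to the global model.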

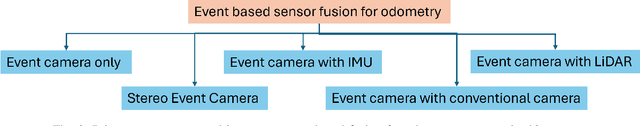

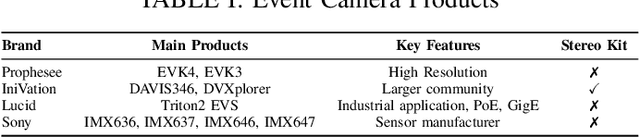

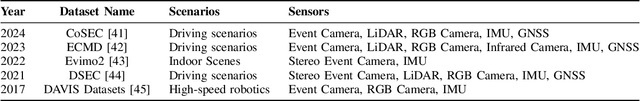

Event-based Sensor Fusion and Application on Odometry: A Survey

Oct 20, 2024

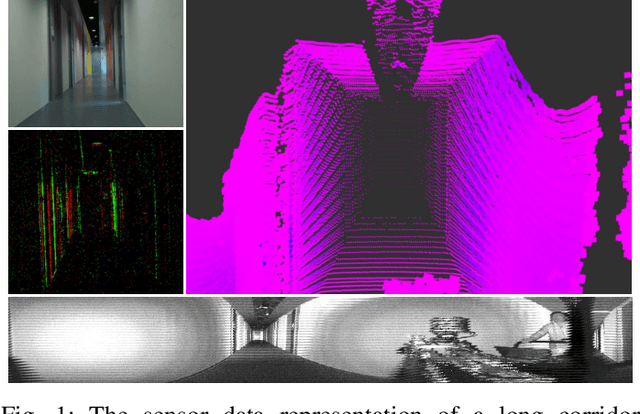

Abstract:Event cameras, inspired by biological vision, are asynchronous sensors that detect changes in brightness, offering notable advantages in environments characterized by high-speed motion, low lighting, or wide dynamic range. These distinctive properties render event cameras particularly effective for sensor fusion in robotics and computer vision, especially in enhancing traditional visual or LiDAR-inertial odometry. Conventional frame-based cameras suffer from limitations such as motion blur and drift, which can be mitigated by the continuous, low-latency data provided by event cameras. Similarly, LiDAR-based odometry encounters challenges related to the loss of geometric information in environments such as corridors. To address these limitations, and unlike existing event-camera surveys, this paper focuses specifically on event-based sensor fusion for odometry: it presents a comprehensive overview of recent advancements and investigates fusion strategies that incorporate frame-based cameras, inertial measurement units (IMUs), and LiDAR. The survey critically assesses the contributions of these fusion methods to improving odometry performance in complex environments, highlights key applications, and discusses their strengths, limitations, and unresolved challenges. Additionally, it offers insights into potential future research directions to advance event-based sensor fusion for next-generation odometry applications.

LiDAR-Generated Images Derived Keypoints Assisted Point Cloud Registration Scheme in Odometry Estimation

Sep 19, 2023

Abstract:Keypoint detection and description play a pivotal role in various robotics and autonomous applications, including visual odometry (VO), visual navigation, and simultaneous localization and mapping (SLAM). While a myriad of keypoint detectors and descriptors have been extensively studied on conventional camera images, the effectiveness of these techniques on LiDAR-generated images, i.e., reflectivity and range images, has not been assessed. These images have gained attention due to their resilience in adverse conditions such as rain or fog. Additionally, they contain significant textural information that supplements the geometric information provided by LiDAR point clouds in the point cloud registration phase, especially when relying solely on LiDAR sensors. This addresses the challenge of drift encountered in LiDAR odometry (LO) in geometrically identical scenarios, or where not all of the raw point cloud is informative and parts of it may even be misleading. This paper aims to analyze the applicability of conventional image keypoint extractors and descriptors to LiDAR-generated images via a comprehensive quantitative investigation. Moreover, we propose a novel approach to enhance the robustness and reliability of LO. After extracting keypoints, we downsample the point cloud and integrate the result into the point cloud registration phase for odometry estimation. Our experiments demonstrate that the proposed approach achieves comparable accuracy with reduced computational overhead and a higher odometry publishing rate, and even superior performance in scenarios where using the raw point cloud is prone to drift. This, in turn, lays a foundation for subsequent investigations into the integration of LiDAR-generated images with LO. Our code is available on GitHub: https://github.com/TIERS/ws-lidar-as-camera-odom.
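The abstract does not spell out how keypoints drive the downsampling. One simple reading, sketched below as an assumption rather than the authors' implementation, is that the LiDAR-generated image and the point cloud are organized on the same row-major grid, so each keypoint pixel maps to a point index, and only points within a small pixel neighborhood of a keypoint are kept for registration. The function name and the `radius` parameter are hypothetical.

```python
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def downsample_by_keypoints(
    points: Sequence[Point3D],
    width: int,
    height: int,
    keypoints: Sequence[Tuple[int, int]],
    radius: int = 1,
) -> List[Point3D]:
    """Keep points whose image pixel lies within `radius` of any keypoint.

    `points` is an organized cloud in row-major order (index = row * width + col),
    matching the pixel layout of the LiDAR-generated image; `keypoints` are
    (row, col) pixel coordinates from any 2D detector.
    """
    if len(points) != width * height:
        raise ValueError("organized cloud must have width * height points")
    keep = set()
    for row, col in keypoints:
        for d_row in range(-radius, radius + 1):
            for d_col in range(-radius, radius + 1):
                r, c = row + d_row, col + d_col
                if 0 <= r < height and 0 <= c < width:
                    keep.add(r * width + c)
    # Sorted indices keep the original scan order in the reduced cloud.
    return [points[i] for i in sorted(keep)]


# Toy 4x3 organized cloud: the x coordinate encodes the point index.
cloud = [(float(i), 0.0, 0.0) for i in range(12)]
reduced = downsample_by_keypoints(cloud, width=4, height=3, keypoints=[(0, 0)], radius=1)
```

The reduced cloud would then be passed to a registration step in place of the raw cloud, trading point count for computation time.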
