Jorge Peña Queralta

Follow-Me in Micro-Mobility with End-to-End Imitation Learning

Nov 07, 2025

Abstract:Autonomous micro-mobility platforms face challenges from the perspective of the typical deployment environment: large indoor spaces or urban areas that are potentially crowded and highly dynamic. While social navigation algorithms have progressed significantly, optimizing user comfort and overall user experience over other typical metrics in robotics (e.g., time or distance traveled) is understudied. Specifically, these metrics are critical in commercial applications. In this paper, we show how imitation learning delivers smoother and overall better controllers, versus previously used manually-tuned controllers. We demonstrate how DAAV's autonomous wheelchair achieves state-of-the-art comfort in follow-me mode, in which it follows a human operator assisting persons with reduced mobility (PRM). This paper analyzes different neural network architectures for end-to-end control and demonstrates their usability in real-world production-level deployments.

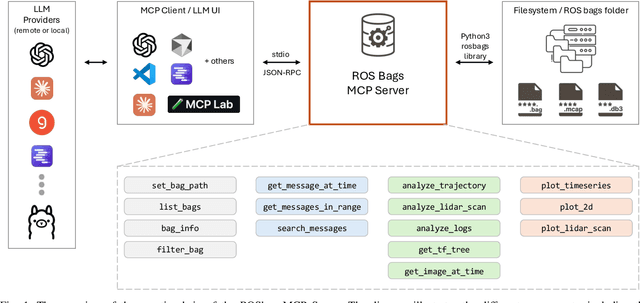

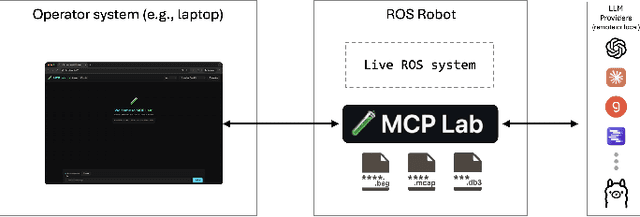

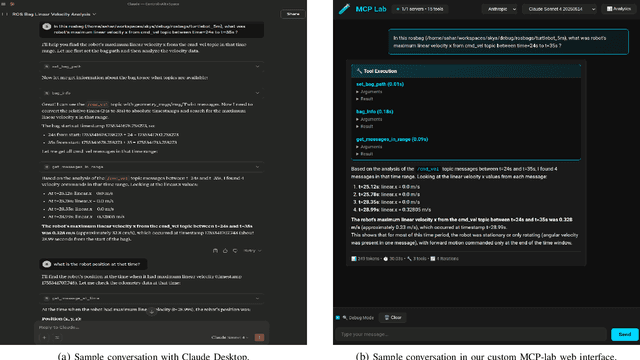

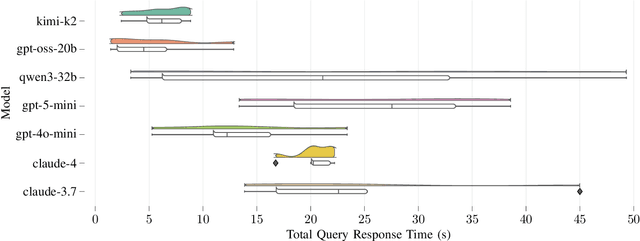

ROSBag MCP Server: Analyzing Robot Data with LLMs for Agentic Embodied AI Applications

Nov 05, 2025

Abstract:Agentic AI systems and Physical or Embodied AI systems have been two key research verticals at the forefront of Artificial Intelligence and Robotics, with Model Context Protocol (MCP) increasingly becoming a key component and enabler of agentic applications. However, the literature at the intersection of these verticals, i.e., Agentic Embodied AI, remains scarce. This paper introduces an MCP server for analyzing ROS and ROS 2 bags, allowing for analyzing, visualizing and processing robot data with natural language through LLMs and VLMs. We describe specific tooling built with robotics domain knowledge, with our initial release focused on mobile robotics and supporting natively the analysis of trajectories, laser scan data, transforms, or time series data. This is in addition to providing an interface to standard ROS 2 CLI tools ("ros2 bag list" or "ros2 bag info"), as well as the ability to filter bags with a subset of topics or trimmed in time. Coupled with the MCP server, we provide a lightweight UI that allows the benchmarking of the tooling with different LLMs, both proprietary (Anthropic, OpenAI) and open-source (through Groq). Our experimental results include the analysis of tool calling capabilities of eight different state-of-the-art LLM/VLM models, both proprietary and open-source, large and small. Our experiments indicate that there is a large divide in tool calling capabilities, with Kimi K2 and Claude Sonnet 4 demonstrating clearly superior performance. We also conclude that there are multiple factors affecting the success rates, from the tool description schema to the number of arguments, as well as the number of tools available to the models. The code is available with a permissive license at https://github.com/binabik-ai/mcp-rosbags.

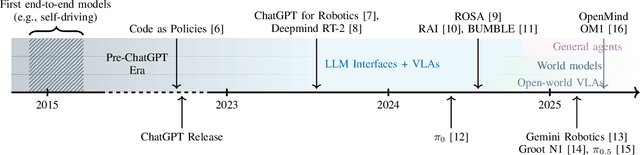

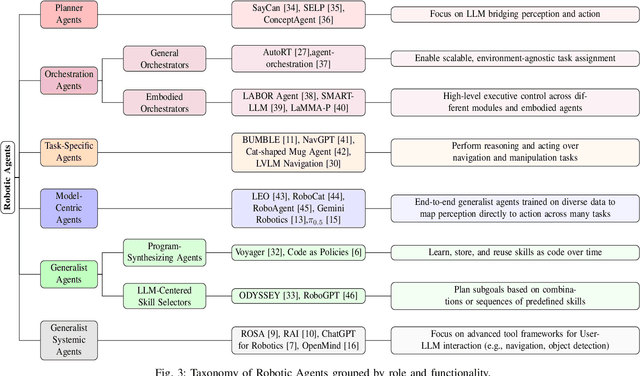

Towards Embodied Agentic AI: Review and Classification of LLM- and VLM-Driven Robot Autonomy and Interaction

Aug 07, 2025

Abstract:Foundation models, including large language models (LLMs) and vision-language models (VLMs), have recently enabled novel approaches to robot autonomy and human-robot interfaces. In parallel, vision-language-action models (VLAs) or large behavior models (LBMs) are increasing the dexterity and capabilities of robotic systems. This survey paper focuses on those works advancing towards agentic applications and architectures. This includes initial efforts exploring GPT-style interfaces to tooling, as well as more complex systems where AI agents are coordinators, planners, perception actors, or generalist interfaces. Such agentic architectures allow robots to reason over natural language instructions, invoke APIs, plan task sequences, or assist in operations and diagnostics. In addition to peer-reviewed research, due to the fast-evolving nature of the field, we highlight and include community-driven projects, ROS packages, and industrial frameworks that show emerging trends. We propose a taxonomy for classifying model integration approaches and present a comparative analysis of the role that agents play in different solutions in today's literature.

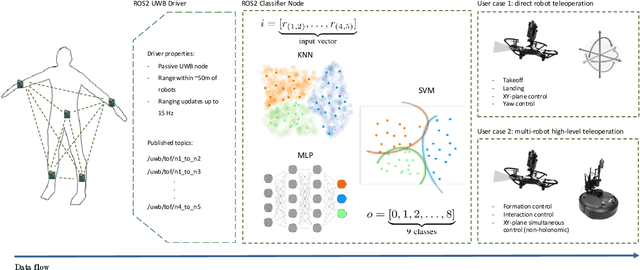

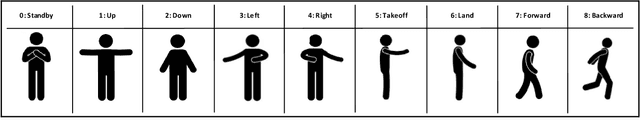

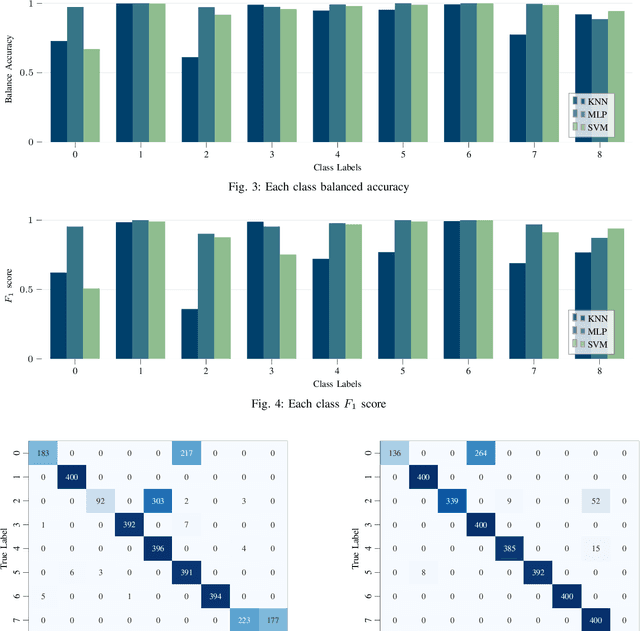

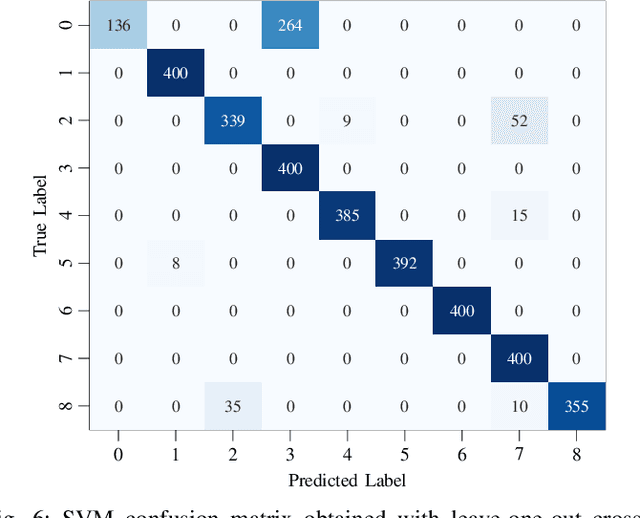

Benchmarking ML Approaches to UWB-Based Range-Only Posture Recognition for Human Robot-Interaction

Aug 28, 2024

Abstract:Human pose estimation involves detecting and tracking the positions of various body parts using input data from sources such as images, videos, or motion and inertial sensors. This paper presents a novel approach to human pose estimation that uses machine learning algorithms to predict human postures and translate them into robot motion commands using ultra-wideband (UWB) nodes, as an alternative to motion sensors. The study utilizes five UWB sensors mounted on the human body to enable the classification of still poses and more robust posture recognition. This approach ensures effective posture recognition across a variety of subjects. The range measurements between the sensors serve as input features for posture prediction models, which are implemented and compared for accuracy. For this purpose, machine learning algorithms including K-Nearest Neighbors (KNN), Support Vector Machine (SVM), and a deep Multi-Layer Perceptron (MLP) neural network are employed and compared in predicting the corresponding postures. We demonstrate the proposed approach for real-time control of different mobile/aerial robots with inference implemented in a ROS 2 node. Experimental results demonstrate the efficacy of the approach, showcasing successful prediction of human posture and corresponding robot movements with high accuracy.
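To make the range-only classification idea concrete, the following is a minimal sketch of a K-Nearest Neighbors classifier over range vectors. It is not the paper's implementation: the training samples, the four-element feature layout, the posture labels, and the helper name `knn_predict` are all hypothetical and chosen only for illustration.

```python
import math
from collections import Counter

# Hypothetical training data: each sample is a vector of UWB range
# measurements (metres) between body-worn nodes, plus a posture label.
# The numbers are invented for illustration and do not come from the paper.
TRAIN = [
    ([0.35, 0.40, 1.10, 1.15], "arms_down"),
    ([0.38, 0.42, 1.05, 1.12], "arms_down"),
    ([0.80, 0.85, 1.60, 1.58], "arms_up"),
    ([0.78, 0.88, 1.55, 1.62], "arms_up"),
    ([0.82, 0.41, 1.57, 1.13], "left_arm_up"),
    ([0.79, 0.39, 1.61, 1.10], "left_arm_up"),
]


def knn_predict(ranges, train=TRAIN, k=3):
    """Return the majority label among the k nearest training samples."""
    # Sort training samples by Euclidean distance to the query vector.
    nearest = sorted(train, key=lambda sample: math.dist(ranges, sample[0]))[:k]
    # Majority vote over the labels of the k closest samples.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(knn_predict([0.36, 0.41, 1.08, 1.14]))
```

In a deployment like the one the abstract describes, a predicted label would then be mapped to a robot motion command inside a ROS 2 node; that mapping is omitted here.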

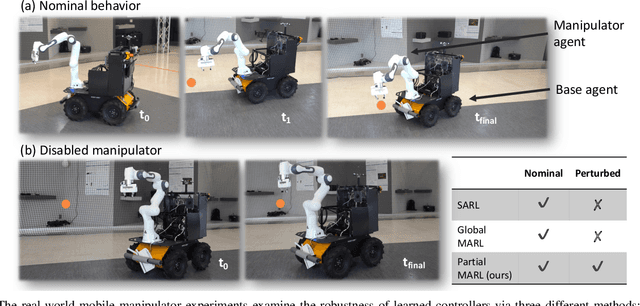

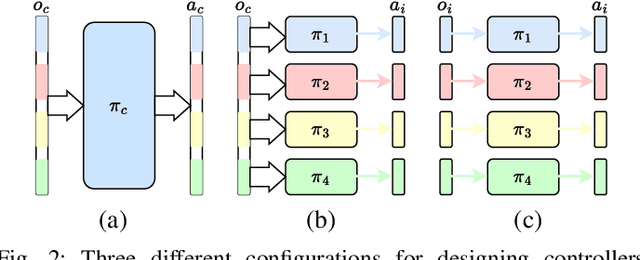

Less Is More: Robust Robot Learning via Partially Observable Multi-Agent Reinforcement Learning

Sep 26, 2023

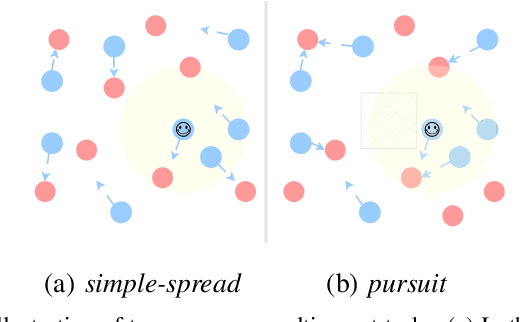

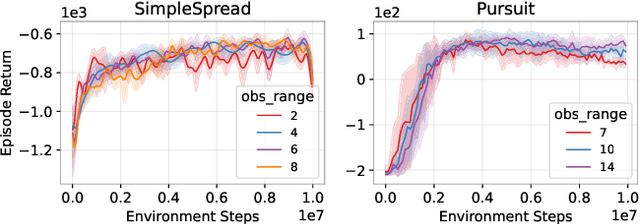

Abstract:In many multi-agent and high-dimensional robotic tasks, the controller can be designed in either a centralized or decentralized way. Correspondingly, it is possible to use either single-agent reinforcement learning (SARL) or multi-agent reinforcement learning (MARL) methods to learn such controllers. However, the relationship between these two paradigms remains under-studied in the literature. This work explores research questions in terms of robustness and performance of SARL and MARL approaches to the same task, in order to gain insight into the most suitable methods. We start by analytically showing the equivalence between these two paradigms under the full-state observation assumption. Then, we identify a broad subclass of Dec-POMDP tasks where the agents are weakly or partially interacting. In these tasks, we show that partial observations of each agent are sufficient for near-optimal decision-making. Furthermore, we propose to exploit such partially observable MARL to improve the robustness of robots when joint or agent failures occur. Our experiments on both simulated multi-agent tasks and a real robot task with a mobile manipulator validate the presented insights and the effectiveness of the proposed robust robot learning method via partially observable MARL.

A Customizable Conflict Resolution and Attribute-Based Access Control Framework for Multi-Robot Systems

Aug 31, 2023

Abstract:As multi-robot systems continue to advance and become integral to various applications, managing conflicts and ensuring secure access control are critical challenges that need to be addressed. Access control is essential in multi-robot systems to ensure secure and authorized interactions among robots, protect sensitive data, and prevent unauthorized access to resources. This paper presents a novel framework for customizable conflict resolution and attribute-based access control in multi-robot systems for ROS 2 leveraging the Hyperledger Fabric blockchain. We introduce an attribute-based access control (ABAC) Fabric-ROS 2 bridge to enable secure communication and control between users and robots. By defining conflict resolution policies based on task priorities, robot capabilities, and user-defined constraints, our framework offers a flexible way to resolve conflicts. Additionally, it incorporates attribute-based access control, granting access rights based on user and robot attributes. ABAC offers a modular approach to control access compared to existing access control approaches in ROS 2, such as SROS2. Through this framework, multi-robot systems can be managed efficiently, securely, and adaptably, ensuring controlled access to resources and managing conflicts. Our experimental evaluation shows that our framework marginally improves latency and throughput over existing Fabric and ROS 2 integration solutions. At higher network load, it is the only solution to operate reliably without a diverging transaction commitment latency. We also demonstrate how conflicts arising from simultaneous control of a robot by two users are resolved in real-time and motion distortion is effectively eliminated.
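The two mechanisms named in this abstract, attribute-based access decisions and priority-based conflict resolution, can be illustrated with a small sketch. It is an assumption-laden toy, not the paper's Fabric-ROS 2 bridge: the `Rule` structure, the attribute names, the example policy, and the tie-breaking rule are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Rule:
    """Hypothetical ABAC rule: attributes required for one action."""
    action: str
    user_attrs: dict = field(default_factory=dict)
    robot_attrs: dict = field(default_factory=dict)


# Hypothetical policy, invented for illustration only.
POLICY = [
    Rule("teleoperate", {"role": "operator"}, {"type": "ground"}),
    Rule("read_telemetry", {"org": "lab"}, {}),
]


def matches(required, actual):
    """True if every required attribute is present with the same value."""
    return all(actual.get(key) == value for key, value in required.items())


def is_allowed(user, robot, action, policy=POLICY):
    """Grant access if any rule for this action matches both attribute sets."""
    return any(
        rule.action == action
        and matches(rule.user_attrs, user)
        and matches(rule.robot_attrs, robot)
        for rule in policy
    )


def resolve_conflict(requests):
    """Pick one of several simultaneous requests.

    Assumed policy: highest priority wins; ties go to the earliest timestamp.
    """
    return max(requests, key=lambda r: (r["priority"], -r["timestamp"]))


if __name__ == "__main__":
    operator = {"role": "operator", "org": "lab"}
    print(is_allowed(operator, {"type": "ground"}, "teleoperate"))
    print(is_allowed(operator, {"type": "aerial"}, "teleoperate"))
```

Keeping the attribute check separate from the conflict policy mirrors the modularity the abstract attributes to ABAC: rules can change without touching the resolution logic.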

Vision-based Safe Autonomous UAV Docking with Panoramic Sensors

May 25, 2023

Abstract:The remarkable growth of unmanned aerial vehicles (UAVs) has also sparked concerns about safety measures during their missions. To advance towards safer autonomous aerial robots, this work presents a vision-based solution to ensuring safe autonomous UAV landings with minimal infrastructure. During docking maneuvers, UAVs pose a hazard to people in the vicinity. In this paper, we propose the use of a single omnidirectional panoramic camera pointing upwards from a landing pad to detect and estimate the position of people around the landing area. The images are processed in real-time on an embedded computer, which communicates with the onboard computer of approaching UAVs to transition between landing, hovering or emergency landing states. While landing, the ground camera also aids in finding an optimal position, which can be required in case of low battery or when hovering is no longer possible. We use a YOLOv7-based object detection model and an XGBoost model for localizing nearby people, and the open-source ROS and PX4 frameworks for communication, interfacing, and control of the UAV. We present both simulation and real-world indoor experimental results to show the efficiency of our methods.

Benchmarking UWB-Based Infrastructure-Free Positioning and Multi-Robot Relative Localization: Dataset and Characterization

May 15, 2023

Abstract:Ultra-wideband (UWB) positioning has emerged as a low-cost and dependable localization solution for multiple use cases, from mobile robots to asset tracking within the Industrial IoT. The technology is mature and the scientific literature contains multiple datasets and methods for localization based on fixed UWB nodes. At the same time, research in UWB-based relative localization and infrastructure-free localization is gaining traction. However, tools and datasets in this domain are scarce. Therefore, we introduce in this paper a novel dataset for benchmarking infrastructure-free relative localization targeting the domain of multi-robot systems. Compared to previous datasets, we analyze the performance of different relative localization approaches for a much wider variety of scenarios with varying numbers of fixed and mobile nodes. A motion capture system provides ground truth data. The data are multi-modal and include inertial or odometry measurements for benchmarking sensor fusion methods. Additionally, the dataset contains measurements of ranging accuracy based on the relative orientation of antennas and a comprehensive set of measurements for ranging between a single pair of nodes. Our experimental analysis shows that high localization accuracy can be achieved, but the variability of the ranging error is significant across different settings and setups.

Simulation Analysis of Exploration Strategies and UAV Planning for Search and Rescue

Apr 11, 2023

Abstract:Aerial scans with unmanned aerial vehicles (UAVs) are becoming more widely adopted across industries, from smart farming to urban mapping. An application area that can leverage the strength of such systems is search and rescue (SAR) operations. However, with a vast variability in strategies and topology of application scenarios, as well as the difficulties in setting up real-world UAV-aided SAR operations for testing, designing an optimal flight pattern to search for and detect all victims can be a challenging problem. Specifically, the deployed UAV should be able to scan the area in the shortest amount of time while maintaining high victim detection recall rates. Therefore, low probability of false negatives (i.e., high recall) is more important than precision in this case. To address the issues mentioned above, we have developed a simulation environment that emulates different SAR scenarios and allows experimentation with flight missions to provide insight into their efficiency. The solution was developed with the open-source ROS framework and Gazebo simulator, with PX4 as the autopilot system for flight control, and YOLO as the object detector.
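The preference for recall over precision stated in this abstract can be made concrete with a short worked example. The detection counts below are hypothetical and only illustrate the trade-off; they are not results from the paper.

```python
def precision(tp, fp):
    """Fraction of reported detections that are real victims."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Fraction of real victims that were detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical counts for two flight missions over an area with 20 victims.
# tp: victims found, fp: false alarms, fn: victims missed.
MISSIONS = {
    "low_altitude_sweep": {"tp": 18, "fp": 12, "fn": 2},
    "high_altitude_sweep": {"tp": 14, "fp": 1, "fn": 6},
}


def best_for_sar(missions):
    """Rank missions by recall first, using precision only to break ties."""
    return max(
        missions,
        key=lambda name: (
            recall(missions[name]["tp"], missions[name]["fn"]),
            precision(missions[name]["tp"], missions[name]["fp"]),
        ),
    )


if __name__ == "__main__":
    for name, c in MISSIONS.items():
        print(name, precision(c["tp"], c["fp"]), recall(c["tp"], c["fn"]))
    print(best_for_sar(MISSIONS))
```

Under this recall-first ranking, the mission with more false alarms but fewer missed victims is preferred, which matches the reasoning in the abstract that a false negative is costlier than a false positive in search and rescue.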

Event-driven Fabric Blockchain - ROS 2 Interface: Towards Secure and Auditable Teleoperation of Mobile Robots

Apr 03, 2023

Abstract:The integration of blockchain technology in robotic systems has been met by the community with a combination of hype and skepticism. The current literature shows that there is indeed potential for more secure and trustable distributed robotic systems. However, it is still unclear in which aspects of robotics, beyond high-level decision making, blockchain technology can indeed be usable. This paper explores the limits of a permissioned blockchain framework, Hyperledger Fabric, for teleoperation. Remote operation of mobile robots can benefit from the auditability and security properties of a blockchain. We study the potential benefits and the main limitations of such an approach. We introduce a new design and implementation for an event-driven Fabric-ROS 2 bridge that is able to maintain lower latencies at higher network loads than previous solutions. We also show this opens the door to more realistic use cases and applications. Our experiments with small aerial robots show latencies in the hundreds of milliseconds and simultaneous control of both a single and multi-robot system. We analyze the main trade-offs and limitations for real-world near real-time remote teleoperation.
