Li Qingqing

Robust Multi-Modal Multi-LiDAR-Inertial Odometry and Mapping for Indoor Environments

Mar 05, 2023

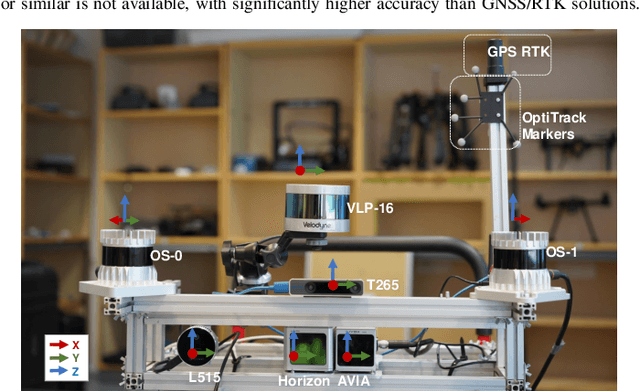

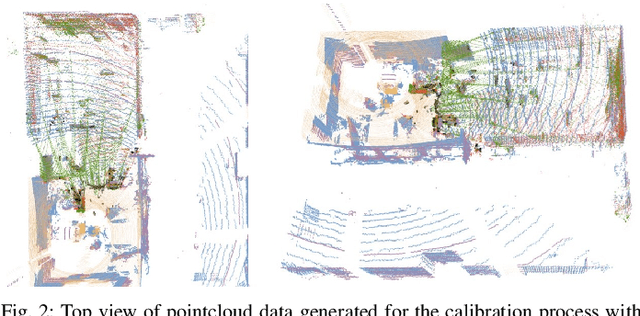

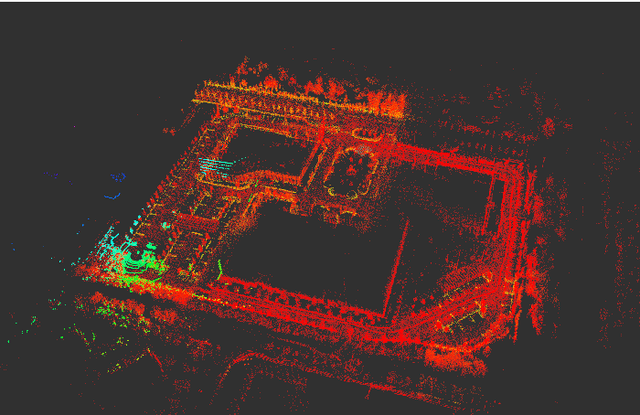

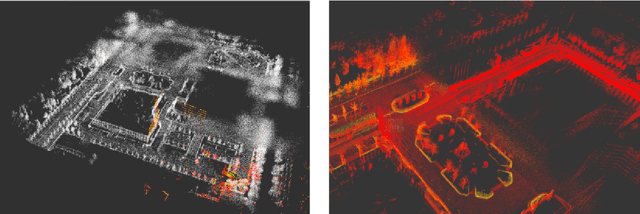

Abstract: Integrating multiple LiDAR sensors can significantly enhance a robot's perception of the environment, enabling it to capture adequate measurements for simultaneous localization and mapping (SLAM). Indeed, solid-state LiDARs can bring high resolution at a low cost compared to traditional spinning LiDARs in robotic applications. However, their reduced field of view (FoV) limits performance, particularly indoors. In this paper, we propose a tightly-coupled multi-modal multi-LiDAR-inertial SLAM system for surveying and mapping tasks. By taking advantage of both solid-state and spinning LiDARs, and built-in inertial measurement units (IMUs), we achieve both robust and low-drift ego-motion estimation as well as high-resolution maps in diverse challenging indoor environments (e.g., small, featureless rooms). First, we use spatial-temporal calibration modules to align timestamps and calibrate extrinsic parameters between sensors. Then, we extract two groups of feature points, edge and plane points, from LiDAR data. Next, with pre-integrated IMU data, an undistortion module is applied to the LiDAR point cloud data. Finally, the undistorted point clouds are merged into one point cloud and processed with a sliding-window-based optimization module. From extensive experimental results, our method shows competitive performance with state-of-the-art spinning-LiDAR-only or solid-state-LiDAR-only SLAM systems in diverse environments. More results, code, and dataset can be found at https://github.com/TIERS/multi-modal-loam.
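
The merging step mentioned in the abstract can be illustrated with a minimal sketch. It assumes the extrinsic calibration is available as a 3x3 rotation matrix and a translation vector mapping the secondary LiDAR frame into the primary one. The function names `transform_points` and `merge_clouds` are hypothetical and are not taken from the authors' code.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def transform_points(points: Sequence[Point],
                     rotation: Sequence[Sequence[float]],
                     translation: Sequence[float]) -> List[Point]:
    """Apply p' = R * p + t to every point (hypothetical helper)."""
    out: List[Point] = []
    for x, y, z in points:
        out.append(tuple(
            rotation[r][0] * x + rotation[r][1] * y + rotation[r][2] * z
            + translation[r]
            for r in range(3)
        ))
    return out


def merge_clouds(primary: Sequence[Point],
                 secondary: Sequence[Point],
                 rotation: Sequence[Sequence[float]],
                 translation: Sequence[float]) -> List[Point]:
    """Express the secondary cloud in the primary frame and concatenate."""
    return list(primary) + transform_points(secondary, rotation, translation)


# Assumed extrinsics: 90 degree yaw plus a 1 m offset along x.
R = [[0.0, -1.0, 0.0],
     [1.0, 0.0, 0.0],
     [0.0, 0.0, 1.0]]
t = (1.0, 0.0, 0.0)
merged = merge_clouds([(0.0, 0.0, 0.0)], [(1.0, 0.0, 0.0)], R, t)
```

In a real pipeline the clouds would first be time-aligned and undistorted, as the abstract describes; this sketch covers only the rigid-body merge.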

A Benchmark for Multi-Modal Lidar SLAM with Ground Truth in GNSS-Denied Environments

Oct 03, 2022

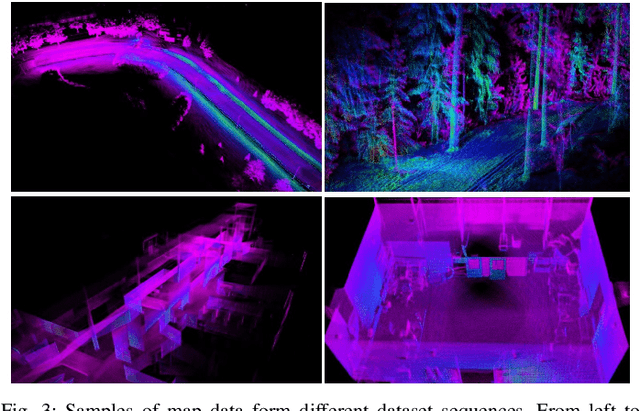

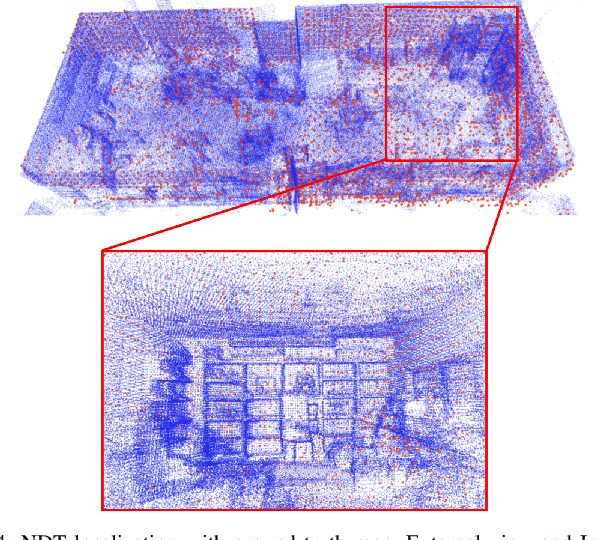

Abstract: Lidar-based simultaneous localization and mapping (SLAM) approaches have obtained considerable success in autonomous robotic systems. This is in part owing to the high accuracy of robust SLAM algorithms and the emergence of new and lower-cost lidar products. This study benchmarks current state-of-the-art lidar SLAM algorithms with a multi-modal lidar sensor setup showcasing diverse scanning modalities (spinning and solid-state) and sensing technologies, and lidar cameras, mounted on a mobile sensing and computing platform. We extend our previous multi-modal multi-lidar dataset with additional sequences and new sources of ground truth data. Specifically, we propose a new multi-modal multi-lidar SLAM-assisted and ICP-based sensor fusion method for generating ground truth maps. With these maps, we then match real-time point cloud data using a normal distributions transform (NDT) method to obtain the ground truth with full 6-DOF pose estimation. This novel ground truth data leverages high-resolution spinning and solid-state lidars. We also include new open road sequences with GNSS-RTK data and additional indoor sequences with motion capture (MOCAP) ground truth, complementing the previous forest sequences with MOCAP data. We perform an analysis of the positioning accuracy achieved with ten different SLAM algorithm and lidar combinations. We also report the resource utilization in four different computational platforms and a total of five settings (Intel and Jetson ARM CPUs). Our experimental results show that current state-of-the-art lidar SLAM algorithms perform very differently for different types of sensors. More results, code, and the dataset can be found at: https://github.com/TIERS/tiers-lidars-dataset-enhanced.

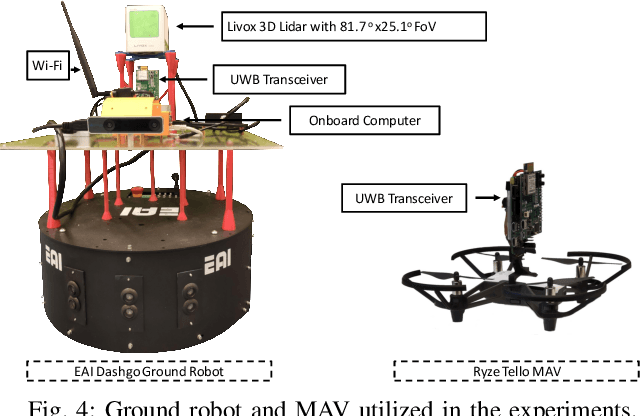

Cooperative UWB-Based Localization for Outdoors Positioning and Navigation of UAVs aided by Ground Robots

Apr 01, 2021

Abstract: Unmanned aerial vehicles (UAVs) are becoming largely ubiquitous with an increasing demand for aerial data. Accurate navigation and localization, required for precise data collection in many industrial applications, often rely on RTK GNSS. These systems, capable of centimeter-level accuracy, require a setup and calibration process and are relatively expensive. This paper addresses the problem of accurate positioning and navigation of UAVs through cooperative localization. Inexpensive ultra-wideband (UWB) transceivers installed on both the UAV and a support ground robot enable centimeter-level relative positioning. With fast deployment and wide setup flexibility, the proposed system is able to accommodate different environments and can also be utilized in GNSS-denied environments. Through extensive simulations and field tests, we evaluate the accuracy of the system and compare it to GNSS in urban environments where multipath transmission degrades accuracy. For completeness, we include visual-inertial odometry in the experiments and compare the performance with the UWB-based cooperative localization.
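
As a rough illustration of how ranges from several UWB transceivers can yield a relative position, the sketch below solves a linearized least-squares multilateration problem in 2D. This is a generic textbook formulation, not necessarily the estimator used in the paper. The anchor layout, the planar simplification, and the function name `multilaterate_2d` are all assumptions.

```python
import math
from typing import Sequence, Tuple


def multilaterate_2d(anchors: Sequence[Tuple[float, float]],
                     ranges: Sequence[float]) -> Tuple[float, float]:
    """Estimate a 2D position from ranges to known anchor positions.

    Subtracting the first range equation from the others removes the
    quadratic terms, leaving a linear system solved via normal equations.
    """
    if len(anchors) < 3 or len(anchors) != len(ranges):
        raise ValueError("need at least three anchors, one range per anchor")
    (x0, y0), r0 = anchors[0], ranges[0]
    s_aa = s_ab = s_bb = s_ac = s_bc = 0.0
    for (xi, yi), ri in zip(anchors[1:], ranges[1:]):
        a = 2.0 * (xi - x0)
        b = 2.0 * (yi - y0)
        c = r0 ** 2 - ri ** 2 + xi ** 2 + yi ** 2 - x0 ** 2 - y0 ** 2
        s_aa += a * a
        s_ab += a * b
        s_bb += b * b
        s_ac += a * c
        s_bc += b * c
    det = s_aa * s_bb - s_ab * s_ab
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is not observable")
    x = (s_ac * s_bb - s_ab * s_bc) / det
    y = (s_aa * s_bc - s_ab * s_ac) / det
    return x, y


# Assumed layout: four transceivers at the corners of a 1 m x 1 m ground robot.
anchors = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
true_position = (2.0, 3.0)
ranges = [math.hypot(true_position[0] - ax, true_position[1] - ay)
          for ax, ay in anchors]
estimate = multilaterate_2d(anchors, ranges)
```

With noise-free ranges the estimate matches the true position. With real UWB measurements the least-squares solution averages out part of the ranging noise.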

Multi Sensor Fusion for Navigation and Mapping in Autonomous Vehicles: Accurate Localization in Urban Environments

Mar 25, 2021

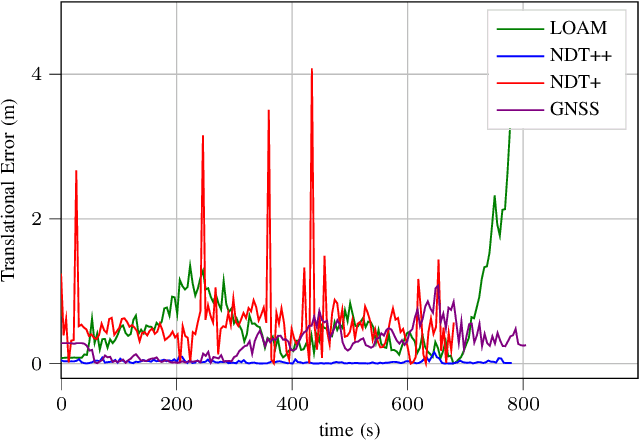

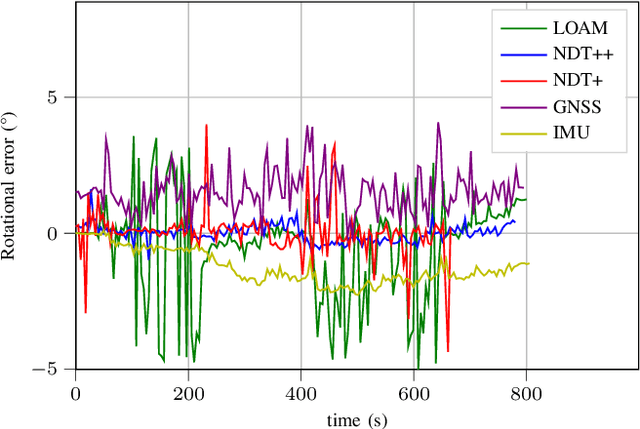

Abstract: The combination of data from multiple sensors, also known as sensor fusion or data fusion, is a key aspect in the design of autonomous robots. In particular, algorithms able to accommodate sensor fusion techniques enable increased accuracy, and are more resilient against the malfunction of individual sensors. The development of algorithms for autonomous navigation, mapping and localization has seen major advances over the past two decades. Nonetheless, challenges remain in developing robust solutions for accurate localization in dense urban environments, where the so-called last-mile delivery occurs. In these scenarios, local motion estimation is combined with the matching of real-time data with a detailed pre-built map. In this paper, we utilize data gathered with an autonomous delivery robot to compare different sensor fusion techniques and evaluate which algorithms provide the highest accuracy depending on the environment. The techniques we analyze and propose in this paper utilize 3D lidar data, inertial data, GNSS data and wheel encoder readings. We show how lidar scan matching combined with other sensor data can be used to increase the accuracy of the robot localization and, in consequence, its navigation. Moreover, we propose a strategy to reduce the impact on navigation performance when a change in the environment renders map data invalid or part of the available map is corrupted.

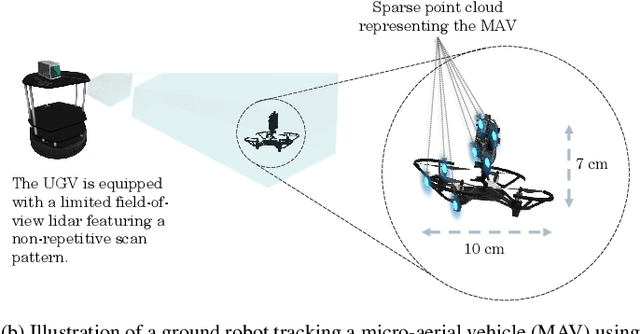

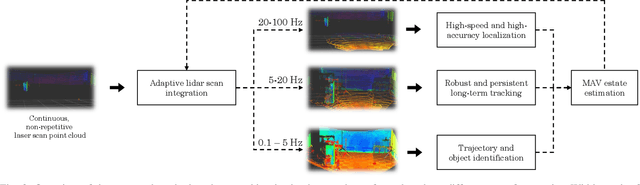

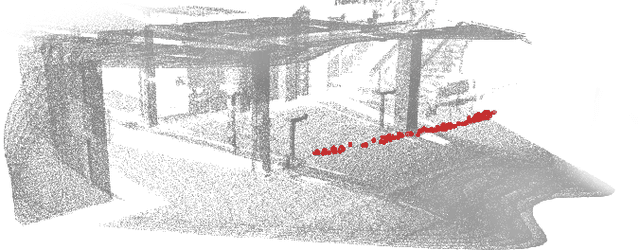

Adaptive Lidar Scan Frame Integration: Tracking Known MAVs in 3D Point Clouds

Mar 06, 2021

Abstract: Micro-aerial vehicles (MAVs) are becoming ubiquitous across multiple industries and application domains. Lightweight MAVs with only an onboard flight controller and a minimal sensor suite (e.g., IMU, vision, and vertical ranging sensors) have potential as mobile and easily deployable sensing platforms. When deployed from a ground robot, a key parameter is the relative localization between the ground robot and the MAV. This paper proposes a novel method for tracking MAVs in lidar point clouds. We consider the speed and distance of the MAV to actively adapt the lidar's frame integration time and, in essence, the density and size of the point cloud to be processed. We show that this method enables more persistent and robust tracking when the speed of the MAV or its distance to the tracking sensor changes. In addition, we propose a multi-modal tracking method that relies on high-frequency scans for accurate state estimation, lower-frequency scans for robust and persistent tracking, and sub-Hz processing for trajectory and object identification. These three integration and processing modalities allow for an overall accurate and robust MAV tracking while ensuring the object being tracked meets shape and size constraints.
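
To make the idea of adapting the frame integration time concrete, here is a purely hypothetical heuristic. The rule, the parameter names, and every default value are assumptions for illustration and are not the paper's method. The sketch lengthens the integration window with distance (assuming returns on the target fall off with the square of the distance) and caps it so the MAV moves no more than a chosen blur distance within one frame.

```python
import math


def adaptive_integration_time(speed_mps: float,
                              distance_m: float,
                              max_blur_m: float = 0.1,
                              points_per_s_at_1m: float = 2000.0,
                              min_points: int = 20,
                              t_min: float = 0.02,
                              t_max: float = 1.0) -> float:
    """Pick a frame integration time in seconds (hypothetical heuristic)."""
    # Time needed to accumulate min_points on the target, assuming the
    # return rate decays with distance squared (an assumed model).
    t_density = min_points * distance_m ** 2 / points_per_s_at_1m
    # Longest window that keeps motion blur below max_blur_m.
    t_motion = max_blur_m / speed_mps if speed_mps > 0.0 else math.inf
    # Prefer enough points, but never exceed the motion limit or the bounds.
    return max(t_min, min(t_max, t_density, t_motion))


near_hovering = adaptive_integration_time(speed_mps=0.0, distance_m=1.0)
far_fast = adaptive_integration_time(speed_mps=2.0, distance_m=10.0)
far_slow = adaptive_integration_time(speed_mps=0.05, distance_m=10.0)
```

Under these assumed defaults, a fast MAV gets a short window even when far away, while a slow, distant one gets the longest allowed window.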

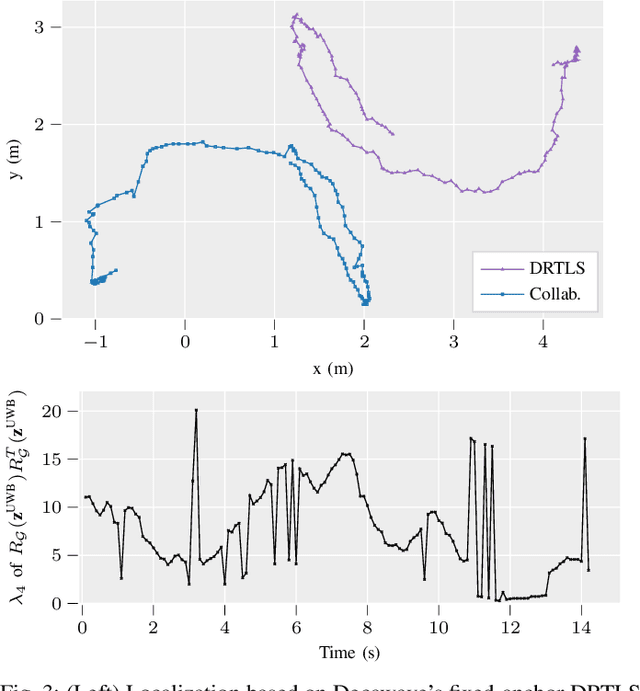

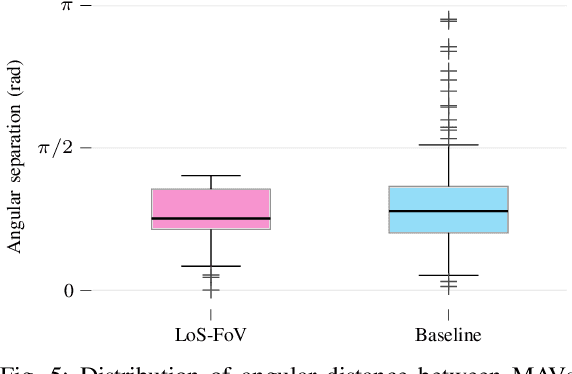

VIO-UWB-Based Collaborative Localization and Dense Scene Reconstruction within Heterogeneous Multi-Robot Systems

Nov 02, 2020

Abstract: Effective collaboration in multi-robot systems requires accurate and robust estimation of relative localization: from cooperative manipulation to collaborative sensing, and including cooperative exploration or cooperative transportation. This paper introduces a novel approach to collaborative localization for dense scene reconstruction in heterogeneous multi-robot systems comprising ground robots and micro-aerial vehicles (MAVs). We solve the problem of full relative pose estimation without sliding time windows by relying on UWB-based ranging and Visual Inertial Odometry (VIO)-based egomotion estimation for localization, while exploiting lidars onboard the ground robots for full relative pose estimation in a single reference frame. During operation, the rigidity eigenvalue provides feedback to the system. To tackle the challenge of path planning and obstacle avoidance of MAVs in GNSS-denied environments, we maintain line-of-sight between ground robots and MAVs. Because lidars capable of dense reconstruction have limited FoV, this introduces new constraints to the system. Therefore, we propose a novel formulation with a variant of the Dubins multiple traveling salesman problem with neighborhoods (DMTSPN) where we include constraints related to the limited FoV of the ground robots. Our approach is validated with simulations and experiments with real robots for the different parts of the system.

Secure Encoded Instruction Graphs for End-to-End Data Validation in Autonomous Robots

Sep 02, 2020

Abstract: As autonomous robots become increasingly ubiquitous, more attention is being paid to the security of robotic operation. Autonomous robots can be seen as cyber-physical systems that traverse the virtual realm and operate in the human dimension. As a consequence, securing the operation of autonomous robots goes beyond securing data, from sensor input to mission instructions, towards securing the interaction with their environment. There is a lack of research on methods that would allow a robot to ensure that both its sensors and actuators are operating correctly without external feedback. This paper introduces a robotic mission encoding method that serves as an end-to-end validation framework for autonomous robots. In particular, we put our framework into practice with a proof of concept describing a novel map encoding method that allows robots to navigate an objective environment with almost-zero a priori knowledge of it, and to validate operational instructions. We also demonstrate the applicability of our framework through experiments with real robots for two different map encoding methods. The encoded maps inherit all the advantages of traditional landmark-based navigation, with the addition of cryptographic hashes that enable end-to-end information validation. This end-to-end validation can be applied to virtually any aspect of robotic operation where there is a predefined set of operations or instructions given to the robot.
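
The following sketch shows one generic way cryptographic hashes can tie observed landmarks to mission instructions. It is not the encoding described in the paper: the chained SHA-256 scheme, the string landmark descriptors, and the names `step_digest`, `encode_mission` and `validate_step` are all assumptions for illustration.

```python
import hashlib
from typing import List, Sequence, Tuple

GENESIS = b"\x00" * 32  # assumed starting value for the chain


def step_digest(prev_digest: bytes, landmark: str, instruction: str) -> bytes:
    """Hash the previous digest together with a landmark and an instruction."""
    h = hashlib.sha256()
    h.update(prev_digest)
    h.update(landmark.encode("utf-8"))
    h.update(b"\x00")  # separator so ("ab", "c") differs from ("a", "bc")
    h.update(instruction.encode("utf-8"))
    return h.digest()


def encode_mission(steps: Sequence[Tuple[str, str]]) -> List[bytes]:
    """Return one chained digest per (landmark, instruction) step."""
    digest = GENESIS
    chain = []
    for landmark, instruction in steps:
        digest = step_digest(digest, landmark, instruction)
        chain.append(digest)
    return chain


def validate_step(chain: Sequence[bytes], index: int, prev_digest: bytes,
                  observed_landmark: str, instruction: str) -> bool:
    """Check that what the robot observed and was told matches the chain."""
    return step_digest(prev_digest, observed_landmark, instruction) == chain[index]


# Hypothetical two-step mission with made-up landmark descriptors.
mission = [("door:red", "turn_left"), ("pillar:2", "forward_3m")]
chain = encode_mission(mission)
ok_first = validate_step(chain, 0, GENESIS, "door:red", "turn_left")
ok_second = validate_step(chain, 1, chain[0], "pillar:2", "forward_3m")
tampered = validate_step(chain, 0, GENESIS, "door:red", "turn_right")
```

Because each digest depends on the previous one, a mismatch at any step, whether from a faulty sensor reading or an altered instruction, is detected at that step and invalidates the rest of the chain.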

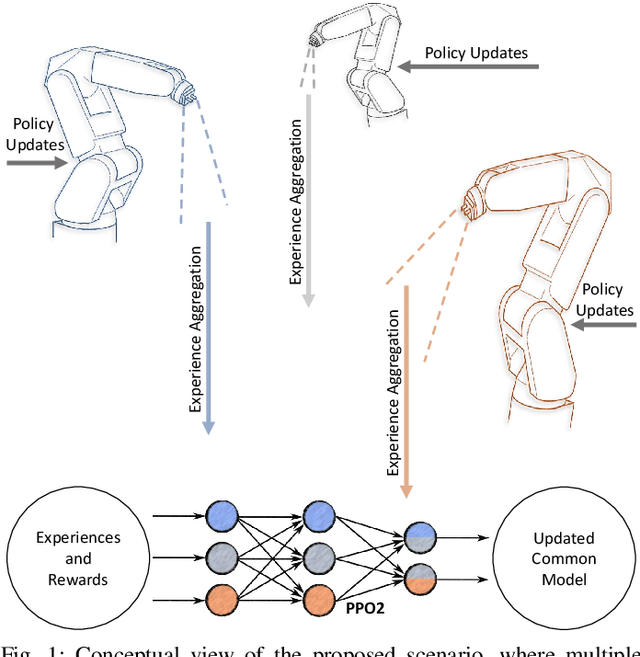

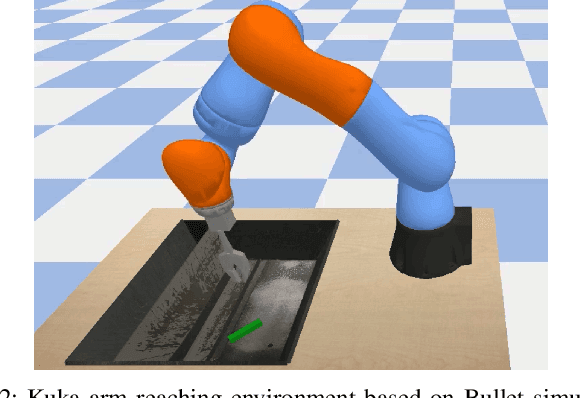

Towards Closing the Sim-to-Real Gap in Collaborative Multi-Robot Deep Reinforcement Learning

Aug 18, 2020

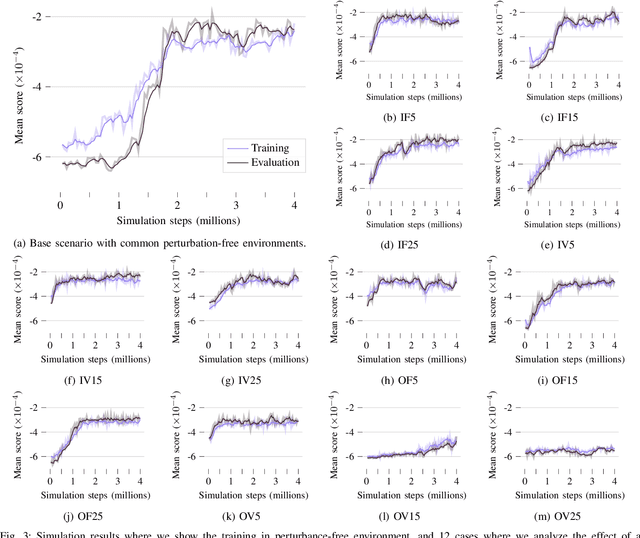

Abstract: Current research directions in deep reinforcement learning include bridging the simulation-reality gap, improving sample efficiency of experiences in distributed multi-agent reinforcement learning, together with the development of robust methods against adversarial agents in distributed learning, among many others. In this work, we are particularly interested in analyzing how multi-agent reinforcement learning can bridge the gap to reality in distributed multi-robot systems where the operation of the different robots is not necessarily homogeneous. These variations can happen due to sensing mismatches, inherent errors in terms of calibration of the mechanical joints, or simple differences in accuracy. While our results are simulation-based, we introduce the effect of sensing, calibration, and accuracy mismatches in distributed reinforcement learning with proximal policy optimization (PPO). We discuss how both the different types of perturbations and the number of agents experiencing those perturbations affect the collaborative learning effort. The simulations are carried out using a Kuka arm model in the Bullet physics engine. This is, to the best of our knowledge, the first work exploring the limitations of PPO in multi-robot systems when considering that different robots might be exposed to different environments where their sensors or actuators have induced errors. With the conclusions of this work, we set the initial point for future work on designing and developing methods to achieve robust reinforcement learning in the presence of real-world perturbations that might differ within a multi-robot system.

Ubiquitous Distributed Deep Reinforcement Learning at the Edge: Analyzing Byzantine Agents in Discrete Action Spaces

Aug 18, 2020

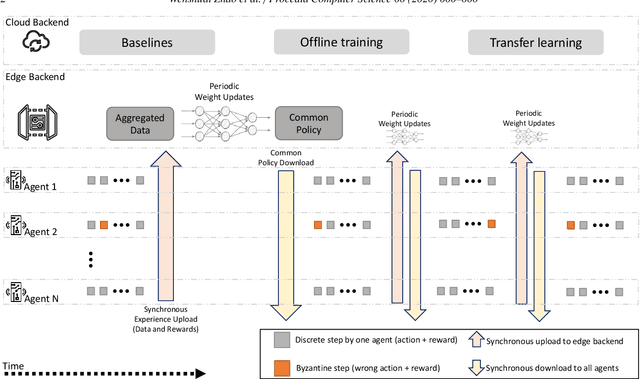

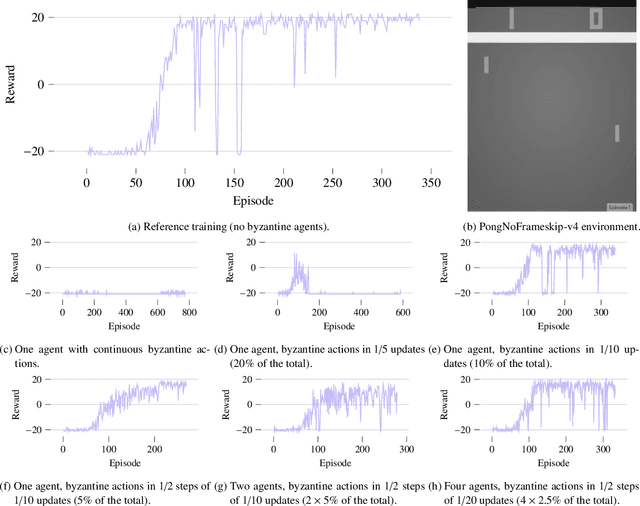

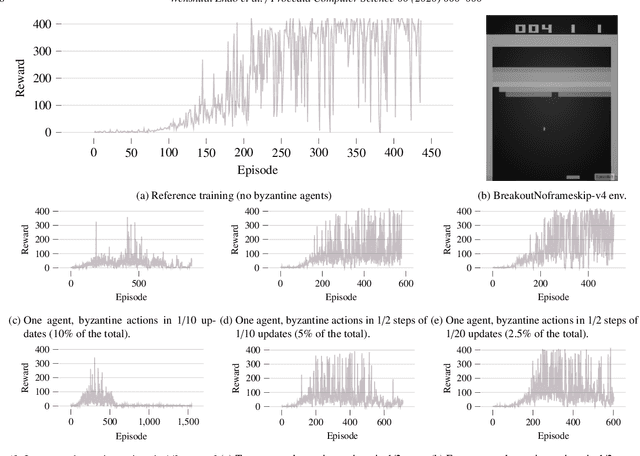

Abstract: The integration of edge computing in next-generation mobile networks is bringing low-latency and high-bandwidth ubiquitous connectivity to a myriad of cyber-physical systems. This will further boost the increasing intelligence that is being embedded at the edge in various types of autonomous systems, where collaborative machine learning has the potential to play a significant role. This paper discusses some of the challenges in multi-agent distributed deep reinforcement learning that can occur in the presence of Byzantine or malfunctioning agents. As the simulation-to-reality gap gets bridged, the probability of malfunctions or errors must be taken into account. We show how wrong discrete actions can significantly affect the collaborative learning effort. In particular, we analyze the effect of having a fraction of agents that might perform the wrong action with a given probability. We study the ability of the system to converge towards a common working policy through the collaborative learning process based on the number of experiences from each of the agents to be aggregated for each policy update, together with the fraction of wrong actions from agents experiencing malfunctions. Our experiments are carried out in a simulation environment using the Atari testbed for the discrete action spaces, and advantage actor-critic (A2C) for the distributed multi-agent training.
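
The perturbation described above, where an agent performs the wrong discrete action with a given probability, can be sketched as follows. This is an illustrative model only: the uniform choice among the remaining actions and the function name `byzantine_action` are assumptions, and the A2C training loop is omitted.

```python
import random


def byzantine_action(intended: int, n_actions: int, p_wrong: float,
                     rng: random.Random) -> int:
    """Return the intended action, or a different one with probability p_wrong.

    The wrong action is drawn uniformly from the other n_actions - 1 actions
    (an assumed error model).
    """
    if n_actions < 2:
        raise ValueError("need at least two actions to pick a wrong one")
    if not 0 <= intended < n_actions:
        raise ValueError("intended action out of range")
    if rng.random() < p_wrong:
        wrong = rng.randrange(n_actions - 1)
        # Skip over the intended action so the result always differs from it.
        return wrong if wrong < intended else wrong + 1
    return intended


rng = random.Random(0)
always_right = [byzantine_action(2, 6, 0.0, rng) for _ in range(100)]
always_wrong = [byzantine_action(2, 6, 1.0, rng) for _ in range(100)]
```

In a multi-agent experiment, such a wrapper would be applied only to the subset of agents designated as malfunctioning before their actions are sent to the environment.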

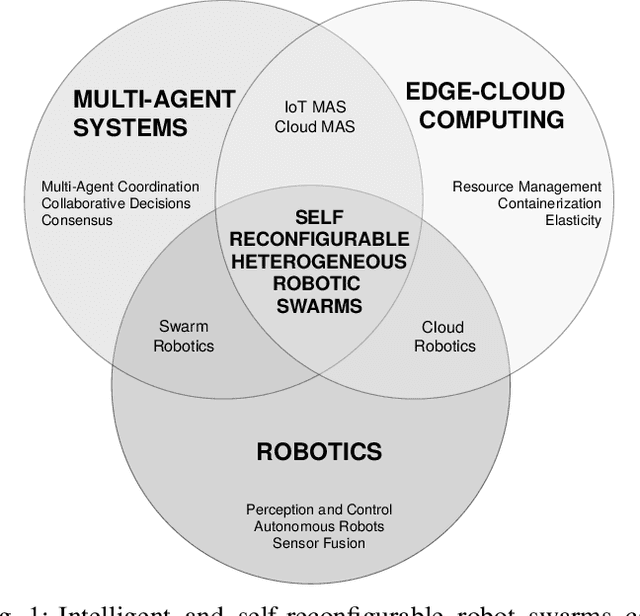

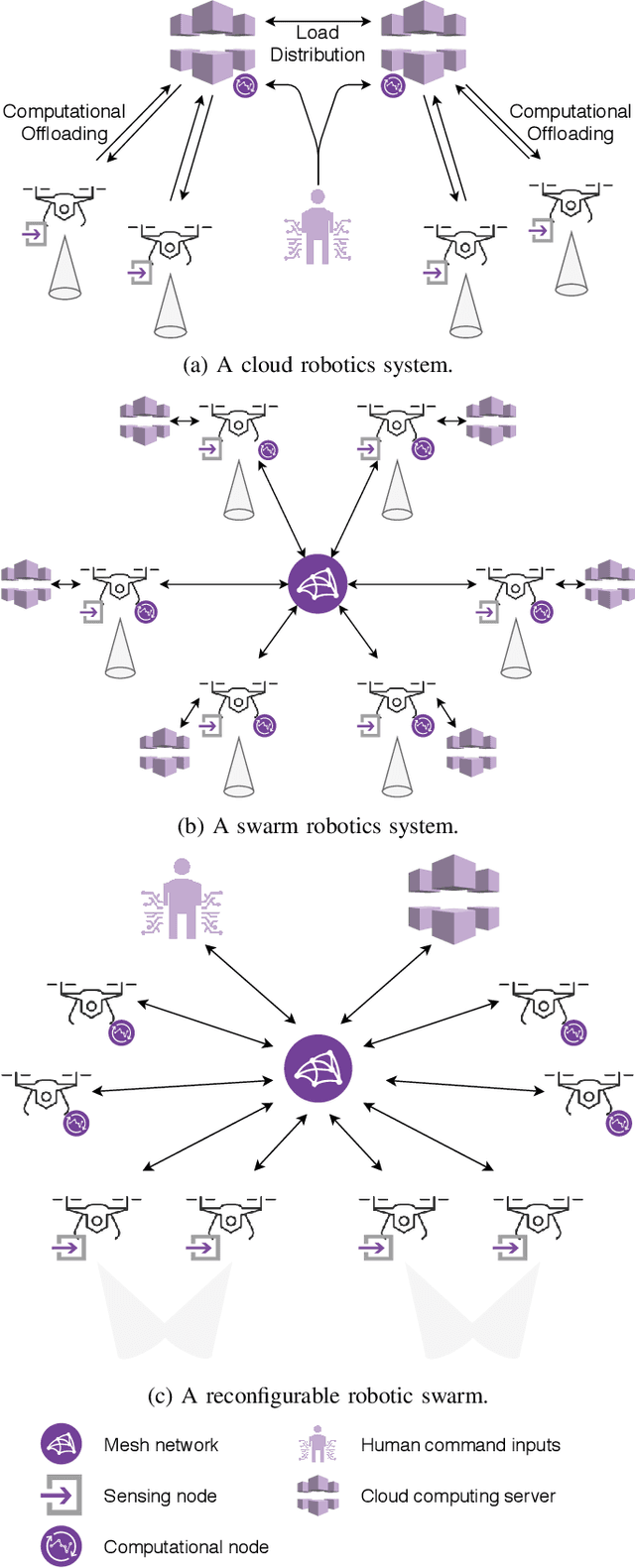

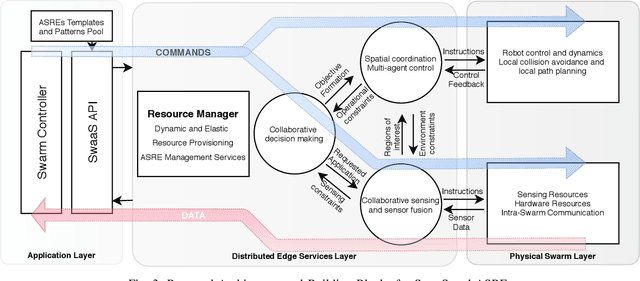

End-to-End Design for Self-Reconfigurable Heterogeneous Robotic Swarms

Apr 29, 2020

Abstract: More widespread adoption requires swarms of robots to be more flexible for real-world applications. Multiple challenges remain in complex scenarios where a large amount of data needs to be processed in real-time and high degrees of situational awareness are required. The options in this direction are limited in existing robotic swarms, mostly homogeneous robots with limited operational and reconfiguration flexibility. We address this by bringing elastic computing techniques and dynamic resource management from the edge-cloud computing domain to the swarm robotics domain. This enables the dynamic provisioning of collective capabilities in the swarm for different applications. Therefore, we transform a swarm into a distributed sensing and computing platform capable of complex data processing tasks, which can then be offered as a service. In particular, we discuss how this can be applied to adaptive resource management in a heterogeneous swarm of drones, and how we are implementing the dynamic deployment of distributed data processing algorithms. With an elastic drone swarm built on reconfigurable hardware and containerized services, it will be possible to raise the self-awareness, degree of intelligence, and level of autonomy of heterogeneous swarms of robots. We describe novel directions for collaborative perception, and new ways of interacting with a robotic swarm.
