Fabrizio Schiano

Reconfigurable Drone System for Transportation of Parcels With Variable Mass and Size

Nov 16, 2022

Abstract: Cargo drones are designed to carry payloads with predefined shape, size, and/or mass. This lack of flexibility requires a fleet of diverse drones tailored to specific cargo dimensions. Here we propose a new reconfigurable drone based on a modular design that adapts to different cargo shapes, sizes, and masses. We also propose a method for the automatic generation of drone configurations and suitable parameters for the flight controller. The parcel becomes the drone's body, to which several individual propulsion modules are attached. We demonstrate the use of the reconfigurable hardware and the accompanying software by transporting parcels of different masses and sizes, which require different numbers and placements of propulsion modules. The experiments are conducted indoors (with a motion capture system) and outdoors (with an RTK-GNSS sensor). The proposed design represents a cheaper and more versatile alternative to solutions involving several drones for parcel transportation.

Personalized Human-Swarm Interaction through Hand Motion

Mar 13, 2021

Abstract: The control of collective robotic systems, such as drone swarms, is often delegated to autonomous navigation algorithms due to their high dimensionality. However, like other robotic entities, drone swarms can still benefit from being teleoperated by human operators, whose perception and decision-making capabilities are still out of the reach of autonomous systems. Drone swarm teleoperation is only at its dawn, and a standard human-swarm interface (HRI) is missing to date. In this study, we analyzed the spontaneous interaction strategies of naive users with a swarm of drones. We implemented a machine-learning algorithm to define a personalized Body-Machine Interface (BoMI) based only on a short calibration procedure. During this procedure, the human operator is asked to move spontaneously as if they were in control of a simulated drone swarm. We found that hands are the most commonly adopted body segment, and thus we chose a LEAP Motion controller to track them and let the users control the aerial drone swarm. This choice makes our interface portable since it does not rely on a centralized system for tracking the human body. We validated our algorithm to define personalized HRIs for a set of participants in a realistic simulated environment, showing promising results in performance and user experience. Our method leaves unprecedented freedom to the user to choose between position and velocity control based only on their body motion preferences.

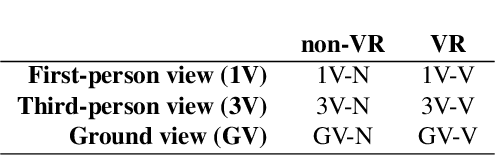

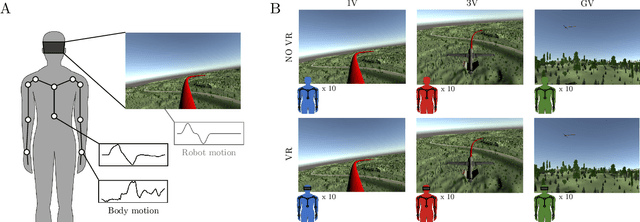

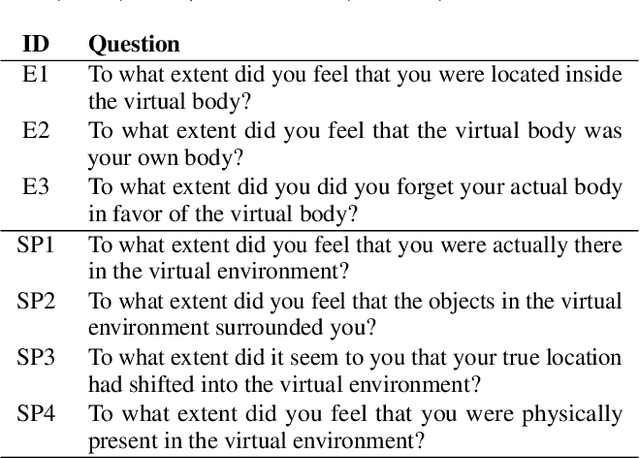

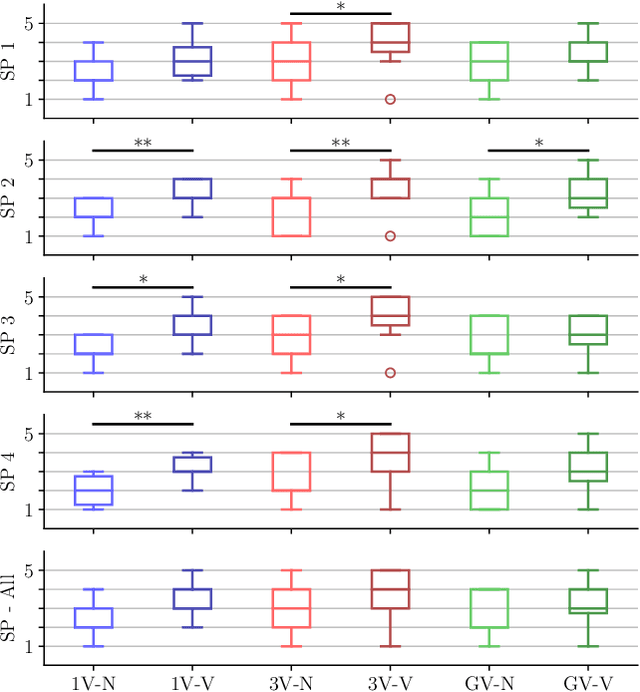

The Impact of Virtual Reality and Viewpoints in Body Motion Based Drone Teleoperation

Jan 30, 2021

Abstract: The operation of telerobotic systems can be a challenging task, requiring intuitive and efficient interfaces to enable inexperienced users to attain a high level of proficiency. Body-Machine Interfaces (BoMI) represent a promising alternative to standard control devices, such as joysticks, because they leverage intuitive body motion and gestures. It has been shown that the use of Virtual Reality (VR) and first-person view perspectives can increase the user's sense of presence in avatars. However, it is unclear whether these beneficial effects also occur in the teleoperation of non-anthropomorphic robots that display motion patterns different from those of humans. Here we describe experimental results on teleoperation of a non-anthropomorphic drone showing that VR correlates with a higher sense of spatial presence, whereas viewpoints moving coherently with the robot are associated with a higher sense of embodiment. Furthermore, the experimental results show that spontaneous body motion patterns are affected by VR and viewpoint conditions in terms of variability, amplitude, and robot correlates, suggesting that the design of BoMIs for drone teleoperation must take into account the use of Virtual Reality and the choice of the viewpoint.

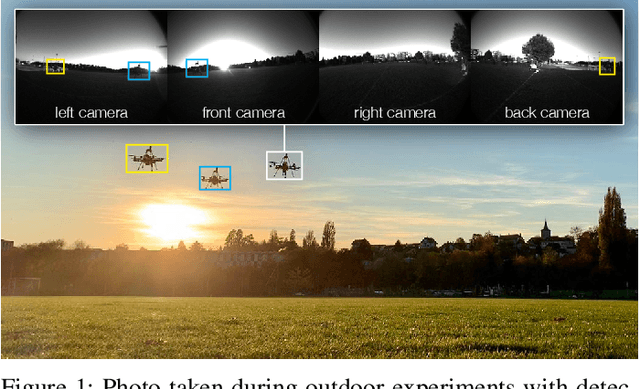

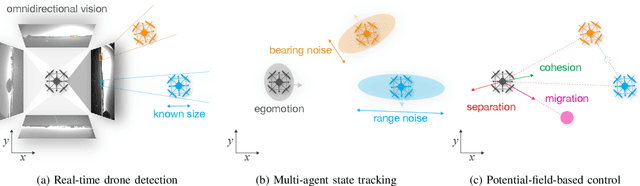

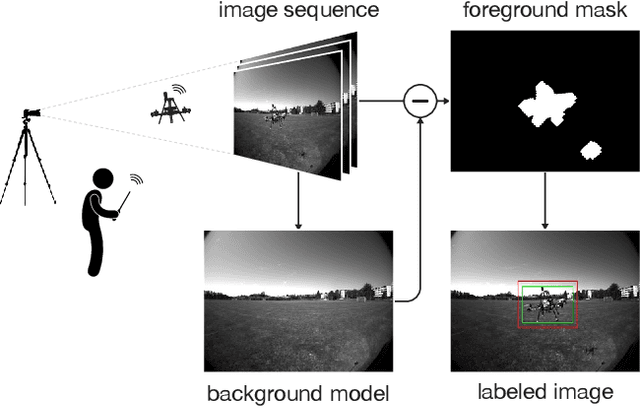

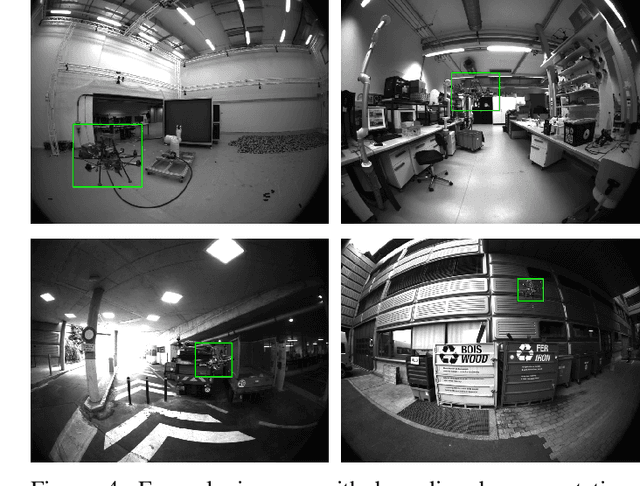

Vision-based flocking in outdoor environments

Dec 02, 2020

Abstract: Deployment of drone swarms usually relies on inter-agent communication or visual markers that are mounted on the vehicles to simplify their mutual detection. This letter proposes a vision-based detection and tracking algorithm that enables groups of drones to navigate without communication or visual markers. We employ a convolutional neural network to detect and localize nearby agents onboard the quadcopters in real-time. Rather than manually labeling a dataset, we automatically annotate images to train the neural network using background subtraction by systematically flying a quadcopter in front of a static camera. We use a multi-agent state tracker to estimate the relative positions and velocities of nearby agents, which are subsequently fed to a flocking algorithm for high-level control. The drones are equipped with multiple cameras to provide omnidirectional visual inputs. The camera setup ensures the safety of the flock by avoiding blind spots regardless of the agent configuration. We evaluate the approach with a group of three real quadcopters that are controlled using the proposed vision-based flocking algorithm. The results show that the drones can safely navigate in an outdoor environment despite substantial background clutter and difficult lighting conditions.

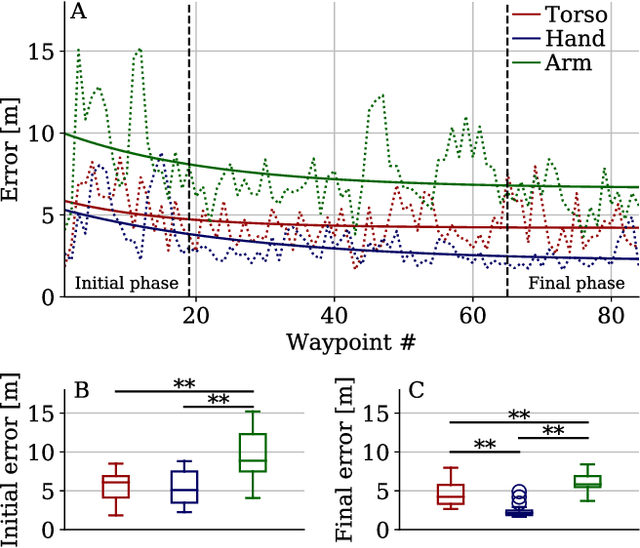
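
The abstract above describes feeding estimated relative positions and velocities of nearby agents to a flocking algorithm. As a rough illustration only, the sketch below shows a generic distance-keeping and velocity-alignment rule in 2D. It is not the controller used in the paper; the function name `flocking_command`, the gains `k_pos` and `k_align`, and the reference distance `d_ref` are all assumptions made for this example.

```python
import math


def flocking_command(rel_positions, rel_velocities,
                     d_ref=2.0, k_pos=1.0, k_align=0.5):
    """Return a 2D velocity command from neighbours' relative states.

    rel_positions / rel_velocities are lists of (x, y) tuples expressed
    relative to the controlled agent. All parameters are hypothetical.
    """
    n = len(rel_positions)
    if n == 0:
        # No neighbour detected: no flocking contribution.
        return (0.0, 0.0)

    ux = uy = 0.0
    for (px, py), (vx, vy) in zip(rel_positions, rel_velocities):
        dist = math.hypot(px, py)
        if dist > 1e-9:
            # Move toward a neighbour that is farther than d_ref,
            # away from one that is closer than d_ref.
            error = dist - d_ref
            ux += k_pos * error * px / dist
            uy += k_pos * error * py / dist
        # Alignment: reduce the velocity difference with the neighbour.
        ux += k_align * vx
        uy += k_align * vy

    # Average the contributions of all neighbours.
    return (ux / n, uy / n)


if __name__ == "__main__":
    # One neighbour 4 m ahead, moving at the same velocity as the agent.
    print(flocking_command([(4.0, 0.0)], [(0.0, 0.0)]))
```

In a vision-based setting the inputs would come from the state tracker rather than from communication, which is why the rule only uses relative quantities.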

Does spontaneous motion lead to intuitive Body-Machine Interfaces? A fitness study of different body segments for wearable telerobotics

Nov 15, 2020

Abstract: Human-Robot Interfaces (HRIs) represent a crucial component in telerobotic systems. Body-Machine Interfaces (BoMIs) based on body motion can feel more intuitive than standard HRIs for naive users as they leverage humans' natural control capability over their movements. Among the different methods used to map human gestures into robot commands, data-driven approaches select a set of body segments and transform their motion into commands for the robot based on the users' spontaneous motion patterns. Although this is a versatile and generic method, there is no scientific evidence that implementing an interface based on spontaneous motion maximizes its effectiveness. In this study, we compare a set of BoMIs based on different body segments to investigate this aspect. We evaluate the interfaces in a teleoperation task of a fixed-wing drone and observe users' performance and feedback. To this end, we use a framework that allows a user to control the drone with a single Inertial Measurement Unit (IMU) and without prior instructions. We show through a user study that selecting the body segment for a BoMI based on spontaneous motion can lead to sub-optimal performance. Based on our findings, we suggest additional metrics based on biomechanical and behavioral factors that might improve data-driven methods for the design of HRIs.

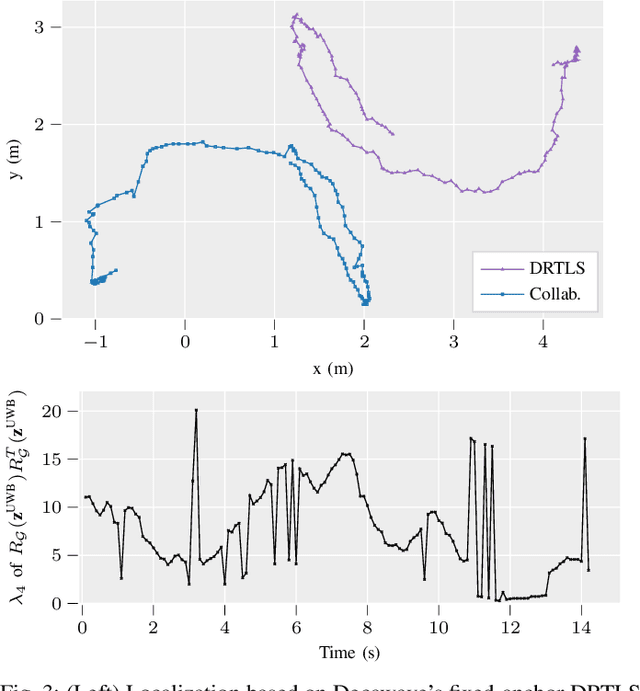

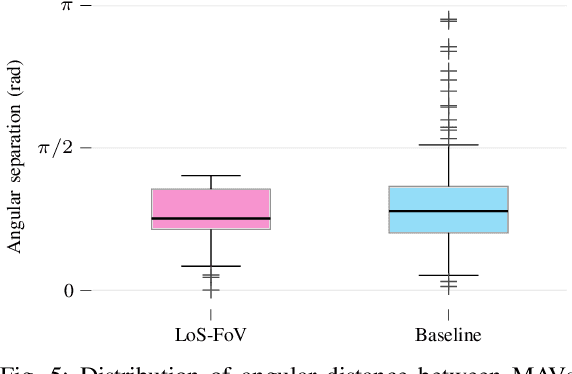

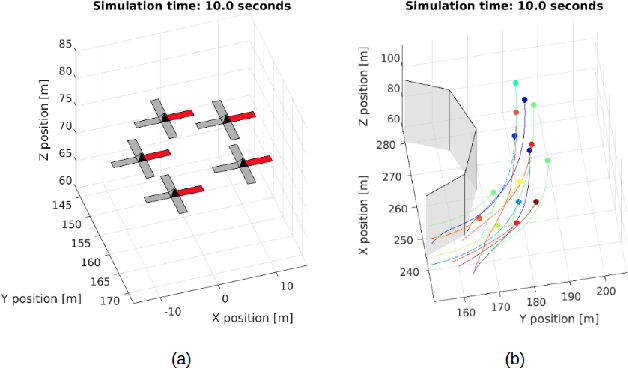

VIO-UWB-Based Collaborative Localization and Dense Scene Reconstruction within Heterogeneous Multi-Robot Systems

Nov 02, 2020

Abstract: Effective collaboration in multi-robot systems requires accurate and robust estimation of relative localization: from cooperative manipulation to collaborative sensing, and including cooperative exploration or cooperative transportation. This paper introduces a novel approach to collaborative localization for dense scene reconstruction in heterogeneous multi-robot systems comprising ground robots and micro-aerial vehicles (MAVs). We solve the problem of full relative pose estimation without sliding time windows by relying on UWB-based ranging and Visual Inertial Odometry (VIO)-based egomotion estimation for localization, while exploiting lidars onboard the ground robots for full relative pose estimation in a single reference frame. During operation, the rigidity eigenvalue provides feedback to the system. To tackle the challenge of path planning and obstacle avoidance of MAVs in GNSS-denied environments, we maintain line-of-sight between ground robots and MAVs. Because lidars capable of dense reconstruction have limited FoV, this introduces new constraints to the system. Therefore, we propose a novel formulation with a variant of the Dubins multiple traveling salesman problem with neighborhoods (DMTSPN) where we include constraints related to the limited FoV of the ground robots. Our approach is validated with simulations and experiments with real robots for the different parts of the system.

SwarmLab: a Matlab Drone Swarm Simulator

May 06, 2020

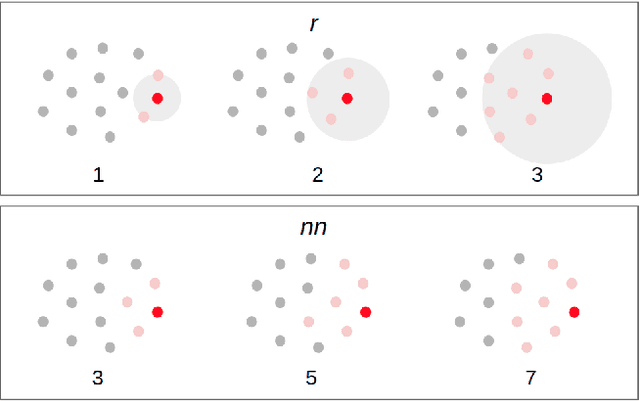

Abstract: Among the available solutions for drone swarm simulations, we identified a gap in simulation frameworks that allow easy algorithm prototyping, tuning, debugging and performance analysis, and do not require the user to interface with multiple programming languages. We present SwarmLab, a software package written entirely in Matlab, that aims at the creation of standardized processes and metrics to quantify the performance and robustness of swarm algorithms, with a particular focus on drones. We showcase the functionalities of SwarmLab by comparing two state-of-the-art algorithms for the navigation of aerial swarms in cluttered environments, Olfati-Saber's and Vasarhelyi's. We analyze the variability of the inter-agent distances and agents' speeds during flight. We also study some of the performance metrics presented, i.e. order, inter and extra-agent safety, union, and connectivity. While Olfati-Saber's approach results in a faster crossing of the obstacle field, Vasarhelyi's approach allows the agents to fly smoother trajectories, without oscillations. We believe that SwarmLab is relevant for both the biological and robotics research communities, and for education, since it allows fast algorithm development, the automatic collection of simulated data, and the systematic analysis of swarming behaviors with performance metrics inherited from the state of the art.

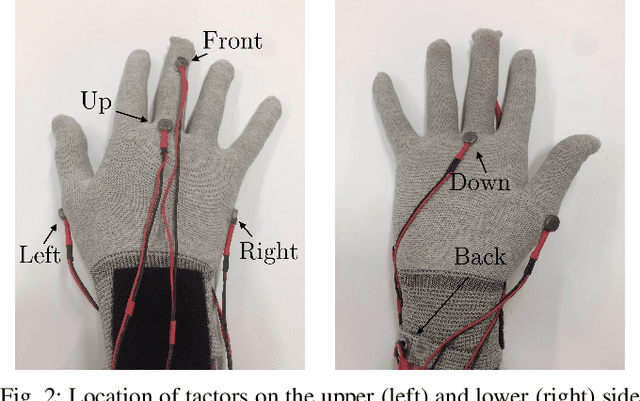
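
The SwarmLab abstract lists "order" among its performance metrics. As a hedged illustration, the sketch below computes one common definition of velocity order from the swarming literature: the average cosine similarity between the velocity directions of all ordered pairs of agents. It is written in Python rather than Matlab, it is not taken from SwarmLab, and the function name `order_metric` is an assumption; SwarmLab's exact definition may differ.

```python
import math
from itertools import permutations


def order_metric(velocities):
    """Average pairwise alignment of 2D velocity directions.

    Returns 1.0 when all agents fly in the same direction and lower
    values as headings diverge. Pairs involving a stationary agent
    contribute 0 (a choice made for this sketch).
    """
    n = len(velocities)
    if n < 2:
        raise ValueError("need at least two agents")

    total = 0.0
    # Iterate over all ordered pairs (i, j) with i != j.
    for (ax, ay), (bx, by) in permutations(velocities, 2):
        norm_a = math.hypot(ax, ay)
        norm_b = math.hypot(bx, by)
        if norm_a > 0.0 and norm_b > 0.0:
            total += (ax * bx + ay * by) / (norm_a * norm_b)

    return total / (n * (n - 1))


if __name__ == "__main__":
    # Three agents flying along +x at different speeds: fully ordered.
    print(order_metric([(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]))
```

Because the metric only uses directions, agents flying at different speeds along the same heading still count as fully ordered.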

Hand-worn Haptic Interface for Drone Teleoperation

Apr 15, 2020

Abstract: Drone teleoperation is usually accomplished using remote radio controllers, devices that can be hard to master for inexperienced users. Moreover, the limited amount of information fed back to the user about the robot's state, often limited to vision, can represent a bottleneck for operation in several conditions. In this work, we present a wearable interface for drone teleoperation and its evaluation through a user study. The two main features of the proposed system are a data glove to allow the user to control the drone trajectory by hand motion and a haptic system used to augment their awareness of the environment surrounding the robot. This interface can be employed for the operation of robotic systems in line of sight (LoS) by inexperienced operators and allows them to safely perform tasks common in inspection and search-and-rescue missions such as approaching walls and crossing narrow passages with limited visibility conditions. In addition to the design and implementation of the wearable interface, we performed a systematic study to assess the effectiveness of the system through three user studies (n = 36) to evaluate the users' learning path and their ability to perform tasks with limited visibility. We validated our ideas in both a simulated and a real-world environment. Our results demonstrate that the proposed system can improve teleoperation performance in different cases compared to standard remote controllers, making it a viable alternative to standard Human-Robot Interfaces.

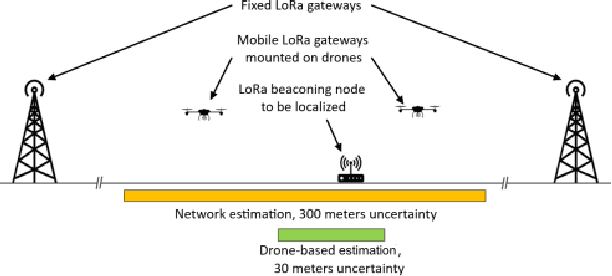

Drone-aided Localization in LoRa IoT Networks

Apr 08, 2020

Abstract: Besides being part of the Internet of Things (IoT), drones can play a relevant role in it as enablers. The 3D mobility of UAVs can be exploited to improve node localization in IoT networks for, e.g., search and rescue or goods localization and tracking. One of the widespread IoT communication technologies is Long Range Wide Area Network (LoRaWAN), which achieves long communication distances with low power. In this work, we present a drone-aided localization system for LoRa networks in which a UAV is used to improve the estimation of a node's location initially provided by the network. We characterize the relevant parameters of the communication system and use them to develop and test a search algorithm in a realistic simulated scenario. We then move to the full implementation of a real system in which a drone is seamlessly integrated into Swisscom's LoRa network. The drone coordinates with the network through a two-way exchange of information, which results in an accurate and fully autonomous localization system. The results obtained in our field tests show a ten-fold improvement in localization precision with respect to the estimation provided by the fixed network. To the best of our knowledge, this is the first time a UAV has been successfully integrated into a LoRa network to improve its localization accuracy.

UWB-based system for UAV Localization in GNSS-Denied Environments: Characterization and Dataset

Mar 09, 2020

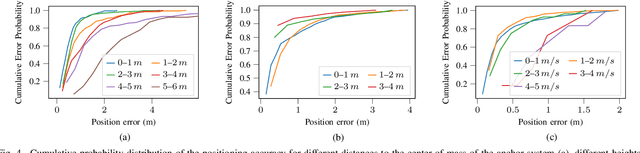

Abstract: Small unmanned aerial vehicles (UAVs) have penetrated multiple domains over the past years. In GNSS-denied or indoor environments, aerial robots require a robust and stable localization system, often with external feedback, in order to fly safely. Motion capture systems are typically utilized indoors when accurate localization is needed. However, these systems are expensive and most require a fixed setup. Recently, visual-inertial odometry and similar methods have advanced to a point where autonomous UAVs can rely on them for localization. The main limitations in this case come from the environment and, for long-term autonomy, from accumulating error if loop closure cannot be performed efficiently. For instance, the impact of low visibility due to dust or smoke in post-disaster scenarios might render the odometry methods inapplicable. In this paper, we study and characterize an ultra-wideband (UWB) system for navigation and localization of aerial robots indoors based on Decawave's DWM1001 UWB node. The system is portable, inexpensive and can be entirely battery powered. We show the viability of this system for autonomous flight of UAVs, and provide open-source methods and data that enable its widespread application even with movable anchor systems. We characterize the accuracy based on the position of the UAV with respect to the anchors, its altitude and speed, and the distribution of the anchors in space. Finally, we analyze the accuracy of the self-calibration of the anchors' positions.
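
To make the idea of anchor-based UWB localization concrete, the sketch below estimates a 2D position from range measurements to anchors at known positions, using a standard linearized least-squares formulation. It is a simplified illustration and not the method or firmware of the DWM1001 system studied in the paper; the function name `trilaterate_2d` and the anchor layout in the example are assumptions, and the real problem is three-dimensional and subject to ranging noise.

```python
import math


def trilaterate_2d(anchors, ranges):
    """Least-squares 2D position from ranges to >= 3 known anchors.

    Subtracting the first anchor's circle equation from the others
    gives linear equations a*x + b*y = c, solved here through the
    2x2 normal equations.
    """
    if len(anchors) < 3 or len(anchors) != len(ranges):
        raise ValueError("need at least three anchors, one range each")

    (x0, y0), r0 = anchors[0], ranges[0]
    s_aa = s_ab = s_bb = s_ac = s_bc = 0.0
    for (xi, yi), ri in zip(anchors[1:], ranges[1:]):
        a = 2.0 * (xi - x0)
        b = 2.0 * (yi - y0)
        c = r0**2 - ri**2 + xi**2 - x0**2 + yi**2 - y0**2
        s_aa += a * a
        s_ab += a * b
        s_bb += b * b
        s_ac += a * c
        s_bc += b * c

    det = s_aa * s_bb - s_ab * s_ab
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is ambiguous")

    x = (s_ac * s_bb - s_ab * s_bc) / det
    y = (s_aa * s_bc - s_ab * s_ac) / det
    return x, y


if __name__ == "__main__":
    # Hypothetical square anchor layout and noise-free ranges.
    anchors = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0), (4.0, 4.0)]
    truth = (1.0, 2.0)
    ranges = [math.hypot(truth[0] - ax, truth[1] - ay) for ax, ay in anchors]
    print(trilaterate_2d(anchors, ranges))
```

The collinearity check reflects the abstract's point that accuracy depends on how the anchors are distributed in space: poorly spread anchors make the linear system ill-conditioned.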
