Matteo Macchini

Personalized Human-Swarm Interaction through Hand Motion

Mar 13, 2021

Abstract: The control of collective robotic systems, such as drone swarms, is often delegated to autonomous navigation algorithms due to their high dimensionality. However, like other robotic entities, drone swarms can still benefit from being teleoperated by human operators, whose perception and decision-making capabilities are still out of the reach of autonomous systems. Drone swarm teleoperation is only at its dawn, and a standard human-swarm interface (HRI) is missing to date. In this study, we analyzed the spontaneous interaction strategies of naive users with a swarm of drones. We implemented a machine-learning algorithm to define a personalized Body-Machine Interface (BoMI) based only on a short calibration procedure. During this procedure, the human operator is asked to move spontaneously as if they were in control of a simulated drone swarm. We assessed that hands are the most commonly adopted body segment, and thus we chose a LEAP Motion controller to track them to let the users control the aerial drone swarm. This choice makes our interface portable since it does not rely on a centralized system for tracking the human body. We validated our algorithm to define personalized HRIs for a set of participants in a realistic simulated environment, showing promising results in performance and user experience. Our method leaves unprecedented freedom to the user to choose between position and velocity control only based on their body motion preferences.

The Impact of Virtual Reality and Viewpoints in Body Motion Based Drone Teleoperation

Jan 30, 2021

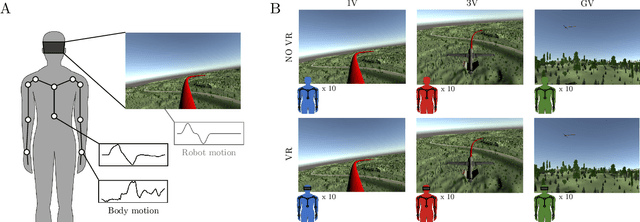

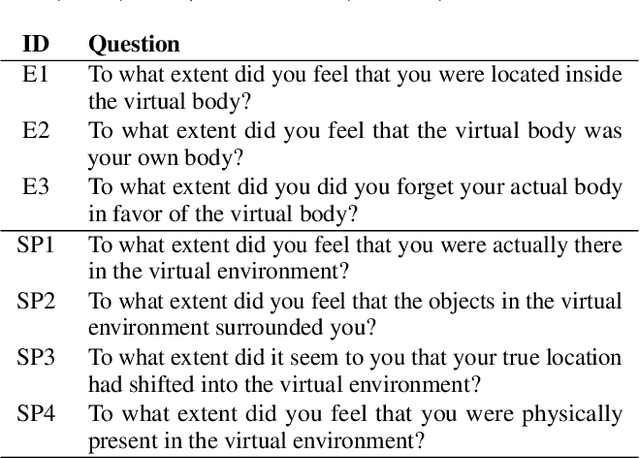

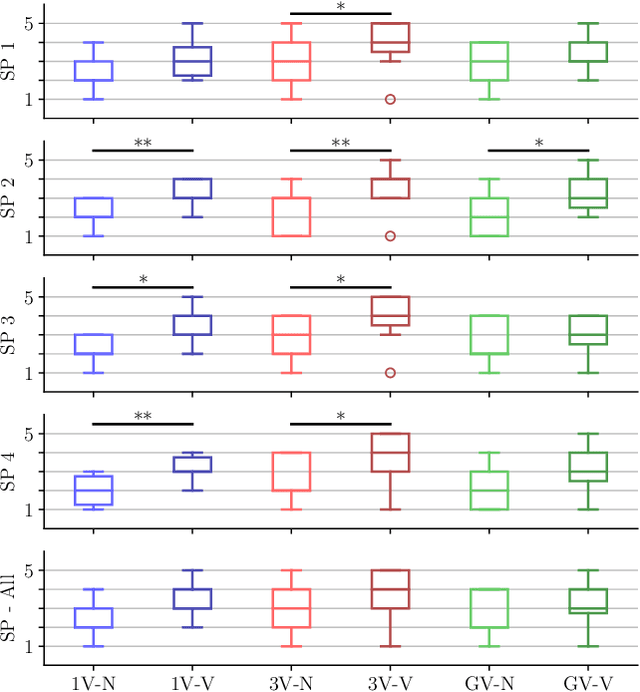

Abstract: The operation of telerobotic systems can be a challenging task, requiring intuitive and efficient interfaces to enable inexperienced users to attain a high level of proficiency. Body-Machine Interfaces (BoMI) represent a promising alternative to standard control devices, such as joysticks, because they leverage intuitive body motion and gestures. It has been shown that the use of Virtual Reality (VR) and first-person view perspectives can increase the user's sense of presence in avatars. However, it is unclear if these beneficial effects occur also in the teleoperation of non-anthropomorphic robots that display motion patterns different from those of humans. Here we describe experimental results on teleoperation of a non-anthropomorphic drone showing that VR correlates with a higher sense of spatial presence, whereas viewpoints moving coherently with the robot are associated with a higher sense of embodiment. Furthermore, the experimental results show that spontaneous body motion patterns are affected by VR and viewpoint conditions in terms of variability, amplitude, and robot correlates, suggesting that the design of BoMIs for drone teleoperation must take into account the use of Virtual Reality and the choice of the viewpoint.

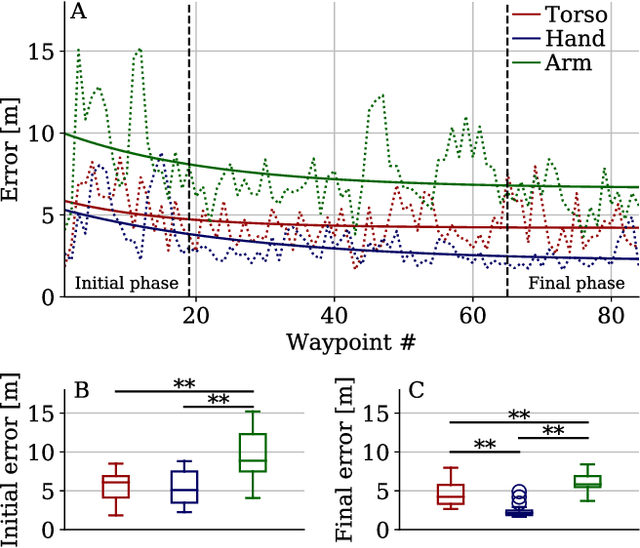

Does spontaneous motion lead to intuitive Body-Machine Interfaces? A fitness study of different body segments for wearable telerobotics

Nov 15, 2020

Abstract: Human-Robot Interfaces (HRIs) represent a crucial component in telerobotic systems. Body-Machine Interfaces (BoMIs) based on body motion can feel more intuitive than standard HRIs for naive users as they leverage humans' natural control capability over their movements. Among the different methods used to map human gestures into robot commands, data-driven approaches select a set of body segments and transform their motion into commands for the robot based on the users' spontaneous motion patterns. Despite being a versatile and generic method, there is no scientific evidence that implementing an interface based on spontaneous motion maximizes its effectiveness. In this study, we compare a set of BoMIs based on different body segments to investigate this aspect. We evaluate the interfaces in a teleoperation task of a fixed-wing drone and observe users' performance and feedback. To this aim, we use a framework that allows a user to control the drone with a single Inertial Measurement Unit (IMU) and without prior instructions. We show through a user study that selecting the body segment for a BoMI based on spontaneous motion can lead to sub-optimal performance. Based on our findings, we suggest additional metrics based on biomechanical and behavioral factors that might improve data-driven methods for the design of HRIs.

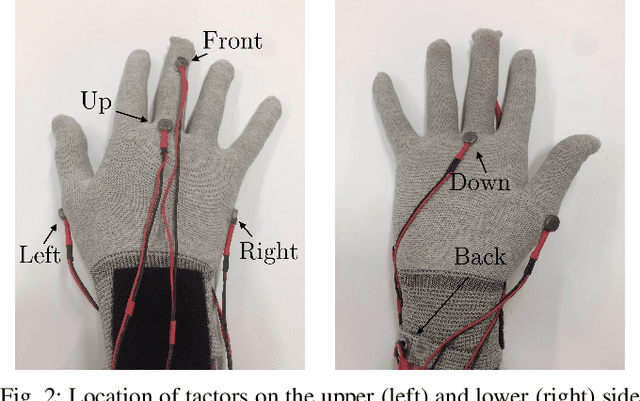

Hand-worn Haptic Interface for Drone Teleoperation

Apr 15, 2020

Abstract: Drone teleoperation is usually accomplished using remote radio controllers, devices that can be hard to master for inexperienced users. Moreover, the limited amount of information fed back to the user about the robot's state, often limited to vision, can represent a bottleneck for operation in several conditions. In this work, we present a wearable interface for drone teleoperation and its evaluation through a user study. The two main features of the proposed system are a data glove to allow the user to control the drone trajectory by hand motion and a haptic system used to augment their awareness of the environment surrounding the robot. This interface can be employed for the operation of robotic systems in line of sight (LoS) by inexperienced operators and allows them to safely perform tasks common in inspection and search-and-rescue missions such as approaching walls and crossing narrow passages with limited visibility conditions. In addition to the design and implementation of the wearable interface, we performed a systematic study to assess the effectiveness of the system through three user studies (n = 36) to evaluate the users' learning path and their ability to perform tasks with limited visibility. We validated our ideas in both a simulated and a real-world environment. Our results demonstrate that the proposed system can improve teleoperation performance in different cases compared to standard remote controllers, making it a viable alternative to standard Human-Robot Interfaces.
