Joerg Widmer

Compressed Sensing-Driven Near-Field Localization Exploiting Array of Subarrays

Jan 29, 2026

Abstract: Near-field localization for ISAC requires large-aperture arrays, making fully digital implementations prohibitively complex and costly. While sparse subarray architectures can reduce cost, they introduce severe estimation ambiguity from grating lobes. To address both issues, we propose SHARE (Sparse Hierarchical Angle-Range Estimation), a novel sparse recovery algorithm that operates in two stages. It first performs coarse, unambiguous angle estimation using individual subarrays to resolve the grating lobe ambiguity. It then leverages the full sparse aperture to perform a localized joint angle-range search. This hierarchical approach avoids an exhaustive, computationally intensive two-dimensional grid search while preserving the high resolution of the large aperture. Simulation results show that SHARE significantly outperforms conventional one-shot sparse recovery methods, such as Orthogonal Matching Pursuit (OMP), in both localization accuracy and robustness. Furthermore, we show that SHARE's overall localization accuracy is comparable to, or even better than, that of the fully digital 2D-MUSIC algorithm, despite MUSIC having access to the complete, uncompressed data from every antenna element. SHARE therefore provides a practical path toward high-resolution near-field ISAC systems.
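The coarse-to-fine principle can be illustrated with a minimal, noiseless, single-source sketch. It is not SHARE itself: both stages use plain matched-filter (beamforming) grid searches rather than sparse recovery, and the array geometry (four 8-element, half-wavelength subarrays centered at ±0.1 m and ±0.3 m), the 1 cm wavelength, the grid resolutions, and all function names are assumptions.

```python
import cmath
import math

WAVELENGTH = 0.01                     # assumed carrier wavelength (m), about 30 GHz
N_PER_SUB = 8                         # assumed elements per subarray
SUB_CENTERS = [-0.3, -0.1, 0.1, 0.3]  # assumed subarray centers (m), 20 wavelengths apart

# Element x-positions (m), grouped per subarray; elements are half a wavelength apart.
SUBARRAYS = [[c + (m - (N_PER_SUB - 1) / 2) * WAVELENGTH / 2 for m in range(N_PER_SUB)]
             for c in SUB_CENTERS]
ALL_X = [x for sub in SUBARRAYS for x in sub]


def near_field_response(xs, theta_deg, r):
    """Spherical-wavefront response to a source at (angle, range); phase referenced to the origin."""
    s = math.sin(math.radians(theta_deg))
    return [cmath.exp(-2j * math.pi * (math.sqrt(r * r + x * x - 2 * r * x * s) - r) / WAVELENGTH)
            for x in xs]


def far_field_response(xs, theta_deg):
    """Planar-wavefront steering vector, adequate for one small subarray."""
    s = math.sin(math.radians(theta_deg))
    return [cmath.exp(2j * math.pi * x * s / WAVELENGTH) for x in xs]


def corr(a, y):
    """Matched-filter output magnitude |a^H y|."""
    return abs(sum(ai.conjugate() * yi for ai, yi in zip(a, y)))


def coarse_fine_localize(y, window_deg=3):
    """Return (coarse angle, fine angle, range) for a single source."""
    # Stage 1: each densely sampled subarray is free of grating lobes; add the
    # subarray beam powers non-coherently on a 1-degree grid.
    starts = [i * N_PER_SUB for i in range(len(SUBARRAYS))]
    coarse = max(range(-60, 61), key=lambda c: sum(
        corr(far_field_response(sub, c), y[s:s + N_PER_SUB]) ** 2
        for sub, s in zip(SUBARRAYS, starts)))
    # Stage 2: joint angle-range search with the full sparse aperture, limited to
    # +/- window_deg around the coarse angle (0.1 deg steps) and 1 m to 6 m (5 cm steps).
    candidates = ((t / 10, (100 + 5 * k) / 100)
                  for t in range(coarse * 10 - window_deg * 10, coarse * 10 + window_deg * 10 + 1)
                  for k in range(101))
    theta, r = max(candidates, key=lambda tr: corr(near_field_response(ALL_X, *tr), y))
    return coarse, theta, r


if __name__ == "__main__":
    y = near_field_response(ALL_X, 20.0, 3.0)   # noiseless source at 20 deg, 3 m
    print(coarse_fine_localize(y))
```

The coarse stage only selects the angular window; the fine stage then evaluates 61 × 101 angle-range points with the full aperture instead of an exhaustive two-dimensional grid.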

SymbXRL: Symbolic Explainable Deep Reinforcement Learning for Mobile Networks

Jan 29, 2026

Abstract: The operation of future sixth-generation (6G) mobile networks will increasingly rely on the ability of deep reinforcement learning (DRL) to optimize network decisions in real time. DRL has demonstrated its efficacy in various resource allocation problems, such as joint decisions on user scheduling and antenna allocation, or simultaneous control of computing resources and modulation. However, trained DRL agents are closed boxes and inherently difficult to explain, which hinders their adoption in production settings. In this paper, we take a step towards removing this critical barrier by presenting SymbXRL, a novel technique for explainable reinforcement learning (XRL) that synthesizes human-interpretable explanations for DRL agents. SymbXRL leverages symbolic AI to produce explanations in which key concepts and their relationships are described via intuitive symbols and rules; coupling such a representation with logical reasoning exposes the decision process of DRL agents and offers more comprehensible descriptions of their behaviors than existing approaches. We validate SymbXRL in practical network management use cases supported by DRL, showing that it not only improves the semantics of the explanations but also paves the way for explicit agent control: for instance, it enables intent-based programmatic action steering that improves the median cumulative reward by 12% over a pure DRL solution.

* 10 pages, 9 figures, published in IEEE INFOCOM 2025
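As a toy illustration of describing agent behavior with symbols and rules, the sketch below discretizes one logged KPI into symbolic levels and mines "IF level THEN action" rules with their confidence. The thresholds, the KPI (a queue length), the action names, and the rule format are all hypothetical and do not reproduce SymbXRL's actual representation or reasoning engine.

```python
from collections import Counter, defaultdict


def symbolize(value, low, high):
    """Map a numeric KPI to a symbolic level using two (hypothetical) thresholds."""
    if value < low:
        return "low"
    if value < high:
        return "medium"
    return "high"


def mine_rules(log, low, high, min_conf=0.6):
    """Return (level, action, confidence) rules: IF kpi IS level THEN action.

    `log` holds (kpi_value, action) pairs recorded while the agent ran; a rule is
    kept only if its action is chosen in at least `min_conf` of that level's steps.
    """
    by_level = defaultdict(Counter)
    for value, action in log:
        by_level[symbolize(value, low, high)][action] += 1
    rules = []
    for level, actions in sorted(by_level.items()):
        action, count = actions.most_common(1)[0]
        confidence = count / sum(actions.values())
        if confidence >= min_conf:
            rules.append((level, action, confidence))
    return rules


if __name__ == "__main__":
    # Hypothetical log: (queue length in packets, action chosen by the agent).
    log = [(2.0, "remove_antenna"), (3.0, "remove_antenna"),
           (5.5, "keep"), (6.0, "keep"), (6.5, "add_antenna"),
           (9.0, "add_antenna"), (9.5, "add_antenna")]
    for level, action, conf in mine_rules(log, low=4.0, high=8.0):
        print(f"IF queue IS {level} THEN {action}  (confidence {conf:.2f})")
```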

Millimeter-Scale Absolute Carrier Phase-Based Localization in Multi-Band Systems

Nov 07, 2025

Abstract: Localization is a key feature of future sixth-generation (6G) networks, with foreseen accuracy requirements down to the millimeter level to enable novel applications in fields such as telesurgery and high-precision manufacturing. Currently, such accuracy requirements are only achievable with specialized or highly resource-demanding systems, rendering them impractical for widespread deployment. In this paper, we present the first system that enables low-complexity and low-bandwidth absolute 3D localization with millimeter-level accuracy in generic wireless networks. It refines an initial location estimate through carrier phase-based wireless localization, using successive location-likelihood optimization across multiple bands. Unlike previous phase unwrapping methods, our solution is one-shot. We evaluate its performance by collecting ~350,000 measurements, showing an improvement of more than one order of magnitude over classical localization techniques. Finally, we will open-source the low-cost, modular FR3 front-end that we developed for the experimental campaign.
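The principle of combining wrapped carrier phases from several bands can be sketched in one dimension. This is not the paper's 3D successive optimization: it simply maximizes the summed per-band phase likelihood over a fine range grid around a coarse estimate, and the band frequencies (7 to 15 GHz), the 3 cm coarse error, the search window, and the function names are assumptions.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def measured_phases(distance, freqs):
    """Wrapped carrier phases (rad) that a noiseless receiver would observe per band."""
    return [(2 * math.pi * f * distance / C) % (2 * math.pi) for f in freqs]


def refine_range(phases, freqs, d_coarse, half_window=0.05, step=1e-5):
    """Grid-maximize the multi-band phase likelihood around a coarse range estimate.

    The score sum_k cos(phi_k - 2*pi*f_k*d/C) equals len(freqs) only where the
    hypothesized range d is consistent with the wrapped phase of every band.
    """
    n_steps = int(round(2 * half_window / step))
    best_d, best_score = d_coarse, -math.inf
    for i in range(n_steps + 1):
        d = d_coarse - half_window + i * step
        score = sum(math.cos(p - 2 * math.pi * f * d / C)
                    for p, f in zip(phases, freqs))
        if score > best_score:
            best_d, best_score = d, score
    return best_d


if __name__ == "__main__":
    freqs = [7e9, 8.5e9, 10e9, 12e9, 15e9]      # hypothetical band centers (Hz)
    d_true = 2.34567                            # meters
    d_coarse = d_true + 0.03                    # coarse estimate, 3 cm off
    d_hat = refine_range(measured_phases(d_true, freqs), freqs, d_coarse)
    print(f"error: {abs(d_hat - d_true) * 1e3:.3f} mm")
```

Each band alone is ambiguous every wavelength (2 to 4.3 cm here), but the summed score peaks only where all bands agree; with these frequencies the next exact agreement lies about 0.6 m away, outside the search window.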

EgoLife: Towards Egocentric Life Assistant

Mar 05, 2025

Abstract: We introduce EgoLife, a project to develop an egocentric life assistant that accompanies users and enhances their personal efficiency through AI-powered wearable glasses. To lay the foundation for this assistant, we conducted a comprehensive data collection study in which six participants lived together for one week, continuously recording their daily activities (including discussions, shopping, cooking, socializing, and entertainment) using AI glasses for multimodal egocentric video capture, along with synchronized third-person-view video references. This effort resulted in the EgoLife Dataset, a comprehensive 300-hour egocentric, interpersonal, multiview, and multimodal daily life dataset with intensive annotation. Leveraging this dataset, we introduce EgoLifeQA, a suite of long-context, life-oriented question-answering tasks designed to provide meaningful assistance in daily life by addressing practical questions such as recalling relevant past events, monitoring health habits, and offering personalized recommendations. To address the key technical challenges of (1) developing robust visual-audio models for egocentric data, (2) enabling identity recognition, and (3) facilitating long-context question answering over extensive temporal information, we introduce EgoButler, an integrated system comprising EgoGPT and EgoRAG. EgoGPT is an omni-modal model trained on egocentric datasets that achieves state-of-the-art performance on egocentric video understanding. EgoRAG is a retrieval-based component that supports answering ultra-long-context questions. Our experimental studies verify their working mechanisms and reveal critical factors and bottlenecks, guiding future improvements. By releasing our datasets, models, and benchmarks, we aim to stimulate further research on egocentric AI assistants.
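The abstract does not detail EgoRAG's retrieval step. As a generic, hypothetical illustration of retrieval over a long personal timeline, the sketch below ranks timestamped event captions by bag-of-words cosine similarity to a question; the captions, the scoring function, and the helper names are assumptions and far simpler than a learned retriever.

```python
import math
import re
from collections import Counter


def tokens(text):
    """Lower-case alphabetic tokens of a caption or question."""
    return re.findall(r"[a-z]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[w] * b[w] for w in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(memory, question, k=2):
    """Return the k (timestamp, caption) entries most similar to the question."""
    q = Counter(tokens(question))
    scored = [(cosine(Counter(tokens(cap)), q), ts, cap) for ts, cap in memory]
    scored.sort(key=lambda s: (-s[0], s[1]))   # best score first, ties by timestamp string
    return [(ts, cap) for _, ts, cap in scored[:k]]


if __name__ == "__main__":
    memory = [  # hypothetical timestamped event captions
        ("day1 09:10", "bought eggs and milk at the grocery store"),
        ("day1 19:30", "cooked pasta for dinner with friends"),
        ("day2 08:00", "went jogging in the park"),
        ("day3 12:15", "bought milk again at the store"),
    ]
    for ts, cap in retrieve(memory, "When did I buy milk at the store?"):
        print(ts, "-", cap)
```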

MovISAC: Coherent Imaging of Moving Targets with Distributed Asynchronous ISAC Devices

Feb 12, 2025

Abstract: Distributed integrated sensing and communication (ISAC) devices can overcome the traditional resolution limitations imposed by the signal bandwidth by cooperating to produce high-resolution images of the environment. However, existing phase-coherent imaging approaches are not suited to imaging multiple moving targets, since the Doppler effect causes a phase rotation that degrades the image focus and biases the targets' locations. In this paper, we propose MovISAC, the first coherent imaging method for moving targets using distributed asynchronous ISAC devices. Our approach obtains a set of high-resolution images of the environment, each of which represents only the targets moving with a selected velocity. To achieve this, MovISAC performs over-the-air (OTA) synchronization to compensate for timing, frequency, and phase offsets among distributed ISAC devices. Then, to avoid the prohibitive complexity of an exhaustive search over the targets' velocities, we introduce a new association algorithm that matches the Doppler shifts observed by each ISAC device pair to the corresponding spatial peaks obtained by standard imaging methods. This gives MovISAC the unique capability of pre-compensating the Doppler shift for each target before forming the images, recasting the Doppler shift from an undesired impairment to image formation into an additional means of resolving the targets. Extensive numerical simulations show that our approach achieves extremely high resolution and superior robustness to clutter, thanks to the additional target separation in the Doppler domain, and obtains cm-level localization and cm/s-level velocity estimation errors. MovISAC significantly outperforms existing imaging methods that do not compensate for the Doppler shift, achieving up to 18 times lower localization error and enabling velocity estimation.
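The impairment that MovISAC turns into an advantage, Doppler phase rotation during coherent integration, can be seen in a small bistatic sketch. It illustrates only generic Doppler pre-compensation for a hypothesized velocity, not MovISAC's over-the-air synchronization or its association algorithm; the geometry, 60 GHz carrier, slow-time sampling, and function names are assumptions.

```python
import cmath
import math

WAVELENGTH = 0.005   # assumed carrier wavelength (m), about 60 GHz
T = 1e-3             # assumed slow-time sampling interval (s)
M = 64               # assumed number of slow-time samples


def bistatic_range(p, tx, rx):
    """TX -> target -> RX path length (m)."""
    return math.dist(p, tx) + math.dist(p, rx)


def echo(p0, v, tx, rx):
    """Noiseless slow-time phasors of a point target moving at constant velocity v."""
    out = []
    for m in range(M):
        p = (p0[0] + v[0] * m * T, p0[1] + v[1] * m * T)
        out.append(cmath.exp(-2j * math.pi * bistatic_range(p, tx, rx) / WAVELENGTH))
    return out


def focus(samples, p0, v_hyp, tx, rx):
    """Coherent sum after removing the phase history predicted for (p0, v_hyp)."""
    ref = echo(p0, v_hyp, tx, rx)
    return abs(sum(s * r.conjugate() for s, r in zip(samples, ref)))


if __name__ == "__main__":
    tx, rx, p0, v = (0.0, 0.0), (10.0, 0.0), (5.0, 5.0), (0.0, 1.0)
    y = echo(p0, v, tx, rx)
    print("static focusing:     ", focus(y, p0, (0.0, 0.0), tx, rx))
    print("Doppler-compensated: ", focus(y, p0, v, tx, rx))
```

For this geometry the bistatic Doppler is roughly 280 Hz, so without compensation the phase wraps many times over the 64 ms window and the focused magnitude collapses; with the matching velocity hypothesis it reaches the full coherent gain M.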

HiSAC: High-Resolution Sensing with Multiband Communication Signals

Jul 09, 2024

Abstract: Integrated Sensing And Communication (ISAC) systems are expected to perform accurate radar sensing while having minimal impact on communication. Ideally, sensing should only reuse communication resources, especially for spectrum that is contended by many applications. However, this poses a great challenge, because communication systems often operate on narrow subbands with low sensing resolution. Combining contiguous subbands has shown significant resolution gains in active localization. However, multiband ISAC remains unexplored because communication subbands are highly sparse (non-contiguous) and affected by phase offsets that prevent their aggregation (incoherent). To tackle these problems, we design HiSAC, the first multiband ISAC system that combines diverse subbands across a wide frequency range to achieve super-resolved passive ranging. To address the non-contiguity and incoherence of subbands, HiSAC combines them progressively, exploiting an anchor propagation path between transmitter and receiver in an optimization problem to achieve phase coherence. HiSAC fully reuses the pilot signals of communication systems, applies to different frequencies, and can combine diverse technologies, e.g., 5G-NR and WiGig. We implement HiSAC on an experimental platform in the millimeter-wave unlicensed band and test it on objects and humans. Our results show that it enhances the sensing resolution by up to 20 times compared to single-band processing while occupying the same spectrum.
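The role of an anchor path in restoring phase coherence can be sketched as follows. Assuming the delay of the direct TX-RX path is known (for instance, from the known baseline), each subband's unknown phase offset is estimated by projecting its channel frequency response onto that path and then removed. This simple projection stands in for HiSAC's optimization problem, and the two-path channel, the subband layout (32 subcarriers at 10 MHz spacing, 2 GHz apart), and the function names are hypothetical.

```python
import cmath
import math


def subband_cfr(f_start, n_sub, df, paths, phase_offset):
    """Channel frequency response of one subband, rotated by an unknown phase offset.

    `paths` is a list of (complex_gain, delay_s); frequencies are relative to a
    common reference carrier (an assumption of this sketch).
    """
    rot = cmath.exp(1j * phase_offset)
    return [rot * sum(a * cmath.exp(-2j * math.pi * (f_start + n * df) * tau)
                      for a, tau in paths)
            for n in range(n_sub)]


def estimate_offset(cfr, f_start, df, anchor_delay):
    """Estimate a subband's phase offset by projecting its CFR onto the anchor path."""
    z = sum(h * cmath.exp(2j * math.pi * (f_start + n * df) * anchor_delay)
            for n, h in enumerate(cfr))
    return cmath.phase(z)


def align(cfr, offset):
    """Remove the estimated offset so subbands can be combined coherently."""
    derot = cmath.exp(-1j * offset)
    return [h * derot for h in cfr]


if __name__ == "__main__":
    # Hypothetical channel: direct TX-RX anchor path at 10 ns, target echo at 25 ns.
    paths = [(1.0, 10e-9), (0.5 * cmath.exp(1j), 25e-9)]
    for f_start, true_offset in [(0.0, 0.7), (2e9, -2.1)]:
        cfr = subband_cfr(f_start, 32, 10e6, paths, true_offset)
        est = estimate_offset(cfr, f_start, 10e6, anchor_delay=10e-9)
        print(f"subband at +{f_start / 1e9:.0f} GHz: true {true_offset:+.2f}, est {est:+.3f} rad")
```

Because the target echo leaks only weakly into the anchor projection when the two delays are well separated relative to the subband resolution (about 3 ns here), the residual phase error stays around 0.02 rad.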

Angle estimation using mmWave RSS measurements with enhanced multipath information

Mar 14, 2024

Abstract: mmWave communication has emerged as a new, largely unexplored spectrum option for 5G services. With new standards for 5G NR positioning, more off-the-shelf platforms and algorithms are needed to perform indoor positioning. An object can be accurately positioned in a room using an angle and a delay estimate, two angle estimates, or three delay estimates. We propose an algorithm to jointly estimate the angle of arrival (AoA) and angle of departure (AoD) based only on the received signal strength (RSS). For experimental validation of the proposed algorithm, we use mm-FLEX, an experimentation platform developed by IMDEA Networks Institute that can perform real-time signal processing. Codebook-based beampatterns are used with a uniquely placed multi-antenna array setup to enhance the reception of multipath components, and we obtain an AoA estimate per receiver, thereby overcoming the line-of-sight (LoS) limitation of RSS-based localization systems. We further validate the measurement results by emulating the setup with a simple ray-tracing approach.
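A common way to turn beam-sweep RSS into an angle estimate is fingerprint matching against the known codebook beampatterns. The sketch below does this for a single, noiseless plane wave on an assumed 8-element, half-wavelength array with a hypothetical 9-beam codebook; it does not estimate the AoD or exploit multipath as the proposed algorithm does, and all names are illustrative.

```python
import cmath
import math

N_ANT = 8                            # assumed half-wavelength ULA size
CODEBOOK = list(range(-60, 61, 15))  # assumed beam steering angles (deg), 9 beams


def steering(theta_deg):
    """Half-wavelength ULA steering vector."""
    s = math.sin(math.radians(theta_deg))
    return [cmath.exp(1j * math.pi * n * s) for n in range(N_ANT)]


def rss_vector(theta_deg):
    """Power received from one plane wave through each codebook beam."""
    a = steering(theta_deg)
    powers = []
    for phi in CODEBOOK:
        w = steering(phi)
        gain = sum(wi.conjugate() * ai for wi, ai in zip(w, a)) / N_ANT
        powers.append(abs(gain) ** 2)
    return powers


def normalized(v):
    """Scale a vector to unit Euclidean norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def estimate_aoa(rss, grid=range(-60, 61)):
    """Grid angle whose normalized RSS template best matches the measured RSS vector."""
    m = normalized(rss)
    return max(grid, key=lambda t: sum(x * y for x, y in zip(m, normalized(rss_vector(t)))))


if __name__ == "__main__":
    print(estimate_aoa(rss_vector(17)))
```

Normalizing both vectors makes the match insensitive to the unknown path gain, so only the relative power across beams matters.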

Sensing in Bi-Static ISAC Systems with Clock Asynchronism: A Signal Processing Perspective

Feb 14, 2024

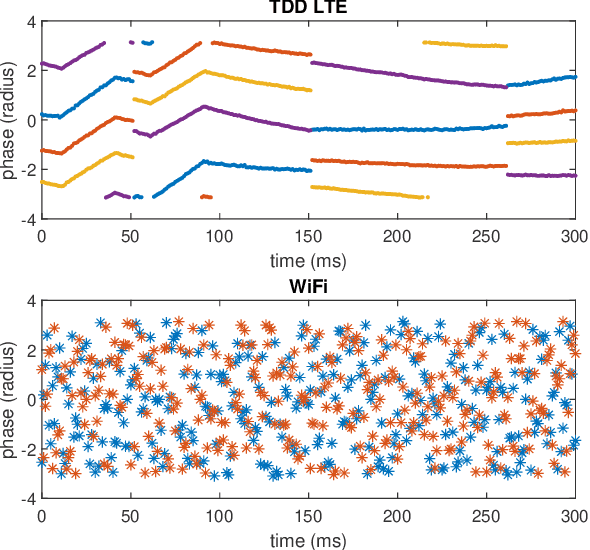

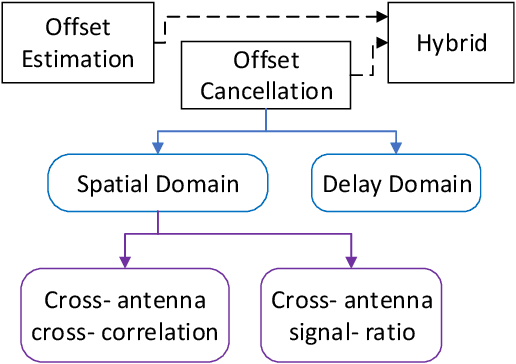

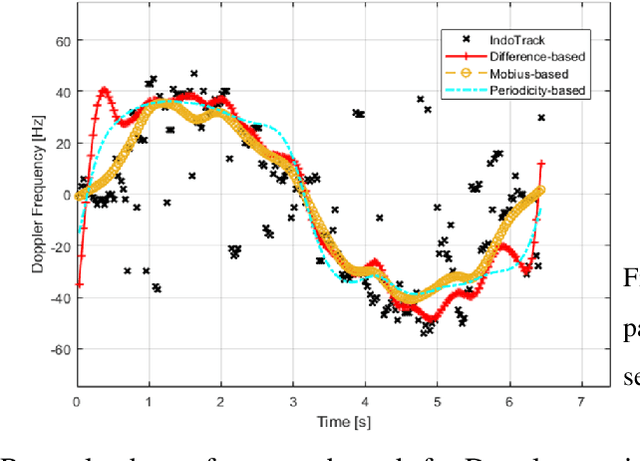

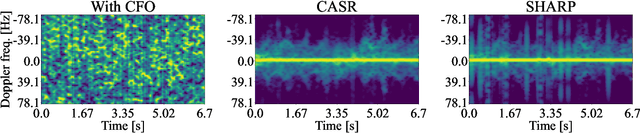

Abstract: Integrated Sensing and Communications (ISAC) has been identified as a pillar usage scenario for the impending 6G era. Bi-static sensing, a major type of sensing in ISAC, is promising for expediting ISAC in the near future, as it requires minimal changes to the existing network infrastructure. However, a critical challenge for bi-static sensing is clock asynchronism, caused by the use of different clocks at the widely separated transmitter and receiver. This causes the received signal to be affected by time-varying random phase offsets, severely degrading direct sensing or even causing it to fail. Considerable research attention has been directed toward addressing the clock asynchronism issue in bi-static sensing. In this white paper, we endeavor to fill the gap by providing an overview of the issue and of existing techniques developed in an ISAC context. Based on this review and comparison, we also draw insights into future research directions and open problems, aiming to nurture the maturation of bi-static sensing in ISAC.
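One technique often discussed for this problem is cross-antenna cross-correlation (CACC): when the antennas of a receiver share one clock, the random phase offset is common to all of them and cancels when one antenna's signal is multiplied by the conjugate of another's. The sketch assumes a single static path, two half-wavelength-spaced antennas, noiseless samples, and hypothetical function names; practical schemes must also handle multipath and noise.

```python
import cmath
import math
import random

WAVELENGTH = 0.005          # assumed carrier wavelength (m)
SPACING = WAVELENGTH / 2    # assumed antenna spacing (m)


def rx_two_antennas(theta_deg, n_samples, rng):
    """Noiseless samples at two antennas that share one random clock phase per sample."""
    delta = 2 * math.pi * SPACING * math.sin(math.radians(theta_deg)) / WAVELENGTH
    samples = []
    for _ in range(n_samples):
        # Unknown, time-varying phase offset from the unsynchronized TX/RX clocks;
        # it is identical at both antennas because they share the receiver clock.
        common = cmath.exp(1j * rng.uniform(-math.pi, math.pi))
        samples.append((common, common * cmath.exp(1j * delta)))
    return samples


def cacc_aoa(samples):
    """CACC: the common clock phase cancels in y2 * conj(y1), leaving the geometric phase."""
    z = sum(y2 * y1.conjugate() for y1, y2 in samples)
    delta = cmath.phase(z)
    return math.degrees(math.asin(delta * WAVELENGTH / (2 * math.pi * SPACING)))


if __name__ == "__main__":
    samples = rx_two_antennas(25.0, 100, random.Random(0))
    print(f"estimated AoA: {cacc_aoa(samples):.2f} deg")
```

Averaging the raw samples of a single antenna instead would not work here, since the offset takes a new random value at every sample.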

Fundamental Trade-Offs in Monostatic ISAC: A Holistic Investigation Towards 6G

Jan 31, 2024

Abstract: Integrated sensing and communication (ISAC) emerges as a cornerstone technology for the upcoming 6G era, seamlessly incorporating sensing functionality into wireless networks as an inherent capability. This paper undertakes a holistic investigation of two fundamental trade-offs in monostatic OFDM ISAC systems, namely the time-frequency domain trade-off and the spatial domain trade-off. To ensure robust sensing across diverse modulation orders in the time-frequency domain, including high-order QAM, we design a linear minimum mean-squared-error (LMMSE) estimator tailored for sensing with known, randomly generated signals of varying amplitude. Moreover, we explore spatial domain trade-offs through two ISAC transmission strategies: concurrent, employing joint beams, and time-sharing, using separate, time-non-overlapping beams for sensing and communications. Simulations demonstrate the superior performance of the LMMSE estimator in detecting weak targets in the presence of strong ones under high-order QAM, consistently yielding more favorable ISAC trade-offs than existing baselines. Key insights into these trade-offs under various modulation schemes, SNR conditions, target radar cross section (RCS) levels, and transmission strategies highlight the merits of the proposed LMMSE approach.
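Why an LMMSE estimator helps when symbol amplitudes vary can be checked with a textbook per-subcarrier model, y = x h + n with h ~ CN(0, var_h) and n ~ CN(0, var_n). The scalar LMMSE used below, var_h x* y / (var_h |x|^2 + var_n), is a standard form and not necessarily the paper's estimator; the 16-QAM alphabet, the variances, the sample count, and the function names are assumptions.

```python
import math
import random

LEVELS = (-3, -1, 1, 3)


def qam16(rng):
    """Random 16-QAM symbol scaled to unit average power (the raw grid averages 10)."""
    return complex(rng.choice(LEVELS), rng.choice(LEVELS)) / math.sqrt(10)


def cgauss(rng, var):
    """Circularly symmetric complex Gaussian sample with variance var."""
    s = math.sqrt(var / 2)
    return complex(rng.gauss(0, s), rng.gauss(0, s))


def compare(n=5000, var_h=1.0, var_n=0.5, seed=1):
    """Empirical MSE of reciprocal filtering vs. scalar LMMSE per subcarrier."""
    rng = random.Random(seed)
    se_rf = se_lm = 0.0
    for _ in range(n):
        x, h = qam16(rng), cgauss(rng, var_h)
        y = x * h + cgauss(rng, var_n)
        h_rf = y / x                                                      # divide by known symbol
        h_lm = var_h * x.conjugate() * y / (var_h * abs(x) ** 2 + var_n)  # scalar LMMSE
        se_rf += abs(h_rf - h) ** 2
        se_lm += abs(h_lm - h) ** 2
    return se_rf / n, se_lm / n


if __name__ == "__main__":
    mse_rf, mse_lm = compare()
    print(f"reciprocal filter MSE: {mse_rf:.3f}, LMMSE MSE: {mse_lm:.3f}")
```

Reciprocal filtering amplifies noise on the low-amplitude inner 16-QAM points, whereas the LMMSE gain shrinks there; with these settings the expected errors are about 0.94 and 0.40, respectively.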

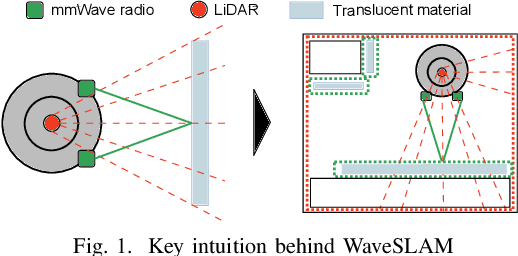

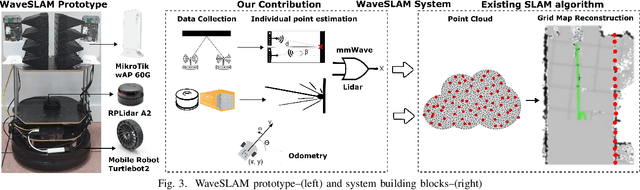

waveSLAM: Empowering Accurate Indoor Mapping Using Off-the-Shelf Millimeter-wave Self-sensing

Dec 12, 2023

Abstract: This paper presents the design, implementation, and evaluation of waveSLAM, a low-cost mobile robot system that uses millimeter-wave (mmWave) communication devices to enhance the indoor mapping process in environments with reduced visibility or glass/mirror walls. A unique feature of waveSLAM is that it only leverages existing Commercial-Off-The-Shelf (COTS) hardware (Lidar and mmWave radios) mounted on mobile robots to improve the accuracy of indoor mapping achieved with optical sensors. The key intuition behind the waveSLAM design is that while the mobile robot moves freely, the mmWave radios can periodically exchange angle and distance estimates between themselves (self-sensing) by bouncing the signal off the environment, thus enabling accurate estimates of the target object or material surface. Our experiments verify that waveSLAM can achieve cm-level accuracy, with errors below 22 cm and 20° in orientation, which is comparable to Lidar when building indoor maps.
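The geometry behind locating a reflecting surface through self-sensing can be illustrated with single-bounce bistatic localization: given the known positions of the transmitting and receiving radios, the measured bounce-path length, and the angle of arrival, the reflection point has a closed form. This is a generic sketch under those assumptions (2D, one bounce, noiseless measurements, hypothetical names), not waveSLAM's actual pipeline or its fusion with Lidar.

```python
import math


def reflection_point(tx, rx, path_length, aoa_deg):
    """Locate a single-bounce reflection point in 2D.

    The point lies on the AoA ray from rx, p = rx + r*u, and satisfies
    |p - tx| + r = path_length. Squaring gives the closed form
    r = (L^2 - |b|^2) / (2 * (L + u.b)), with b = rx - tx.
    """
    u = (math.cos(math.radians(aoa_deg)), math.sin(math.radians(aoa_deg)))
    b = (rx[0] - tx[0], rx[1] - tx[1])
    L = path_length
    r = (L ** 2 - (b[0] ** 2 + b[1] ** 2)) / (2 * (L + u[0] * b[0] + u[1] * b[1]))
    return (rx[0] + r * u[0], rx[1] + r * u[1])


if __name__ == "__main__":
    # Hypothetical setup: radios 4 m apart, reflector (e.g., a glass wall) at (1, 3).
    tx, rx, wall = (0.0, 0.0), (4.0, 0.0), (1.0, 3.0)
    L = math.dist(tx, wall) + math.dist(wall, rx)
    aoa = math.degrees(math.atan2(wall[1] - rx[1], wall[0] - rx[0]))
    print(reflection_point(tx, rx, L, aoa))
```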
