Francesca Meneghello

Semantic Multiplexing

Nov 16, 2025

Abstract:Mobile devices increasingly require the parallel execution of several computing tasks offloaded at the wireless edge. Existing communication systems only support parallel transmissions at the bit level, which fundamentally limits the number of tasks that can be concurrently processed. To address this bottleneck, this paper introduces the new concept of Semantic Multiplexing. Our approach shifts stream multiplexing from bits to tasks by merging multiple task-related compressed representations into a single semantic representation. By extending the effective degrees of freedom at the semantic layer, Semantic Multiplexing can multiplex more tasks than the number of physical channels without adding antennas or widening bandwidth, and without contradicting Shannon capacity rules. We have prototyped Semantic Multiplexing on an experimental testbed with Jetson Orin Nano and millimeter-wave software-defined radios and tested its performance on image classification and sentiment analysis, comparing it to several existing baselines in semantic communications. Our experiments demonstrate that Semantic Multiplexing allows jointly processing multiple tasks at the semantic level while maintaining sufficient task accuracy. For example, image classification accuracy drops by less than 4% when the number of tasks multiplexed over a 4$\times$4 channel increases from 2 to 8. Semantic Multiplexing reduces latency, energy consumption, and communication load by up to 8$\times$, 25$\times$, and 54$\times$, respectively, compared to the baselines while keeping comparable performance. We pledge to publicly share the complete software codebase and the collected datasets for reproducibility.

Generating the Traces You Need: A Conditional Generative Model for Process Mining Data

Nov 04, 2024

Abstract:In recent years, trace generation has emerged as a significant challenge within the Process Mining community. Deep Learning (DL) models have demonstrated accuracy in reproducing the features of the selected processes. However, current DL generative models are limited in their ability to adapt the learned distributions to generate data samples based on specific conditions or attributes. This limitation is particularly significant because the ability to control the type of generated data can be beneficial in various contexts, enabling a focus on specific behaviours, exploration of infrequent patterns, or simulation of alternative 'what-if' scenarios. In this work, we address this challenge by introducing a conditional model for process data generation based on a conditional variational autoencoder (CVAE). Conditional models offer control over the generation process by tuning input conditional variables, enabling more targeted and controlled data generation. Unlike other domains, CVAE for process mining faces specific challenges due to the multiperspective nature of the data and the need to adhere to control-flow rules while ensuring data variability. Specifically, we focus on generating process executions conditioned on control flow and temporal features of the trace, allowing us to produce traces for specific, identified sub-processes. The generated traces are then evaluated using common metrics for generative model assessment, along with additional metrics to evaluate the quality of the conditional generation.

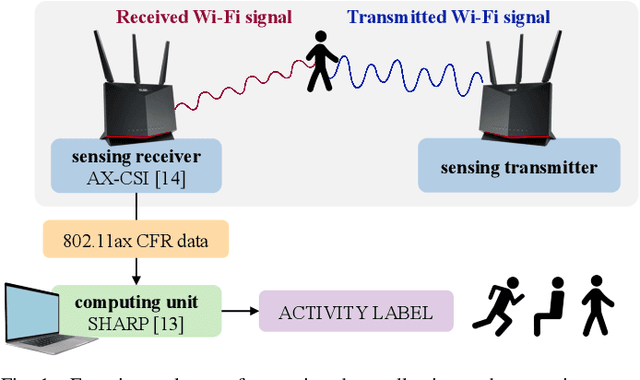

SAWEC: Sensing-Assisted Wireless Edge Computing

Feb 15, 2024

Abstract:Emerging mobile virtual reality (VR) systems will need to continuously perform complex computer vision tasks on ultra-high-resolution video frames by executing deep neural network (DNN)-based algorithms. Since state-of-the-art DNNs require computational power that is excessive for mobile devices, techniques based on wireless edge computing (WEC) have been recently proposed. However, existing WEC methods require the transmission and processing of a large amount of video data, which may ultimately saturate the wireless link. In this paper, we propose a novel Sensing-Assisted Wireless Edge Computing (SAWEC) paradigm to address this issue. SAWEC leverages knowledge about the physical environment to reduce the end-to-end latency and overall computational burden by transmitting to the edge server only the data relevant to the delivery of the service. Our intuition is that the transmission of the portions of the video frames that have not changed with respect to previous frames can be avoided. Specifically, we leverage wireless sensing techniques to estimate the location of objects in the environment and obtain insights about the environment dynamics. Hence, only the parts of the frames where an environmental change is detected are transmitted and processed. We evaluated SAWEC by using a 10K 360$^{\circ}$ camera with a Wi-Fi 6 sensing system operating at 160 MHz and performing localization and tracking. We performed experiments in an anechoic chamber and a hall room with two human subjects in six different setups. Experimental results show that SAWEC reduces the channel occupation and end-to-end latency by 93.81% and 96.19%, respectively, while improving the instance segmentation performance by 46.98% with respect to state-of-the-art WEC approaches. For reproducibility purposes, we pledge to share our whole dataset and code repository.

Sensing in Bi-Static ISAC Systems with Clock Asynchronism: A Signal Processing Perspective

Feb 14, 2024

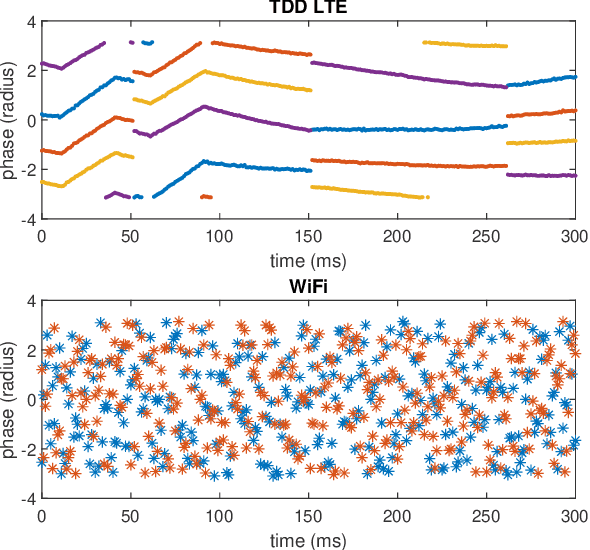

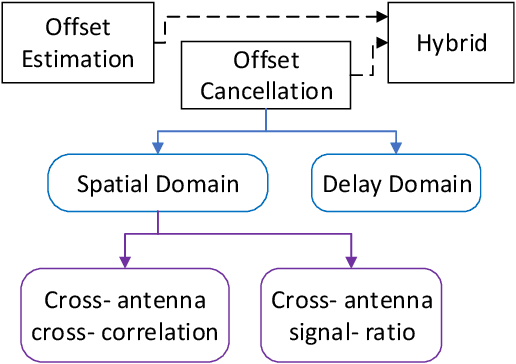

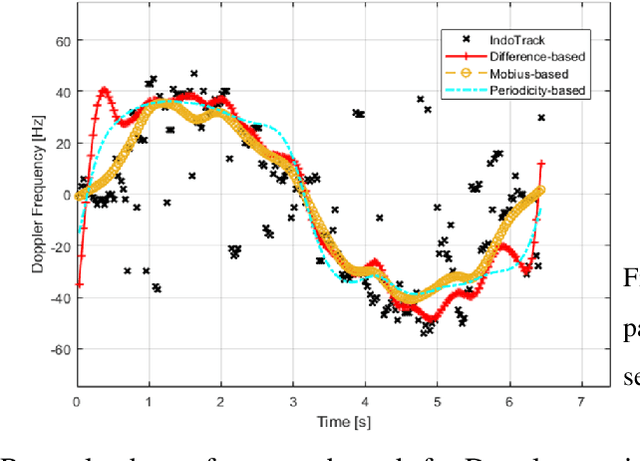

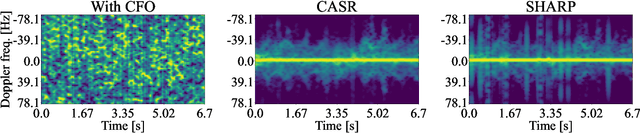

Abstract:Integrated Sensing and Communications (ISAC) has been identified as a pillar usage scenario for the impending 6G era. Bi-static sensing, a major type of sensing in ISAC, is promising to expedite ISAC in the near future, as it requires minimal changes to the existing network infrastructure. However, a critical challenge for bi-static sensing is clock asynchronism, which arises from the use of different clocks at a widely separated transmitter and receiver. This causes the received signal to be affected by time-varying random phase offsets, severely degrading direct sensing or even causing it to fail. Considerable research attention has been directed toward addressing the clock asynchronism issue in bi-static sensing. In this white paper, we endeavor to fill the gap by providing an overview of the issue and existing techniques developed in an ISAC background. Based on the review and comparison, we also draw insights into the future research directions and open problems, aiming to nurture the maturation of bi-static sensing in ISAC.

Wi-BFI: Extracting the IEEE 802.11 Beamforming Feedback Information from Commercial Wi-Fi Devices

Sep 12, 2023

Abstract:Recently, researchers have shown that the beamforming feedback angles (BFAs) used for Wi-Fi multiple-input multiple-output (MIMO) operations can be effectively leveraged as a proxy for the channel frequency response (CFR) for different purposes. Examples are passive human activity recognition and device fingerprinting. However, even though the BFA report frames are sent in clear text, there is not yet a unified open-source tool to extract and decode the BFAs from the frames. To fill this gap, we developed Wi-BFI, the first tool that allows retrieving Wi-Fi BFAs and reconstructing the beamforming feedback information (BFI) - a compressed representation of the CFR - from the BFA frames captured over the air. The tool supports BFA extraction within both IEEE 802.11ac and 802.11ax networks operating on radio channels with 160/80/40/20 MHz bandwidth. Both multi-user and single-user MIMO feedback can be decoded through Wi-BFI. The tool supports real-time and offline extraction and storage of BFAs and BFI. The real-time mode also includes a visual representation of the channel state that continuously updates based on the collected data. Wi-BFI code is open source and the tool is also available as a pip package.
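To make the step from angles to feedback matrix more concrete, here is a minimal sketch of how a beamforming feedback matrix can be rebuilt from two sets of angles, assuming the Givens-rotation parameterization commonly described for IEEE 802.11ac/ax compressed beamforming feedback. It is not Wi-BFI's code or API: the function names, the angle layout (`phi[i]`, `psi[i]`), and the example values are hypothetical, and frame parsing and angle dequantization are omitted.

```python
import cmath
import math


def identity(n):
    """Return an n x n complex identity matrix as nested lists."""
    return [[1 + 0j if r == c else 0j for c in range(n)] for r in range(n)]


def matmul(a, b):
    """Multiply two matrices stored as nested lists."""
    return [[sum(a[r][k] * b[k][c] for k in range(len(b)))
             for c in range(len(b[0]))] for r in range(len(a))]


def phase_matrix(n, i, phis):
    """Diagonal matrix applying the phases `phis` to rows i, i+1, ..."""
    d = identity(n)
    for k, phi in enumerate(phis):
        d[i + k][i + k] = cmath.exp(1j * phi)
    return d


def givens_transposed(n, i, l, psi):
    """Transposed Givens rotation acting on rows/columns i and l."""
    g = identity(n)
    c, s = math.cos(psi), math.sin(psi)
    g[i][i] = c
    g[l][l] = c
    g[i][l] = -s
    g[l][i] = s
    return g


def reconstruct_v(n_rows, n_cols, phi, psi):
    """Rebuild an n_rows x n_cols matrix with orthonormal columns.

    Assumed layout: phi[i] holds the phases for rows i..n_rows-2 of step i,
    psi[i] holds the rotation angles for rows i+1..n_rows-1 of step i.
    """
    v = identity(n_rows)
    for i in range(min(n_cols, n_rows - 1)):
        v = matmul(v, phase_matrix(n_rows, i, phi[i]))
        for k, angle in enumerate(psi[i]):
            v = matmul(v, givens_transposed(n_rows, i, i + 1 + k, angle))
    # Keeping the first n_cols columns is the product with the
    # n_rows x n_cols truncated identity.
    return [row[:n_cols] for row in v]


# Hypothetical angles (radians) for a 3 x 2 feedback matrix: six angles total.
phi = [[0.3, 1.2], [2.0]]
psi = [[0.4, 0.9], [0.7]]
v = reconstruct_v(3, 2, phi, psi)
```

Because every factor is unitary, the columns of the result are orthonormal whatever the angle values, which is a convenient sanity check when decoding captured feedback.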

A CSI Dataset for Wireless Human Sensing on 80 MHz Wi-Fi Channels

Apr 29, 2023

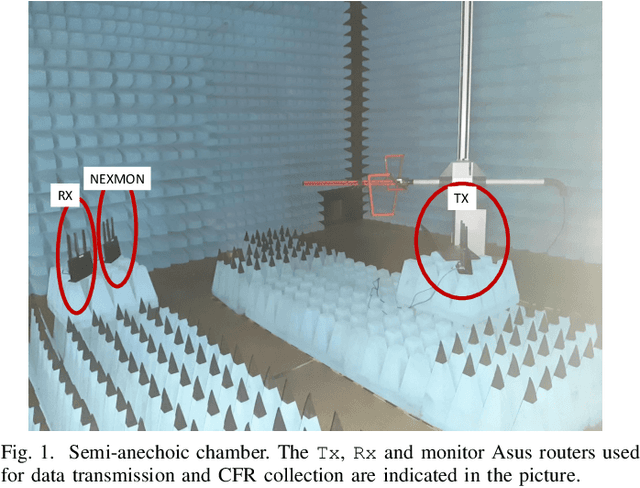

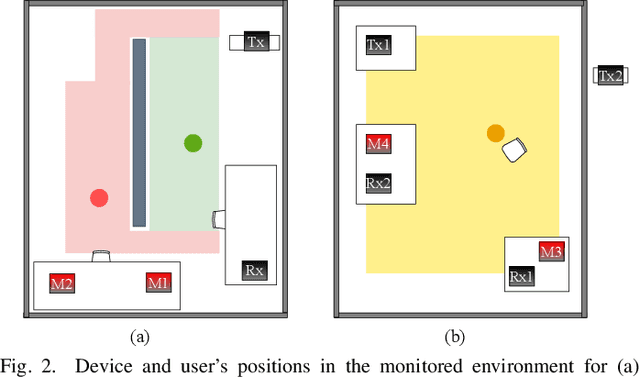

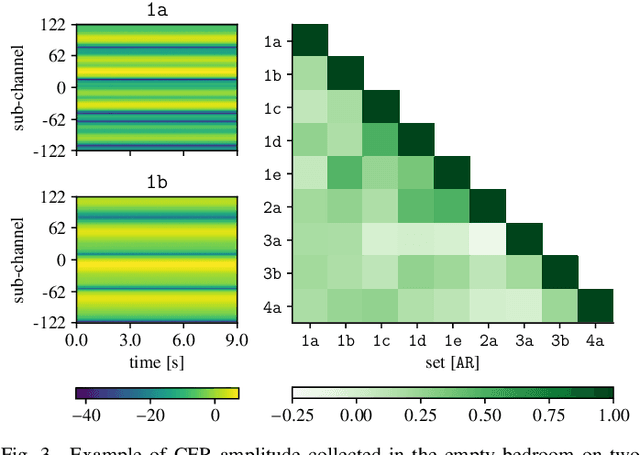

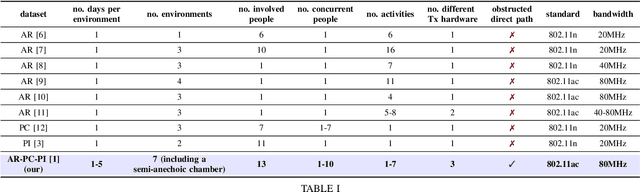

Abstract:In recent years, several machine learning-based techniques have been proposed to monitor human movements from Wi-Fi channel readings. However, the development of domain-adaptive algorithms that work robustly across different environments is still an open problem, whose solution requires large datasets characterized by strong domain diversity in terms of environments, persons, and Wi-Fi hardware. To date, the few public datasets available are mostly obsolete - as they were obtained via Wi-Fi devices operating on 20 or 40 MHz bands - and contain little or no domain diversity, thus dramatically limiting advances in the design of sensing algorithms. The present contribution aims to fill this gap by providing a dataset of IEEE 802.11ac channel measurements over an 80 MHz bandwidth channel featuring notable domain diversity, through measurement campaigns that involved thirteen subjects across different environments, days, and hardware. Novel experimental data is provided by blocking the direct path between the transmitter and the monitor, and by collecting measurements in a semi-anechoic chamber (no multi-path fading). Overall, the dataset - available on IEEE DataPort [1] - contains more than thirteen hours of channel state information readings (23.6 GB), allowing researchers to test activity/identity recognition and people counting algorithms.

Recommending the optimal policy by learning to act from temporal data

Mar 16, 2023

Abstract:Prescriptive Process Monitoring is a prominent problem in Process Mining, which consists of identifying a set of actions to be recommended with the goal of optimising a target measure of interest or Key Performance Indicator (KPI). One challenge that makes this problem difficult is the need to provide Prescriptive Process Monitoring techniques based only on temporally annotated (process) execution data, stored in so-called execution logs, due to the lack of well-crafted and human-validated explicit models. In this paper, we propose an AI-based approach that learns, by means of Reinforcement Learning (RL), an optimal policy (almost) only from the observation of past executions and recommends the best activities to carry out for optimising a KPI of interest. This is achieved first by learning a Markov Decision Process for the specific KPIs from data, and then by using RL training to learn the optimal policy. The approach is validated on real and synthetic datasets and compared with off-policy Deep RL approaches. The ability of our approach to match, and often outperform, Deep RL approaches provides a contribution towards the exploitation of white box RL techniques in scenarios where only temporal execution data are available.
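The two-stage idea in this abstract (estimate a Markov Decision Process from logged executions, then derive a policy from it) can be illustrated with a toy sketch. This is not the authors' method: it assumes a hand-made log of `(state, action, reward, next_state)` tuples, uses plain tabular value iteration in place of the RL training mentioned above, and all names, states, and reward values are hypothetical.

```python
from collections import defaultdict


def estimate_mdp(traces):
    """Estimate transition probabilities and mean rewards from logged traces.

    Each trace is a list of (state, action, reward, next_state) tuples.
    """
    counts = defaultdict(lambda: defaultdict(int))
    reward_sum = defaultdict(float)
    for trace in traces:
        for state, action, reward, next_state in trace:
            counts[(state, action)][next_state] += 1
            reward_sum[(state, action)] += reward
    transitions, rewards = {}, {}
    for key, next_counts in counts.items():
        total = sum(next_counts.values())
        transitions[key] = {s2: n / total for s2, n in next_counts.items()}
        rewards[key] = reward_sum[key] / total
    return transitions, rewards


def q_values(state, values, transitions, rewards, gamma):
    """Return {action: expected return} for the actions observed in `state`."""
    return {
        a: rewards[(s, a)]
        + gamma * sum(p * values[s2] for s2, p in transitions[(s, a)].items())
        for (s, a) in transitions
        if s == state
    }


def value_iteration(transitions, rewards, gamma=0.9, tol=1e-9):
    """Compute state values and a greedy policy for the estimated MDP."""
    states = {s for s, _ in transitions}
    states |= {s2 for nxt in transitions.values() for s2 in nxt}
    values = {s: 0.0 for s in states}
    while True:
        delta = 0.0
        for s in states:
            q = q_values(s, values, transitions, rewards, gamma)
            if not q:  # state with no observed action: treated as terminal
                continue
            best = max(q.values())
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    policy = {}
    for s in states:
        q = q_values(s, values, transitions, rewards, gamma)
        if q:
            policy[s] = max(q, key=q.get)
    return values, policy


# Hypothetical execution log; rewards stand in for a KPI contribution.
log = [
    [("start", "escalate", 0.0, "review"), ("review", "approve", 1.0, "done")],
    [("start", "reject", 0.2, "done")],
    [("start", "escalate", 0.0, "review"), ("review", "approve", 1.0, "done")],
]
transitions, rewards = estimate_mdp(log)
values, policy = value_iteration(transitions, rewards)
print(policy["start"], round(values["start"], 3))
```

In this toy log the recommended first activity is `escalate`, since its discounted return (0.9) exceeds the immediate reward of `reject` (0.2). A tabular model like this stays inspectable, which is in the spirit of the white box setting the abstract refers to.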

BeamSense: Rethinking Wireless Sensing with MU-MIMO Wi-Fi Beamforming Feedback

Mar 16, 2023

Abstract:In this paper, we propose BeamSense, a completely novel approach to implement standard-compliant Wi-Fi sensing applications. Wi-Fi sensing enables game-changing applications in remote healthcare, home entertainment, and home surveillance, among others. However, existing work leverages the manual extraction of channel state information (CSI) from Wi-Fi chips to classify activities, which is not supported by the Wi-Fi standard and hence requires the usage of specialized equipment. In contrast, BeamSense leverages the standard-compliant beamforming feedback information (BFI) to characterize the propagation environment. Unlike CSI, the BFI (i) can be easily recorded without any firmware modification, and (ii) captures the multiple channels between the access point and the stations, thus providing much better sensitivity. BeamSense includes a novel cross-domain few-shot learning (FSL) algorithm to handle unseen environments and subjects with few additional data points. We evaluate BeamSense through an extensive data collection campaign with three subjects performing twenty different activities in three different environments. We show that our BFI-based approach achieves about 10% more accuracy when compared to CSI-based prior work, while our FSL strategy improves accuracy by up to 30% and 80% when compared with state-of-the-art cross-domain algorithms.

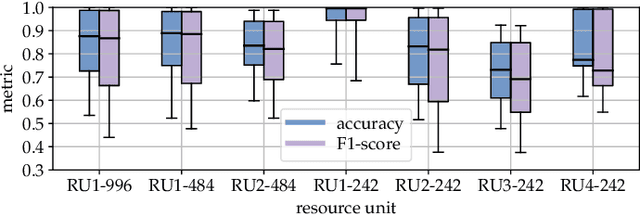

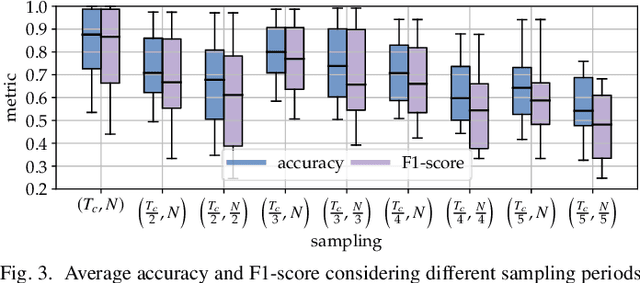

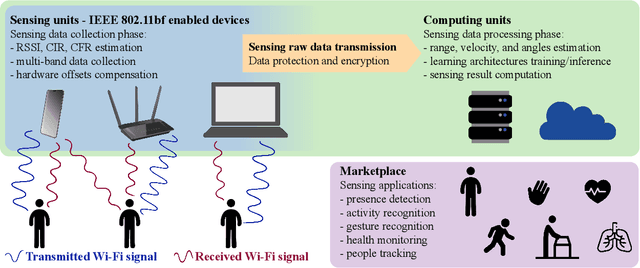

Towards Integrated Sensing and Communications in IEEE 802.11bf Wi-Fi Networks

Dec 28, 2022

Abstract:As Wi-Fi becomes ubiquitous in public and private spaces, it becomes natural to leverage its intrinsic ability to sense the surrounding environment to implement groundbreaking wireless sensing applications such as human presence detection, activity recognition, and object tracking. For this reason, the IEEE 802.11bf Task Group is defining the appropriate modifications to existing Wi-Fi standards to enhance sensing capabilities through 802.11-compliant devices. However, the new standard is expected to leave the specific sensing algorithms open to implementation. To fill this gap, this article explores the practical implications of integrating sensing and communications into Wi-Fi networks. We provide an overview of the support that will enable sensing applications, together with an in-depth analysis of the role of different devices in a Wi-Fi sensing system and a description of the open research challenges. Moreover, an experimental evaluation with off-the-shelf devices provides suggestions about the parameters to be considered when designing Wi-Fi sensing systems. To make such an evaluation replicable, we pledge to release all of our dataset and code to the community.

DeepCSI: Rethinking Wi-Fi Radio Fingerprinting Through MU-MIMO CSI Feedback Deep Learning

Apr 15, 2022

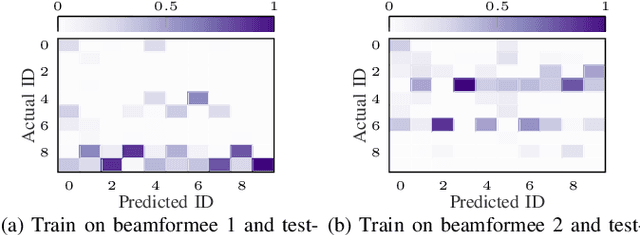

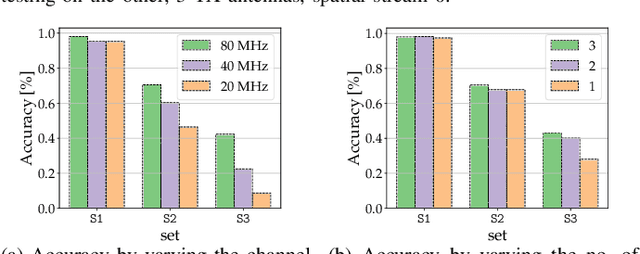

Abstract:We present DeepCSI, a novel approach to Wi-Fi radio fingerprinting (RFP) which leverages standard-compliant beamforming feedback matrices to authenticate MU-MIMO Wi-Fi devices on the move. By capturing unique imperfections in off-the-shelf radio circuitry, RFP techniques can identify wireless devices directly at the physical layer, allowing low-latency, low-energy, cryptography-free authentication. However, existing Wi-Fi RFP techniques are based on software-defined radios (SDRs), which may ultimately prevent their widespread adoption. Moreover, it is unclear whether existing strategies can work in the presence of MU-MIMO transmitters - a key technology in modern Wi-Fi standards. Unlike prior work, DeepCSI does not require SDR technologies and can be run on any low-cost Wi-Fi device to authenticate MU-MIMO transmitters. Our key intuition is that imperfections in the transmitter's radio circuitry percolate onto the beamforming feedback matrix, and thus RFP can be performed without explicit channel state information (CSI) computation. DeepCSI is robust to inter-stream and inter-user interference, since the beamforming feedback is not affected by those phenomena. We extensively evaluate the performance of DeepCSI through a massive data collection campaign performed in the wild with off-the-shelf equipment, where 10 MU-MIMO Wi-Fi radios emit signals in different positions. Experimental results indicate that DeepCSI correctly identifies the transmitter with an accuracy of up to 98%. The identification accuracy remains above 82% when the device moves within the environment. To allow replicability and provide a performance benchmark, we pledge to share the 800 GB datasets - collected in static and, for the first time, dynamic conditions - and the code database with the community.
