Stefano Branchi

Recommending the optimal policy by learning to act from temporal data

Mar 16, 2023

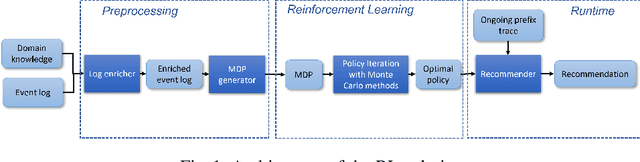

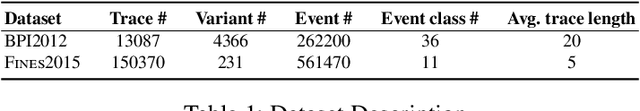

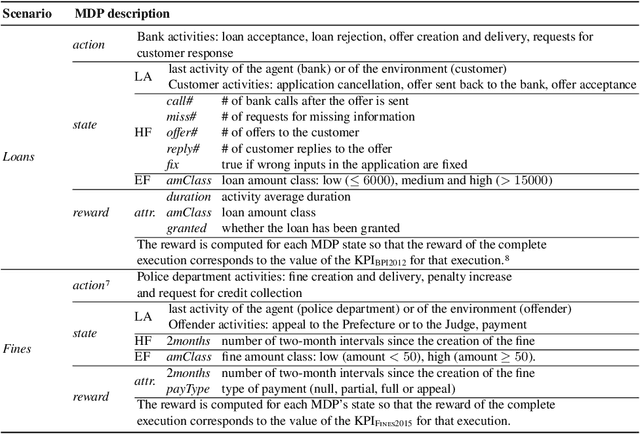

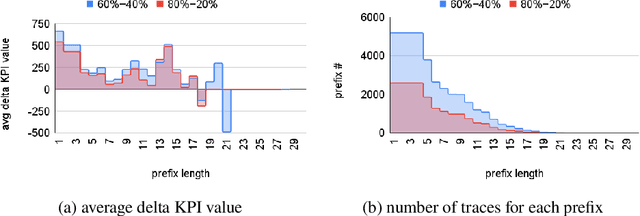

Abstract: Prescriptive Process Monitoring is a prominent problem in Process Mining, which consists in identifying a set of actions to recommend in order to optimize a target measure of interest, or Key Performance Indicator (KPI). One challenge that makes this problem difficult is the need to provide Prescriptive Process Monitoring techniques based only on temporally annotated (process) execution data, stored in so-called execution logs, due to the lack of well-crafted and human-validated explicit models. In this paper we propose an AI-based approach that learns, by means of Reinforcement Learning (RL), an optimal policy (almost) only from the observation of past executions and recommends the best activities to carry out to optimize a KPI of interest. This is achieved by first learning a Markov Decision Process for the specific KPIs from data, and then using RL training to learn the optimal policy. The approach is validated on real and synthetic datasets and compared with off-policy Deep RL approaches. The ability of our approach to match, and often outperform, Deep RL approaches is a contribution towards the exploitation of white-box RL techniques in scenarios where only temporal execution data are available.
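
The two-step idea in the abstract (estimate a Markov Decision Process from logged executions, then derive a policy from it) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not taken from the paper: the toy log, the state and activity names, the `(state, action, reward, next_state)` encoding, and the helper names `estimate_mdp` and `solve_mdp`. Value iteration is used as a simple stand-in for the RL training step the abstract mentions.

```python
from collections import defaultdict

def estimate_mdp(transitions):
    """Estimate P(s'|s,a) and the mean reward R(s,a) from logged (s, a, r, s') tuples."""
    counts = defaultdict(lambda: defaultdict(int))
    reward_sum = defaultdict(float)
    for s, a, r, s_next in transitions:
        counts[(s, a)][s_next] += 1
        reward_sum[(s, a)] += r
    probs, rewards = {}, {}
    for sa, nexts in counts.items():
        total = sum(nexts.values())
        probs[sa] = {s2: n / total for s2, n in nexts.items()}
        rewards[sa] = reward_sum[sa] / total
    return probs, rewards

def q_value(sa, probs, rewards, values, gamma):
    """Expected return of a (state, action) pair under the current value estimates."""
    return rewards[sa] + gamma * sum(p * values[s2] for s2, p in probs[sa].items())

def solve_mdp(probs, rewards, gamma=0.9, tol=1e-8):
    """Value iteration (a stand-in for RL training) on the estimated MDP."""
    states = {s for s, _ in probs} | {s2 for d in probs.values() for s2 in d}
    values = {s: 0.0 for s in states}
    while True:
        delta = 0.0
        for s in states:
            qs = [q_value(sa, probs, rewards, values, gamma) for sa in probs if sa[0] == s]
            if not qs:  # no observed action from this state: treat it as terminal
                continue
            best = max(qs)
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break
    policy = {}
    for s in states:
        options = {sa[1]: q_value(sa, probs, rewards, values, gamma)
                   for sa in probs if sa[0] == s}
        if options:
            policy[s] = max(options, key=options.get)
    return values, policy

# Hypothetical toy log: the KPI reward is 10 when a case ends in "accepted".
log = (
    [("start", "offer", 0.0, "negotiating")] * 8
    + [("negotiating", "call", 10.0, "accepted")] * 3
    + [("negotiating", "call", 0.0, "rejected")] * 1
    + [("negotiating", "wait", 10.0, "accepted")] * 1
    + [("negotiating", "wait", 0.0, "rejected")] * 3
)

probs, rewards = estimate_mdp(log)
values, policy = solve_mdp(probs, rewards)
print(policy["negotiating"])  # recommended next activity in state "negotiating"
```

Because the transition and reward tables are explicit dictionaries, the recommendation for each state can be inspected directly, which is the sense in which such a model is "white box" compared with a neural policy.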

Learning to act: a Reinforcement Learning approach to recommend the best next activities

Mar 29, 2022

Abstract: The rise of process data availability has led, in the last decade, to the development of several data-driven learning approaches. However, most of these approaches limit themselves to using the learned model to predict the future of ongoing process executions. The goal of this paper is to move a step forward and leverage data for learning to act, supporting users with recommendations for the best strategy to follow in order to optimize a measure of performance. In this paper, we take the (optimization) perspective of one process actor and recommend the best activities to execute next, in response to what happens in a complex external environment where there is no control over exogenous factors. To this end, we investigate an approach that learns, by means of Reinforcement Learning, an optimal policy from the observation of past executions and recommends the best activities to carry out to optimize a Key Performance Indicator of interest. The potential of the approach has been demonstrated in two scenarios taken from real-life data.
