Milin Zhang

BoxMind: Closed-loop AI strategy optimization for elite boxing validated in the 2024 Olympics

Jan 16, 2026

Abstract:Competitive sports require sophisticated tactical analysis, yet combat disciplines like boxing remain underdeveloped in AI-driven analytics due to the complexity of action dynamics and the lack of structured tactical representations. To address this, we present BoxMind, a closed-loop AI expert system validated in elite boxing competition. By defining atomic punch events with precise temporal boundaries and spatial and technical attributes, we parse match footage into 18 hierarchical technical-tactical indicators. We then propose a graph-based predictive model that fuses these explicit technical-tactical profiles with learnable, time-variant latent embeddings to capture the dynamics of boxer matchups. Modeling match outcome as a differentiable function of technical-tactical indicators, we turn winning probability gradients into executable tactical adjustments. Experiments show that the outcome prediction model achieves state-of-the-art performance, with 69.8% accuracy on the BoxerGraph test set and 87.5% on Olympic matches. Using this predictive model as a foundation, the system generates strategic recommendations that demonstrate proficiency comparable to human experts. BoxMind is validated through a closed-loop deployment during the 2024 Paris Olympics, directly contributing to the Chinese National Team's historic achievement of three gold and two silver medals. BoxMind establishes a replicable paradigm for transforming unstructured video data into strategic intelligence, bridging the gap between computer vision and decision support in competitive sports.
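The idea of turning winning-probability gradients into tactical adjustments can be illustrated with a minimal sketch. It assumes a plain logistic model as a stand-in for the paper's graph-based predictor, and the indicator names, values, and weights are hypothetical, chosen only for illustration.

```python
import math

def win_probability(indicators, weights, bias):
    """Logistic stand-in for the outcome model: P(win) from indicator values."""
    z = bias + sum(w * x for w, x in zip(weights, indicators))
    return 1.0 / (1.0 + math.exp(-z))

def probability_gradient(indicators, weights, bias):
    """Analytic gradient dP/dx_i = P * (1 - P) * w_i for the logistic model."""
    p = win_probability(indicators, weights, bias)
    return [p * (1.0 - p) * w for w in weights]

def rank_adjustments(names, indicators, weights, bias):
    """Sort indicators by how strongly a small change would move P(win)."""
    grad = probability_gradient(indicators, weights, bias)
    return sorted(zip(names, grad), key=lambda item: abs(item[1]), reverse=True)

# Hypothetical indicator names, values, and weights (not from the paper).
names = ["jab_rate", "counter_rate", "clinch_rate"]
indicators = [0.4, 0.2, 0.1]
weights = [1.5, -0.5, 0.2]
ranking = rank_adjustments(names, indicators, weights, bias=0.0)
```

In this sketch a positive gradient suggests increasing the indicator and a negative one suggests reducing it; the real system presumably constrains adjustments to what a boxer can actually execute.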

Semantic Edge Computing and Semantic Communications in 6G Networks: A Unifying Survey and Research Challenges

Nov 27, 2024

Abstract:Semantic Edge Computing (SEC) and Semantic Communications (SemComs) have been proposed as viable approaches to achieve real-time edge-enabled intelligence in sixth-generation (6G) wireless networks. On one hand, SemCom leverages the strength of Deep Neural Networks (DNNs) to encode and communicate only the semantic information, while making it robust to channel distortions by compensating for wireless effects. Ultimately, this leads to an improvement in communication efficiency. On the other hand, SEC has leveraged distributed DNNs to divide the computation of a DNN across different devices based on their computational and networking constraints. Although significant progress has been made in both fields, the literature lacks a systematic view connecting the two. In this work, we fill this gap by unifying the SEC and SemCom fields. We summarize the research problems in these two fields and provide a comprehensive review of the state of the art with a focus on their technical strengths and challenges.

Resilience and Security of Deep Neural Networks Against Intentional and Unintentional Perturbations: Survey and Research Challenges

Aug 03, 2024

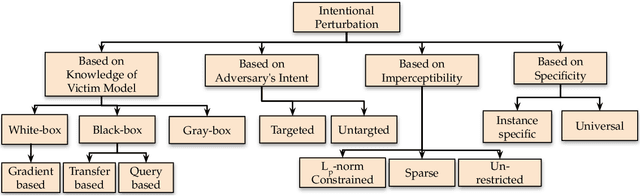

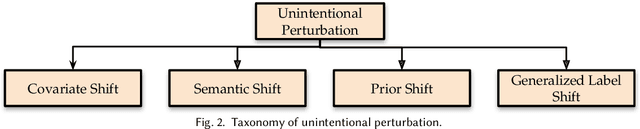

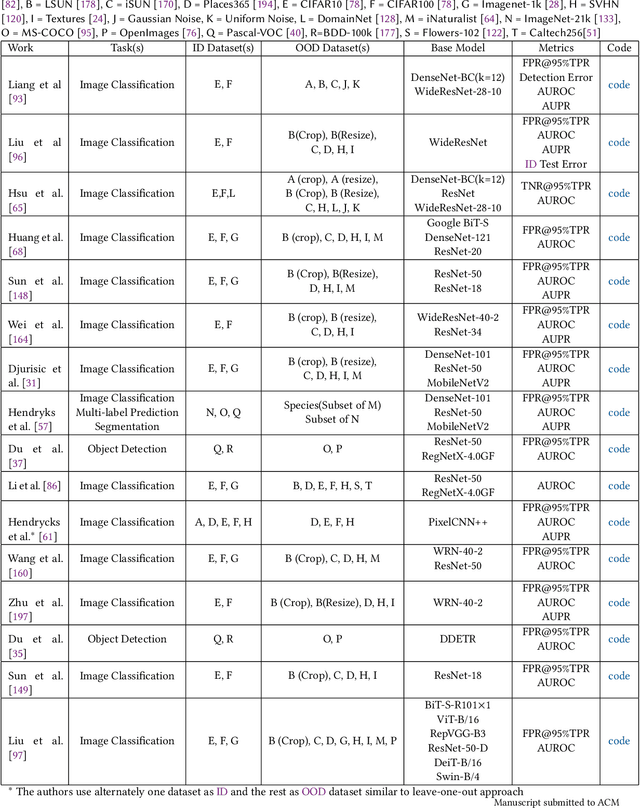

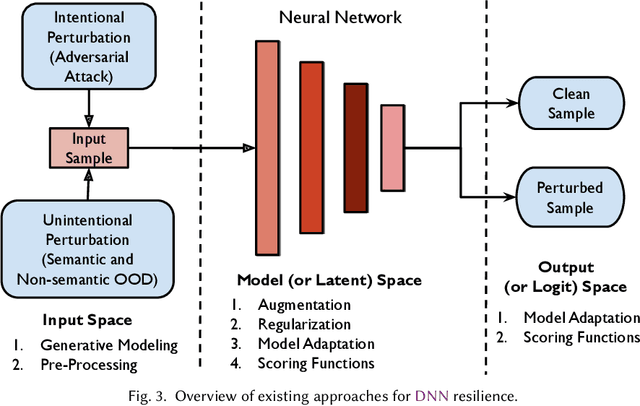

Abstract:In order to deploy deep neural networks (DNNs) in high-stakes scenarios, it is imperative that DNNs provide inference robust to external perturbations - both intentional and unintentional. Although the resilience of DNNs to intentional and unintentional perturbations has been widely investigated, a unified vision of these inherently intertwined problem domains is still missing. In this work, we fill this gap by providing a survey of the state of the art and highlighting the similarities of the proposed approaches. We also analyze the research challenges that need to be addressed to deploy resilient and secure DNNs. As there has not been any such survey connecting the resilience of DNNs to intentional and unintentional perturbations, we believe this work can help advance the frontier in both domains by enabling the exchange of ideas between the two communities.

Cepstral Analysis Based Artifact Detection, Recognition and Removal for Prefrontal EEG

Apr 12, 2024

Abstract:This paper proposes to use cepstrum for artifact detection, recognition and removal in prefrontal EEG. This work focuses on the artifact caused by eye movement. A database containing artifact-free EEG and eye movement contaminated EEG from different subjects is established. A cepstral analysis-based feature extraction with support vector machine (SVM) based classifier is designed to identify the artifacts from the target EEG signals. The proposed method achieves an accuracy of 99.62% on the artifact detection task and an accuracy of 82.79% on the 6-category eye movement classification task. A statistical value-based artifact removal method is proposed and evaluated on a public EEG database, where an accuracy improvement of 3.46% is obtained on the 3-category emotion classification task. To make a confident decision on each 5s EEG segment, the algorithm requires only 0.66M multiplication operations. Compared to the state-of-the-art approaches in artifact detection and removal, the proposed method features higher detection accuracy and lower computational cost, which makes it a more suitable solution to be integrated into a real-time and artifact robust Brain-Machine Interface (BMI).

* 5 pages, 4 figures, published by TCAS-II
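For readers unfamiliar with cepstral features, the sketch below computes the standard real cepstrum, i.e. the inverse Fourier transform of the log magnitude spectrum. The abstract does not state which cepstral variant or which coefficients feed the SVM, so this shows only the textbook definition; the naive DFT and the `floor` parameter are choices made here to keep the example dependency-free.

```python
import cmath
import math

def dft(values, inverse=False):
    """Naive O(n^2) discrete Fourier transform (the inverse includes the 1/n factor)."""
    n = len(values)
    sign = 1.0 if inverse else -1.0
    out = []
    for k in range(n):
        acc = 0j
        for t, v in enumerate(values):
            acc += v * cmath.exp(sign * 2j * math.pi * k * t / n)
        out.append(acc / n if inverse else acc)
    return out

def real_cepstrum(signal, floor=1e-12):
    """Real cepstrum: inverse DFT of the log magnitude spectrum.

    `floor` guards against log(0) for empty frequency bins.
    """
    spectrum = dft(signal)
    log_magnitude = [math.log(max(abs(c), floor)) for c in spectrum]
    return [c.real for c in dft(log_magnitude, inverse=True)]

# A scaled unit impulse has a flat spectrum, so only the zeroth coefficient is non-zero.
coefficients = real_cepstrum([2.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0])
```

A real-time implementation would use an FFT rather than this quadratic-time transform.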

Resilience of Entropy Model in Distributed Neural Networks

Mar 01, 2024

Abstract:Distributed deep neural networks (DNNs) have emerged as a key technique to reduce communication overhead without sacrificing performance in edge computing systems. Recently, entropy coding has been introduced to further reduce the communication overhead. The key idea is to train the distributed DNN jointly with an entropy model, which is used as side information during inference time to adaptively encode latent representations into bit streams with variable length. To the best of our knowledge, the resilience of entropy models is yet to be investigated. As such, in this paper we formulate and investigate the resilience of entropy models to intentional interference (e.g., adversarial attacks) and unintentional interference (e.g., weather changes and motion blur). Through an extensive experimental campaign with 3 different DNN architectures, 2 entropy models and 4 rate-distortion trade-off factors, we demonstrate that the entropy attacks can increase the communication overhead by up to 95%. By separating compression features in the frequency and spatial domains, we propose a new defense mechanism that can reduce the transmission overhead of the attacked input by about 9% compared to unperturbed data, with only about 2% accuracy loss. Importantly, the proposed defense mechanism is a standalone approach which can be applied in conjunction with approaches such as adversarial training to further improve robustness. Code will be shared for reproducibility.
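To see why perturbing the latent representation can inflate communication overhead, consider the ideal code length under an entropy model: each symbol costs about -log2 p(symbol) bits, so pushing latents toward values the model considers unlikely lengthens the bit stream. The sketch below is an illustration under assumed data; the probability table and symbol sequences are hypothetical, not taken from the paper.

```python
import math

def estimated_bits(symbols, probabilities):
    """Ideal code length: sum of -log2 p(s) over the symbols to transmit."""
    return sum(-math.log2(probabilities[s]) for s in symbols)

# Hypothetical learned distribution over quantized latent values.
model = {0: 0.7, 1: 0.2, -1: 0.1}

# Hypothetical latents: mostly likely symbols vs. mostly unlikely ones.
clean = [0, 0, 0, 1, 0, 0, -1, 0]
perturbed = [1, -1, 0, -1, 1, -1, 0, 1]

# Relative increase in bits caused by the perturbation.
overhead = estimated_bits(perturbed, model) / estimated_bits(clean, model) - 1.0
```

A practical entropy coder (for example an arithmetic coder) approaches this bound but does not reach it exactly.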

Stitching the Spectrum: Semantic Spectrum Segmentation with Wideband Signal Stitching

Feb 07, 2024

Abstract:Spectrum has become an extremely scarce and congested resource. As a consequence, spectrum sensing enables the coexistence of different wireless technologies in shared spectrum bands. Most existing work requires spectrograms to classify signals. Ultimately, this implies that images need to be continuously created from I/Q samples, thus creating unacceptable latency for real-time operations. In addition, spectrogram-based approaches do not achieve a sufficient level of granularity, as they rely on pixel-level object detection with rectangular bounding boxes. For this reason, we propose a completely novel approach based on semantic spectrum segmentation, where multiple signals are simultaneously classified and localized in both time and frequency at the I/Q level. In contrast to the state-of-the-art computer vision algorithm, we add non-local blocks to combine the spatial features of signals, and thus achieve better performance. In addition, we propose a novel data generation approach where a limited set of easy-to-collect real-world wireless signals are "stitched together" to generate large-scale, wideband, and diverse datasets. Experimental results obtained on multiple testbeds (including the Arena testbed) using multiple antennas, multiple sampling frequencies, and multiple radios over the course of 3 days show that our approach classifies and localizes signals with a mean intersection over union (IOU) of 96.70% across 5 wireless protocols while performing in real-time with a latency of 2.6 ms. Moreover, we demonstrate that our approach based on non-local blocks achieves 7% more accuracy when segmenting the most challenging signals with respect to the state-of-the-art U-Net algorithm. We will release our 17 GB dataset and code.
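The mean intersection over union reported above can be computed as in the following sketch, which assumes one class label per sample. The label sequences are hypothetical, and the paper's exact averaging convention (for example, how absent classes are handled) is not stated in the abstract.

```python
def mean_iou(predicted, target, num_classes):
    """Mean intersection over union across classes present in either labeling."""
    scores = []
    for cls in range(num_classes):
        pairs = list(zip(predicted, target))
        intersection = sum(1 for p, t in pairs if p == cls and t == cls)
        union = sum(1 for p, t in pairs if p == cls or t == cls)
        if union > 0:  # skip classes absent from both labelings
            scores.append(intersection / union)
    return sum(scores) / len(scores)

# Hypothetical per-sample labels: 0 = noise, 1 and 2 = two signal classes.
target = [0, 0, 1, 1, 1, 0, 2, 2]
predicted = [0, 1, 1, 1, 0, 0, 2, 2]
score = mean_iou(predicted, target, num_classes=3)
```

Here classes 0 and 1 each score 2/4 and class 2 scores 2/2, so the mean is 2/3.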

LightSleepNet: Design of a Personalized Portable Sleep Staging System Based on Single-Channel EEG

Jan 24, 2024

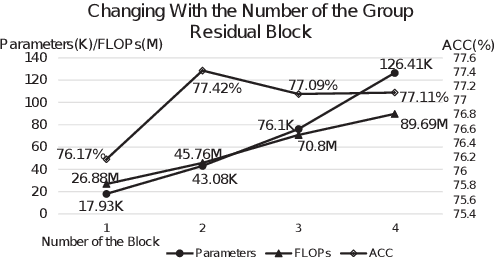

Abstract:This paper proposes LightSleepNet - a light-weight, 1-d Convolutional Neural Network (CNN) based personalized architecture for real-time sleep staging, which can be implemented on various mobile platforms with limited hardware resources. The proposed architecture only requires an input of 30s single-channel EEG signal for the classification. Two residual blocks consisting of group 1-d convolution are used instead of the traditional convolution layers to remove the redundancy in the CNN. Channel shuffles are inserted into each convolution layer to improve the accuracy. In order to avoid over-fitting to the training set, a Global Average Pooling (GAP) layer is used to replace the fully connected layer, which also significantly reduces the total number of model parameters. A personalized algorithm combining Adaptive Batch Normalization (AdaBN) and gradient re-weighting is proposed for unsupervised domain adaptation. A higher priority is given to examples that are easy to transfer to the new subject, and the algorithm can be personalized for new subjects without re-training. Experimental results show a state-of-the-art overall accuracy of 83.8% with only 45.76 Million Floating-point Operations (MFLOPs) of computation and 43.08 K parameters.

* 5 pages, 3 figures, published by IEEE TCAS-II
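The AdaBN part of the personalization scheme can be sketched as follows: the learned scale and shift of each batch-normalization layer are kept, while the normalization statistics are recomputed from unlabeled data of the new subject, so no re-training is needed. This is a minimal illustration of the general AdaBN idea only; it does not cover the gradient re-weighting component, and the feature values are hypothetical.

```python
import math

def batch_statistics(samples):
    """Per-feature mean and (biased) variance over a batch of feature vectors."""
    n = len(samples)
    dims = len(samples[0])
    means = [sum(s[d] for s in samples) / n for d in range(dims)]
    variances = [sum((s[d] - means[d]) ** 2 for s in samples) / n for d in range(dims)]
    return means, variances

def batch_norm(sample, means, variances, gamma, beta, eps=1e-5):
    """Normalize one feature vector, then apply the learned scale and shift."""
    return [
        g * (x - m) / math.sqrt(v + eps) + b
        for x, m, v, g, b in zip(sample, means, variances, gamma, beta)
    ]

# Learned affine parameters stay fixed; only the statistics are swapped.
gamma, beta = [1.0, 1.0], [0.0, 0.0]

# Hypothetical unlabeled features from a new subject.
target_batch = [[1.0, 10.0], [3.0, 14.0]]
target_means, target_variances = batch_statistics(target_batch)
adapted = [batch_norm(s, target_means, target_variances, gamma, beta) for s in target_batch]
```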

A Closed-loop Brain-Machine Interface SoC Featuring a 0.2$\mu$J/class Multiplexer Based Neural Network

Jan 07, 2024

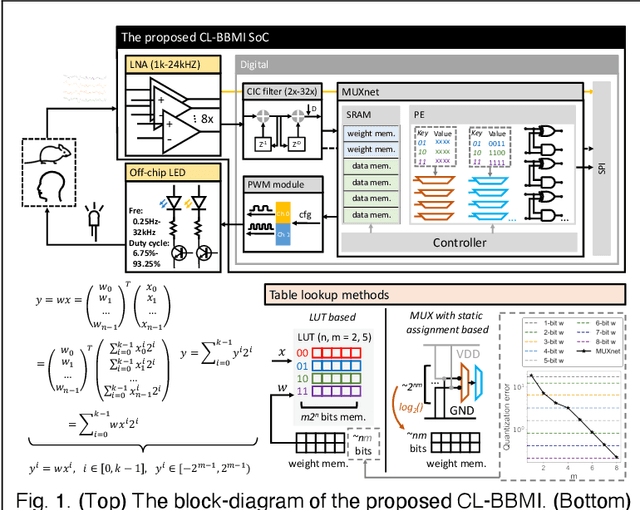

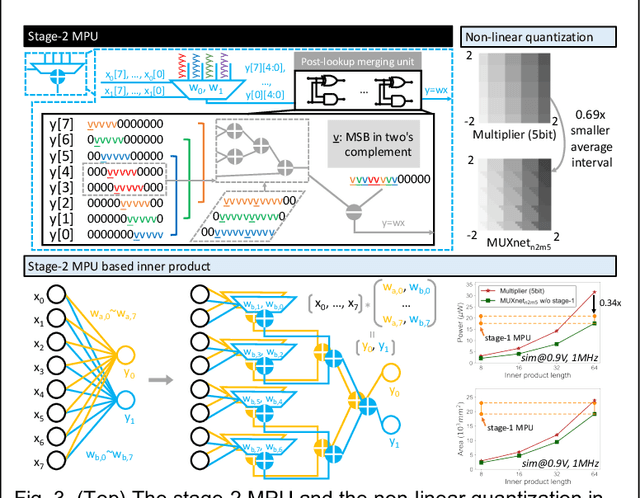

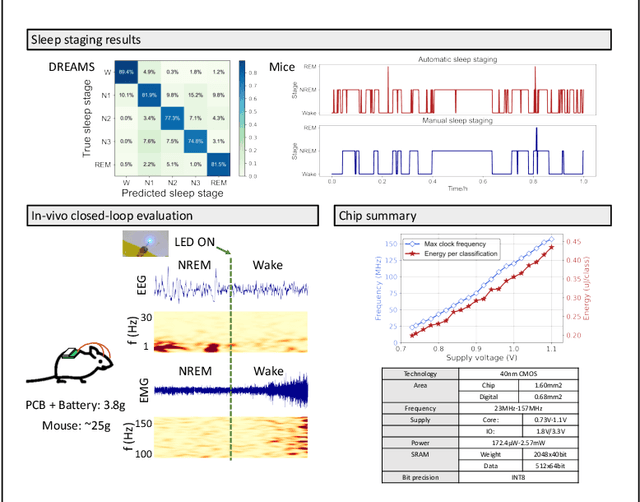

Abstract:This work presents the first fabricated electrophysiology-optogenetic closed-loop bidirectional brain-machine interface (CL-BBMI) system-on-chip (SoC) with electrical neural signal recording, on-chip sleep staging and optogenetic stimulation. The first multiplexer with static assignment based table lookup solution (MUXnet) for a multiplier-free NN processor is proposed. A state-of-the-art average accuracy of 82.4% is achieved with an energy consumption of only 0.2$\mu$J/class in the sleep staging task.

Multi-Channel Multi-Domain based Knowledge Distillation Algorithm for Sleep Staging with Single-Channel EEG

Jan 07, 2024

Abstract:This paper proposes a Multi-Channel Multi-Domain (MCMD) based knowledge distillation algorithm for sleep staging using single-channel EEG. Knowledge from both different domains and different channels is learnt simultaneously in the proposed algorithm. A multi-channel pre-training and single-channel fine-tuning scheme is used in the proposed work. The knowledge from different channels in the source domain is transferred to the single-channel model in the target domain. A pre-trained teacher-student model scheme is used to distill knowledge from the multi-channel teacher model to the single-channel student model, combined with output transfer and intermediate feature transfer in the target domain. The proposed algorithm achieves a state-of-the-art single-channel sleep staging accuracy of 86.5%, with only 0.6% deterioration from the state-of-the-art multi-channel model. There is an improvement of 2% compared to the baseline model. The experimental results show that knowledge from multiple domains (different datasets) and multiple channels (e.g. EMG, EOG) could be transferred to single-channel sleep staging.

* 5 pages, 2 figures, published by IEEE TCAS-II
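A teacher-student objective that combines output transfer with intermediate feature transfer can be sketched as below. This is a generic knowledge distillation loss written under assumptions: the temperature, the mixing weight `alpha`, and the use of KL divergence and mean squared error are common choices, not details confirmed by the abstract.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def output_transfer_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened class probabilities."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

def feature_transfer_loss(student_features, teacher_features):
    """Mean squared error between intermediate feature vectors."""
    n = len(student_features)
    return sum((s - t) ** 2 for s, t in zip(student_features, teacher_features)) / n

def distillation_loss(student_logits, teacher_logits,
                      student_features, teacher_features, alpha=0.5):
    """Weighted sum of output transfer and feature transfer (alpha is an assumption)."""
    output_term = output_transfer_loss(student_logits, teacher_logits)
    feature_term = feature_transfer_loss(student_features, teacher_features)
    return alpha * output_term + (1.0 - alpha) * feature_term

# Hypothetical logits over five sleep stages and a small feature vector.
teacher_logits = [2.0, 0.5, -1.0, 0.0, 0.3]
student_logits = [1.0, 0.8, -0.5, 0.1, 0.0]
loss = distillation_loss(student_logits, teacher_logits, [0.2, 0.4], [0.3, 0.1])
```

In training, a term like this would typically be added to the usual supervised loss on the student's own predictions.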

Adversarial Machine Learning in Latent Representations of Neural Networks

Sep 29, 2023

Abstract:Distributed deep neural networks (DNNs) have been shown to reduce the computational burden of mobile devices and decrease the end-to-end inference latency in edge computing scenarios. While distributed DNNs have been studied, to the best of our knowledge the resilience of distributed DNNs to adversarial action remains an open problem. In this paper, we fill the existing research gap by rigorously analyzing the robustness of distributed DNNs against adversarial action. We cast this problem in the context of information theory and introduce two new measurements for distortion and robustness. Our theoretical findings indicate that (i) assuming the same level of information distortion, latent features are always more robust than input representations; (ii) the adversarial robustness is jointly determined by the feature dimension and the generalization capability of the DNN. To test our theoretical findings, we perform extensive experimental analysis by considering 6 different DNN architectures, 6 different approaches for distributed DNN and 10 different adversarial attacks on the ImageNet-1K dataset. Our experimental results support our theoretical findings by showing that the compressed latent representations can reduce the success rate of adversarial attacks by 88% in the best case and by 57% on average compared to attacks on the input space.
