Sazzad Sayyed

Resilience and Security of Deep Neural Networks Against Intentional and Unintentional Perturbations: Survey and Research Challenges

Aug 03, 2024

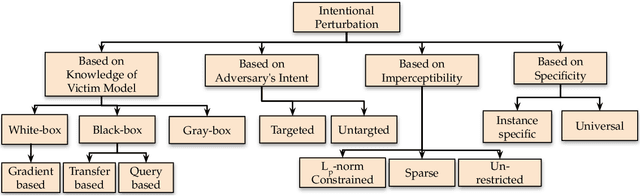

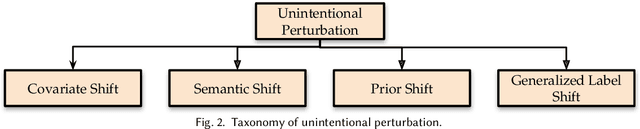

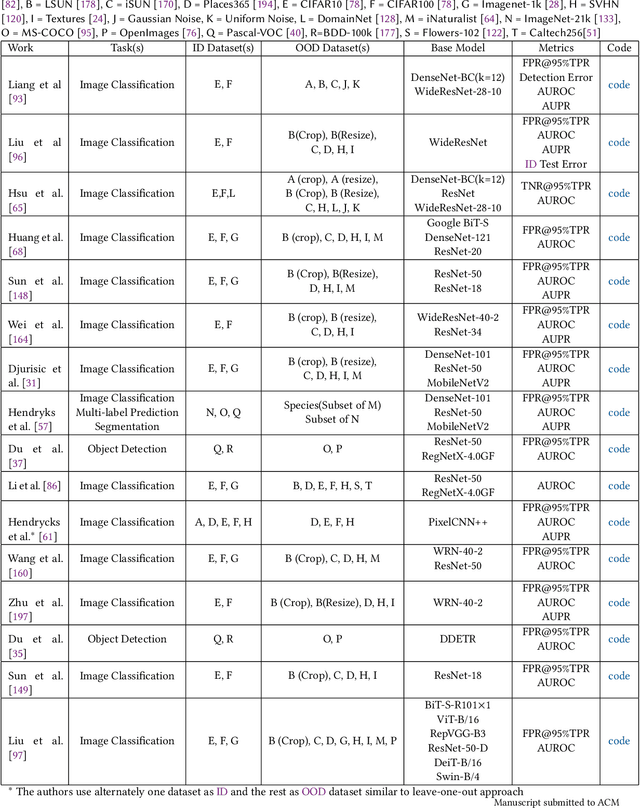

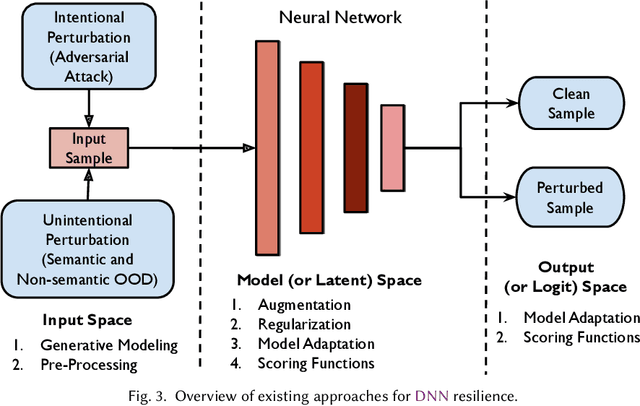

Abstract: To deploy deep neural networks (DNNs) in high-stakes scenarios, it is imperative that DNNs provide inference that is robust to external perturbations, both intentional and unintentional. Although the resilience of DNNs to intentional and unintentional perturbations has been widely investigated, a unified vision of these inherently intertwined problem domains is still missing. In this work, we fill this gap by providing a survey of the state of the art and highlighting the similarities of the proposed approaches. We also analyze the research challenges that need to be addressed to deploy resilient and secure DNNs. As there has not been any such survey connecting the resilience of DNNs to intentional and unintentional perturbations, we believe this work can help advance the frontier in both domains by enabling the exchange of ideas between the two communities.

Faster and Accurate Neural Networks with Semantic Inference

Oct 03, 2023

Abstract: Deep neural networks (DNNs) usually come with a significant computational burden. While approaches such as structured pruning and mobile-specific DNNs have been proposed, they incur drastic accuracy loss. In this paper, we leverage the intrinsic redundancy in latent representations to reduce the computational load with limited loss in performance. We show that semantically similar inputs share many filters, especially in the earlier layers. Thus, semantically similar classes can be clustered to create cluster-specific subgraphs. To this end, we propose a new framework called Semantic Inference (SINF). In short, SINF (i) identifies the semantic cluster the object belongs to using a small additional classifier and (ii) executes the subgraph extracted from the base DNN related to that semantic cluster for inference. To extract each cluster-specific subgraph, we propose a new approach named Discriminative Capability Score (DCS) that finds the subgraph with the capability to discriminate among the members of a specific semantic cluster. DCS is independent of SINF and can be applied to any DNN. We benchmark the performance of DCS on the VGG16, VGG19, and ResNet50 DNNs trained on the CIFAR100 dataset against 6 state-of-the-art pruning approaches. Our results show that (i) SINF reduces the inference time of VGG19, VGG16, and ResNet50 respectively by up to 35%, 29%, and 15% with only 0.17%, 3.75%, and 6.75% accuracy loss; (ii) DCS achieves respectively up to 3.65%, 4.25%, and 2.36% better accuracy with VGG16, VGG19, and ResNet50 with respect to existing discriminative scores; (iii) when used as a pruning criterion, DCS achieves up to 8.13% accuracy gain with 5.82% fewer parameters than the existing state-of-the-art work published at ICLR 2023; and (iv) when considering per-cluster accuracy, SINF performs on average 5.73%, 8.38%, and 6.36% better than the base VGG16, VGG19, and ResNet50.
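The two-step routing described in the abstract (pick a semantic cluster, then run only that cluster's subgraph) can be illustrated with a minimal sketch. This is not the authors' implementation: the function `semantic_inference`, the toy classifier, the toy subgraphs, and the cluster and class names are all hypothetical stand-ins, and the DCS-based subgraph extraction is not modeled at all.

```python
from typing import Callable, Dict, Hashable, Sequence

Features = Sequence[float]


def semantic_inference(
    x: Features,
    cluster_classifier: Callable[[Features], Hashable],
    subgraphs: Dict[Hashable, Callable[[Features], str]],
) -> str:
    """Route x to the subgraph associated with its predicted semantic cluster."""
    cluster_id = cluster_classifier(x)  # step (i): small additional classifier
    return subgraphs[cluster_id](x)     # step (ii): run only that cluster's subgraph


# Hypothetical stand-ins: in the paper these would be a small classifier and
# subgraphs extracted from a base DNN; here they are simple threshold rules.
def toy_cluster_classifier(x: Features) -> str:
    return "animals" if x[0] >= 0 else "vehicles"


toy_subgraphs = {
    "animals": lambda x: "cat" if x[1] >= 0 else "dog",
    "vehicles": lambda x: "car" if x[1] >= 0 else "truck",
}

prediction = semantic_inference([0.7, -0.3], toy_cluster_classifier, toy_subgraphs)
print(prediction)
```

In this toy setup each input only pays for the cluster classifier plus one small subgraph, which is the intuition behind the inference-time savings the abstract reports; the actual savings depend on how the subgraphs are extracted.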
