Jinghan Yang

Detecting Mislabeled and Corrupted Data via Pointwise Mutual Information

Aug 11, 2025

Abstract: Deep neural networks can memorize corrupted labels, making data quality critical for model performance, yet real-world datasets are frequently compromised by both label noise and input noise. This paper proposes a mutual information-based framework for data selection under hybrid noise scenarios that quantifies statistical dependencies between inputs and labels. We compute each sample's pointwise contribution to the overall mutual information and find that lower contributions indicate noisy or mislabeled instances. Empirical validation on MNIST with different synthetic noise settings demonstrates that the method effectively filters low-quality samples. Under label corruption, training on high-MI samples improves classification accuracy by up to 15% compared to random sampling. Furthermore, the method exhibits robustness to benign input modifications, preserving semantically valid data while filtering truly corrupted samples.
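As a rough illustration of the per-sample score described in this abstract, the sketch below computes the pointwise mutual information $\log \frac{p(x, y)}{p(x)\,p(y)}$ of each (input, label) pair from empirical counts. It is a toy under stated assumptions, not the paper's estimator: the function name `pointwise_mi` and the discrete toy data are hypothetical, and real image inputs such as MNIST would need a learned density or a neural estimator rather than counting.

```python
import math
from collections import Counter


def pointwise_mi(pairs):
    """Return log p(x, y) / (p(x) p(y)) for each (x, y) pair.

    Probabilities are plain empirical frequencies, so this only makes
    sense for discrete x and y (an assumption made for illustration).
    """
    n = len(pairs)
    joint = Counter(pairs)
    px = Counter(x for x, _ in pairs)
    py = Counter(y for _, y in pairs)
    # p(x,y) / (p(x) p(y)) = (c_xy / n) / ((c_x / n) * (c_y / n)) = c_xy * n / (c_x * c_y)
    return [math.log(joint[(x, y)] * n / (px[x] * py[y])) for x, y in pairs]


# Hypothetical toy data: feature "a" usually has label 0, "b" has label 1.
# The final pair ("a", 1) plays the role of a mislabeled sample.
pairs = [("a", 0)] * 4 + [("b", 1)] * 4 + [("a", 1)]
scores = pointwise_mi(pairs)

# Rank samples from lowest to highest score; low scores are candidates for removal.
ranked = sorted(range(len(pairs)), key=lambda i: scores[i])
```

In this toy set the inconsistent pair receives a negative score while the consistent pairs receive positive ones, which mirrors the abstract's observation that low contributions flag suspect samples.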

How to Achieve Higher Accuracy with Less Training Points?

Apr 18, 2025

Abstract: In the era of large-scale model training, the extensive use of available datasets has resulted in significant computational inefficiencies. To tackle this issue, we explore methods for identifying informative subsets of training data that can achieve comparable or even superior model performance. We propose a technique based on influence functions to determine which training samples should be included in the training set. We conducted empirical evaluations of our method on binary classification tasks utilizing logistic regression models. Our approach demonstrates performance comparable to that of training on the entire dataset while using only 10% of the data. Furthermore, we found that our method achieved even higher accuracy when trained with just 60% of the data.

Empowering Global Voices: A Data-Efficient, Phoneme-Tone Adaptive Approach to High-Fidelity Speech Synthesis

Apr 10, 2025

Abstract: Text-to-speech (TTS) technology has achieved impressive results for widely spoken languages, yet many under-resourced languages remain challenged by limited data and linguistic complexities. In this paper, we present a novel methodology that integrates a data-optimized framework with an advanced acoustic model to build high-quality TTS systems for low-resource scenarios. We demonstrate the effectiveness of our approach using Thai as an illustrative case, where intricate phonetic rules and sparse resources are effectively addressed. Our method enables zero-shot voice cloning and improved performance across diverse client applications, ranging from finance to healthcare, education, and law. Extensive evaluations, both subjective and objective, confirm that our model meets state-of-the-art standards, offering a scalable solution for TTS production in data-limited settings, with significant implications for broader industry adoption and multilingual accessibility.

RED: Robust Environmental Design

Nov 26, 2024

Abstract: The classification of road signs by autonomous systems, especially those reliant on visual inputs, is highly susceptible to adversarial attacks. Traditional approaches to mitigating such vulnerabilities have focused on enhancing the robustness of classification models. In contrast, this paper adopts a fundamentally different strategy aimed at increasing robustness through the redesign of road signs themselves. We propose an attacker-agnostic learning scheme to automatically design road signs that are robust to a wide array of patch-based attacks. Empirical tests conducted in both digital and physical environments demonstrate that our approach significantly reduces vulnerability to patch attacks, outperforming existing techniques.

Auto-ICL: In-Context Learning without Human Supervision

Nov 15, 2023

Abstract: In the era of Large Language Models (LLMs), human-computer interaction has evolved towards natural language, offering unprecedented flexibility. Despite this, LLMs are heavily reliant on well-structured prompts to function efficiently within the realm of In-Context Learning. Vanilla In-Context Learning relies on human-provided contexts, such as labeled examples, explicit instructions, or other guiding mechanisms that shape the model's outputs. To address this challenge, our study presents a universal framework named Automatic In-Context Learning. Upon receiving a user's request, we ask the model to independently generate examples, including labels, instructions, or reasoning pathways. The model then leverages this self-produced context to tackle the given problem. Our approach is universally adaptable and can be implemented in any setting where vanilla In-Context Learning is applicable. We demonstrate that our method yields strong performance across a range of tasks, standing up well when compared to existing methods.

Relabel Minimal Training Subset to Flip a Prediction

May 22, 2023

Abstract: Yang et al. (2023) discovered that removing a mere 1% of training points can often lead to the flipping of a prediction. Given the prevalence of noisy data in machine learning models, we pose the question: can we also flip a test prediction by relabeling a small subset of the training data before the model is trained? In this paper, utilizing the extended influence function, we propose an efficient procedure for identifying and relabeling such a subset, demonstrating consistent success. This mechanism serves multiple purposes: (1) providing a complementary approach to challenge model predictions by recovering potentially mislabeled training points; (2) evaluating model resilience, as our research uncovers a significant relationship between the subset's size and the ratio of noisy data in the training set; and (3) offering insights into bias within the training set. To the best of our knowledge, this work represents the first investigation into the problem of identifying and relabeling the minimal training subset required to flip a given prediction.

How Many and Which Training Points Would Need to be Removed to Flip this Prediction?

Feb 09, 2023

Abstract: We consider the problem of identifying a minimal subset of training data $\mathcal{S}_t$ such that if the instances comprising $\mathcal{S}_t$ had been removed prior to training, the categorization of a given test point $x_t$ would have been different. Identifying such a set may be of interest for a few reasons. First, the cardinality of $\mathcal{S}_t$ provides a measure of robustness (if $|\mathcal{S}_t|$ is small for $x_t$, we might be less confident in the corresponding prediction), which we show is correlated with but complementary to predicted probabilities. Second, interrogation of $\mathcal{S}_t$ may provide a novel mechanism for contesting a particular model prediction: If one can make the case that the points in $\mathcal{S}_t$ are wrongly labeled or irrelevant, this may argue for overturning the associated prediction. Identifying $\mathcal{S}_t$ via brute-force is intractable. We propose comparatively fast approximation methods to find $\mathcal{S}_t$ based on influence functions, and find that, for simple convex text classification models, these approaches can often successfully identify relatively small sets of training examples which, if removed, would flip the prediction.
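One way to write down the problem this abstract describes is as a constrained minimization over subsets of the training data. The notation beyond $\mathcal{S}_t$ and $x_t$ is assumed here for illustration and is not taken from the paper: $\mathcal{D}$ denotes the full training set, $\hat{\theta}_{\mathcal{A}}$ the parameters obtained by training on a set $\mathcal{A}$, and $f(x; \theta)$ the predicted label for input $x$.

$$
\mathcal{S}_t \in \arg\min_{\mathcal{S} \subseteq \mathcal{D}} |\mathcal{S}|
\quad \text{subject to} \quad
f\big(x_t; \hat{\theta}_{\mathcal{D} \setminus \mathcal{S}}\big) \neq f\big(x_t; \hat{\theta}_{\mathcal{D}}\big)
$$

Checking the constraint exactly requires retraining for each candidate $\mathcal{S}$, and the number of candidates grows exponentially with $|\mathcal{D}|$, which is consistent with the abstract's remark that brute-force search is intractable.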

Certified Robust Control under Adversarial Perturbations

Feb 04, 2023

Abstract: Autonomous systems increasingly rely on machine learning techniques to transform high-dimensional raw inputs into predictions that are then used for decision-making and control. However, it is often easy to maliciously manipulate such inputs and, as a result, predictions. While effective techniques have been proposed to certify the robustness of predictions to adversarial input perturbations, such techniques have been disembodied from control systems that make downstream use of the predictions. We propose the first approach for composing robustness certification of predictions with respect to raw input perturbations with robust control to obtain certified robustness of control to adversarial input perturbations. We use a case study of adaptive vehicle control to illustrate our approach and show the value of the resulting end-to-end certificates through extensive experiments.

PROVES: Establishing Image Provenance using Semantic Signatures

Oct 21, 2021

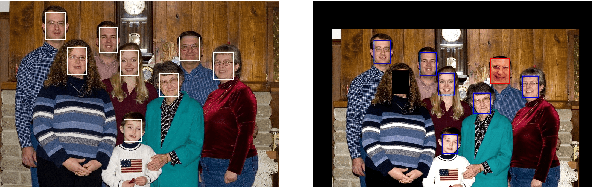

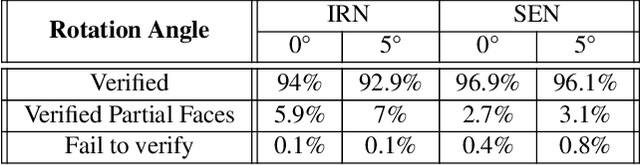

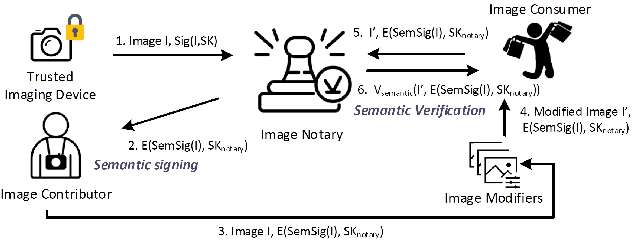

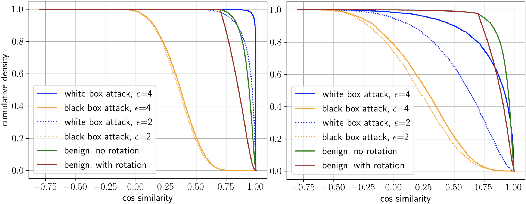

Abstract: Modern AI tools, such as generative adversarial networks, have transformed our ability to create and modify visual data with photorealistic results. However, one of the deleterious side-effects of these advances is the emergence of nefarious uses in manipulating information in visual data, such as through the use of deep fakes. We propose a novel architecture for preserving the provenance of semantic information in images to make them less susceptible to deep fake attacks. Our architecture includes semantic signing and verification steps. We apply this architecture to verifying two types of semantic information: individual identities (faces) and whether the photo was taken indoors or outdoors. Verification accounts for a collection of common image transformations, such as translation, scaling, cropping, and small rotations, and rejects adversarial transformations, such as adversarially perturbed or, in the case of face verification, swapped faces. Experiments demonstrate that in the case of provenance of faces in an image, our approach is robust to black-box adversarial transformations (which are rejected) as well as benign transformations (which are accepted), with few false negatives and false positives. Background verification, on the other hand, is susceptible to black-box adversarial examples, but becomes significantly more robust after adversarial training.

Finding Physical Adversarial Examples for Autonomous Driving with Fast and Differentiable Image Compositing

Oct 17, 2020

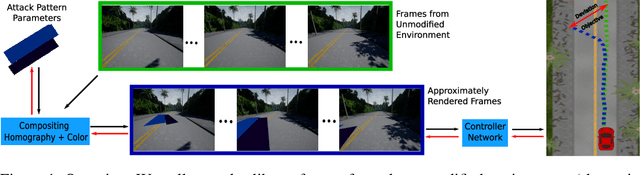

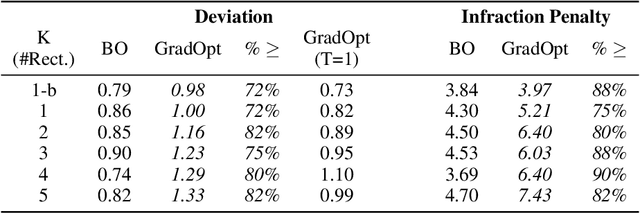

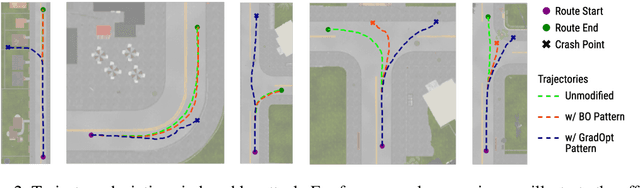

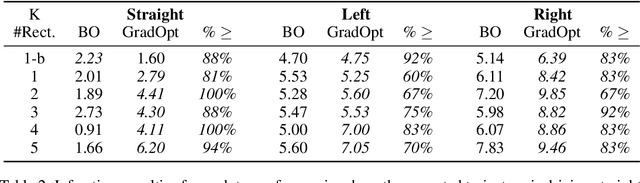

Abstract: There is considerable evidence that deep neural networks are vulnerable to adversarial perturbations applied directly to their digital inputs. However, it remains an open question whether this translates to vulnerabilities in real-world systems. Specifically, in the context of image inputs to autonomous driving systems, an attack can be achieved only by modifying the physical environment, so as to ensure that the resulting stream of video inputs to the car's controller leads to incorrect driving decisions. Inducing this effect on the video inputs indirectly through the environment requires accounting for system dynamics and tracking viewpoint changes. We propose a scalable and efficient approach for finding adversarial physical modifications, using a differentiable approximation for the mapping from environmental modifications (namely, rectangles drawn on the road) to the corresponding video inputs to the controller network. Given the color, location, position, and orientation parameters of the rectangles, our mapping composites them onto pre-recorded video streams of the original environment. Our mapping accounts for geometric and color variations, is differentiable with respect to rectangle parameters, and uses multiple original video streams obtained by varying the driving trajectory. When combined with a neural network-based controller, our approach allows the design of adversarial modifications through end-to-end gradient-based optimization. We evaluate our approach using the Carla autonomous driving simulator, and show that it is significantly more scalable and far more effective at generating attacks than a prior black-box approach based on Bayesian Optimization.
