Adith Boloor

SnapPix: Efficient-Coding–Inspired In-Sensor Compression for Edge Vision

Apr 06, 2025

Abstract:Energy-efficient image acquisition on the edge is crucial for enabling remote sensing applications where the sensor node has weak compute capabilities and must transmit data to a remote server/cloud for processing. To reduce the edge energy consumption, this paper proposes a sensor-algorithm co-designed system called SnapPix, which compresses raw pixels in the analog domain inside the sensor. We use coded exposure (CE) as the in-sensor compression strategy as it offers the flexibility to sample, i.e., selectively expose pixels, both spatially and temporally. SnapPix has three contributions. First, we propose a task-agnostic strategy to learn the sampling/exposure pattern based on the classic theory of efficient coding. Second, we co-design the downstream vision model with the exposure pattern to address the pixel-level non-uniformity unique to CE-compressed images. Finally, we propose lightweight augmentations to the image sensor hardware to support our in-sensor CE compression. Evaluating on action recognition and video reconstruction, SnapPix outperforms state-of-the-art video-based methods at the same speed while reducing the energy by up to 15.4x. We have open-sourced the code at: https://github.com/horizon-research/SnapPix.
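As a rough illustration of the coded exposure idea in the abstract above, the sketch below collapses a short video into a single coded image by exposing each pixel only in selected frames. It is not the SnapPix implementation: the function name `coded_exposure`, the tiled binary pattern, and the random (rather than learned) pattern are all assumptions made for illustration.

```python
import numpy as np

def coded_exposure(video, pattern):
    """Collapse T frames into one coded image (illustrative sketch only).

    video:   float array of shape (T, H, W)
    pattern: binary array of shape (T, h, w); pattern[t, i, j] == 1 means
             pixel (i, j) of the tile is exposed during frame t.
             Tiling the pattern over the sensor is an assumption here.
    """
    T, H, W = video.shape
    t, h, w = pattern.shape
    assert t == T and H % h == 0 and W % w == 0
    # Repeat the small tile so the mask covers the whole frame.
    mask = np.tile(pattern, (1, H // h, W // w))
    # Each pixel integrates light only in the frames where it is exposed.
    return (video * mask).sum(axis=0)

rng = np.random.default_rng(0)
video = rng.random((8, 16, 16))               # 8 frames of 16x16 pixels
pattern = rng.integers(0, 2, size=(8, 4, 4))  # random stand-in, not a learned pattern
coded = coded_exposure(video, pattern)        # one 16x16 image from 8 frames
```

In this toy setting, eight frames are reduced to one image, so the sensor would read out one eighth of the raw pixel values; which frames each pixel samples is exactly what a learned pattern would decide.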

Neural-PIM: Efficient Processing-In-Memory with Neural Approximation of Peripherals

Jan 30, 2022

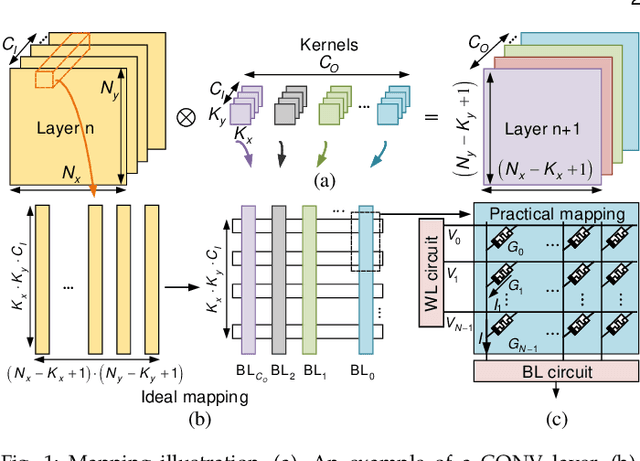

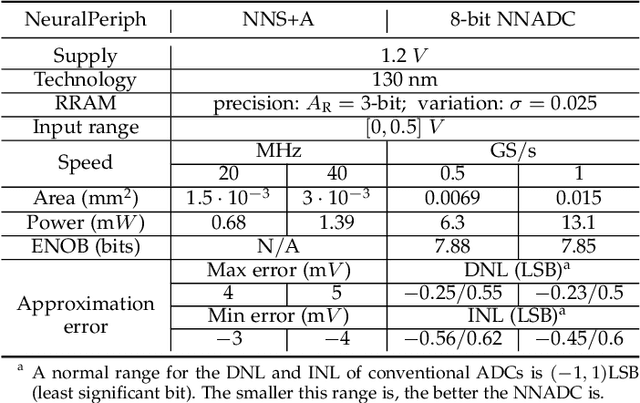

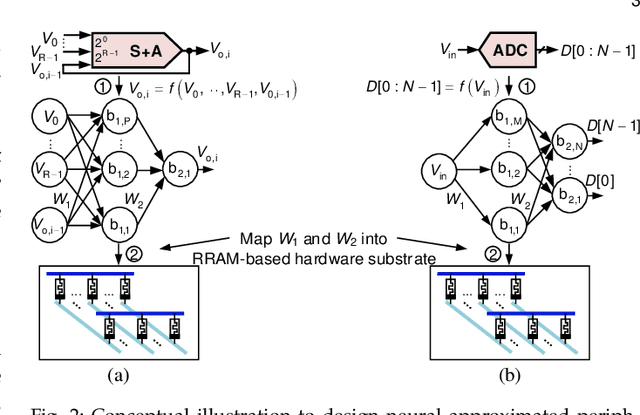

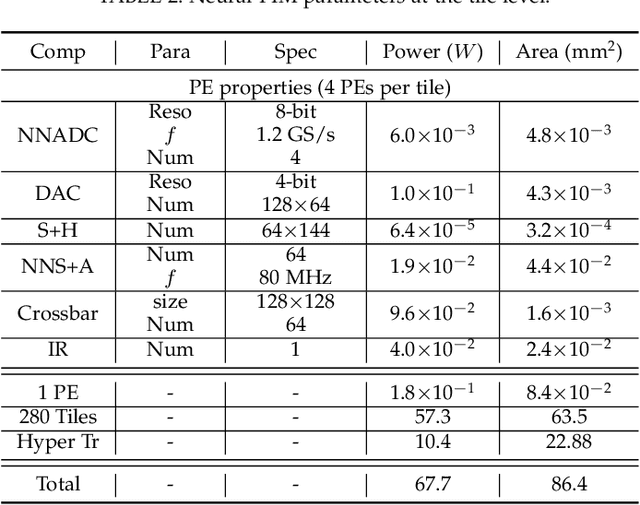

Abstract:Processing-in-memory (PIM) architectures have demonstrated great potential in accelerating numerous deep learning tasks. Particularly, resistive random-access memory (RRAM) devices provide a promising hardware substrate to build PIM accelerators due to their abilities to realize efficient in-situ vector-matrix multiplications (VMMs). However, existing PIM accelerators suffer from frequent and energy-intensive analog-to-digital (A/D) conversions, severely limiting their performance. This paper presents a new PIM architecture to efficiently accelerate deep learning tasks by minimizing the required A/D conversions with analog accumulation and neural approximated peripheral circuits. We first characterize the different dataflows employed by existing PIM accelerators, based on which a new dataflow is proposed to remarkably reduce the required A/D conversions for VMMs by extending shift and add (S+A) operations into the analog domain before the final quantizations. We then leverage a neural approximation method to design both analog accumulation circuits (S+A) and quantization circuits (ADCs) with RRAM crossbar arrays in a highly efficient manner. Finally, we apply them to build an RRAM-based PIM accelerator (i.e., Neural-PIM) upon the proposed analog dataflow and evaluate its system-level performance. Evaluations on different benchmarks demonstrate that Neural-PIM can improve energy efficiency by 5.36x (1.73x) and speed up throughput by 3.43x (1.59x) without losing accuracy, compared to the state-of-the-art RRAM-based PIM accelerators, i.e., ISAAC (CASCADE).

* 14 pages, 13 figures, Published in IEEE Transactions on Computers
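The shift and add (S+A) operation mentioned in the Neural-PIM abstract can be sketched numerically as follows. This is a simplified software model, not the paper's circuit or dataflow: it assumes unsigned integer inputs fed one bit plane at a time, and the function name `bit_serial_vmm` is hypothetical.

```python
import numpy as np

def bit_serial_vmm(x, W, n_bits):
    """Compute x @ W from per-bit partial products (illustrative sketch only).

    x:      unsigned integer input vector, each entry < 2**n_bits
    W:      integer weight matrix of shape (len(x), columns)
    n_bits: number of input bits processed, least significant first
    """
    acc = np.zeros(W.shape[1], dtype=np.int64)
    for b in range(n_bits):
        bit_plane = (x >> b) & 1   # 0/1 vector holding bit b of every input
        partial = bit_plane @ W    # stand-in for one crossbar read
        acc += partial << b        # shift by the bit's significance, then add
    return acc

rng = np.random.default_rng(0)
x = rng.integers(0, 2**8, size=16)
W = rng.integers(0, 4, size=(16, 4))
result = bit_serial_vmm(x, W, n_bits=8)
assert np.array_equal(result, x @ W)  # S+A reproduces the full product
```

In this simplified model, a design that digitizes every `partial` would perform one conversion per column per input bit, whereas accumulating first and quantizing only the final `acc` would need one per column. That is the intuition behind moving S+A ahead of quantization; the actual conversion counts depend on details this sketch omits.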

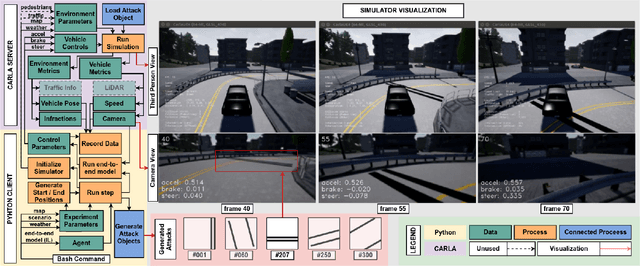

Finding Physical Adversarial Examples for Autonomous Driving with Fast and Differentiable Image Compositing

Oct 17, 2020

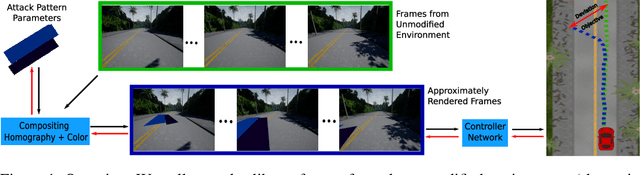

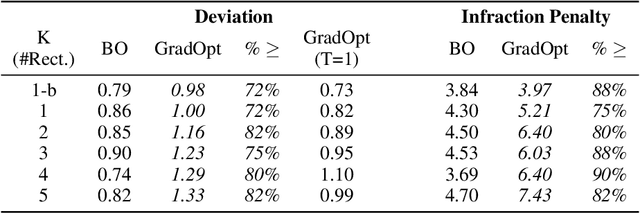

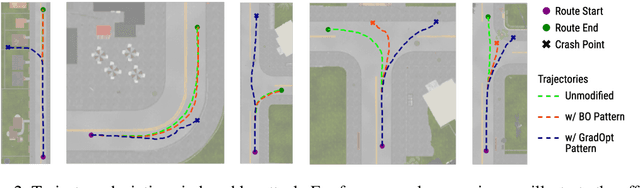

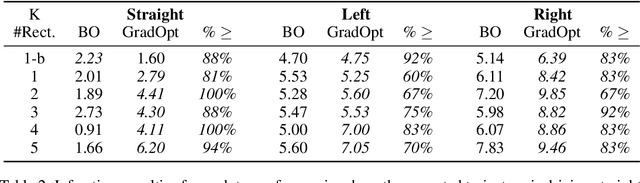

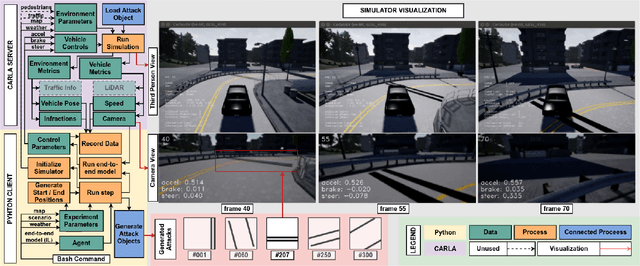

Abstract:There is considerable evidence that deep neural networks are vulnerable to adversarial perturbations applied directly to their digital inputs. However, it remains an open question whether this translates to vulnerabilities in real-world systems. Specifically, in the context of image inputs to autonomous driving systems, an attack can be achieved only by modifying the physical environment, so as to ensure that the resulting stream of video inputs to the car's controller leads to incorrect driving decisions. Inducing this effect on the video inputs indirectly through the environment requires accounting for system dynamics and tracking viewpoint changes. We propose a scalable and efficient approach for finding adversarial physical modifications, using a differentiable approximation for the mapping from environmental modifications (namely, rectangles drawn on the road) to the corresponding video inputs to the controller network. Given the color, location, position, and orientation parameters of the rectangles, our mapping composites them onto pre-recorded video streams of the original environment. Our mapping accounts for geometric and color variations, is differentiable with respect to rectangle parameters, and uses multiple original video streams obtained by varying the driving trajectory. When combined with a neural network-based controller, our approach allows the design of adversarial modifications through end-to-end gradient-based optimization. We evaluate our approach using the Carla autonomous driving simulator, and show that it is significantly more scalable and far more effective at generating attacks than a prior black-box approach based on Bayesian Optimization.
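One way to picture compositing that stays smooth in the rectangle parameters is a soft-edged mask blended onto a frame, as sketched below. This is an assumption-laden toy, not the authors' mapping: it works in flat image coordinates with no camera geometry or color correction, and the names `soft_rectangle_mask`, `composite`, and `sharpness` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def soft_rectangle_mask(h, w, cx, cy, half_w, half_h, theta, sharpness=4.0):
    """Mask near 1 inside a rotated rectangle and near 0 outside.

    Sigmoid edges (an illustrative choice) make the mask vary smoothly with
    the centre (cx, cy), the half-sizes, and the orientation theta.
    """
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    dx, dy = xs - cx, ys - cy
    # Express each pixel offset in the rectangle's own rotated frame.
    u = np.cos(theta) * dx + np.sin(theta) * dy
    v = -np.sin(theta) * dx + np.cos(theta) * dy
    inside_u = sigmoid(sharpness * (half_w - np.abs(u)))
    inside_v = sigmoid(sharpness * (half_h - np.abs(v)))
    return inside_u * inside_v

def composite(frame, color, mask):
    """Alpha-blend a flat color onto an (H, W, 3) frame using the soft mask."""
    alpha = mask[..., None]
    return alpha * color + (1.0 - alpha) * frame

frame = np.zeros((32, 32, 3))      # stand-in for one pre-recorded video frame
color = np.array([1.0, 0.0, 0.0])  # rectangle color
mask = soft_rectangle_mask(32, 32, cx=16, cy=16, half_w=6, half_h=3, theta=0.0)
out = composite(frame, color, mask)
```

Because every step is a smooth function of the rectangle parameters (apart from the kink of the absolute value at the rectangle's axes), the same computation written in an automatic-differentiation framework would yield gradients with respect to those parameters, which is what gradient-based search for attacks requires.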

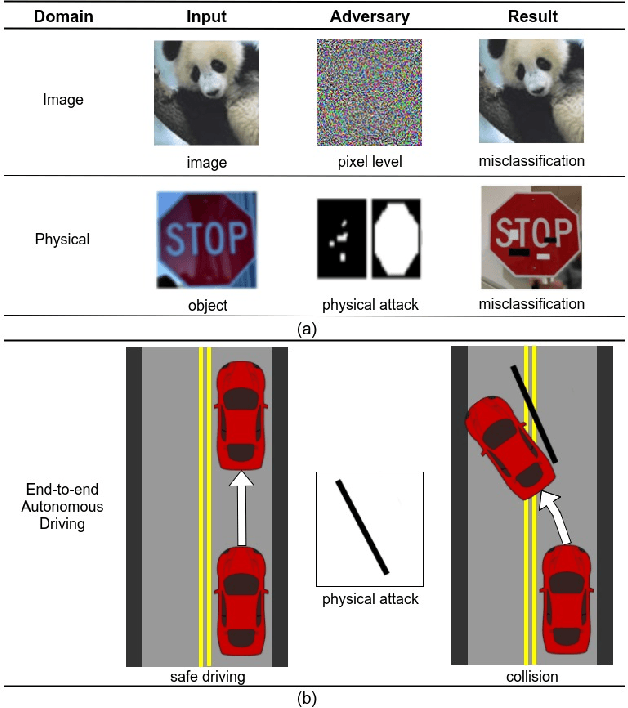

Attacking Vision-based Perception in End-to-End Autonomous Driving Models

Oct 02, 2019

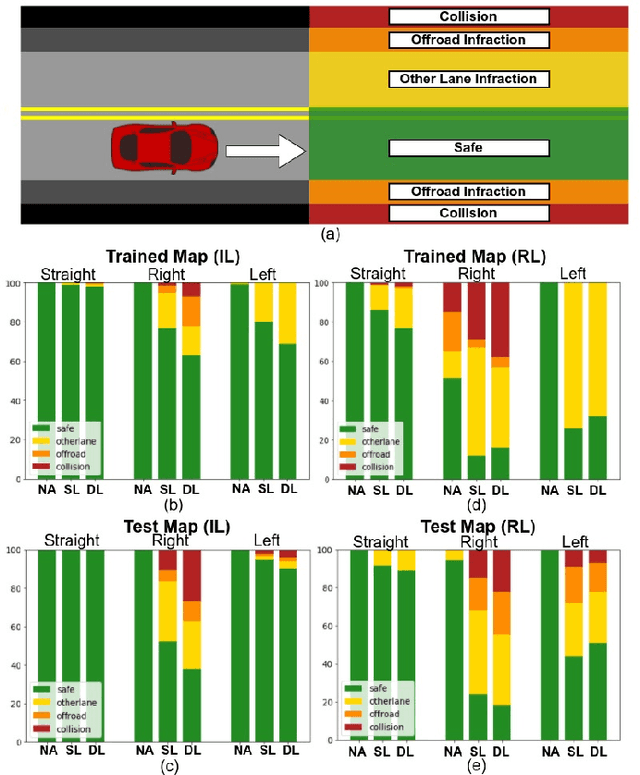

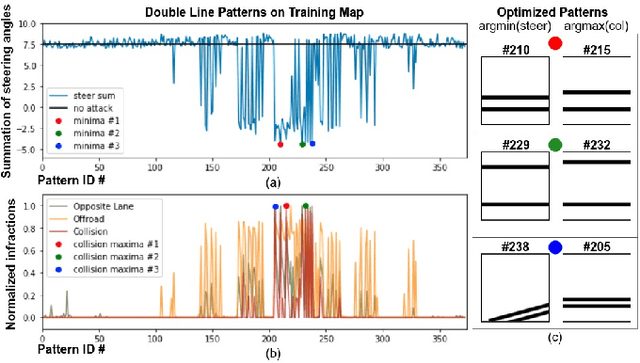

Abstract:Recent advances in machine learning, especially techniques such as deep neural networks, are enabling a range of emerging applications. One such example is autonomous driving, which often relies on deep learning for perception. However, deep learning-based perception has been shown to be vulnerable to a host of subtle adversarial manipulations of images. Nevertheless, the vast majority of such demonstrations focus on perception that is disembodied from end-to-end control. We present novel end-to-end attacks on autonomous driving in simulation, using simple physically realizable attacks: the painting of black lines on the road. These attacks target deep neural network models for end-to-end autonomous driving control. A systematic investigation shows that such attacks are easy to engineer, and we describe scenarios (e.g., right turns) in which they are highly effective. We define several objective functions that quantify the success of an attack and develop techniques based on Bayesian Optimization to efficiently traverse the search space of higher-dimensional attacks. Additionally, we define a novel class of hijacking attacks, where painted lines on the road cause the driverless car to follow a target path. Through the use of network deconvolution, we provide insights into the successful attacks, which appear to work by mimicking activations of entirely different scenarios. Our code is available at https://github.com/xz-group/AdverseDrive.

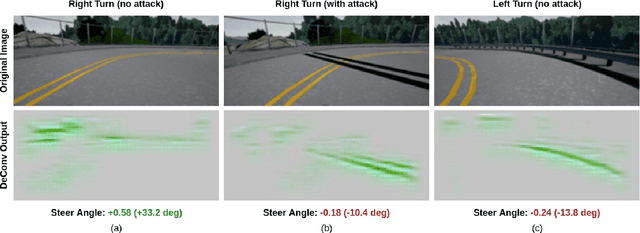

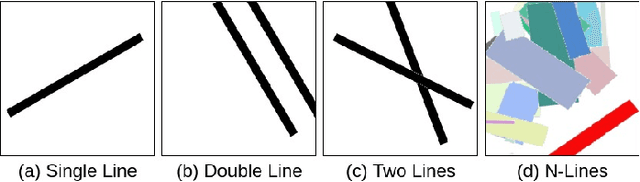

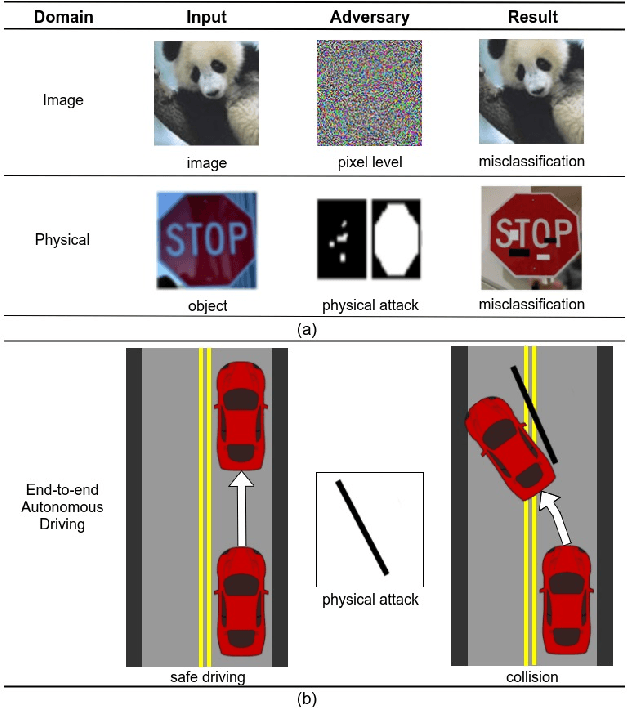

Simple Physical Adversarial Examples against End-to-End Autonomous Driving Models

Mar 12, 2019

Abstract:Recent advances in machine learning, especially techniques such as deep neural networks, are promoting a range of high-stakes applications, including autonomous driving, which often relies on deep learning for perception. While deep learning for perception has been shown to be vulnerable to a host of subtle adversarial manipulations of images, end-to-end demonstrations of successful attacks, which manipulate the physical environment and result in physical consequences, are scarce. Moreover, attacks typically involve carefully constructed adversarial examples at the level of pixels. We demonstrate the first end-to-end attacks on autonomous driving in simulation, using simple physically realizable attacks: the painting of black lines on the road. These attacks target deep neural network models for end-to-end autonomous driving control. A systematic investigation shows that such attacks are surprisingly easy to engineer, and we describe scenarios (e.g., right turns) in which they are highly effective, and others that are less vulnerable (e.g., driving straight). Further, we use network deconvolution to demonstrate that the attacks succeed by inducing activation patterns similar to entirely different scenarios used in training.
