Jiaxuan Lu

ASA: Activation Steering for Tool-Calling Domain Adaptation

Feb 04, 2026

Abstract: For real-world deployment of general-purpose LLM agents, the core challenge is often not tool use itself, but efficient domain adaptation under rapidly evolving toolsets, APIs, and protocols. Repeated LoRA or SFT across domains incurs exponentially growing training and maintenance costs, while prompt or schema methods are brittle under distribution shift and complex interfaces. We propose Activation Steering Adapter (ASA), a lightweight, inference-time, training-free mechanism that reads routing signals from intermediate activations and uses an ultra-light router to produce adaptive control strengths for precise domain alignment. Across multiple model scales and domains, ASA achieves LoRA-comparable adaptation with substantially lower overhead and strong cross-model transferability, making it practical for robust, scalable, and efficient multi-domain tool ecosystems with frequent interface churn.
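The abstract does not spell out how the router or the steering step is computed, so the following is only a minimal sketch of the general idea of activation steering with an adaptive strength. The names `router_strength` and `steer`, the logistic router, and the additive update are all assumptions made for illustration, not ASA's actual design.

```python
import math

def router_strength(hidden, weights, bias, max_strength=1.0):
    """Hypothetical ultra-light router: a single logistic unit that maps an
    intermediate activation vector to a control strength in (0, max_strength)."""
    score = sum(h * w for h, w in zip(hidden, weights)) + bias
    return max_strength / (1.0 + math.exp(-score))

def steer(hidden, direction, strength):
    """Assumed steering step: shift the activation along a fixed direction,
    scaled by the router's strength. No model weights are modified."""
    return [h + strength * d for h, d in zip(hidden, direction)]

# Toy usage with made-up numbers.
hidden = [1.0, 2.0]
alpha = router_strength([0.0, 0.0], weights=[1.0, 1.0], bias=0.0, max_strength=2.0)
steered = steer(hidden, direction=[0.5, -0.5], strength=alpha)
```

Because only the activation is shifted at inference time, a sketch like this needs no gradient updates, which is consistent with the training-free framing in the abstract.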

NeuroPareto: Calibrated Acquisition for Costly Many-Goal Search in Vast Parameter Spaces

Feb 03, 2026

Abstract: The pursuit of optimal trade-offs in high-dimensional search spaces under stringent computational constraints poses a fundamental challenge for contemporary multi-objective optimization. We develop NeuroPareto, a cohesive architecture that integrates rank-centric filtering, uncertainty disentanglement, and history-conditioned acquisition strategies to navigate complex objective landscapes. A calibrated Bayesian classifier estimates epistemic uncertainty across non-domination tiers, enabling rapid generation of high-quality candidates with minimal evaluation cost. Deep Gaussian Process surrogates further separate predictive uncertainty into reducible and irreducible components, providing refined predictive means and risk-aware signals for downstream selection. A lightweight acquisition network, trained online from historical hypervolume improvements, guides expensive evaluations toward regions balancing convergence and diversity. With hierarchical screening and amortized surrogate updates, the method maintains accuracy while keeping computational overhead low. Experiments on DTLZ and ZDT suites and a subsurface energy extraction task show that NeuroPareto consistently outperforms classifier-enhanced and surrogate-assisted baselines in Pareto proximity and hypervolume.
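Two quantities named in the abstract, non-domination tiers and hypervolume improvement, have standard definitions that can be illustrated independently of NeuroPareto itself. The sketch below assumes two objectives, both minimized, and a user-chosen reference point; the function names are illustrative and the classifier, surrogates, and acquisition network are not modelled.

```python
def dominates(a, b):
    """True if point a is no worse than b in every objective and strictly
    better in at least one (minimization)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def non_domination_tiers(points):
    """Peel off successive non-dominated fronts; returns lists of indices."""
    remaining = list(range(len(points)))
    tiers = []
    while remaining:
        front = [i for i in remaining
                 if not any(dominates(points[j], points[i]) for j in remaining if j != i)]
        tiers.append(front)
        remaining = [i for i in remaining if i not in front]
    return tiers

def hypervolume_2d(points, reference):
    """Area dominated by the points and bounded by the reference point
    (two objectives, minimization)."""
    front = sorted(p for p in points if not any(dominates(q, p) for q in points))
    area = 0.0
    prev_f2 = reference[1]
    for f1, f2 in front:
        if f1 >= reference[0] or f2 >= prev_f2:
            continue  # outside the reference box, or a duplicate point
        area += (reference[0] - f1) * (prev_f2 - f2)
        prev_f2 = f2
    return area

def hypervolume_improvement(points, candidate, reference):
    """Gain in hypervolume from adding one candidate to the current set."""
    return hypervolume_2d(list(points) + [candidate], reference) - hypervolume_2d(points, reference)

points = [(1, 3), (2, 2), (3, 1), (3, 3)]
tiers = non_domination_tiers(points)
area = hypervolume_2d(points, reference=(4, 4))
gain = hypervolume_improvement([(1, 3), (3, 1)], (2, 2), reference=(4, 4))
```

In this toy set the first three points form the first tier and `(3, 3)` falls into the second; a history of `gain` values like the one computed here is the kind of signal the abstract says the acquisition network is trained on.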

SwiftRepertoire: Few-Shot Immune-Signature Synthesis via Dynamic Kernel Codes

Feb 01, 2026

Abstract: Repertoire-level analysis of T cell receptors offers a biologically grounded signal for disease detection and immune monitoring, yet practical deployment is impeded by label sparsity, cohort heterogeneity, and the computational burden of adapting large encoders to new tasks. We introduce a framework that synthesizes compact task-specific parameterizations from a learned dictionary of prototypes conditioned on lightweight task descriptors derived from repertoire probes and pooled embedding statistics. This synthesis produces small adapter modules applied to a frozen pretrained backbone, enabling immediate adaptation to novel tasks with only a handful of support examples and without full model fine-tuning. The architecture preserves interpretability through motif-aware probes and a calibrated motif discovery pipeline that links predictive decisions to sequence-level signals. Together, these components yield a practical, sample-efficient, and interpretable pathway for translating repertoire-informed models into diverse clinical and research settings where labeled data are scarce and computational resources are constrained.

Beyond Static Tools: Test-Time Tool Evolution for Scientific Reasoning

Jan 12, 2026

Abstract: The central challenge of AI for Science is not reasoning alone, but the ability to create computational methods in an open-ended scientific world. Existing LLM-based agents rely on static, pre-defined tool libraries, a paradigm that fundamentally fails in scientific domains where tools are sparse, heterogeneous, and intrinsically incomplete. In this paper, we propose Test-Time Tool Evolution (TTE), a new paradigm that enables agents to synthesize, verify, and evolve executable tools during inference. By transforming tools from fixed resources into problem-driven artifacts, TTE overcomes the rigidity and long-tail limitations of static tool libraries. To facilitate rigorous evaluation, we introduce SciEvo, a benchmark comprising 1,590 scientific reasoning tasks supported by 925 automatically evolved tools. Extensive experiments show that TTE achieves state-of-the-art performance in both accuracy and tool efficiency, while enabling effective cross-domain adaptation of computational tools. The code and benchmark have been released at https://github.com/lujiaxuan0520/Test-Time-Tool-Evol.

SCP: Accelerating Discovery with a Global Web of Autonomous Scientific Agents

Dec 30, 2025

Abstract: We introduce SCP: the Science Context Protocol, an open-source standard designed to accelerate discovery by enabling a global network of autonomous scientific agents. SCP is built on two foundational pillars: (1) Unified Resource Integration: At its core, SCP provides a universal specification for describing and invoking scientific resources, spanning software tools, models, datasets, and physical instruments. This protocol-level standardization enables AI agents and applications to discover, call, and compose capabilities seamlessly across disparate platforms and institutional boundaries. (2) Orchestrated Experiment Lifecycle Management: SCP complements the protocol with a secure service architecture, which comprises a centralized SCP Hub and federated SCP Servers. This architecture manages the complete experiment lifecycle (registration, planning, execution, monitoring, and archival), enforces fine-grained authentication and authorization, and orchestrates traceable, end-to-end workflows that bridge computational and physical laboratories. Based on SCP, we have constructed a scientific discovery platform that offers researchers and agents a large-scale ecosystem of more than 1,600 tool resources. Across diverse use cases, SCP facilitates secure, large-scale collaboration between heterogeneous AI systems and human researchers while significantly reducing integration overhead and enhancing reproducibility. By standardizing scientific context and tool orchestration at the protocol level, SCP establishes essential infrastructure for scalable, multi-institution, agent-driven science.

HyDRA: A Hybrid Dual-Mode Network for Closed- and Open-Set RFFI with Optimized VMD

Jul 16, 2025

Abstract: Device recognition is vital for security in wireless communication systems, particularly for applications like access control. Radio Frequency Fingerprint Identification (RFFI) offers a non-cryptographic solution by exploiting hardware-induced signal distortions. This paper proposes HyDRA, a Hybrid Dual-mode RF Architecture that integrates an optimized Variational Mode Decomposition (VMD) with a novel architecture based on the fusion of Convolutional Neural Networks (CNNs), Transformers, and Mamba components, designed to support both closed-set and open-set classification tasks. The optimized VMD enhances preprocessing efficiency and classification accuracy by fixing center frequencies and using closed-form solutions. HyDRA employs the Transformer Dynamic Sequence Encoder (TDSE) for global dependency modeling and the Mamba Linear Flow Encoder (MLFE) for linear-complexity processing, adapting to varying conditions. Evaluation on public datasets demonstrates state-of-the-art (SOTA) accuracy in closed-set scenarios and robust performance in our proposed open-set classification method, effectively identifying unauthorized devices. Deployed on NVIDIA Jetson Xavier NX, HyDRA achieves millisecond-level inference speed with low power consumption, providing a practical solution for real-time wireless authentication in real-world environments.

Graph Mamba for Efficient Whole Slide Image Understanding

May 23, 2025

Abstract: Whole Slide Images (WSIs) in histopathology present a significant challenge for large-scale medical image analysis due to their high resolution, large size, and complex tile relationships. Existing Multiple Instance Learning (MIL) methods, such as Graph Neural Networks (GNNs) and Transformer-based models, face limitations in scalability and computational cost. To bridge this gap, we propose the WSI-GMamba framework, which synergistically combines the relational modeling strengths of GNNs with the efficiency of Mamba, the State Space Model designed for sequence learning. The proposed GMamba block integrates Message Passing, Graph Scanning & Flattening, and feature aggregation via a Bidirectional State Space Model (Bi-SSM), achieving Transformer-level performance with 7× fewer FLOPs. By leveraging the complementary strengths of lightweight GNNs and Mamba, the WSI-GMamba framework delivers a scalable solution for large-scale WSI analysis, offering both high accuracy and computational efficiency for slide-level classification.

PathOrchestra: A Comprehensive Foundation Model for Computational Pathology with Over 100 Diverse Clinical-Grade Tasks

Mar 31, 2025

Abstract: The complexity and variability inherent in high-resolution pathological images present significant challenges in computational pathology. While pathology foundation models leveraging AI have catalyzed transformative advancements, their development demands large-scale datasets, considerable storage capacity, and substantial computational resources. Furthermore, ensuring their clinical applicability and generalizability requires rigorous validation across a broad spectrum of clinical tasks. Here, we present PathOrchestra, a versatile pathology foundation model trained via self-supervised learning on a dataset comprising 300K pathological slides from 20 tissue and organ types across multiple centers. The model was rigorously evaluated on 112 clinical tasks using a combination of 61 private and 51 public datasets. These tasks encompass digital slide preprocessing, pan-cancer classification, lesion identification, multi-cancer subtype classification, biomarker assessment, gene expression prediction, and the generation of structured reports. PathOrchestra demonstrated exceptional performance across 27,755 WSIs and 9,415,729 ROIs, achieving over 0.950 accuracy in 47 tasks, including pan-cancer classification across various organs, lymphoma subtype diagnosis, and bladder cancer screening. Notably, it is the first model to generate structured reports for high-incidence colorectal cancer and diagnostically complex lymphoma, areas that are infrequently addressed by foundational models but hold immense clinical potential. Overall, PathOrchestra exemplifies the feasibility and efficacy of a large-scale, self-supervised pathology foundation model, validated across a broad range of clinical-grade tasks. Its high accuracy and reduced reliance on extensive data annotation underline its potential for clinical integration, offering a pathway toward more efficient and high-quality medical services.

MV-GMN: State Space Model for Multi-View Action Recognition

Jan 23, 2025

Abstract: Recent advancements in multi-view action recognition have largely relied on Transformer-based models. While effective and adaptable, these models often require substantial computational resources, especially in scenarios with multiple views and multiple temporal sequences. Addressing this limitation, this paper introduces the MV-GMN model, a state-space model specifically designed to efficiently aggregate multi-modal data (RGB and skeleton), multi-view perspectives, and multi-temporal information for action recognition with reduced computational complexity. The MV-GMN model employs an innovative Multi-View Graph Mamba network comprising a series of MV-GMN blocks. Each block includes a proposed Bidirectional State Space Block and a GCN module. The Bidirectional State Space Block introduces four scanning strategies, including view-prioritized and time-prioritized approaches. The GCN module leverages rule-based and KNN-based methods to construct the graph network, effectively integrating features from different viewpoints and temporal instances. Demonstrating its efficacy, MV-GMN outperforms the state of the art on several datasets, achieving notable accuracies of 97.3% and 96.7% on the NTU RGB+D 120 dataset in cross-subject and cross-view scenarios, respectively. MV-GMN also surpasses Transformer-based baselines while requiring only linear inference complexity, underscoring the model's ability to reduce computational load and enhance the scalability and applicability of multi-view action recognition technologies.
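The abstract mentions KNN-based graph construction without giving details. As a generic illustration of that technique only, the sketch below links each node to its k nearest neighbours by Euclidean distance; the feature vectors, the distance metric, and the name `knn_graph` are assumptions and may differ from what MV-GMN uses.

```python
import math

def knn_graph(features, k):
    """Return {node index: indices of its k nearest other nodes}, using
    Euclidean distance between feature vectors. Ties are broken by index."""
    graph = {}
    for i, fi in enumerate(features):
        others = [(math.dist(fi, fj), j) for j, fj in enumerate(features) if j != i]
        graph[i] = [j for _, j in sorted(others)[:k]]
    return graph

# Toy usage: three nodes on a line; each links to its single nearest neighbour.
edges = knn_graph([(0.0, 0.0), (1.0, 0.0), (5.0, 0.0)], k=1)
```

The resulting adjacency is directed: node 2 points to node 1, but node 1 points to node 0, so a symmetrization step would be needed if an undirected graph is wanted.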

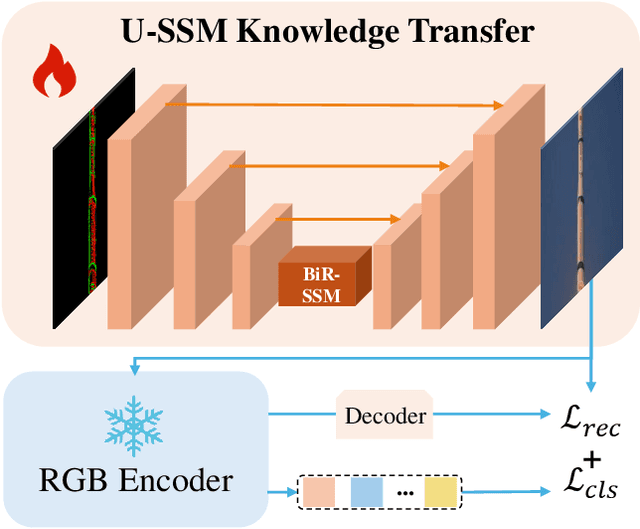

Event USKT: U-State Space Model in Knowledge Transfer for Event Cameras

Nov 22, 2024

Abstract: Event cameras, as an emerging imaging technology, offer distinct advantages over traditional RGB cameras, including reduced energy consumption and higher frame rates. However, the limited quantity of available event data presents a significant challenge, hindering their broader development. To alleviate this issue, we introduce a tailored U-shaped State Space Model Knowledge Transfer (USKT) framework for Event-to-RGB knowledge transfer. This framework generates inputs compatible with RGB frames, enabling event data to effectively reuse pre-trained RGB models and achieve competitive performance with minimal parameter tuning. Within the USKT architecture, we also propose a bidirectional reverse state space model. Unlike conventional bidirectional scanning mechanisms, the proposed Bidirectional Reverse State Space Model (BiR-SSM) leverages a shared weight strategy, which facilitates efficient modeling while conserving computational resources. In terms of effectiveness, integrating USKT with ResNet50 as the backbone improves model performance by 0.95%, 3.57%, and 2.9% on DVS128 Gesture, N-Caltech101, and CIFAR-10-DVS datasets, respectively, underscoring USKT's adaptability and effectiveness. The code will be made available upon acceptance.
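The abstract describes BiR-SSM only as a bidirectional scan with shared weights. One way to picture that idea, offered purely as an assumed toy and not as the paper's formulation, is a scalar linear recurrence run once over the sequence and once over its reversal with the same parameters, with the two outputs summed position by position. The names `ssm_scan` and `shared_bidirectional_scan` and the parameters `a`, `b`, `c` are hypothetical.

```python
def ssm_scan(xs, a, b, c):
    """Toy scalar state space recurrence: h_t = a*h_{t-1} + b*x_t, y_t = c*h_t."""
    h, ys = 0.0, []
    for x in xs:
        h = a * h + b * x
        ys.append(c * h)
    return ys

def shared_bidirectional_scan(xs, a, b, c):
    """Run the same recurrence (same a, b, c) forward and over the reversed
    sequence, re-align the backward output, and sum the two."""
    forward = ssm_scan(xs, a, b, c)
    backward = ssm_scan(xs[::-1], a, b, c)[::-1]
    return [f + r for f, r in zip(forward, backward)]

# Toy usage: an impulse at the first position.
out = shared_bidirectional_scan([1.0, 0.0, 0.0], a=0.5, b=1.0, c=1.0)
```

Sharing one parameter set across both directions means the second scan adds compute but no extra weights, which matches the resource-saving motivation stated in the abstract.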
