Jiangjun Peng

Hipandas: Hyperspectral Image Joint Denoising and Super-Resolution by Image Fusion with the Panchromatic Image

Dec 05, 2024

Abstract:Hyperspectral images (HSIs) are frequently noisy and of low resolution due to the constraints of imaging devices. Recently launched satellites can concurrently acquire HSIs and panchromatic (PAN) images, enabling the restoration of HSIs to generate clean and high-resolution imagery through fusing PAN images for denoising and super-resolution. However, previous studies treated these two tasks as independent processes, resulting in accumulated errors. This paper introduces \textbf{H}yperspectral \textbf{I}mage Joint \textbf{Pand}enoising \textbf{a}nd Pan\textbf{s}harpening (Hipandas), a novel learning paradigm that reconstructs high-resolution hyperspectral (HRHS) images from noisy low-resolution HSIs (LRHS) and high-resolution PAN images. The proposed zero-shot Hipandas framework consists of a guided denoising network, a guided super-resolution network, and a PAN reconstruction network, utilizing an HSI low-rank prior and a newly introduced detail-oriented low-rank prior. Because these networks are interconnected, training is difficult, so a two-stage training strategy is adopted. Experimental results on both simulated and real-world datasets indicate that the proposed method surpasses state-of-the-art algorithms, yielding more accurate and visually pleasing HRHS images.

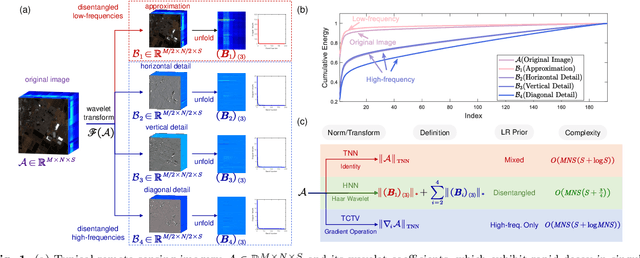

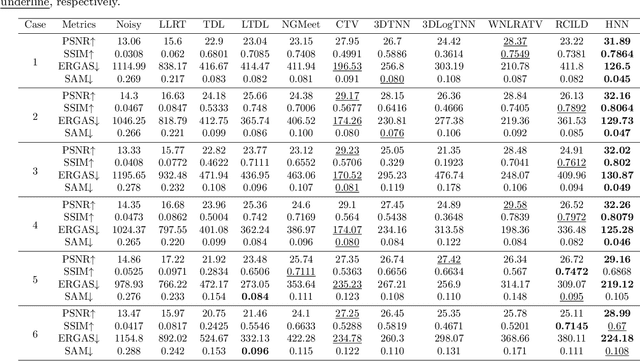

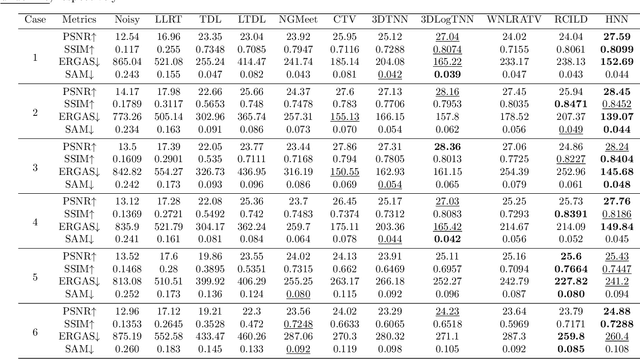

Haar Nuclear Norms with Applications to Remote Sensing Imagery Restoration

Jul 11, 2024

Abstract:Remote sensing image restoration aims to reconstruct missing or corrupted areas within images. To date, low-rank based models have garnered significant interest in this field. This paper proposes a novel low-rank regularization term, named the Haar nuclear norm (HNN), for efficient and effective remote sensing image restoration. It leverages the low-rank properties of wavelet coefficients derived from the 2-D frontal slice-wise Haar discrete wavelet transform, effectively modeling the low-rank prior for separated coarse-grained structure and fine-grained textures in the image. Experimental evaluations conducted on hyperspectral image inpainting, multi-temporal image cloud removal, and hyperspectral image denoising have revealed the HNN's potential. Typically, HNN achieves a performance improvement of 1-4 dB and a speedup of 10-28x compared to some state-of-the-art methods (e.g., tensor correlated total variation, and fully-connected tensor network) for inpainting tasks.
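As a rough illustration of the idea in this abstract, the sketch below applies a one-level 2-D Haar transform to every frontal slice of a tensor and sums the nuclear norms of the resulting sub-band unfoldings. The function names, the single decomposition level, the unfolding, and the equal weighting of sub-bands are all assumptions made for illustration; the paper's exact definition of HNN may differ.

```python
import numpy as np

def haar_dwt2(slice_2d):
    """One-level orthonormal 2-D Haar DWT; height and width must be even."""
    # Pairwise sums/differences along rows (vertical direction).
    lo = (slice_2d[0::2, :] + slice_2d[1::2, :]) / np.sqrt(2.0)
    hi = (slice_2d[0::2, :] - slice_2d[1::2, :]) / np.sqrt(2.0)

    def along_columns(x):
        s = (x[:, 0::2] + x[:, 1::2]) / np.sqrt(2.0)
        d = (x[:, 0::2] - x[:, 1::2]) / np.sqrt(2.0)
        return s, d

    ll, lh = along_columns(lo)  # coarse approximation + one detail band
    hl, hh = along_columns(hi)  # two further detail bands
    return ll, lh, hl, hh

def haar_nuclear_norm(tensor):
    """Illustrative HNN: sum of nuclear norms of the four Haar sub-bands.

    Assumption: each sub-band tensor (h/2, w/2, b) is unfolded to a
    (h/2 * w/2, b) matrix and all sub-bands are weighted equally.
    """
    h, w, b = tensor.shape
    subbands = [np.empty((h // 2, w // 2, b)) for _ in range(4)]
    for k in range(b):  # frontal slice-wise transform
        for out, coeff in zip(subbands, haar_dwt2(tensor[:, :, k])):
            out[:, :, k] = coeff
    return float(sum(np.linalg.norm(s.reshape(-1, b), ord="nuc") for s in subbands))
```

For a constant tensor only the coarse sub-band is non-zero and its unfolding has rank one, so the value reduces to the Frobenius norm of the input.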

Pan-denoising: Guided Hyperspectral Image Denoising via Weighted Represent Coefficient Total Variation

Jul 08, 2024

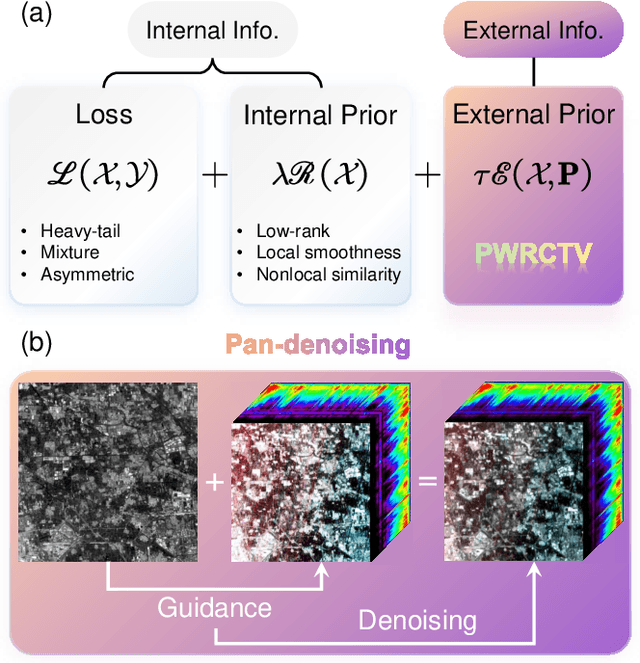

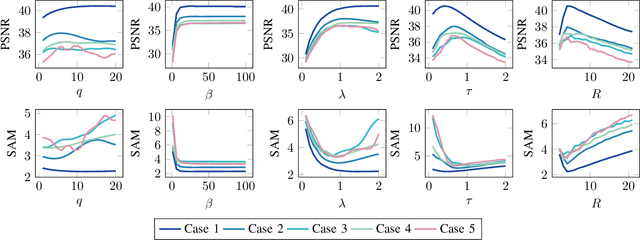

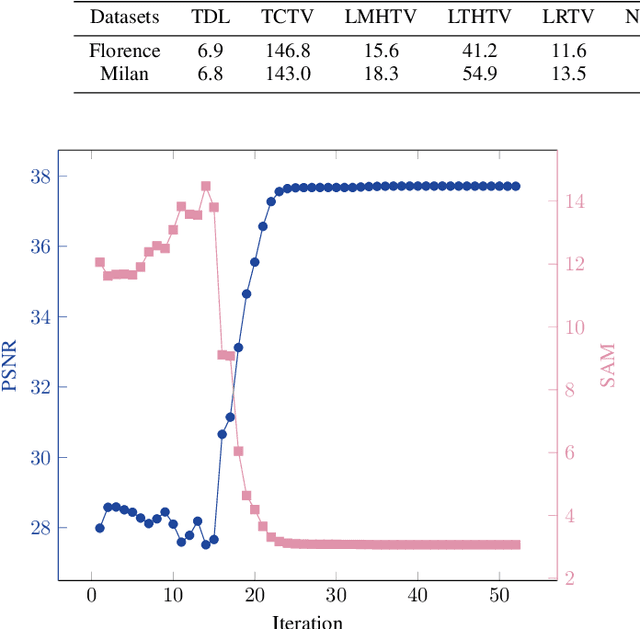

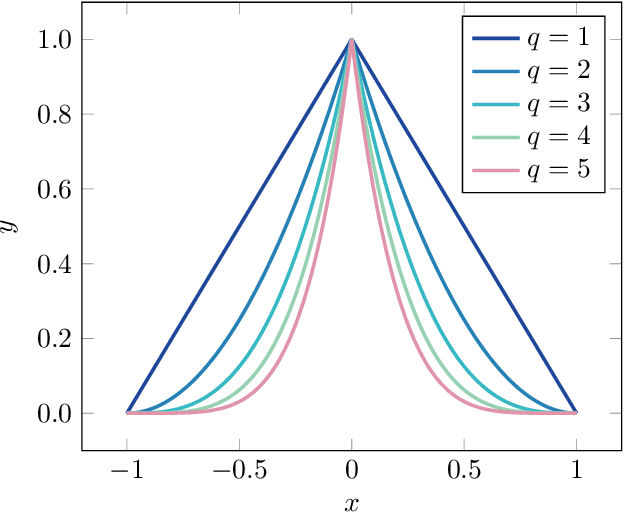

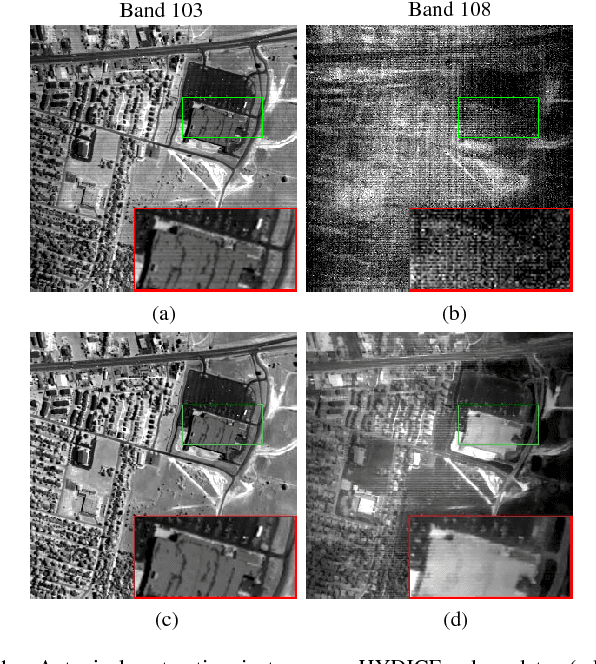

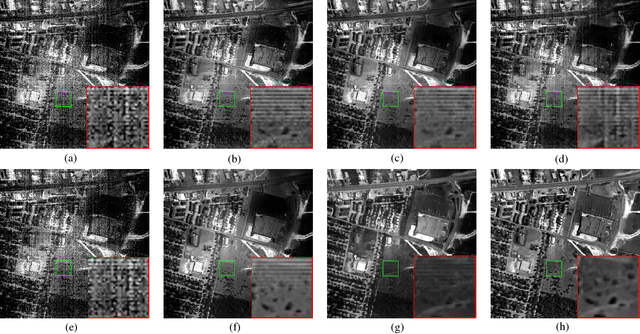

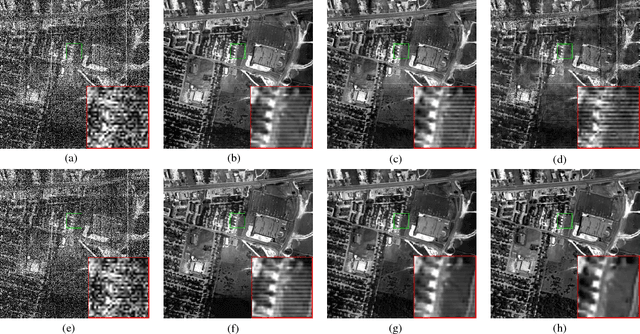

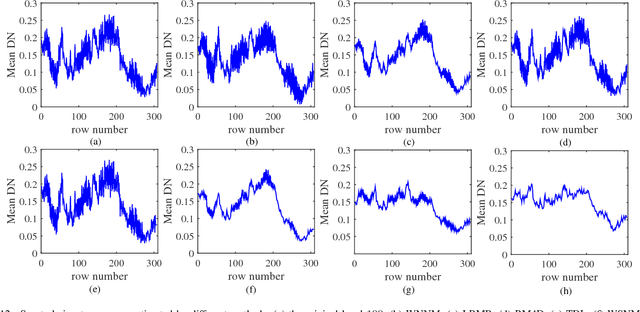

Abstract:This paper introduces a novel paradigm for hyperspectral image (HSI) denoising, which is termed \textit{pan-denoising}. In a given scene, panchromatic (PAN) images capture similar structures and textures to HSIs but with less noise. This enables the utilization of PAN images to guide the HSI denoising process. Consequently, pan-denoising, which incorporates an additional prior, has the potential to uncover underlying structures and details beyond the internal information modeling of traditional HSI denoising methods. However, the proper modeling of this additional prior poses a significant challenge. To alleviate this issue, the paper proposes a novel regularization term, Panchromatic Weighted Representation Coefficient Total Variation (PWRCTV). It employs the gradient maps of PAN images to automatically assign different weights of TV regularization for each pixel, resulting in larger weights for smooth areas and smaller weights for edges. This regularization forms the basis of a pan-denoising model, which is solved using the Alternating Direction Method of Multipliers. Extensive experiments on synthetic and real-world datasets demonstrate that PWRCTV outperforms several state-of-the-art methods in terms of metrics and visual quality. Furthermore, an HSI classification experiment confirms that PWRCTV, as a preprocessing method, can enhance the performance of downstream classification tasks. The code and data are available at https://github.com/shuangxu96/PWRCTV.
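The weighting idea described in this abstract can be sketched as follows: compute the PAN image's gradient maps, then give each pixel a TV weight that shrinks as the gradient magnitude grows. The inverse-gradient formula, the `eps` parameter, and the function names are assumptions for illustration only; the actual PWRCTV weights and the ADMM solver are defined in the paper and the linked repository.

```python
import numpy as np

def pan_tv_weights(pan, eps=1e-2):
    """Per-pixel TV weights from a PAN image (hypothetical formula).

    Large weights where the PAN image is smooth, small weights on edges.
    """
    # Forward differences; the last column/row is replicated so shapes match.
    gx = np.diff(pan, axis=1, append=pan[:, -1:])
    gy = np.diff(pan, axis=0, append=pan[-1:, :])
    wx = 1.0 / (np.abs(gx) + eps)
    wy = 1.0 / (np.abs(gy) + eps)
    # Normalize so the largest weight in each direction is 1.
    return wx / wx.max(), wy / wy.max()

def weighted_tv(img, wx, wy):
    """Anisotropic TV of a 2-D map with per-pixel weights."""
    gx = np.diff(img, axis=1, append=img[:, -1:])
    gy = np.diff(img, axis=0, append=img[-1:, :])
    return float(np.sum(wx * np.abs(gx)) + np.sum(wy * np.abs(gy)))
```

In a pan-denoising model of this kind, the weighted TV would be applied to each representation coefficient map rather than to the PAN image itself, so that edges visible in the PAN image are penalized less during smoothing.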

Neural Gradient Regularizer

Sep 13, 2023

Abstract:Owing to its significant success, the prior imposed on gradient maps has consistently been a subject of great interest in the field of image processing. Total variation (TV), one of the most representative regularizers, is known for its ability to capture the intrinsic sparsity prior underlying gradient maps. Nonetheless, TV and its variants often underestimate the gradient maps, leading to the weakening of edges and details whose gradients should not be zero in the original image (i.e., image structures that are not describable by sparse priors of gradient maps). Recently, total deep variation (TDV) has been introduced, assuming the sparsity of feature maps, which provides a flexible regularization learned from large-scale datasets for a specific task. However, TDV requires retraining the network whenever the image type or task changes, limiting its versatility. To alleviate this issue, in this paper, we propose a neural gradient regularizer (NGR) that expresses the gradient map as the output of a neural network. Unlike existing methods, NGR does not rely on any subjective sparsity or other prior assumptions on image gradient maps, thereby avoiding the underestimation of gradient maps. NGR is applicable to various image types and different image processing tasks, functioning in a zero-shot learning fashion, making it a versatile and plug-and-play regularizer. Extensive experimental results demonstrate the superior performance of NGR over state-of-the-art counterparts for a range of different tasks, further validating its effectiveness and versatility.

HIDFlowNet: A Flow-Based Deep Network for Hyperspectral Image Denoising

Jun 20, 2023

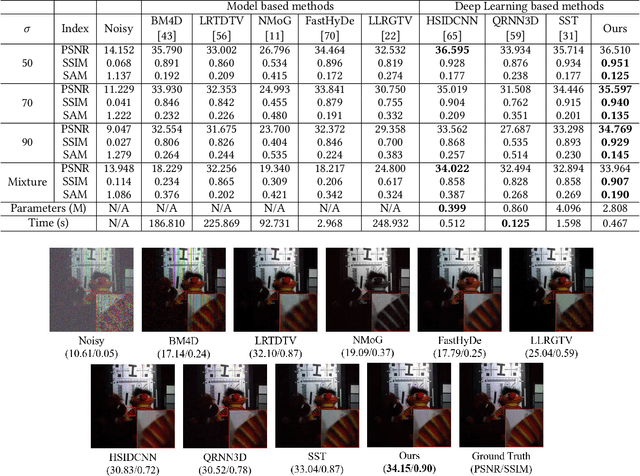

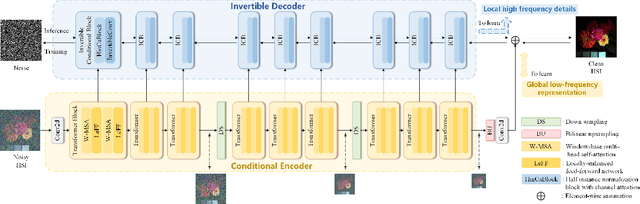

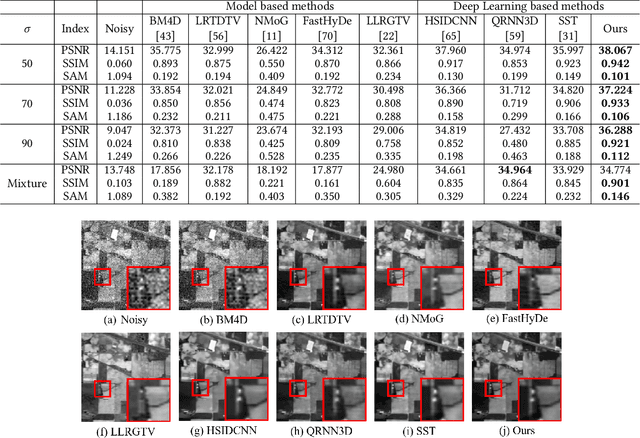

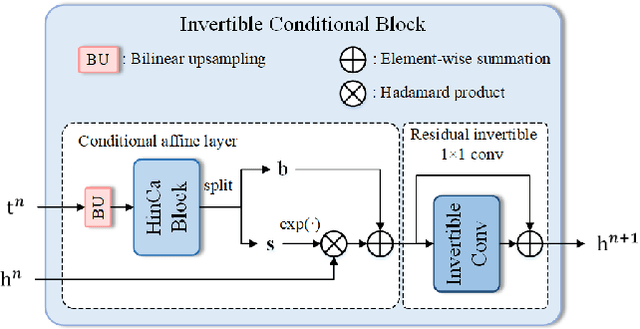

Abstract:Hyperspectral image (HSI) denoising is essentially ill-posed since a noisy HSI can be degraded from multiple clean HSIs. However, current deep learning-based approaches ignore this fact and restore the clean image with deterministic mapping (i.e., the network receives a noisy HSI and outputs a clean HSI). To alleviate this issue, this paper proposes a flow-based HSI denoising network (HIDFlowNet) to directly learn the conditional distribution of the clean HSI given the noisy HSI, so that diverse clean HSIs can be sampled from the conditional distribution. Overall, our HIDFlowNet is induced from the flow methodology and contains an invertible decoder and a conditional encoder, which can fully decouple the learning of low-frequency and high-frequency information of HSI. Specifically, the invertible decoder is built by stacking a succession of invertible conditional blocks (ICBs) to capture the local high-frequency details since the invertible network is information-lossless. The conditional encoder utilizes down-sampling operations to obtain low-resolution images and uses transformers to capture correlations over a long distance so that global low-frequency information can be effectively extracted. Extensive experimental results on simulated and real HSI datasets verify the superiority of our proposed HIDFlowNet compared with other state-of-the-art methods both quantitatively and visually.

Guaranteed Tensor Recovery Fused Low-rankness and Smoothness

Feb 04, 2023

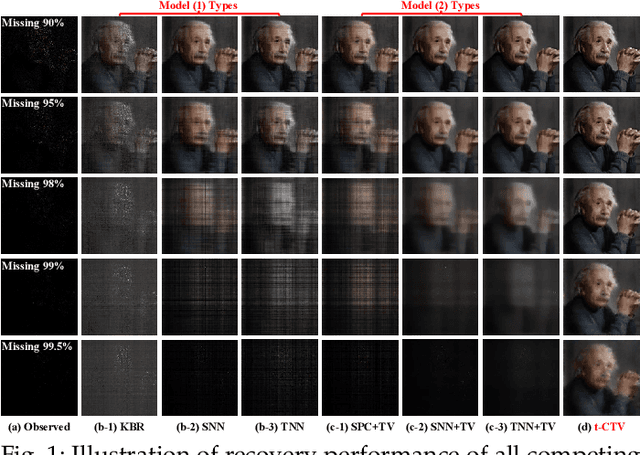

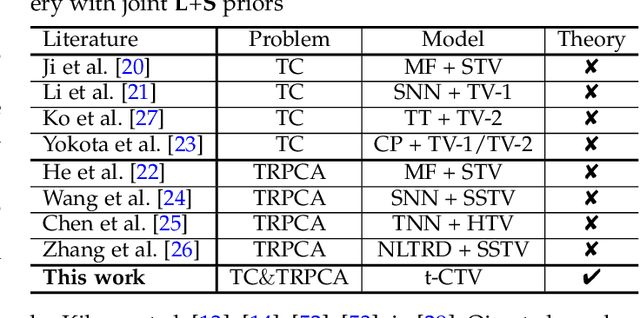

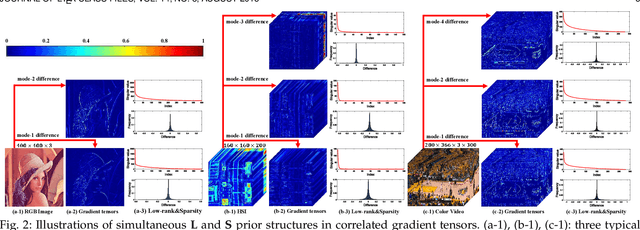

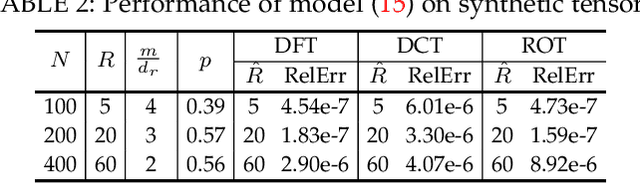

Abstract:The tensor data recovery task has attracted much research attention in recent years. Solving such an ill-posed problem generally requires exploring intrinsic prior structures underlying tensor data and formulating them as regularization terms that guide a sound estimate of the restored tensor. Recent research has made significant progress by adopting two insightful tensor priors, i.e., global low-rankness (L) and local smoothness (S) across different tensor modes, which are usually encoded as a sum of two separate regularization terms in the recovery models. However, unlike the primary theoretical developments on low-rank tensor recovery, these joint L+S models have no theoretical exact-recovery guarantees yet, making the methods less reliable in real practice. To address this crucial issue, in this work, we build a unique regularization term, which essentially encodes both L and S priors of a tensor simultaneously. In particular, by equipping the recovery models with this single regularizer, we can rigorously prove the exact recovery guarantees for two typical tensor recovery tasks, i.e., tensor completion (TC) and tensor robust principal component analysis (TRPCA). To the best of our knowledge, these should be the first exact-recovery results among all related L+S methods for tensor recovery. Significant recovery accuracy improvements over many other SOTA methods in several TC and TRPCA tasks with various kinds of visual tensor data are observed in extensive experiments. Typically, our method achieves a workable performance when the missing rate is extremely large, e.g., 99.5%, for the color image inpainting task, while all its peers totally fail in such a challenging case.

Fast Noise Removal in Hyperspectral Images via Representative Coefficient Total Variation

Nov 03, 2022

Abstract:Mining structural priors in data is a widely recognized technique for hyperspectral image (HSI) denoising tasks, whose typical approaches include model-based methods and data-based methods. The model-based methods have good generalization ability, but their runtime cannot meet the fast processing requirements of practical situations due to the large size of HSI data $ \mathbf{X} \in \mathbb{R}^{MN\times B}$. The data-based methods perform very fast on new test data once they have been trained. However, their generalization ability is often insufficient. In this paper, we propose a fast model-based HSI denoising approach. Specifically, we propose a novel regularizer named Representative Coefficient Total Variation (RCTV) to simultaneously characterize the low-rank and local smooth properties. The RCTV regularizer is proposed based on the observation that the representative coefficient matrix $\mathbf{U}\in\mathbb{R}^{MN\times R} (R\ll B)$ obtained by orthogonally transforming the original HSI $\mathbf{X}$ can inherit the strong local-smooth prior of $\mathbf{X}$. Since $R/B$ is very small, the HSI denoising model based on the RCTV regularizer has lower time complexity. Additionally, we find that the representative coefficient matrix $\mathbf{U}$ is robust to noise, and thus the RCTV regularizer can somewhat promote the robustness of the HSI denoising model. Extensive experiments on mixed noise removal demonstrate the superiority of the proposed method both in denoising performance and denoising speed compared with other state-of-the-art methods. Remarkably, the denoising speed of our proposed method outperforms all the model-based techniques and is comparable with the deep learning-based approaches.
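To make the notation concrete, the sketch below builds a representative coefficient matrix $\mathbf{U}$ from $\mathbf{X}$ with an orthonormal basis and evaluates an anisotropic TV on the $R$ coefficient maps. Taking the basis from a truncated SVD, the row-major reshaping of the $MN$ rows to an $M\times N$ grid, and the function names are assumptions for illustration; in the paper, $\mathbf{U}$ and the basis are variables of the denoising model rather than a fixed SVD.

```python
import numpy as np

def representative_coefficients(X, R):
    """Return U (MN x R) and an orthonormal basis V (B x R) with X ~= U @ V.T.

    Assumption: V is taken from the top-R right singular vectors of X.
    """
    _, _, vt = np.linalg.svd(X, full_matrices=False)
    V = vt[:R].T  # columns are orthonormal: V.T @ V = I
    U = X @ V     # orthogonal transform of the spectral dimension
    return U, V

def rctv(U, M, N):
    """Anisotropic spatial TV summed over the R coefficient maps.

    Assumption: the MN rows of U are ordered row-major over an M x N grid.
    """
    cube = U.reshape(M, N, -1)
    horizontal = np.abs(np.diff(cube, axis=1)).sum()
    vertical = np.abs(np.diff(cube, axis=0)).sum()
    return float(horizontal + vertical)
```

Because the TV is computed on only $R$ maps instead of $B$ bands, the cost of the smoothness term scales with $R/B$, which is the source of the speed-up the abstract describes.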

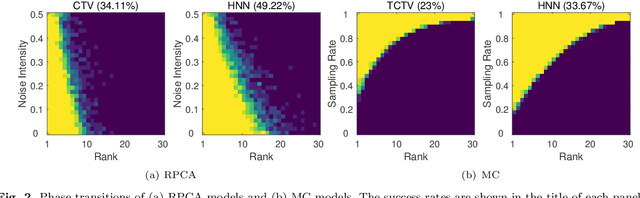

Exact Decomposition of Joint Low Rankness and Local Smoothness Plus Sparse Matrices

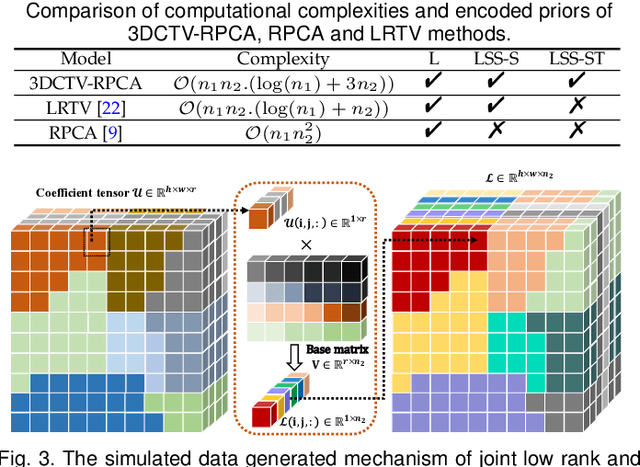

Jan 29, 2022

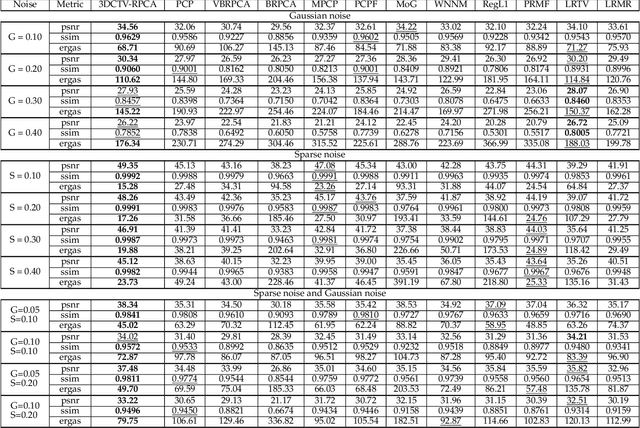

Abstract:It is known that the decomposition into low-rank and sparse matrices (\textbf{L+S} for short) can be achieved by several Robust PCA techniques. Besides low-rankness, local smoothness (\textbf{LSS}) is an essential prior for many real-world matrix data such as hyperspectral images and surveillance videos, so that such matrices have low-rankness and local smoothness properties at the same time. This poses an interesting question: Can we make a matrix decomposition in terms of \textbf{L\&LSS +S } form exactly? To address this issue, we propose in this paper a new RPCA model based on three-dimensional correlated total variation regularization (3DCTV-RPCA for short) by fully exploiting and encoding the prior expression underlying such joint low-rank and local smoothness matrices. Specifically, using a modification of the golfing scheme, we prove that under some mild assumptions, the proposed 3DCTV-RPCA model can decompose both components exactly, which should be the first theoretical guarantee among all such related methods combining low-rankness and local smoothness. In addition, by utilizing the Fast Fourier Transform (FFT), we propose an efficient ADMM algorithm with a solid convergence guarantee for solving the resulting optimization problem. Finally, a series of experiments on both simulations and real applications are carried out to demonstrate the general validity of the proposed 3DCTV-RPCA model.

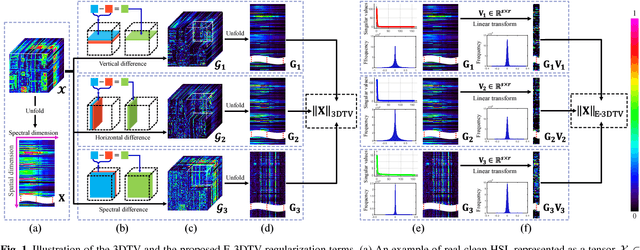

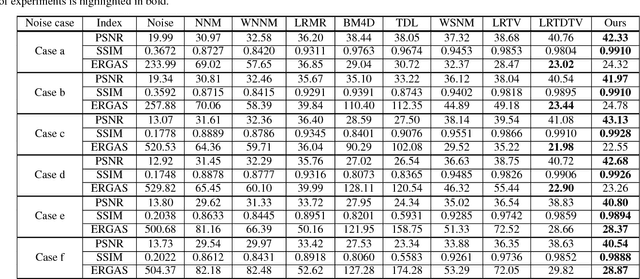

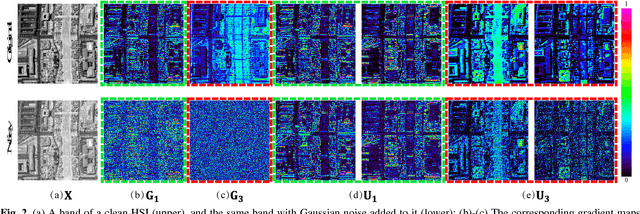

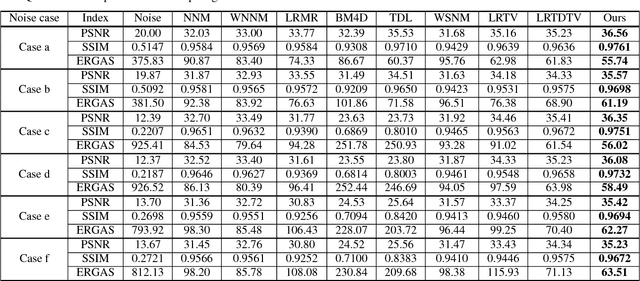

Enhanced 3DTV Regularization and Its Applications on Hyper-spectral Image Denoising and Compressed Sensing

Sep 18, 2018

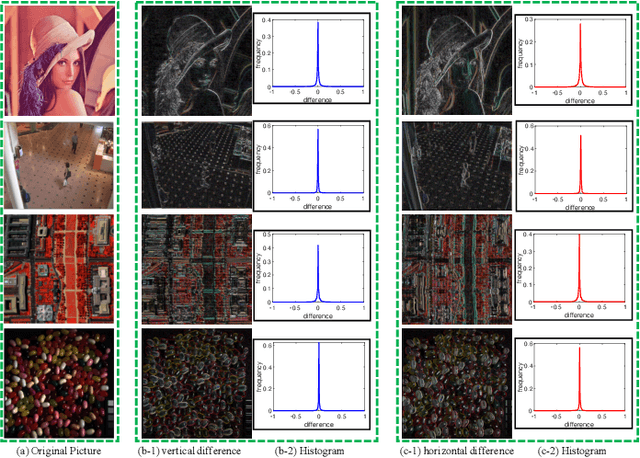

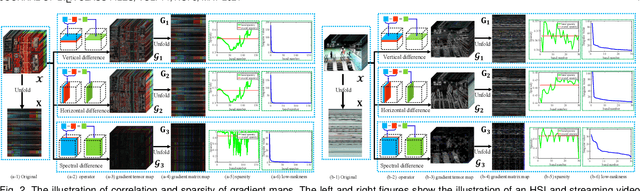

Abstract:The 3-D total variation (3DTV) is a powerful regularization term, which encodes the local smoothness prior structure underlying a hyper-spectral image (HSI), for general HSI processing tasks. This term is calculated by assuming identical and independent sparsity structures on all bands of gradient maps calculated along spatial and spectral HSI modes. This assumption, however, deviates considerably from real cases, where the gradient maps generally have different yet correlated sparsity structures across their bands. Such deviation tends to hamper the performance of methods that adopt this prior term. To address this issue, this paper proposes an enhanced 3DTV (E-3DTV) regularization term beyond conventional 3DTV. Instead of imposing sparsity on the gradient maps themselves, the new term calculates sparsity on the subspace bases of the gradient maps along their bands, which naturally encodes the correlation and difference across these bands and more faithfully reflects the intrinsic configuration of an HSI. The E-3DTV term can easily replace the previous 3DTV term and be embedded into an HSI processing model to improve its performance. The superiority of the proposed methods is substantiated by extensive experiments on two typical related tasks, HSI denoising and compressed sensing, in comparison with state-of-the-art methods designed for both tasks.

Hyperspectral Image Restoration via Total Variation Regularized Low-rank Tensor Decomposition

Jul 08, 2017

Abstract:Hyperspectral images (HSIs) are often corrupted by a mixture of several types of noise during the acquisition process, e.g., Gaussian noise, impulse noise, dead lines, stripes, and many others. Such complex noise could degrade the quality of the acquired HSIs, limiting the precision of the subsequent processing. In this paper, we present a novel tensor-based HSI restoration approach by fully identifying the intrinsic structures of the clean HSI part and the mixed noise part respectively. Specifically, for the clean HSI part, we use tensor Tucker decomposition to describe the global correlation among all bands, and an anisotropic spatial-spectral total variation (SSTV) regularization to characterize the piecewise smooth structure in both spatial and spectral domains. For the mixed noise part, we adopt the $\ell_1$ norm regularization to detect the sparse noise, including stripes, impulse noise, and dead pixels. Although TV regularization is able to remove Gaussian noise, the Frobenius norm term is further used to model heavy Gaussian noise for some real-world scenarios. Then, we develop an efficient algorithm for solving the resulting optimization problem by using the augmented Lagrange multiplier (ALM) method. Finally, extensive experiments on simulated and real-world noisy HSIs are carried out to demonstrate the superiority of the proposed method over the existing state-of-the-art ones.
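One way to write a model that matches this description is given below. The symbols are assumptions introduced here for illustration: $\mathcal{Y}$ is the observed HSI, $\mathcal{X}$ the clean HSI, $\mathcal{S}$ the sparse noise, $\mathcal{N}$ the Gaussian noise, $\mathcal{C}$ the Tucker core with orthogonal factors $\mathbf{U}_i$, and $\tau$, $\lambda$, $\beta$ trade-off parameters. The paper's exact formulation may differ.

$$
\min_{\mathcal{X},\,\mathcal{S},\,\mathcal{N}} \; \tau \|\mathcal{X}\|_{\mathrm{SSTV}} + \lambda \|\mathcal{S}\|_1 + \beta \|\mathcal{N}\|_F^2
\quad \text{s.t.} \quad \mathcal{Y} = \mathcal{X} + \mathcal{S} + \mathcal{N}, \;\;
\mathcal{X} = \mathcal{C} \times_1 \mathbf{U}_1 \times_2 \mathbf{U}_2 \times_3 \mathbf{U}_3, \;\;
\mathbf{U}_i^{\top}\mathbf{U}_i = \mathbf{I}.
$$

Each term corresponds to one sentence of the abstract: the Tucker constraint captures the global correlation among bands, the SSTV term the piecewise smoothness, the $\ell_1$ term the sparse noise, and the Frobenius term the heavy Gaussian noise.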
