Jiaming Qi

Think Proprioceptively: Embodied Visual Reasoning for VLA Manipulation

Feb 06, 2026

Abstract:Vision-language-action (VLA) models typically inject proprioception only as a late conditioning signal, which prevents robot state from shaping instruction understanding and from influencing which visual tokens are attended throughout the policy. We introduce ThinkProprio, which converts proprioception into a sequence of text tokens in the VLM embedding space and fuses them with the task instruction at the input. This early fusion lets embodied state participate in subsequent visual reasoning and token selection, biasing computation toward action-critical evidence while suppressing redundant visual tokens. In a systematic ablation over proprioception encoding, state entry point, and action-head conditioning, we find that text tokenization is more effective than learned projectors, and that retaining roughly 15% of visual tokens can match the performance of using the full token set. Across CALVIN, LIBERO, and real-world manipulation, ThinkProprio matches or improves over strong baselines while reducing end-to-end inference latency by over 50%.
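The two ideas in this abstract, serializing robot state as text next to the instruction and keeping only a small share of visual tokens, can be illustrated with a minimal sketch. The text format, the function names `proprio_to_text` and `keep_top_visual_tokens`, and the per-token relevance scores are all assumptions made for illustration; the abstract does not specify how ThinkProprio tokenizes state or scores tokens.

```python
import numpy as np

def proprio_to_text(joint_positions, gripper_open, decimals=2):
    """Serialize a robot state into plain text (hypothetical format, not the paper's)."""
    joints = " ".join(f"{q:.{decimals}f}" for q in joint_positions)
    grip = "open" if gripper_open else "closed"
    return f"joints: {joints} gripper: {grip}"

def keep_top_visual_tokens(scores, keep_ratio=0.15):
    """Return the sorted indices of the highest-scoring visual tokens."""
    scores = np.asarray(scores, dtype=float)
    k = max(1, int(round(keep_ratio * scores.size)))
    top = np.argpartition(-scores, k - 1)[:k]  # k largest scores, in arbitrary order
    return np.sort(top)

# Early fusion: the state text is appended to the instruction before the model sees it.
state_text = proprio_to_text([0.1, -0.52, 1.0], gripper_open=True)
prompt = "pick up the red block | " + state_text

# Placeholder relevance scores for 20 visual tokens; about 15% of them are retained.
kept = keep_top_visual_tokens(np.linspace(0.0, 1.0, 20), keep_ratio=0.15)
```

In a real policy the scores would come from the model itself (for example, attention from the fused instruction and state tokens to the image tokens); here they are placeholder numbers.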

Failure-Aware Bimanual Teleoperation via Conservative Value Guided Assistance

Feb 01, 2026

Abstract:Teleoperation of high-precision manipulation is constrained by tight success tolerances and complex contact dynamics, which make impending failures difficult for human operators to anticipate under partial observability. This paper proposes a value-guided, failure-aware framework for bimanual teleoperation that provides compliant haptic assistance while preserving continuous human authority. The framework is trained entirely from heterogeneous offline teleoperation data containing both successful and failed executions. Task feasibility is modeled as a conservative success score learned via Conservative Value Learning, yielding a risk-sensitive estimate that remains reliable under distribution shift. During online operation, the learned success score regulates the level of assistance, while a learned actor provides a corrective motion direction. Both are integrated through a joint-space impedance interface on the master side, yielding continuous guidance that steers the operator away from failure-prone actions without overriding intent. Experimental results on contact-rich manipulation tasks demonstrate improved task success rates and reduced operator workload compared to conventional teleoperation and shared-autonomy baselines, indicating that conservative value learning provides an effective mechanism for embedding failure awareness into bilateral teleoperation. Experimental videos are available at https://www.youtube.com/watch?v=XDTsvzEkDRE
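One way to picture how a success score could regulate assistance while an actor supplies the direction is a spring-like joint torque whose stiffness grows as the predicted success drops. This is only a sketch under assumptions: the linear risk mapping, the name `assistance_torque`, and the parameters `max_stiffness` and `step` are hypothetical and are not taken from the paper.

```python
import numpy as np

def assistance_torque(success_score, direction, max_stiffness=5.0, step=0.05):
    """Guidance torque toward a small corrective joint offset (hypothetical mapping).

    success_score: predicted success in [0, 1]; lower values mean more assistance.
    direction:     corrective joint-space direction, e.g. from a learned actor.
    """
    risk = 1.0 - float(np.clip(success_score, 0.0, 1.0))
    direction = np.asarray(direction, dtype=float)
    norm = np.linalg.norm(direction)
    if norm < 1e-9:
        return np.zeros_like(direction)   # no usable direction, so no assistance
    offset = step * direction / norm      # small displacement along the corrective direction
    return max_stiffness * risk * offset  # spring torque, scaled by estimated risk

safe = assistance_torque(1.0, [3.0, 4.0])   # confident success: zero torque
risky = assistance_torque(0.0, [3.0, 4.0])  # predicted failure: full-strength guidance
```

Because the torque is bounded by `max_stiffness * step`, the operator can always overpower it, which matches the stated goal of guiding without overriding intent.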

BagIt! An Adaptive Dual-Arm Manipulation of Fabric Bags for Object Bagging

Sep 11, 2025

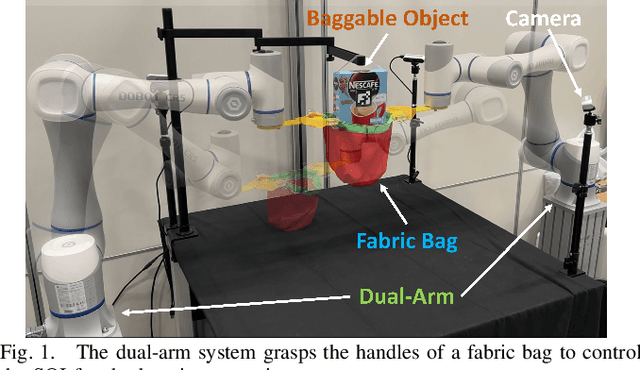

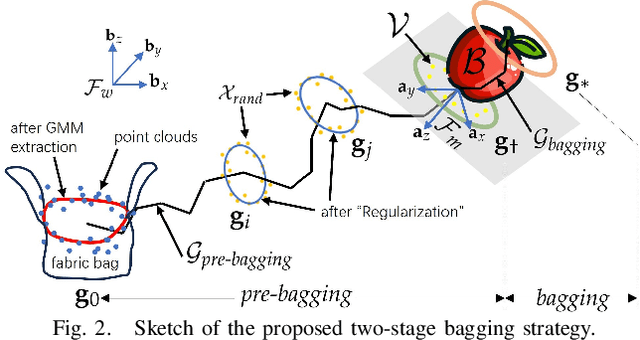

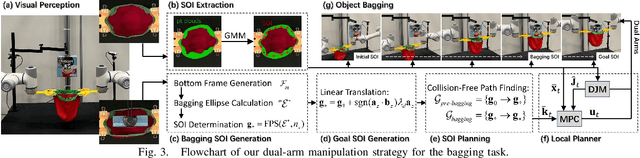

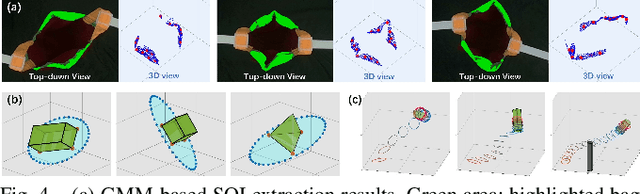

Abstract:Bagging tasks, commonly found in industrial scenarios, are challenging because deformable bags behave in complicated and unpredictable ways. This paper presents an automated bagging system built on the proposed adaptive Structure-of-Interest (SOI) manipulation strategy for dual robot arms. The system dynamically adjusts its actions based on real-time visual feedback, removing the need for pre-existing knowledge of bag properties. Our framework incorporates Gaussian Mixture Models (GMM) for estimating SOI states, optimization techniques for SOI generation, motion planning via Constrained Bidirectional Rapidly-exploring Random Tree (CBiRRT), and dual-arm coordination using Model Predictive Control (MPC). Extensive experiments validate the capability of our system to perform precise and robust bagging across various objects, showcasing its adaptability. This work offers a new solution for robotic deformable object manipulation (DOM), particularly in automated bagging tasks. Video of this work is available at https://youtu.be/6JWjCOeTGiQ.

Revolutionizing Packaging: A Robotic Bagging Pipeline with Constraint-aware Structure-of-Interest Planning

Mar 15, 2024

Abstract:Bagging operations, common in packaging and assisted living applications, are challenging due to a bag's complex deformable properties. To address this, we develop a robotic system for automated bagging tasks using an adaptive structure-of-interest (SOI) manipulation approach. Our method relies on real-time visual feedback to dynamically adjust manipulation without requiring prior knowledge of bag materials or dynamics. We present a robust pipeline featuring state estimation for SOIs using Gaussian Mixture Models (GMM), SOI generation via optimization-based bagging techniques, SOI motion planning with Constrained Bidirectional Rapidly-exploring Random Trees (CBiRRT), and dual-arm manipulation coordinated by Model Predictive Control (MPC). Experiments demonstrate the system's ability to achieve precise, stable bagging of various objects using adaptive coordination of the manipulators. The proposed framework advances the capability of dual-arm robots to perform more sophisticated automation of common tasks involving interactions with deformable objects.

Bimanual Deformable Bag Manipulation Using a Structure-of-Interest Based Latent Dynamics Model

Jan 21, 2024

Abstract:The manipulation of deformable objects by robotic systems presents a significant challenge due to their complex and infinite-dimensional configuration spaces. This paper introduces a novel approach to Deformable Object Manipulation (DOM) by emphasizing the identification and manipulation of Structures of Interest (SOIs) in deformable fabric bags. We propose a bimanual manipulation framework that leverages a Graph Neural Network (GNN)-based latent dynamics model to succinctly represent and predict the behavior of these SOIs. Our approach involves constructing a graph representation from partial point cloud data of the object and learning the latent dynamics model that effectively captures the essential deformations of the fabric bag within a reduced computational space. By integrating this latent dynamics model with Model Predictive Control (MPC), we empower robotic manipulators to perform precise and stable manipulation tasks focused on the SOIs. We have validated our framework through various empirical experiments demonstrating its efficacy in bimanual manipulation of fabric bags. Our contributions not only address the complexities inherent in DOM but also provide new perspectives and methodologies for enhancing robotic interactions with deformable objects by concentrating on their critical structural elements. Experimental videos can be obtained from https://sites.google.com/view/bagbot.
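As a rough illustration of building a graph from point cloud data, the sketch below connects each point to its nearest neighbors. The k-nearest-neighbor rule and the name `knn_edges` are assumptions; the abstract does not say how the paper constructs its graph.

```python
import numpy as np

def knn_edges(points, k=1):
    """Directed edges (i, j) from each point i to its k nearest neighbors j."""
    pts = np.asarray(points, dtype=float)
    diff = pts[:, None, :] - pts[None, :, :]   # pairwise coordinate differences
    dist = np.linalg.norm(diff, axis=-1)       # pairwise Euclidean distances
    np.fill_diagonal(dist, np.inf)             # exclude self-loops
    neighbors = np.argsort(dist, axis=1)[:, :k]
    return [(i, int(j)) for i in range(len(pts)) for j in neighbors[i]]

# Four points on a line; each is linked to its single closest neighbor.
cloud = [[0, 0, 0], [1, 0, 0], [3, 0, 0], [7, 0, 0]]
edges = knn_edges(cloud, k=1)
```

The resulting edge list is the kind of input a graph neural network layer would take alongside per-node features such as the point coordinates.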

Integrating Visual Foundation Models for Enhanced Robot Manipulation and Motion Planning: A Layered Approach

Sep 20, 2023

Abstract:This paper presents a novel layered framework that integrates visual foundation models to improve robot manipulation tasks and motion planning. The framework consists of five layers: Perception, Cognition, Planning, Execution, and Learning. Using visual foundation models, we enhance the robot's perception of its environment, enabling more efficient task understanding and accurate motion planning. This approach allows for real-time adjustments and continual learning, leading to significant improvements in task execution. Experimental results demonstrate the effectiveness of the proposed framework in various robot manipulation tasks and motion planning scenarios, highlighting its potential for practical deployment in dynamic environments.

A Novel Uncalibrated Visual Servoing Controller Based on Model-Free Adaptive Control Method with Neural Network

Nov 21, 2022

Abstract:Nowadays, with the continuous expansion of application scenarios of robotic arms, there are more and more scenarios where nonspecialists come into contact with robotic arms. However, in robotic arm visual servoing, traditional Position-based Visual Servoing (PBVS) requires a lot of calibration work, which is challenging for nonspecialists. To address this, Uncalibrated Image-Based Visual Servoing (UIBVS) frees people from tedious calibration work. This work applies a model-free adaptive control (MFAC) method, in which the controller parameters are updated in real time, improving the ability to suppress changes in the system and environment. An artificial neural network is used in the design of the controller and of the estimator for the hand-eye relationship. The neural network is updated using the system's input and output information within the MFAC method. Inspired by the "predictive model" and "receding-horizon" concepts in Model Predictive Control (MPC), and introducing similar structures into our algorithm, we realize uncalibrated visual servoing for both stationary targets and moving trajectories. Simulated experiments with a robotic manipulator will be carried out to validate the proposed algorithm.
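For readers unfamiliar with model-free adaptive control, the sketch below shows a common textbook-style form: the hand-eye Jacobian is estimated online from input/output increments with a projection-style update, and the control input is adjusted incrementally from the feature error. This is not the paper's neural-network estimator; the function names and the gains `eta`, `mu`, `rho`, and `lam` are illustrative assumptions.

```python
import numpy as np

def update_jacobian(phi, du, dy, eta=1.0, mu=1e-6):
    """Projection-style update of the Jacobian estimate from one input/output increment."""
    du = np.asarray(du, dtype=float)
    dy = np.asarray(dy, dtype=float)
    residual = dy - phi @ du  # part of dy that the current estimate fails to explain
    return phi + eta * np.outer(residual, du) / (mu + du @ du)

def control_step(u_prev, phi, error, rho=0.5, lam=0.1):
    """One incremental control update driven by the error y_target - y."""
    error = np.asarray(error, dtype=float)
    return u_prev + rho * (phi.T @ error) / (lam + np.sum(phi ** 2))

# One estimator step: with eta=1 and mu=0 the new estimate reproduces the observed increment.
phi0 = np.eye(2)
du = np.array([1.0, 2.0])
dy = np.array([3.0, -1.0])
phi1 = update_jacobian(phi0, du, dy, eta=1.0, mu=0.0)

# One controller step from rest with an identity Jacobian estimate.
u1 = control_step(np.zeros(2), np.eye(2), error=[1.0, 0.0], rho=0.5, lam=0.0)
```

No camera calibration appears anywhere in these updates: only measured increments of the control input and of the image features are used, which is the sense in which the scheme is uncalibrated.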

Adaptive Finite-Time Model Estimation and Control for Manipulator Visual Servoing using Sliding Mode Control and Neural Networks

Nov 21, 2022

Abstract:Image-based visual servoing without a system model is challenging, since it is hard to obtain an accurate estimate of the hand-eye relationship from visual measurements alone. Yet the accuracy of the estimated hand-eye relationship, expressed in a local linear form with a Jacobian matrix, is important to the whole system's performance. In this article, we propose a finite-time controller together with a Jacobian matrix estimator that combines online and offline methods. The local linear formulation is derived first. Then, we use the combined online and offline method to improve the estimation of the highly coupled and nonlinear hand-eye relationship with data collected via a depth camera. A neural network (NN) is pre-trained to give a relatively reasonable initial estimate of the Jacobian matrix. Then, an online updating method is carried out to refine the offline-trained NN for a more accurate estimate. Moreover, a sliding mode control algorithm is introduced to realize a finite-time controller. Compared with previous methods, our algorithm converges faster. Compared with other data-driven estimators, the proposed estimator gives a more accurate initial estimate and tracks time-varying Jacobian matrices better. The proposed scheme combines the neural network with finite-time control, which yields faster convergence than exponentially convergent schemes. Another main feature of our algorithm is that the state signals of the system are proved to be semi-global practical finite-time stable. Several experiments are carried out to validate the proposed algorithm's performance.

Model Predictive Manipulation of Compliant Objects with Multi-Objective Optimizer and Adversarial Network for Occlusion Compensation

May 20, 2022

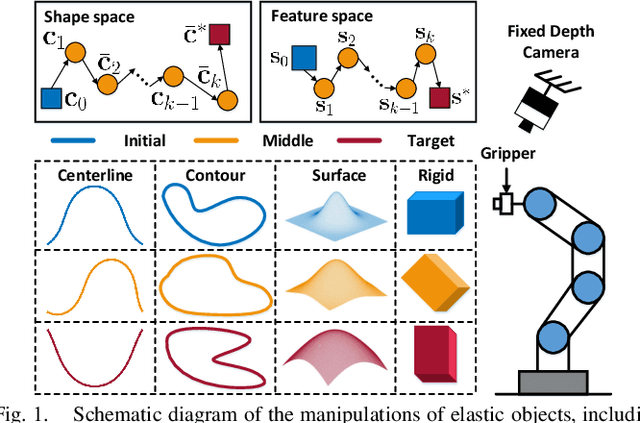

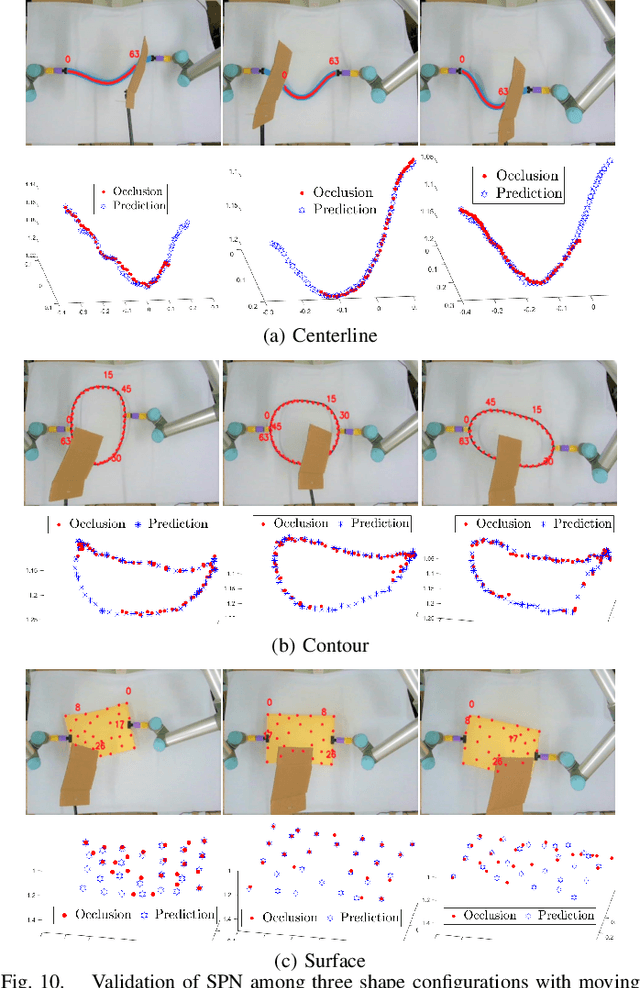

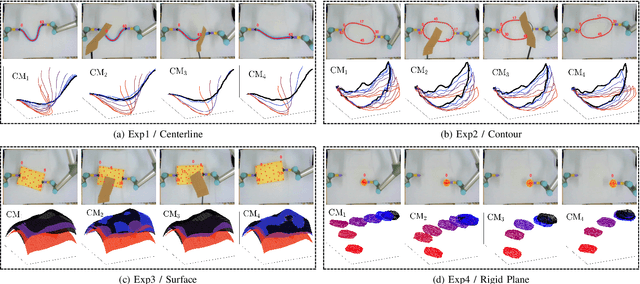

Abstract:The robotic manipulation of compliant objects is currently one of the most active problems in robotics due to its potential to automate many important applications. Despite the progress achieved by the robotics community in recent years, the 3D shaping of these types of materials remains an open research problem. In this paper, we propose a new vision-based controller to automatically regulate the shape of compliant objects with robotic arms. Our method uses an efficient online surface/curve fitting algorithm that quantifies the object's geometry with a compact vector of features; this feedback-like vector makes it possible to establish an explicit shape servo-loop. To coordinate the motion of the robot with the computed shape features, we propose a receding-time estimator that approximates the system's sensorimotor model while satisfying various performance criteria. A deep adversarial network is developed to robustly compensate for visual occlusions in the camera's field of view, which makes it possible to guide the shaping task even with partial observations of the object. Model predictive control is utilized to compute the robot's shaping motions subject to workspace and saturation constraints. A detailed experimental study is presented to validate the effectiveness of the proposed control framework.

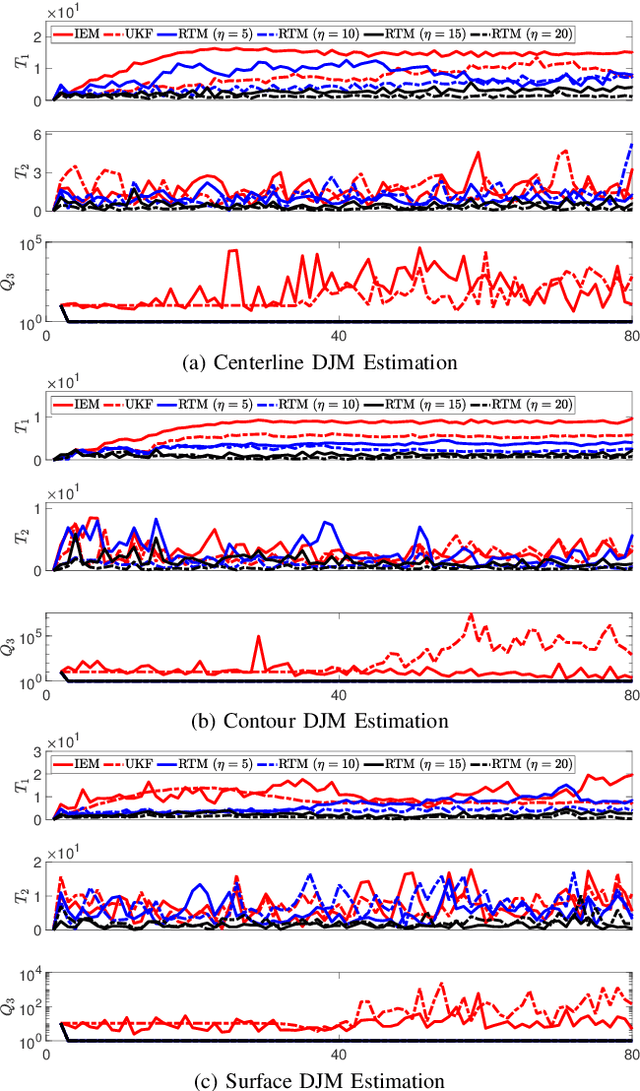

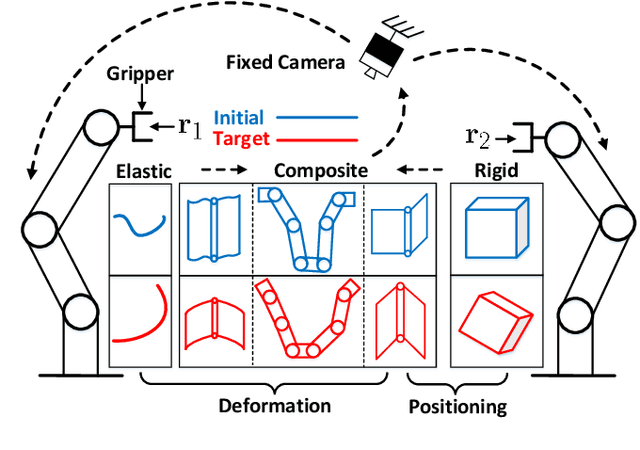

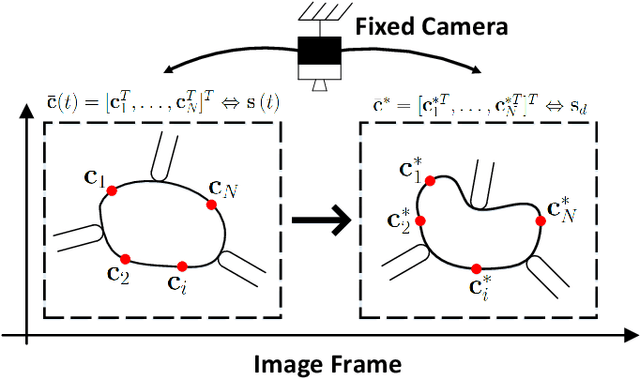

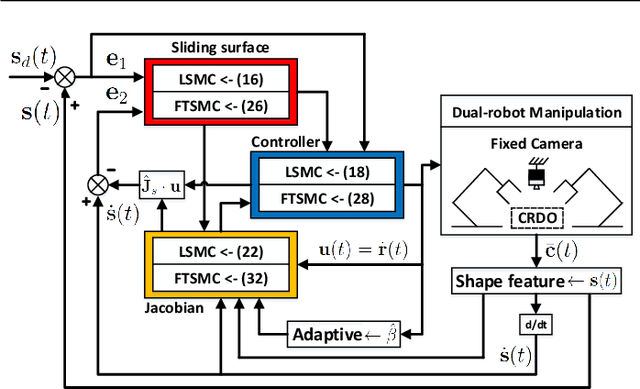

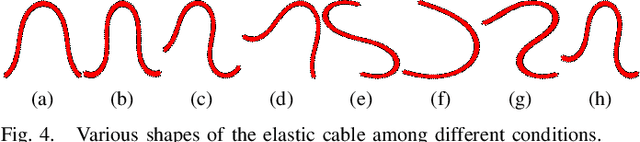

Contour Moments Based Manipulation of Composite Rigid-Deformable Objects with Finite Time Model Estimation and Shape/Position Control

Jun 04, 2021

Abstract:The robotic manipulation of composite rigid-deformable objects (i.e. those with mixed non-homogeneous stiffness properties) is a challenging problem with clear practical applications that, despite the recent progress in the field, has not been sufficiently studied in the literature. To deal with this issue, in this paper we propose a new visual servoing method that can manipulate this broad class of objects (which varies from soft to rigid) with the same adaptive strategy. To quantify the object's infinite-dimensional configuration, our new approach computes a compact feedback vector of 2D contour moment features. A sliding mode control scheme is then designed to simultaneously ensure the finite-time convergence of both the feedback shape error and the model estimation error. The stability of the proposed framework (including the boundedness of all the signals) is rigorously proved with Lyapunov theory. Detailed simulations and experiments are presented to validate the effectiveness of the proposed approach. To the best of the authors' knowledge, this is the first time that contour moments along with finite-time control have been used to solve this difficult manipulation problem.
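To make the idea of a compact moment-based feedback vector concrete, the sketch below computes the centroid and second-order central moments of a sampled 2D contour. The point-sum definition and the particular five-element vector are assumptions chosen for illustration; the abstract does not state which moments the paper uses.

```python
import numpy as np

def contour_moment_features(points):
    """Feature vector [x_c, y_c, mu20, mu11, mu02] from sampled 2D contour points."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    dx, dy = (pts - centroid).T   # coordinates relative to the centroid
    mu20 = np.sum(dx * dx)        # spread along x
    mu11 = np.sum(dx * dy)        # x-y coupling; zero for axis-symmetric shapes
    mu02 = np.sum(dy * dy)        # spread along y
    return np.array([centroid[0], centroid[1], mu20, mu11, mu02])

# Corners of a 2x2 square as a minimal example contour.
square = [(0, 0), (2, 0), (2, 2), (0, 2)]
features = contour_moment_features(square)
```

However many points the contour contains, the output has a fixed length, which is what lets a controller treat the object's shape as a finite-dimensional feedback signal.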
