Jee-Hwan Ryu

MORPH Wheel: A Passive Variable-Radius Wheel Embedding Mechanical Behavior Logic for Input-Responsive Transformation

Feb 05, 2026

Abstract: This paper introduces the Mechanically prOgrammed Radius-adjustable PHysical (MORPH) wheel, a fully passive variable-radius wheel that embeds mechanical behavior logic for torque-responsive transformation. Unlike conventional variable transmission systems relying on actuators, sensors, and active control, the MORPH wheel achieves passive adaptation solely through its geometry and compliant structure. The design integrates a torque-response coupler and spring-loaded connecting struts to mechanically adjust the wheel radius between 80 mm and 45 mm in response to input torque, without any electrical components. The MORPH wheel provides three unique capabilities rarely achieved simultaneously in previous passive designs: (1) bidirectional operation with unlimited rotation through a symmetric coupler; (2) high torque capacity exceeding 10 N·m with rigid power transmission in drive mode; and (3) precise and repeatable transmission ratio control governed by deterministic kinematics. A comprehensive analytical model was developed to describe the wheel's mechanical behavior logic, establishing threshold conditions for mode switching between direct drive and radius transformation. Experimental validation confirmed that the measured torque-radius and force-displacement characteristics closely follow theoretical predictions across wheel weights of 1.8-2.8 kg. Robot-level demonstrations on varying loads (0-25 kg), slopes, and unstructured terrains further verified that the MORPH wheel passively adjusts its radius to provide an optimal transmission ratio. The MORPH wheel exemplifies a mechanically programmed structure, embedding intelligent, context-dependent behavior directly into its physical design. This approach offers a new paradigm for passive variable transmission and mechanical intelligence in robotic mobility systems operating in unpredictable or control-limited environments.

SPINE Gripper: A Twisted Underactuated Mechanism-based Passive Mode-Transition Gripper

Jan 11, 2026

Abstract: This paper presents a single-actuator passive gripper that achieves both stable grasping and continuous bidirectional in-hand rotation through mechanically encoded power transmission logic. Unlike conventional multifunctional grippers that require multiple actuators, sensors, or control-based switching, the proposed gripper transitions between grasping and rotation solely according to the magnitude of the applied input torque. The key enabler of this behavior is a Twisted Underactuated Mechanism (TUM), which generates non-coplanar motions, namely axial contraction and rotation, from a single rotational input while producing identical contraction regardless of rotation direction. A friction generator mechanically defines torque thresholds that govern passive mode switching, enabling stable grasp establishment before autonomously transitioning to in-hand rotation without sensing or active control. Analytical models describing the kinematics, elastic force generation, and torque transmission of the TUM are derived and experimentally validated. The fabricated gripper is evaluated through quantitative experiments on grasp success, friction-based grasp force regulation, and bidirectional rotation performance. System-level demonstrations, including bolt manipulation, object reorientation, and manipulator-integrated tasks driven solely by wrist torque, confirm reliable grasp-to-rotate transitions in both rotational directions. These results demonstrate that non-coplanar multifunctional manipulation can be realized through mechanical design alone, establishing mechanically encoded power transmission logic as a robust alternative to actuator- and control-intensive gripper architectures.

Self-Wearing Adaptive Garments via Soft Robotic Unfurling

Jul 09, 2025

Abstract: Robotic dressing assistance has the potential to improve the quality of life for individuals with limited mobility. Existing solutions predominantly rely on rigid robotic manipulators, which struggle to handle deformable garments and to ensure safe physical interaction with the human body. Prior robotic dressing methods require excessive operation times, complex control strategies, and constrained user postures, limiting their practicality and adaptability. This paper proposes a novel soft robotic dressing system, the Self-Wearing Adaptive Garment (SWAG), which uses an unfurling and growth mechanism to facilitate autonomous dressing. Unlike traditional approaches, the SWAG conforms to the human body through an unfurling-based deployment method, eliminating skin-garment friction and enabling a safer and more efficient dressing process. We present the working principles of the SWAG, introduce its design and fabrication, and demonstrate its performance in dressing assistance. The proposed system demonstrates effective garment application across various garment configurations, presenting a promising alternative to conventional robotic dressing assistance.

SIO-Mapper: A Framework for Lane-Level HD Map Construction Using Satellite Images and OpenStreetMap with No On-Site Visits

Apr 14, 2025

Abstract: High-definition (HD) maps, particularly those containing lane-level information regarded as ground truth, are crucial for vehicle localization research. Traditionally, constructing HD maps requires collecting highly accurate sensor measurements from the target area, followed by manual annotation to assign semantic information. Consequently, HD maps are limited in geographic coverage. To tackle this problem, we propose SIO-Mapper, a novel lane-level HD map construction framework that constructs city-scale maps without physical site visits by utilizing satellite images and OpenStreetMap data. One of the key contributions of SIO-Mapper is its ability to extract lane information more accurately by introducing SIO-Net, a novel deep learning network that integrates features from satellite images and OpenStreetMap using both Transformer-based and convolution-based encoders. Furthermore, to overcome challenges in merging lanes over large areas, we introduce a novel lane integration methodology that combines cluster-based and graph-based approaches. This algorithm ensures the seamless aggregation of lane segments with high accuracy and coverage, even in complex road environments. We validated SIO-Mapper on the Naver Labs Open Dataset and the nuScenes dataset, demonstrating better performance in various environments, including Korea, the United States, and Singapore, compared with state-of-the-art lane-level HD map construction methods.

Teaching Robots to Handle Nuclear Waste: A Teleoperation-Based Learning Approach

Apr 02, 2025

Abstract: This paper presents a Learning from Teleoperation (LfT) framework that integrates human expertise with robotic precision to enable robots to autonomously perform skills learned from human operators. The proposed framework addresses challenges in nuclear waste handling tasks, which often involve repetitive and meticulous manipulation operations. By capturing operator movements and manipulation forces during teleoperation, the framework utilizes this data to train machine learning models capable of replicating and generalizing human skills. We validate the effectiveness of the LfT framework through its application to a power plug insertion task, selected as a representative scenario that is repetitive yet requires precise trajectory and force control. Experimental results highlight significant improvements in task efficiency, while reducing reliance on continuous operator involvement.

Development of a Collaborative Robotic Arm-based Bimanual Haptic Display

Nov 11, 2024

Abstract: This paper presents a bimanual haptic display based on collaborative robot arms. We address the limitations of existing robot arm-based haptic displays by optimizing the setup configuration and implementing inertia/friction compensation techniques. The optimized setup configuration maximizes workspace coverage, dexterity, and haptic feedback capability while ensuring collision safety. Inertia/friction compensation significantly improves transparency and reduces user fatigue, leading to a more seamless interaction. The effectiveness of our system is demonstrated in various applications, including bimanual bilateral teleoperation in both real and simulated environments. This research contributes to the advancement of haptic technology by presenting a practical and effective solution for creating high-performance bimanual haptic displays using collaborative robot arms.

External Steering of Vine Robots via Magnetic Actuation

Sep 02, 2024

Abstract: This paper explores the concept of external magnetic control for vine robots to enable their high-curvature steering and navigation for use in endoluminal applications. Vine robots, inspired by natural growth and locomotion strategies, present unique shape adaptation capabilities that allow passive deformation around obstacles. However, without additional steering mechanisms, they lack the ability to actively select the desired direction of growth. The principles of magnetically steered growing robots are discussed, and experimental results showcase the effectiveness of the proposed magnetic actuation approach. We present a 25 mm diameter vine robot with an integrated magnetic tip capsule, including 6 Degrees of Freedom (DOF) localization and a camera, and demonstrate a minimum bending radius of 3.85 cm with an internal pressure of 30 kPa. Furthermore, we evaluate the robot's ability to form tight curvature through complex navigation tasks, with magnetic actuation allowing for extended free-space navigation without buckling. The suspension of the magnetic tip was also validated using the 6 DOF localization system to ensure that the shear-free nature of vine robots was preserved. Additionally, by exploiting the magnetic wrench at the tip, we showcase preliminary results of vine retraction. The findings contribute to the development of controllable vine robots for endoluminal applications, providing high tip force and shear-free navigation.

Ambiguity-Aware Multi-Object Pose Optimization for Visually-Assisted Robot Manipulation

Nov 02, 2022

Abstract: 6D object pose estimation aims to infer the relative pose between the object and the camera using a single image or multiple images. Most works have focused on predicting the object pose without associated uncertainty under occlusion and structural ambiguity (symmetricity). However, these works demand prior information about shape attributes, and this condition is hardly satisfied in reality; even asymmetric objects may appear symmetric under viewpoint change. In addition, acquiring and fusing diverse sensor data is challenging when extending them to robotics applications. Tackling these limitations, we present an ambiguity-aware 6D object pose estimation network, PrimA6D++, as a generic uncertainty prediction method. The major challenges in pose estimation, such as occlusion and symmetry, can be handled in a generic manner based on the measured ambiguity of the prediction. Specifically, we devise a network to reconstruct the three rotation axis primitive images of a target object and predict the underlying uncertainty along each primitive axis. Leveraging the estimated uncertainty, we then optimize multi-object poses using visual measurements and camera poses by treating it as an object SLAM problem. The proposed method shows a significant performance improvement on the T-LESS and YCB-Video datasets. We further demonstrate real-time scene recognition capability for visually-assisted robot manipulation. Our code and supplementary materials are available at https://github.com/rpmsnu/PrimA6D.

OpenStreetMap-based LiDAR Global Localization in Urban Environment without a Prior LiDAR Map

Feb 15, 2022

Abstract: Using publicly accessible maps, we propose a novel vehicle localization method that can be applied without using prior light detection and ranging (LiDAR) maps. Our method generates OSM descriptors by calculating the distances to buildings from a location in OpenStreetMap at regular angular intervals, and LiDAR descriptors by calculating the shortest distances to building points from the current location at regular angular intervals. Comparing the OSM descriptors and LiDAR descriptors yields a highly accurate vehicle localization result. Compared to methods that use prior LiDAR maps, our method presents two main advantages: (1) vehicle localization is not limited to places with previously acquired LiDAR maps, and (2) our method performs comparably to LiDAR map-based methods, and in particular outperforms the other methods with respect to the top-1 candidate on sequence 00 of the KITTI dataset.
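The descriptor idea in this abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `lidar_descriptor` and `best_alignment`, the bin count, the `max_range` fill value, and the use of an L1 distance minimized over circular shifts are all assumptions chosen for illustration. The abstract only states that distances to buildings are sampled at a regular angle and that the two descriptor types are compared.

```python
import math


def lidar_descriptor(points, num_bins=360, max_range=100.0):
    """Shortest distance to a building point in each angular bin.

    `points` are hypothetical 2D building points (x, y) in the sensor frame.
    Bins with no point keep `max_range` (an assumed fill value).
    """
    desc = [max_range] * num_bins
    bin_width = 2.0 * math.pi / num_bins
    for x, y in points:
        r = math.hypot(x, y)
        angle = math.atan2(y, x) % (2.0 * math.pi)  # map to [0, 2*pi)
        idx = int(angle / bin_width) % num_bins
        if r < desc[idx]:
            desc[idx] = r
    return desc


def best_alignment(query, candidate):
    """Return (smallest L1 distance, shift) over all circular shifts.

    Shifting the candidate stands in for an unknown heading; the L1 metric
    is an assumption, not a detail taken from the abstract.
    """
    n = len(query)
    best = (float("inf"), 0)
    for shift in range(n):
        dist = sum(abs(query[i] - candidate[(i + shift) % n]) for i in range(n))
        if dist < best[0]:
            best = (dist, shift)
    return best
```

In this sketch, an OSM-side descriptor would be a list of the same length built from map geometry, and the candidate location with the smallest aligned distance would be ranked first.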

A Tip Mount for Carrying Payloads using Soft Growing Robots

Dec 17, 2019

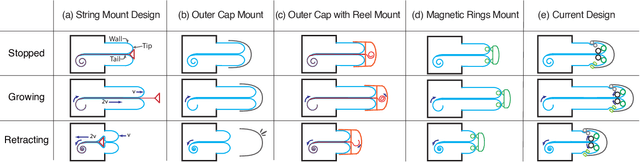

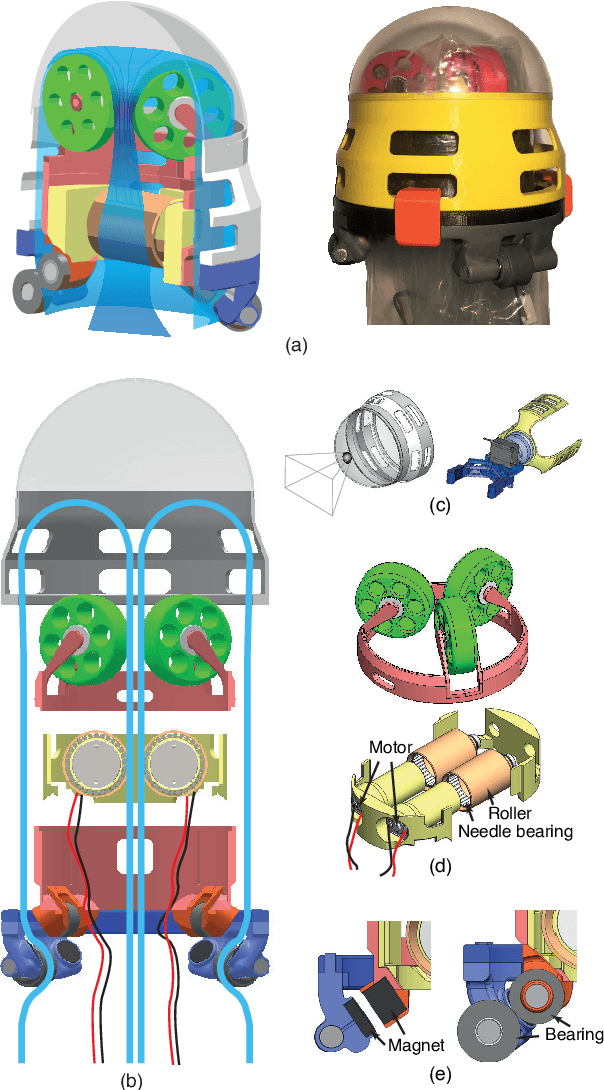

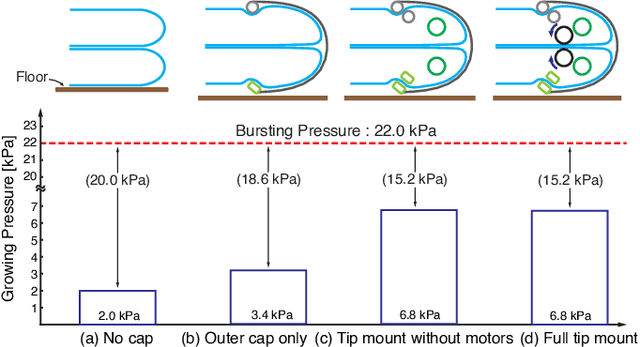

Abstract: Pneumatically operated soft growing robots that lengthen through tip eversion can be used for inspection and manipulation tasks in confined spaces such as caves, animal habitats, or disaster environments. Because new material is continually emitted from the robot tip, it is challenging to mount sensors, grippers, or other useful payloads at the tip of the robot. Here, we present a tip mount for soft growing robots that can be reliably used and remain attached to the tip during growing, retraction, and steering, while carrying a variety of payloads, including active devices. Our tip mount enables two new soft growing robot capabilities: retracting without buckling while carrying a payload at the tip, and exerting a significant tensile load on the environment during inversion. In this paper, we review previous research on soft growing robot tip mounts, and we discuss the important features of a successful tip mount. We present the design of our tip mount and results for the minimum pressure to grow and the maximum payload in tension. We also demonstrate a soft growing robot equipped with our tip mount retrieving an object and delivering it to a different location.
