Ji Hun Kim

A Tip Mount for Carrying Payloads using Soft Growing Robots

Dec 17, 2019

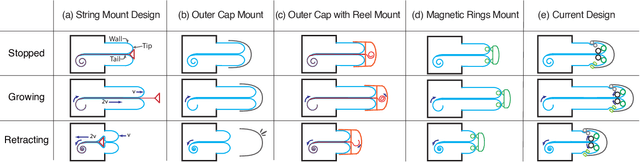

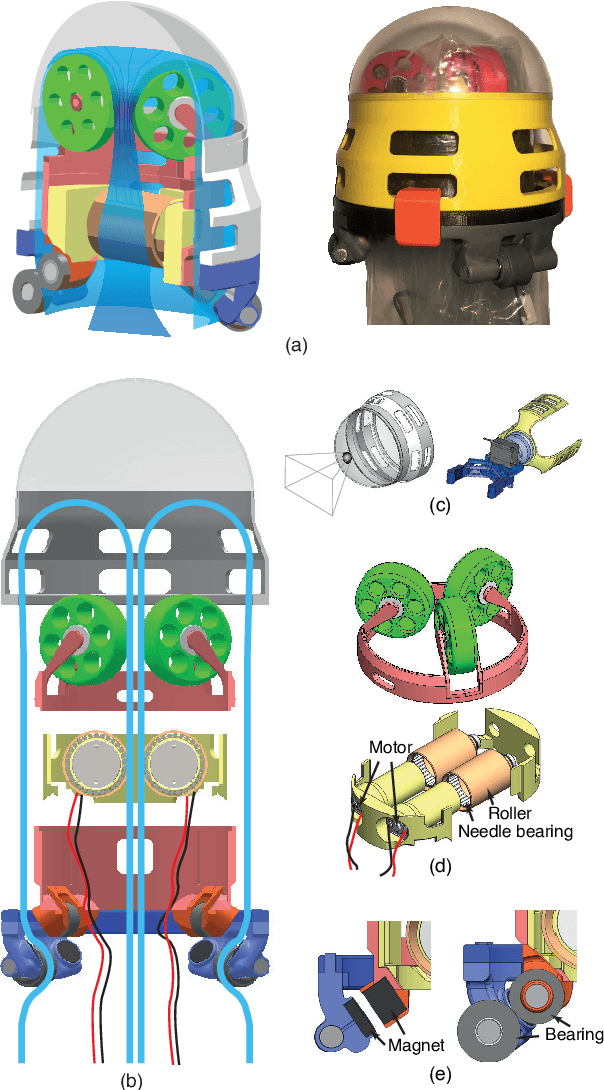

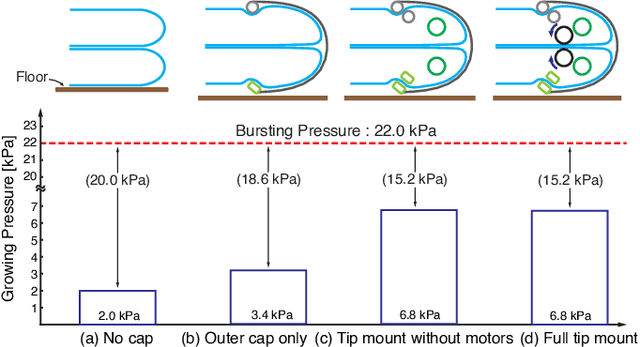

Abstract: Pneumatically operated soft growing robots that lengthen through tip eversion can be used for inspection and manipulation tasks in confined spaces such as caves, animal habitats, or disaster environments. Because new material is continually emitted from the robot tip, it is challenging to mount sensors, grippers, or other useful payloads at the tip of the robot. Here, we present a tip mount for soft growing robots that can be reliably used and remain attached to the tip during growing, retraction, and steering, while carrying a variety of payloads, including active devices. Our tip mount enables two new soft growing robot capabilities: retracting without buckling while carrying a payload at the tip, and exerting a significant tensile load on the environment during inversion. In this paper, we review previous research on soft growing robot tip mounts, and we discuss the important features of a successful tip mount. We present the design of our tip mount and results for the minimum pressure to grow and the maximum payload in tension. We also demonstrate a soft growing robot equipped with our tip mount retrieving an object and delivering it to a different location.

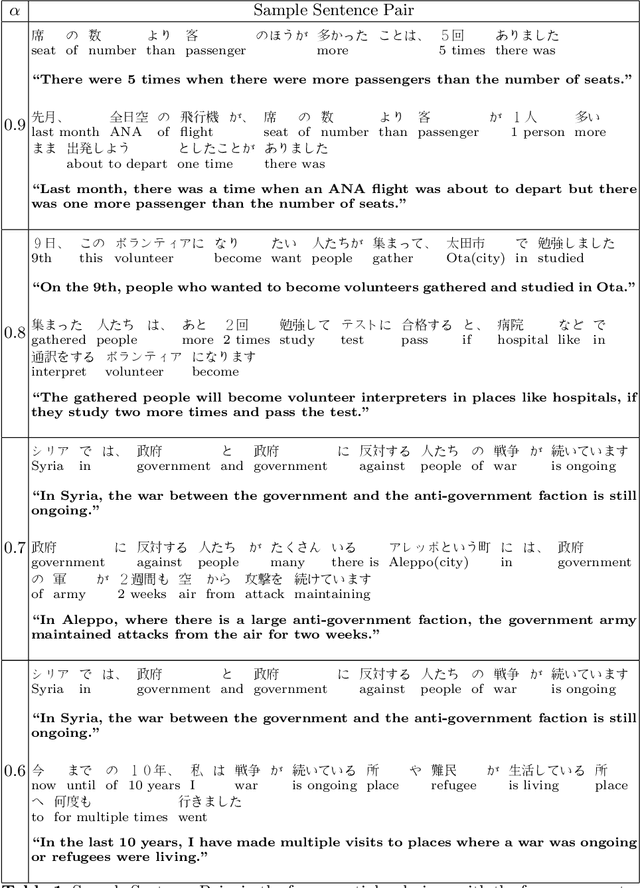

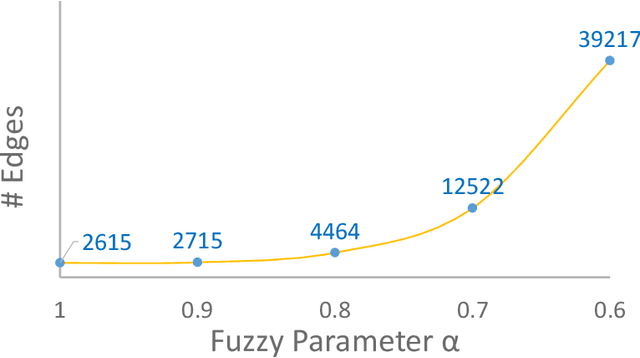

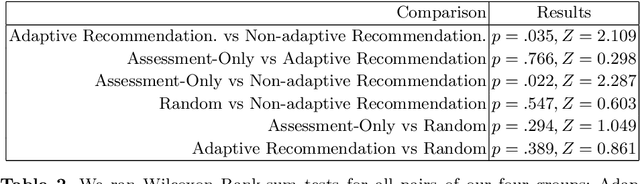

Adaptive Learning Material Recommendation in Online Language Education

May 26, 2019

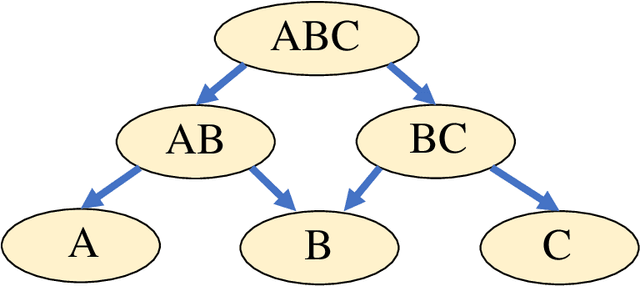

Abstract: Recommending personalized learning materials for online language learning is challenging because we typically lack data about the student's ability and the relative difficulty of learning materials. This makes it hard to recommend appropriate content that matches the student's prior knowledge. In this paper, we propose a refined hierarchical knowledge structure to model vocabulary knowledge, which enables us to automatically organize authentic, up-to-date learning materials collected from the internet. Based on this knowledge structure, we then introduce a hybrid approach to recommending learning materials that adapts to a student's language level. We evaluate our work with an online Japanese learning tool, and the results suggest that adding adaptivity to material recommendation significantly increases student engagement.

* The short version of this paper was published at AIED 2019.
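To make the idea of "recommending materials that match the student's level" concrete, here is a minimal sketch under loudly stated assumptions. It is not the hybrid approach from the paper: it assumes a hypothetical table that assigns each vocabulary word an integer level (standing in for the hierarchical knowledge structure), estimates a material's difficulty as the fraction of its words above the student's level, and ranks materials by how close that fraction is to a hypothetical `target`. The names `unknown_ratio`, `recommend`, and the romanized example words are all illustrative.

```python
from typing import Dict, List, Tuple

# Hypothetical word -> level table (1 = easiest). In the paper this information
# would come from the hierarchical knowledge structure; here it is invented.
WordLevels = Dict[str, int]


def unknown_ratio(words: List[str], levels: WordLevels, student_level: int) -> float:
    """Fraction of words in a material that sit above the student's level.

    Words missing from the level table are treated as unknown.
    """
    if not words:
        return 0.0
    unknown = sum(1 for w in words if levels.get(w, student_level + 1) > student_level)
    return unknown / len(words)


def recommend(materials: Dict[str, List[str]], levels: WordLevels,
              student_level: int, target: float = 0.1, k: int = 1) -> List[str]:
    """Return the k materials whose unknown-word ratio is closest to `target`.

    A small, non-zero target favors content slightly above the student's
    current level rather than content that is too easy or too hard.
    """
    scored: List[Tuple[float, str]] = [
        (abs(unknown_ratio(ws, levels, student_level) - target), name)
        for name, ws in materials.items()
    ]
    scored.sort()  # smallest distance first; ties broken by material name
    return [name for _, name in scored[:k]]


# Usage with made-up data: a level-1 student and three candidate materials.
levels = {"neko": 1, "inu": 1, "taberu": 1, "hashiru": 2, "keizai": 3, "seiji": 3}
materials = {
    "easy": ["neko", "inu", "taberu", "inu"],            # 0 of 4 words unknown
    "just_right": ["neko", "inu", "hashiru", "taberu"],  # 1 of 4 words unknown
    "hard": ["keizai", "seiji", "hashiru", "neko"],      # 3 of 4 words unknown
}
print(recommend(materials, levels, student_level=1, target=0.25))  # → ['just_right']
```

A real system would also need to update `student_level` from the student's interactions; the sketch keeps it fixed to isolate the difficulty-matching step.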

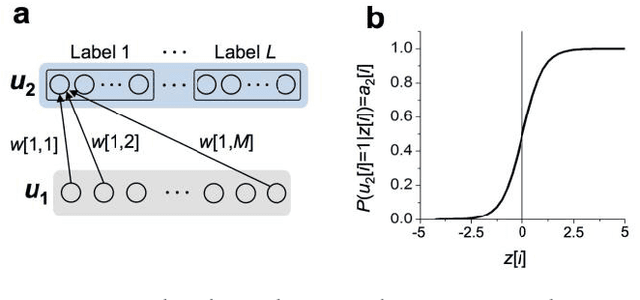

Markov chain Hebbian learning algorithm with ternary synaptic units

Nov 23, 2017

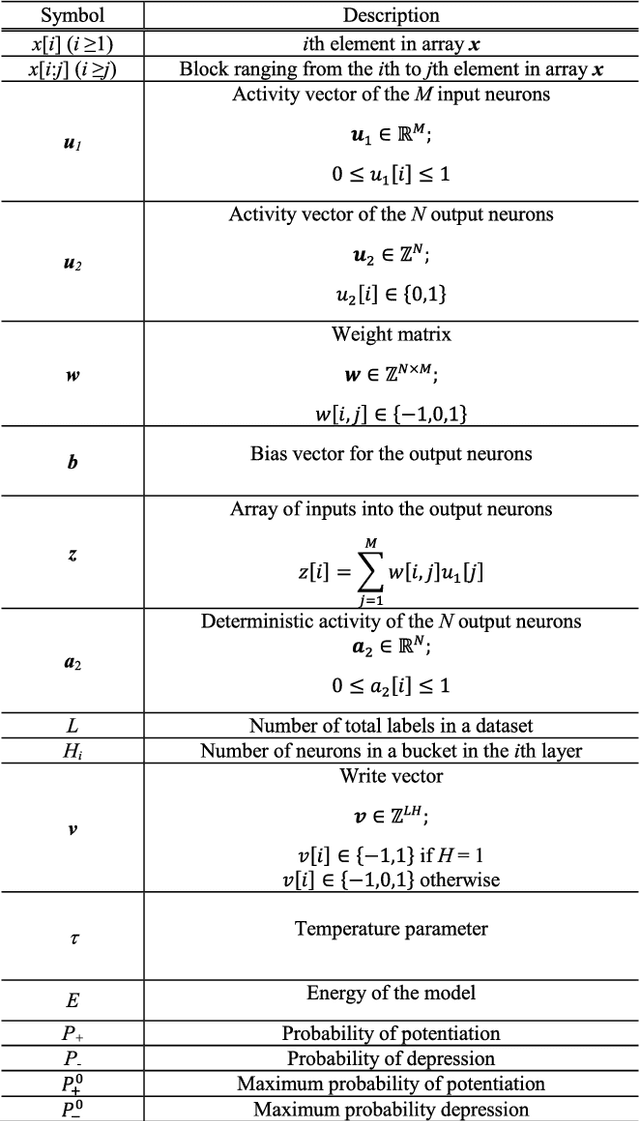

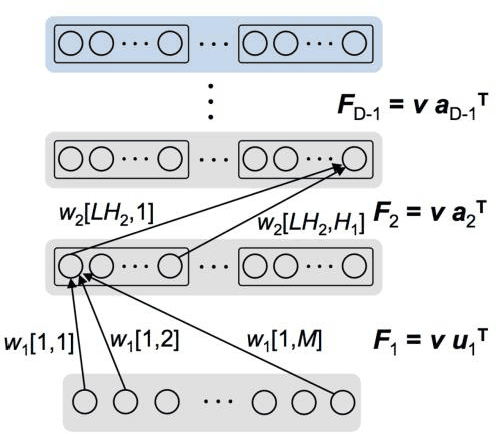

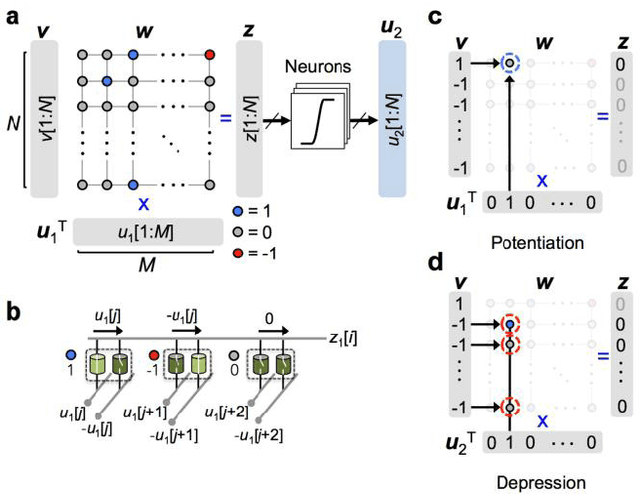

Abstract: In spite of remarkable progress in machine learning techniques, state-of-the-art machine learning algorithms often keep machines from learning in real time (online learning), due in part to the computational complexity of parameter optimization. As an alternative, a learning algorithm for training a memory in real time is proposed, named the Markov chain Hebbian learning algorithm. The algorithm pursues efficient memory use during training in that (i) the weight matrix has ternary elements (-1, 0, 1) and (ii) each update follows a Markov chain: the upcoming update does not need past weight memory. The algorithm was verified on two proof-of-concept tasks (handwritten digit recognition and multiplication table memorization) in which numbers were taken as symbols. In particular, the latter grounds multiplication arithmetic in memory, which may be analogous to human mental arithmetic. This memory-based multiplication arithmetic may also offer a basis for factorization, providing new insight into arithmetic.
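The two memory-saving properties in the abstract, ternary weights and Markov updates, can be illustrated with a small sketch. The update rule below is a hypothetical stand-in, not the rule from the paper: when an input is active, a synapse steps one state toward +1 with probability `p` if the target output is active, and one state toward -1 otherwise. Because the next state depends only on the current state and the current input/target pair, each synapse evolves as a Markov chain and no past weights are stored. The function names, `p`, and the toy patterns are all illustrative assumptions.

```python
import random
from typing import List

Matrix = List[List[int]]  # ternary weights, each entry in {-1, 0, 1}


def init_weights(n_out: int, n_in: int) -> Matrix:
    """Start every synapse in the neutral state 0."""
    return [[0] * n_in for _ in range(n_out)]


def markov_hebbian_step(w: Matrix, x: List[int], y: List[int],
                        p: float, rng: random.Random) -> None:
    """Apply one stochastic, in-place update to the ternary weight matrix.

    Hypothetical rule: for each active input x[j], synapse w[i][j] moves one
    state toward +1 (if y[i] is active) or toward -1 (if y[i] is inactive)
    with probability p, clipped to the range [-1, 1]. Only the current state
    is read, so the update is memoryless (Markov).
    """
    for i, yi in enumerate(y):
        row = w[i]
        for j, xj in enumerate(x):
            if xj and rng.random() < p:
                step = 1 if yi else -1
                row[j] = max(-1, min(1, row[j] + step))


def recall(w: Matrix, x: List[int]) -> int:
    """Return the index of the output unit with the largest weighted input."""
    scores = [sum(wij * xj for wij, xj in zip(row, x)) for row in w]
    return max(range(len(scores)), key=scores.__getitem__)


# Usage: store two binary patterns, each associated with one output unit.
rng = random.Random(0)
w = init_weights(2, 4)
patterns = {0: [1, 1, 0, 0], 1: [0, 0, 1, 1]}
for _ in range(50):
    for label, x in patterns.items():
        y = [1 if k == label else 0 for k in range(2)]
        markov_hebbian_step(w, x, y, p=0.3, rng=rng)
print(w, recall(w, patterns[0]), recall(w, patterns[1]))
```

Each weight needs only two bits of state, and the update never consults a stored history, which is the kind of memory economy the abstract describes; the probabilistic step size `p` plays the role a learning rate would play with real-valued weights.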
