Dohun Kim

Natural Language, AI, and Quantum Computing in 2024: Research Ingredients and Directions in QNLP

Mar 28, 2024

Abstract: Language processing is at the heart of current developments in artificial intelligence, and quantum computers are becoming available at the same time. This has led to great interest in quantum natural language processing, and several early proposals and experiments. This paper surveys the state of this area, showing how NLP-related techniques including word embeddings, sequential models, attention, and grammatical parsing have been used in quantum language processing. We introduce a new quantum design for the basic task of text encoding (representing a string of characters in memory), which has not been addressed in detail before. As well as motivating new technologies, quantum theory has made key contributions to the challenging questions of 'What is uncertainty?' and 'What is intelligence?' As these questions are taking on fresh urgency with artificial systems, the paper also considers some of the ways facts are conceptualized and presented in language. In particular, we argue that the problem of 'hallucinations' arises through a basic misunderstanding: language expresses any number of plausible hypotheses, only a few of which become actual, a distinction that is ignored in classical mechanics, but present (albeit confusing) in quantum mechanics.

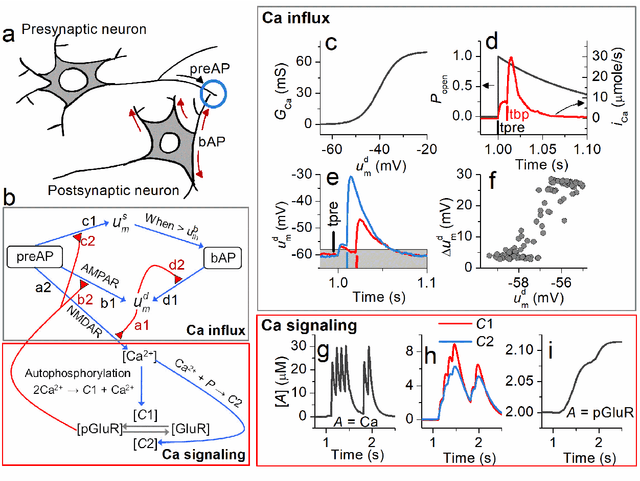

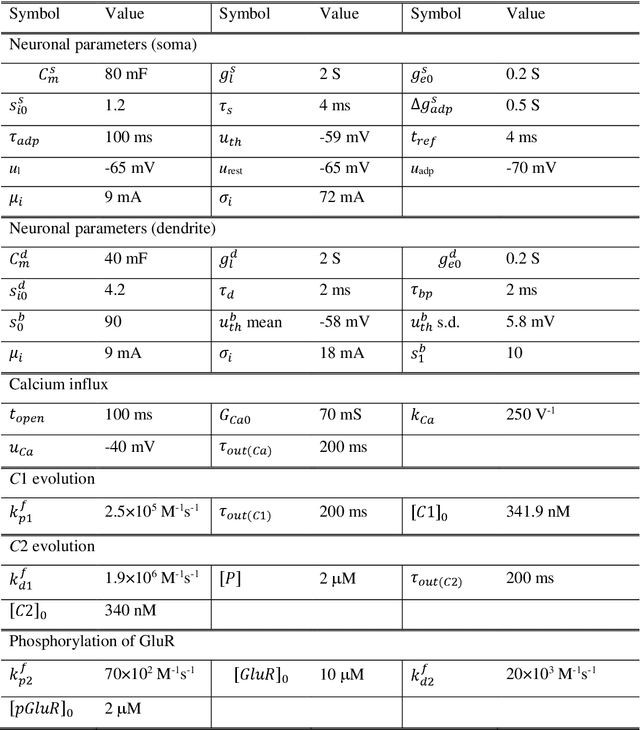

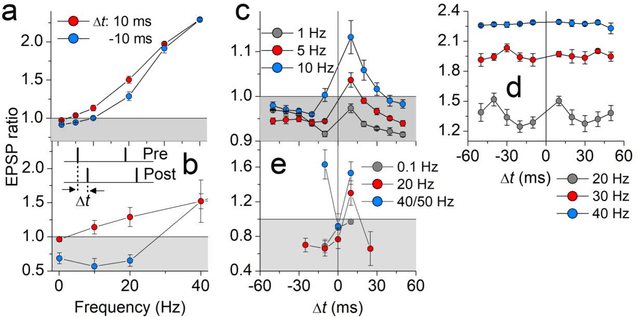

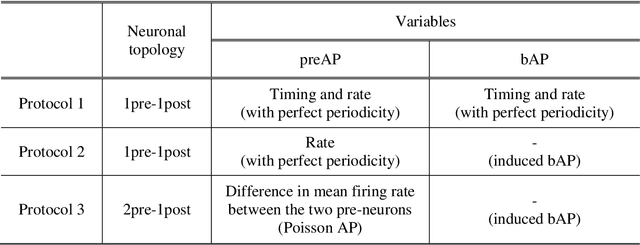

Simplified calcium signaling cascade for synaptic plasticity

Nov 26, 2019

Abstract: We propose a model for synaptic plasticity based on a calcium signaling cascade. The model simplifies the full signaling pathways from a calcium influx to the phosphorylation (potentiation) and dephosphorylation (depression) of glutamate receptors that are gated by fictive C1 and C2 catalysts, respectively. This model is based on tangible chemical reactions, including fictive catalysts, for long-term plasticity rather than the conceptual theories commonplace in various models, such as preset thresholds of calcium concentration. Our simplified model successfully reproduced the experimental synaptic plasticity induced by different protocols such as (i) a synchronous pairing protocol and (ii) correlated presynaptic and postsynaptic action potentials (APs). Further, the ocular dominance plasticity (or the experimental verification of the celebrated Bienenstock–Cooper–Munro theory) was reproduced by two model synapses that compete by means of back-propagating APs (bAPs). The key to this competition is synapse-specific bAPs with reference to bAP-boosting on physiological grounds.

Markov chain Hebbian learning algorithm with ternary synaptic units

Nov 23, 2017

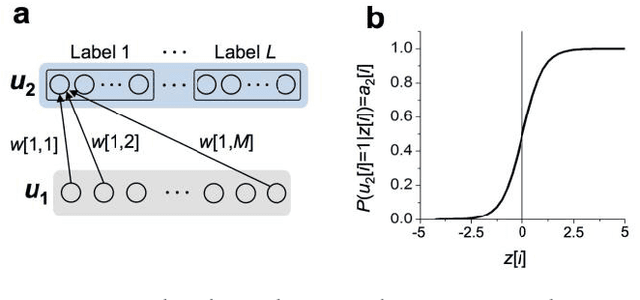

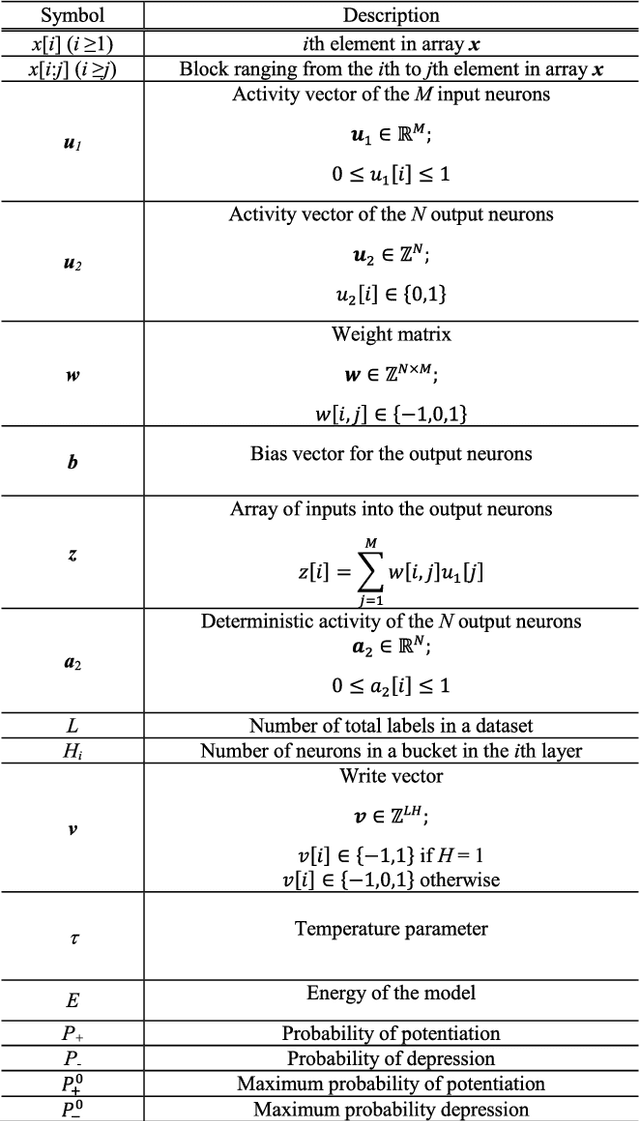

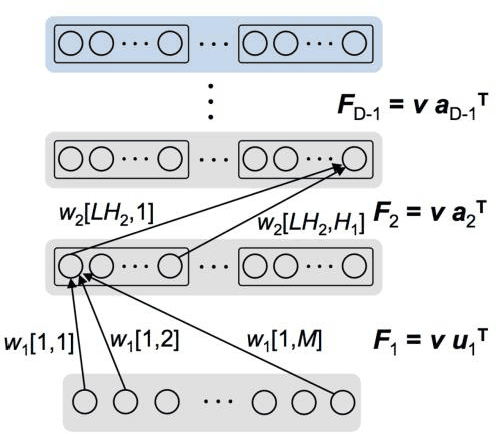

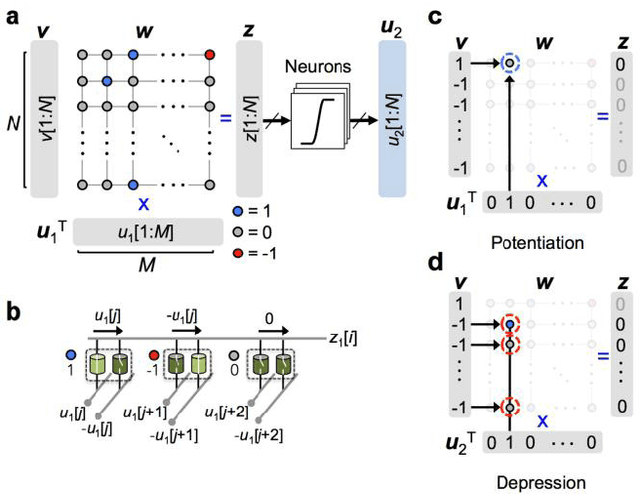

Abstract: In spite of remarkable progress in machine learning techniques, state-of-the-art machine learning algorithms often keep machines from real-time learning (online learning), due in part to the computational complexity of parameter optimization. As an alternative, a learning algorithm to train a memory in real time is proposed, named the Markov chain Hebbian learning algorithm. The algorithm pursues efficient memory use during training in that (i) the weight matrix has ternary elements (-1, 0, 1) and (ii) each update follows a Markov chain: the upcoming update does not need past weight memory. The algorithm was verified by two proof-of-concept tasks (handwritten digit recognition and multiplication table memorization) in which numbers were taken as symbols. In particular, the latter bases multiplication arithmetic on memory, which may be analogous to humans' mental arithmetic. The memory-based multiplication arithmetic feasibly offers the basis of factorization, supporting novel insight into the arithmetic.
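
The two properties named in the abstract above, ternary weights and memoryless updates, can be illustrated with a minimal sketch. This is not the paper's update rule, which the abstract does not specify. The function name `ternary_hebbian_step`, the parameter `p_update`, and the "move one step toward the sign of pre × post activity" rule are all assumptions chosen for illustration.

```python
import numpy as np

def ternary_hebbian_step(weights, pre, post, p_update=0.5, rng=None):
    """One stochastic update of a ternary weight matrix (illustrative only).

    weights: (n_post, n_pre) integer array with entries in {-1, 0, 1}
    pre, post: integer activity vectors with entries in {-1, 0, 1}
    p_update: assumed per-synapse transition probability (hypothetical parameter)
    """
    rng = np.random.default_rng() if rng is None else rng
    # Assumed Hebbian direction: +1 where pre and post agree in sign,
    # -1 where they disagree, 0 where either unit is silent.
    direction = np.sign(np.outer(post, pre)).astype(weights.dtype)
    # Each synapse attempts its transition independently with probability p_update.
    mask = rng.random(weights.shape) < p_update
    # The next state depends only on the current weights and the current input,
    # so no history of past weights is stored (the Markov property).
    # Clipping keeps every entry in {-1, 0, 1}.
    return np.clip(weights + direction * mask, -1, 1)

# Usage: repeatedly present one pattern pair to an initially empty memory.
rng = np.random.default_rng(0)
w = np.zeros((2, 3), dtype=np.int8)
pre = np.array([1, -1, 0])
post = np.array([1, -1])
for _ in range(50):
    w = ternary_hebbian_step(w, pre, post, p_update=0.5, rng=rng)
```

In this sketch each weight needs only three states, so a synapse fits in two bits, and each step reads nothing but the current matrix and the current input. That is the kind of memory saving the abstract points to, under the assumed rule.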
