Ioannis Marras

Poly-NL: Linear Complexity Non-local Layers with Polynomials

Jul 06, 2021

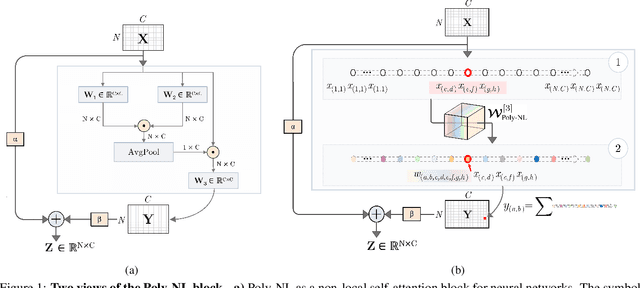

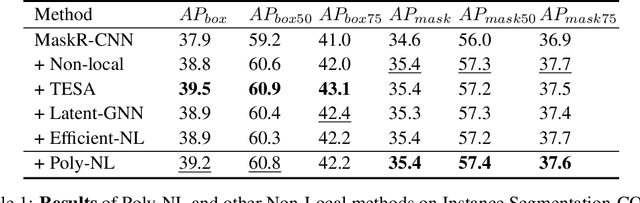

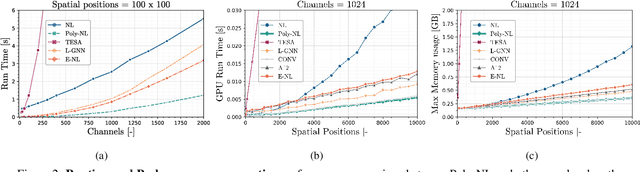

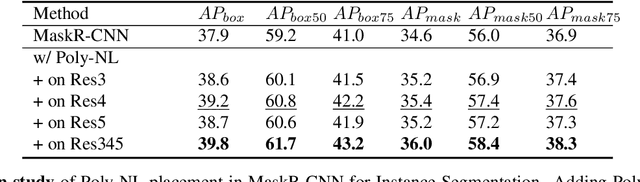

Abstract: Spatial self-attention layers, in the form of Non-Local blocks, introduce long-range dependencies in Convolutional Neural Networks by computing pairwise similarities among all possible positions. Such pairwise functions underpin the effectiveness of non-local layers, but they also impose a complexity that scales quadratically with the input size in both space and time. This severely limiting factor hinders the applicability of non-local blocks to even moderately sized inputs. Previous works focused on reducing the complexity by modifying the underlying matrix operations; in this work, we instead aim to retain the full expressiveness of non-local layers while keeping complexity linear. We overcome the efficiency limitation of non-local blocks by framing them as special cases of 3rd order polynomial functions. This framing enables us to formulate novel fast Non-Local blocks that reduce the complexity from quadratic to linear with no loss in performance by replacing any direct computation of pairwise similarities with element-wise multiplications. The proposed method, which we dub "Poly-NL", is competitive with state-of-the-art performance across image recognition, instance segmentation, and face detection tasks, while having considerably less computational overhead.
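The complexity contrast described in the abstract above can be illustrated with a minimal NumPy sketch. The first function is a standard embedded dot-product non-local block that materialises an N x N similarity matrix. The second is a hypothetical third-order polynomial in the input built only from linear maps, element-wise products, and global average pooling. It is an assumed form chosen for illustration, not necessarily the exact Poly-NL formulation, and all names (`w_theta`, `w1`, and so on) are placeholders.

```python
import numpy as np

def nonlocal_dot_product(x, w_theta, w_phi, w_g):
    """Non-local block with an explicit N x N similarity matrix.

    x: (N, C) flattened feature map. Time is O(N^2 * C), memory is O(N^2).
    """
    theta, phi, g = x @ w_theta, x @ w_phi, x @ w_g  # each (N, C)
    scores = theta @ phi.T                            # (N, N) pairwise similarities
    scores -= scores.max(axis=1, keepdims=True)       # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)           # row-wise softmax
    return attn @ g                                   # (N, C)

def elementwise_third_order(x, w1, w2, w3):
    """Hypothetical third-order polynomial of x without any N x N matrix.

    Assumed form for illustration only. Time is O(N * C^2), memory is O(N * C).
    """
    # Second-order interaction, pooled over all positions into a global context.
    pooled = ((x @ w1) * (x @ w2)).mean(axis=0, keepdims=True)  # (1, C)
    # Multiplying by x again makes the output cubic in the input.
    return (pooled * x) @ w3                                    # (N, C)
```

Doubling the number of positions N quadruples the size of `scores` in the first function, while every intermediate in the second grows only linearly with N.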

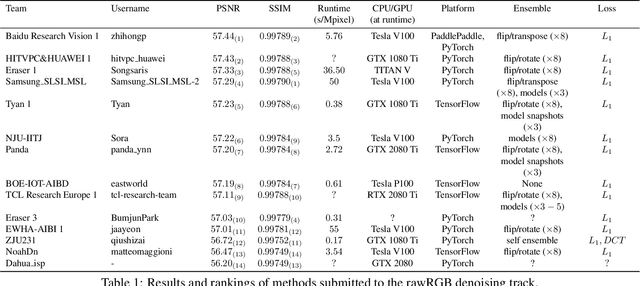

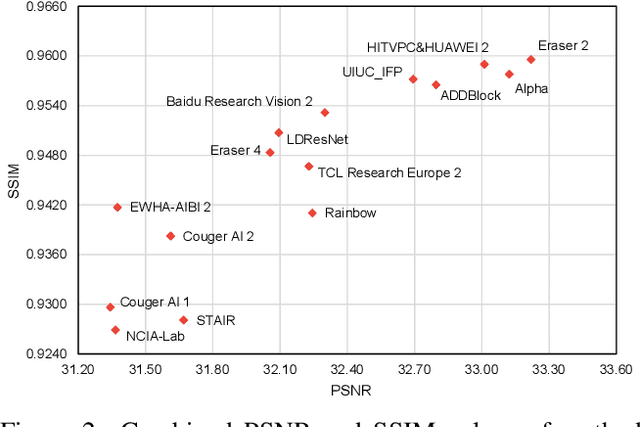

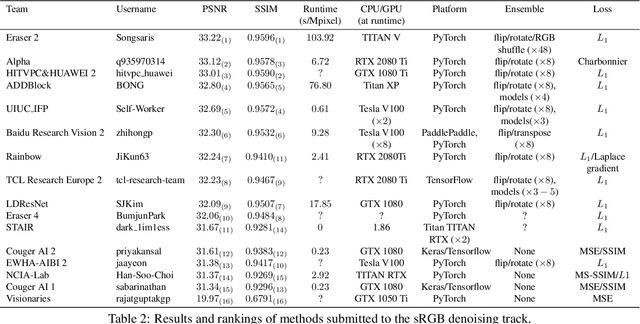

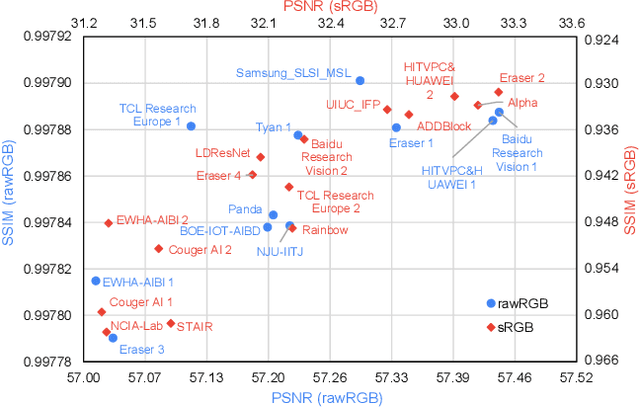

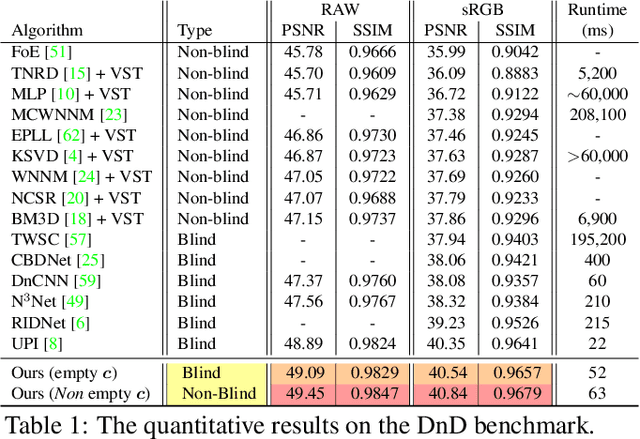

NTIRE 2020 Challenge on Real Image Denoising: Dataset, Methods and Results

May 08, 2020

Abstract: This paper reviews the NTIRE 2020 challenge on real image denoising, with a focus on the newly introduced dataset, the proposed methods, and their results. The challenge is a new version of the previous NTIRE 2019 challenge on real image denoising, which was based on the SIDD benchmark. This challenge is based on newly collected validation and testing image datasets and is hence named SIDD+. It has two tracks for quantitatively evaluating image denoising performance in (1) the Bayer-pattern rawRGB and (2) the standard RGB (sRGB) color spaces. Each track had ~250 registered participants. A total of 22 teams, proposing 24 methods, competed in the final phase of the challenge. The methods proposed by the participating teams represent the current state-of-the-art performance in image denoising targeting real noisy images. The newly collected SIDD+ datasets are publicly available at: https://bit.ly/siddplus_data.

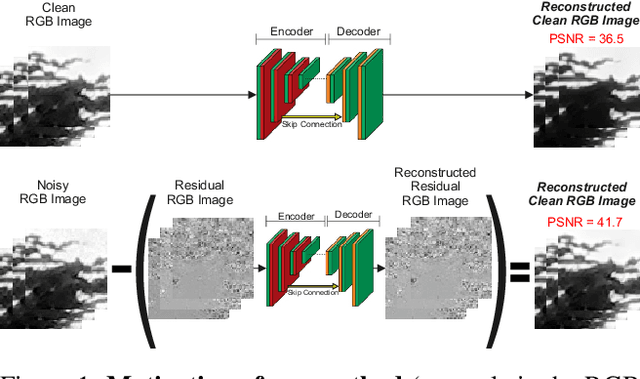

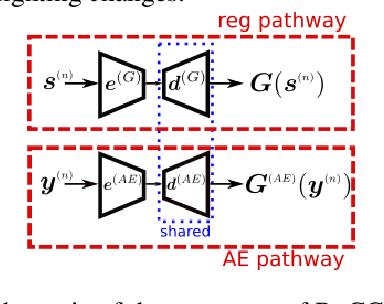

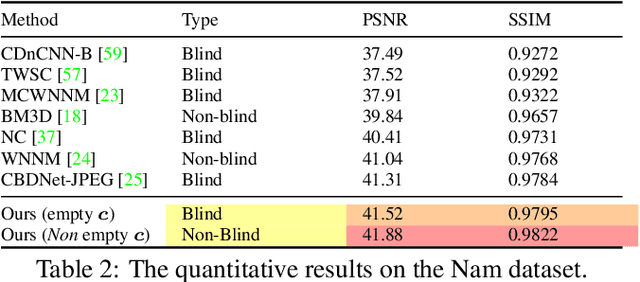

Reconstructing the Noise Manifold for Image Denoising

Mar 07, 2020

Abstract: Deep Convolutional Neural Networks (CNNs) have been successfully used in many low-level vision problems such as image denoising. Although conditional image generation techniques have led to large improvements in this task, there has been little effort to provide conditional generative adversarial networks (cGAN) [42] with an explicit way of understanding the image noise, as needed for object-independent denoising that is reliable in real-world applications. Leveraging structure in the target space is unstable due to the complexity of patterns in natural scenes, so unnatural artifacts or over-smoothed image areas cannot be avoided. To fill this gap, we introduce the idea of a cGAN that explicitly leverages structure in the image noise space. By directly learning a low-dimensional manifold of the image noise, the generator is encouraged to remove from the noisy image only the information that spans this manifold. This idea brings many advantages, and it can be appended to the end of any denoiser to significantly improve its performance. In our experiments, our model substantially outperforms existing state-of-the-art architectures, producing denoised images with less over-smoothing and better detail.

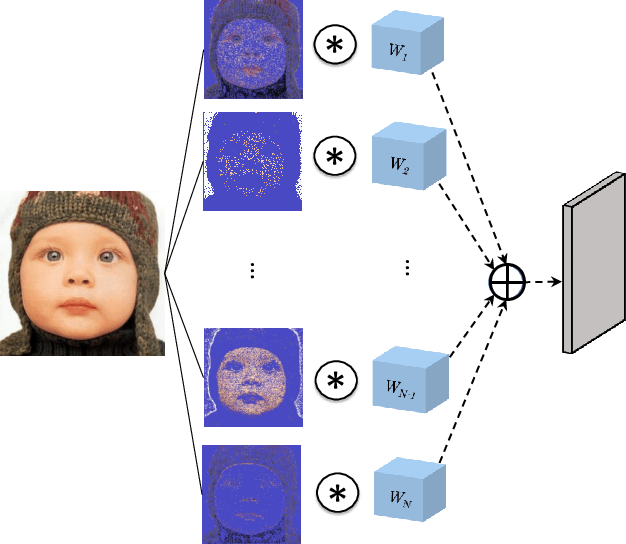

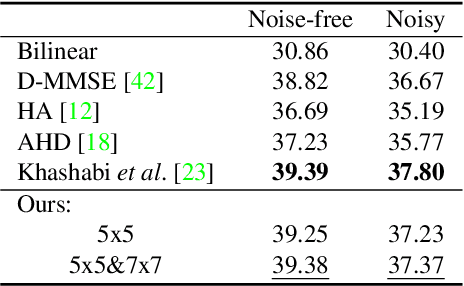

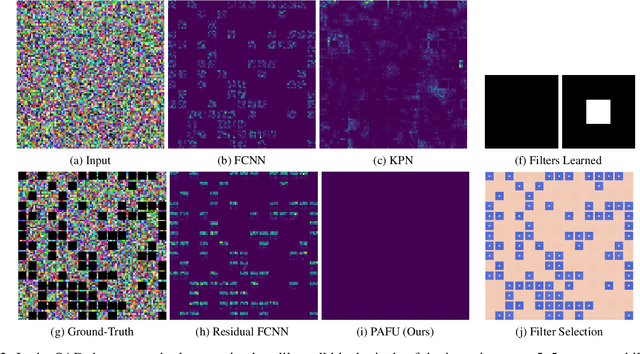

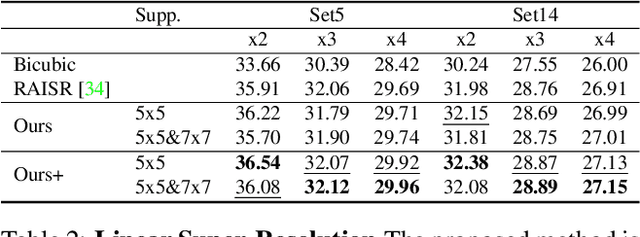

Pixel Adaptive Filtering Units

Nov 24, 2019

Abstract: State-of-the-art methods for computer vision rely heavily on the translation equivariance and spatial sharing properties of convolutional layers, without explicitly taking the input content into consideration. Modern techniques employ deep, sophisticated architectures to circumvent this issue. In this work, we propose a Pixel Adaptive Filtering Unit (PAFU), which introduces a differentiable kernel selection mechanism paired with a discrete, learnable, and decorrelated group of kernels to allow for content-based spatial adaptation. First, we demonstrate the applicability of the technique in applications where runtime is important. Next, we employ PAFU in deep neural networks as a replacement for standard convolutional layers, enhancing the original architectures with spatially varying computations to achieve considerable performance improvements. Finally, diverse and extensive experimentation provides strong empirical evidence in favor of the proposed content-adaptive processing scheme across different image processing and high-level computer vision tasks.
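As a rough illustration of content-based kernel selection over a discrete group of kernels, the sketch below blends the responses of a small filter bank at every pixel with softmax weights. The softmax relaxation, the `temperature` parameter, and the function names are assumptions made for this example. The abstract does not specify how PAFU implements its selection mechanism, and here the per-pixel `logits` are simply passed in rather than predicted from the image content.

```python
import numpy as np

def filter_bank_responses(image, kernels):
    """Correlate a 2-D image with each of K odd-sized k x k kernels.

    image: (H, W); kernels: (K, k, k). Zero padding keeps the output (K, H, W).
    """
    k = kernels.shape[-1]
    h, w = image.shape
    padded = np.pad(image, k // 2)
    out = np.zeros((kernels.shape[0], h, w))
    for dy in range(k):
        for dx in range(k):
            patch = padded[dy:dy + h, dx:dx + w]            # shifted view, (H, W)
            out += kernels[:, dy, dx, None, None] * patch   # broadcast over K
    return out

def soft_kernel_selection(image, kernels, logits, temperature=1.0):
    """Blend the K kernel responses per pixel with softmax weights.

    logits: (K, H, W) selection scores (hypothetical input for this sketch).
    A low temperature pushes the weights towards a hard one-kernel choice.
    """
    z = logits / temperature
    z = z - z.max(axis=0, keepdims=True)         # numerical stability
    weights = np.exp(z)
    weights /= weights.sum(axis=0, keepdims=True)
    return (weights * filter_bank_responses(image, kernels)).sum(axis=0)
```

Because the softmax is differentiable, gradients can reach both the kernels and whatever produces the logits, which is one common way to make a discrete choice trainable.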
