Hye-young Paik

ParaMETA: Towards Learning Disentangled Paralinguistic Speaking Styles Representations from Speech

Jan 18, 2026

Abstract:Learning representative embeddings for different types of speaking styles, such as emotion, age, and gender, is critical for both recognition tasks (e.g., cognitive computing and human-computer interaction) and generative tasks (e.g., style-controllable speech generation). In this work, we introduce ParaMETA, a unified and flexible framework for learning and controlling speaking styles directly from speech. Unlike existing methods that rely on single-task models or cross-modal alignment, ParaMETA learns disentangled, task-specific embeddings by projecting speech into dedicated subspaces for each type of style. This design reduces inter-task interference, mitigates negative transfer, and allows a single model to handle multiple paralinguistic tasks such as emotion, gender, age, and language classification. Beyond recognition, ParaMETA enables fine-grained style control in Text-To-Speech (TTS) generative models. It supports both speech- and text-based prompting and allows users to modify one speaking style while preserving others. Extensive experiments demonstrate that ParaMETA outperforms strong baselines in classification accuracy and generates more natural and expressive speech, while maintaining a lightweight and efficient model suitable for real-world applications.

Metaphors are a Source of Cross-Domain Misalignment of Large Reasoning Models

Jan 06, 2026

Abstract:Earlier research has shown that metaphors influence human decision making, which raises the question of whether metaphors also influence the reasoning pathways of large language models (LLMs), given that their training data contain a large number of metaphors. In this work, we investigate the problem within the scope of emergent misalignment, where LLMs can generalize patterns learned from misaligned content in one domain to another domain. We discover a strong causal relationship between metaphors in training data and the misalignment degree of LLMs' reasoning contents. With interventions using metaphors in the pre-training, fine-tuning and re-alignment phases, models' cross-domain misalignment degrees change significantly. As we delve deeper into the causes behind this phenomenon, we observe that there is a connection between metaphors and the activation of global and local latent features of large reasoning models. By monitoring these latent features, we design a detector that predicts misaligned content with high accuracy.

MetaLogic: Robustness Evaluation of Text-to-Image Models via Logically Equivalent Prompts

Oct 01, 2025

Abstract:Recent advances in text-to-image (T2I) models, especially diffusion-based architectures, have significantly improved the visual quality of generated images. However, these models continue to struggle with a critical limitation: maintaining semantic consistency when input prompts undergo minor linguistic variations. Despite being logically equivalent, such prompt pairs often yield misaligned or semantically inconsistent images, exposing a lack of robustness in reasoning and generalisation. To address this, we propose MetaLogic, a novel evaluation framework that detects T2I misalignment without relying on ground truth images. MetaLogic leverages metamorphic testing, generating image pairs from prompts that differ grammatically but are semantically identical. By directly comparing these image pairs, the framework identifies inconsistencies that signal failures in preserving the intended meaning, effectively diagnosing robustness issues in the model's logic understanding. Unlike existing evaluation methods that compare a generated image to a single prompt, MetaLogic evaluates semantic equivalence between paired images, offering a scalable, ground-truth-free approach to identifying alignment failures. It categorises these alignment errors (e.g., entity omission, duplication, positional misalignment) and surfaces counterexamples that can be used for model debugging and refinement. We evaluate MetaLogic across multiple state-of-the-art T2I models and reveal consistent robustness failures across a range of logical constructs. We find that even the SOTA text-to-image models like Flux.dev and DALLE-3 demonstrate a 59 percent and 71 percent misalignment rate, respectively. Our results show that MetaLogic is not only efficient and scalable, but also effective in uncovering fine-grained logical inconsistencies that are overlooked by existing evaluation metrics.
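
The metamorphic relation described in this abstract (images generated from logically equivalent prompts should depict the same content) can be illustrated with a toy check. This is only a sketch under stated assumptions, not the MetaLogic implementation: the function name `compare_entity_counts` and the idea of feeding it entity labels from some object detector are hypothetical, and positional misalignment is not covered.

```python
from collections import Counter


def compare_entity_counts(entities_a: list[str], entities_b: list[str]) -> list[tuple[str, str]]:
    """Compare entity labels found in two images generated from equivalent prompts.

    Each argument is assumed to be the output of a hypothetical object
    detector run on one image. Returns (entity, issue) pairs; an empty
    list means the metamorphic relation holds for entity counts.
    """
    counts_a, counts_b = Counter(entities_a), Counter(entities_b)
    issues = []
    for entity in sorted(set(counts_a) | set(counts_b)):
        if counts_a[entity] == counts_b[entity]:
            continue
        if counts_a[entity] == 0 or counts_b[entity] == 0:
            # The entity appears in one image but is missing from the other.
            issues.append((entity, "omission"))
        else:
            # Both images contain the entity, but in different numbers.
            issues.append((entity, "count_mismatch"))
    return issues
```

No ground-truth image is needed here: the two outputs are only checked against each other, which is the property that makes a metamorphic test ground-truth-free.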

LLMs for Law: Evaluating Legal-Specific LLMs on Contract Understanding

Aug 11, 2025

Abstract:Despite advances in legal NLP, no comprehensive evaluation covering multiple legal-specific LLMs currently exists for contract classification tasks in contract understanding. To address this gap, we present an evaluation of 10 legal-specific LLMs on three English language contract understanding tasks and compare them with 7 general-purpose LLMs. The results show that legal-specific LLMs consistently outperform general-purpose models, especially on tasks requiring nuanced legal understanding. Legal-BERT and Contracts-BERT establish new SOTAs on two of the three tasks, despite having 69% fewer parameters than the best-performing general-purpose LLM. We also identify CaseLaw-BERT and LexLM as strong additional baselines for contract understanding. Our results provide a holistic evaluation of legal-specific LLMs and will facilitate the development of more accurate contract understanding systems.

Generalized Multilingual Text-to-Speech Generation with Language-Aware Style Adaptation

Apr 11, 2025

Abstract:Text-to-Speech (TTS) models can generate natural, human-like speech across multiple languages by transforming phonemes into waveforms. However, multilingual TTS remains challenging due to discrepancies in phoneme vocabularies and variations in prosody and speaking style across languages. Existing approaches either train separate models for each language, which achieve high performance at the cost of increased computational resources, or use a unified model for multiple languages that struggles to capture fine-grained, language-specific style variations. In this work, we propose LanStyleTTS, a non-autoregressive, language-aware style adaptive TTS framework that standardizes phoneme representations and enables fine-grained, phoneme-level style control across languages. This design supports a unified multilingual TTS model capable of producing accurate and high-quality speech without the need to train language-specific models. We evaluate LanStyleTTS by integrating it with several state-of-the-art non-autoregressive TTS architectures. Results show consistent performance improvements across different model backbones. Furthermore, we investigate a range of acoustic feature representations, including mel-spectrograms and autoencoder-derived latent features. Our experiments demonstrate that latent encodings can significantly reduce model size and computational cost while preserving high-quality speech generation.

RACCOON: A Retrieval-Augmented Generation Approach for Location Coordinate Capture from News Articles

Jan 20, 2025

Abstract:Geocoding involves automatic extraction of location coordinates of incidents reported in news articles, and can be used for epidemic intelligence or disaster management. This paper introduces Retrieval-Augmented Coordinate Capture Of Online News articles (RACCOON), an open-source geocoding approach that extracts geolocations from news articles. RACCOON uses a retrieval-augmented generation (RAG) approach where candidate locations and associated information are retrieved in the form of context from a location database, and a prompt containing the retrieved context, location mentions and news articles is fed to an LLM to generate the location coordinates. Our evaluation on three datasets, two underlying LLMs, three baselines and several ablation tests based on the components of RACCOON demonstrates the utility of RACCOON. To the best of our knowledge, RACCOON is the first RAG-based approach for geocoding using pre-trained LLMs.

Local Differential Privacy for Smart Meter Data Sharing

Nov 08, 2023

Abstract:Energy disaggregation techniques, which use smart meter data to infer appliance energy usage, can provide consumers and energy companies with valuable insights into energy management. However, these techniques also present privacy risks, such as the potential for behavioral profiling. Local differential privacy (LDP) methods provide strong privacy guarantees with high efficiency in addressing privacy concerns. However, existing LDP methods focus on protecting aggregated energy consumption data rather than individual appliances. Furthermore, these methods do not consider the fact that smart meter data are a form of streaming data, and their processing methods should account for time windows. In this paper, we propose a novel LDP approach (named LDP-SmartEnergy) that utilizes randomized response techniques with sliding windows to facilitate the sharing of appliance-level energy consumption data over time while not revealing individual users' appliance usage patterns. Our evaluations show that LDP-SmartEnergy runs efficiently compared to baseline methods. The results also demonstrate that our solution strikes a balance between protecting privacy and maintaining the utility of data for effective analysis.
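
For readers unfamiliar with randomized response, the following is a minimal sketch of the classic binary mechanism applied to an appliance on/off stream. It is not the LDP-SmartEnergy algorithm: the function names are hypothetical, and splitting the budget evenly across a window (so that any `window` consecutive readings together consume at most `epsilon`) is an assumption made here for illustration.

```python
import math
import random


def randomize_bit(bit: int, epsilon: float, rng: random.Random) -> int:
    """Report the true bit with probability e^eps / (1 + e^eps), else flip it."""
    p_truth = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return bit if rng.random() < p_truth else 1 - bit


def estimate_on_fraction(reports: list[int], epsilon: float) -> float:
    """Unbiased estimate of the true fraction of 1-bits from noisy reports."""
    p_truth = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    observed = sum(reports) / len(reports)
    # E[observed] = f * p + (1 - f) * (1 - p); solve for f.
    return (observed - (1.0 - p_truth)) / (2.0 * p_truth - 1.0)


def perturb_stream(bits: list[int], window: int, epsilon: float, rng: random.Random) -> list[int]:
    """Perturb an on/off stream, spending epsilon / window on each reading.

    Assumption for this sketch: an even budget split, so any `window`
    consecutive readings use at most `epsilon` by sequential composition.
    """
    per_reading = epsilon / window
    return [randomize_bit(b, per_reading, rng) for b in bits]
```

An analyst who collects many perturbed reports can call `estimate_on_fraction` with the same per-reading budget to recover an aggregate usage rate without learning any individual reading with certainty.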

Global Convolutional Neural Processes

Sep 02, 2021

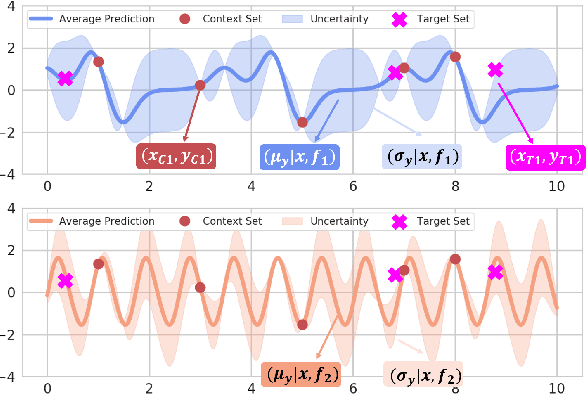

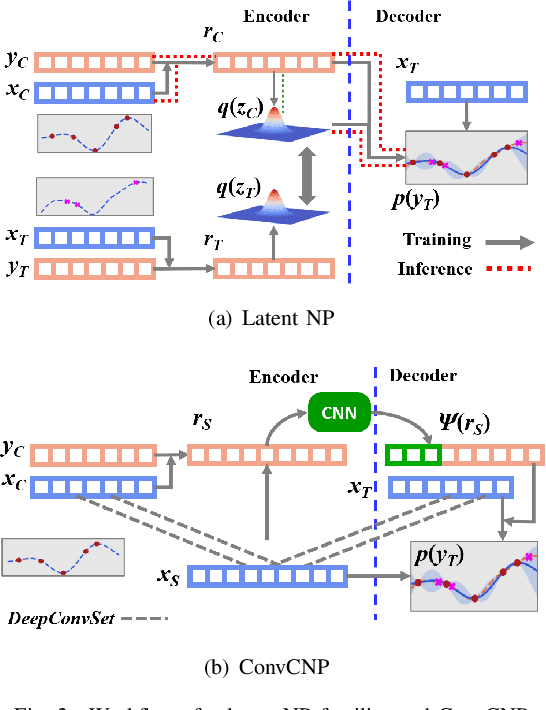

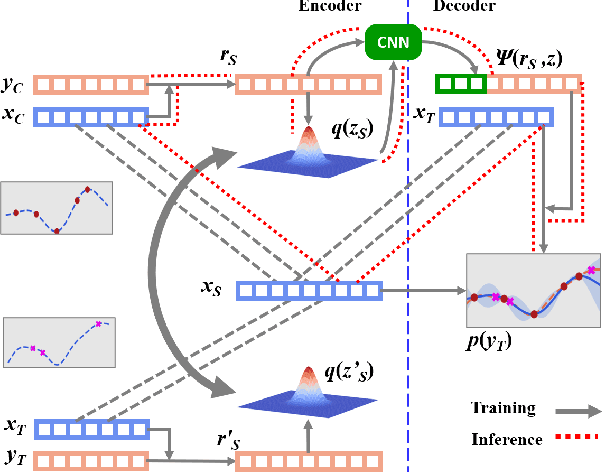

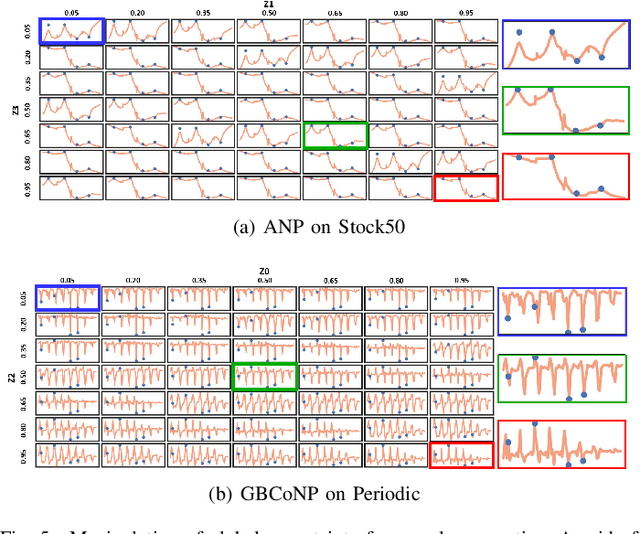

Abstract:The ability to deal with uncertainty in machine learning models has become as crucial as, if not more crucial than, their predictive ability itself. For instance, during the pandemic, governmental policies and personal decisions are constantly made around uncertainties. Targeting this, Neural Process Families (NPFs) have recently shone a light on prediction with uncertainties by bridging Gaussian processes and neural networks. The latent neural process, a member of the NPF, is believed to be capable of modelling the uncertainty on certain points (local uncertainty) as well as the general function priors (global uncertainties). Nonetheless, some critical questions remain unresolved, such as a formal definition of global uncertainties, the causality behind global uncertainties, and the manipulation of global uncertainties for generative models. To this end, we build a new member, the GloBal Convolutional Neural Process (GBCoNP), which achieves the SOTA log-likelihood in latent NPFs. It designs a global uncertainty representation p(z), which is an aggregation on a discretized input space. The causal effect between the degree of global uncertainty and the intra-task diversity is discussed. The learnt prior is analyzed on a variety of scenarios, including 1D, 2D, and a newly proposed spatial-temporal COVID dataset. Our manipulation of the global uncertainty not only generates the desired samples to tackle few-shot learning, but also enables the probability evaluation on the functional priors.

Simeon -- Secure Federated Machine Learning Through Iterative Filtering

Mar 13, 2021

Abstract:Federated learning enables a global machine learning model to be trained collaboratively by distributed, mutually non-trusting learning agents who desire to maintain the privacy of their training data and their hardware. A global model is distributed to clients, who perform training, and submit their newly-trained model to be aggregated into a superior model. However, federated learning systems are vulnerable to interference from malicious learning agents who may desire to prevent training or induce targeted misclassification in the resulting global model. A class of Byzantine-tolerant aggregation algorithms has emerged, offering varying degrees of robustness against these attacks, often with the caveat that the number of attackers is bounded by some quantity known prior to training. This paper presents Simeon: a novel approach to aggregation that applies a reputation-based iterative filtering technique to achieve robustness even in the presence of attackers who can exhibit arbitrary behaviour. We compare Simeon to state-of-the-art aggregation techniques and find that Simeon achieves comparable or superior robustness to a variety of attacks. Notably, we show that Simeon is tolerant to sybil attacks, where other algorithms are not, presenting a key advantage of our approach.
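
The general idea of iterative filtering can be sketched as alternating between a weighted average of client updates and a re-weighting of clients by how close they are to that average. The code below is a generic illustration, not Simeon itself: the function name, the reciprocal-distance weighting rule, and the `eps` smoothing constant are all assumptions, and the abstract does not specify Simeon's reputation function.

```python
def iterative_filtering(updates: list[list[float]], iterations: int = 20, eps: float = 1e-6):
    """Aggregate client updates with reciprocal-distance iterative filtering.

    updates: one equal-length parameter vector per client.
    Returns (aggregate, weights), where weights act as reputation scores.
    """
    n, dim = len(updates), len(updates[0])
    weights = [1.0 / n] * n  # start by trusting every client equally

    def weighted_mean(ws):
        return [sum(w * u[j] for w, u in zip(ws, updates)) for j in range(dim)]

    for _ in range(iterations):
        estimate = weighted_mean(weights)
        # Squared Euclidean distance of each client's update from the estimate.
        dists = [sum((u[j] - estimate[j]) ** 2 for j in range(dim)) for u in updates]
        # Clients far from the consensus receive low reputation.
        raw = [1.0 / (d + eps) for d in dists]
        total = sum(raw)
        weights = [r / total for r in raw]

    return weighted_mean(weights), weights
```

With a reciprocal rule like this one, weight tends to concentrate on the clients nearest the consensus, so an update that sits far from the honest cluster contributes almost nothing to the aggregate after a few rounds.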

Architectural Patterns for the Design of Federated Learning Systems

Jan 07, 2021

Abstract:Federated learning has received fast-growing interest from academia and industry to tackle the challenges of data hungriness and privacy in machine learning. A federated learning system can be viewed as a large-scale distributed system with different components and stakeholders as numerous client devices participate in federated learning. Designing a federated learning system requires software system design thinking apart from machine learning knowledge. Although much effort has been put into federated learning from the machine learning technique aspects, the software architecture design concerns in building federated learning systems have been largely ignored. Therefore, in this paper, we present a collection of architectural patterns to deal with the design challenges of federated learning systems. Architectural patterns present reusable solutions to a commonly occurring problem within a given context during software architecture design. The presented patterns are based on the results of a systematic literature review and include three client management patterns, four model management patterns, three model training patterns, and four model aggregation patterns. The patterns are associated with particular state transitions in a federated learning model lifecycle, serving as guidance for effective use of the patterns in the design of federated learning systems.
