Haowei Lou

ParaMETA: Towards Learning Disentangled Paralinguistic Speaking Styles Representations from Speech

Jan 18, 2026

Abstract:Learning representative embeddings for different types of speaking styles, such as emotion, age, and gender, is critical for both recognition tasks (e.g., cognitive computing and human-computer interaction) and generative tasks (e.g., style-controllable speech generation). In this work, we introduce ParaMETA, a unified and flexible framework for learning and controlling speaking styles directly from speech. Unlike existing methods that rely on single-task models or cross-modal alignment, ParaMETA learns disentangled, task-specific embeddings by projecting speech into dedicated subspaces for each type of style. This design reduces inter-task interference, mitigates negative transfer, and allows a single model to handle multiple paralinguistic tasks such as emotion, gender, age, and language classification. Beyond recognition, ParaMETA enables fine-grained style control in Text-To-Speech (TTS) generative models. It supports both speech- and text-based prompting and allows users to modify one speaking style while preserving others. Extensive experiments demonstrate that ParaMETA outperforms strong baselines in classification accuracy and generates more natural and expressive speech, while maintaining a lightweight and efficient model suitable for real-world applications.

Generalized Multilingual Text-to-Speech Generation with Language-Aware Style Adaptation

Apr 11, 2025

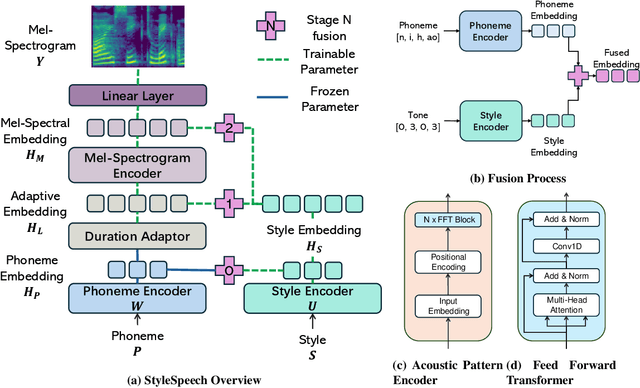

Abstract:Text-to-Speech (TTS) models can generate natural, human-like speech across multiple languages by transforming phonemes into waveforms. However, multilingual TTS remains challenging due to discrepancies in phoneme vocabularies and variations in prosody and speaking style across languages. Existing approaches either train separate models for each language, which achieve high performance at the cost of increased computational resources, or use a unified model for multiple languages that struggles to capture fine-grained, language-specific style variations. In this work, we propose LanStyleTTS, a non-autoregressive, language-aware style adaptive TTS framework that standardizes phoneme representations and enables fine-grained, phoneme-level style control across languages. This design supports a unified multilingual TTS model capable of producing accurate and high-quality speech without the need to train language-specific models. We evaluate LanStyleTTS by integrating it with several state-of-the-art non-autoregressive TTS architectures. Results show consistent performance improvements across different model backbones. Furthermore, we investigate a range of acoustic feature representations, including mel-spectrograms and autoencoder-derived latent features. Our experiments demonstrate that latent encodings can significantly reduce model size and computational cost while preserving high-quality speech generation.

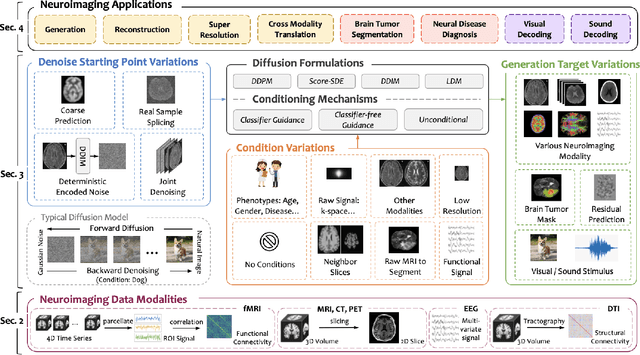

Diffusion Models for Computational Neuroimaging: A Survey

Feb 10, 2025

Abstract:Computational neuroimaging involves analyzing brain images or signals to provide mechanistic insights and predictive tools for human cognition and behavior. While diffusion models have shown stability and high-quality generation in natural images, there is increasing interest in adapting them to analyze brain data for various neurological tasks such as data enhancement, disease diagnosis, and brain decoding. This survey provides an overview of recent efforts to integrate diffusion models into computational neuroimaging. We begin by introducing the common neuroimaging data modalities, followed by the diffusion formulations and conditioning mechanisms. We then discuss how variations in the denoising starting point, condition input, and generation target of diffusion models have been developed to enhance specific neuroimaging tasks. For a comprehensive overview of the ongoing research, we provide a publicly available repository at https://github.com/JoeZhao527/dm4neuro.

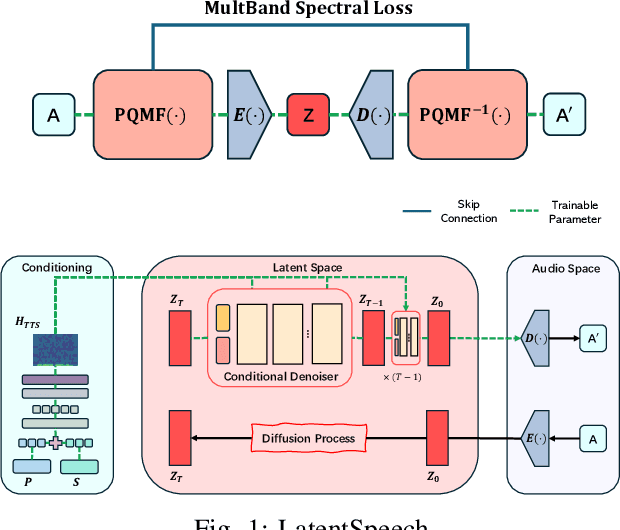

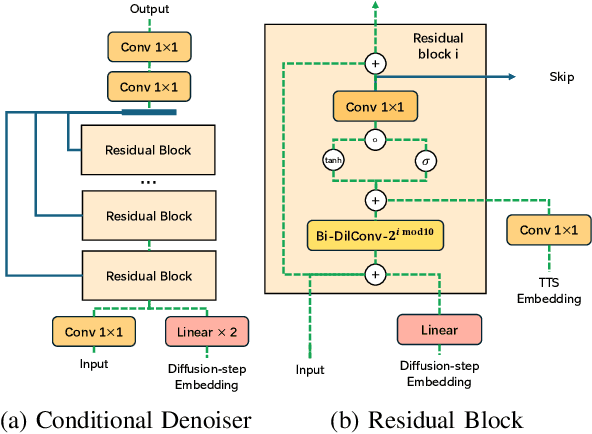

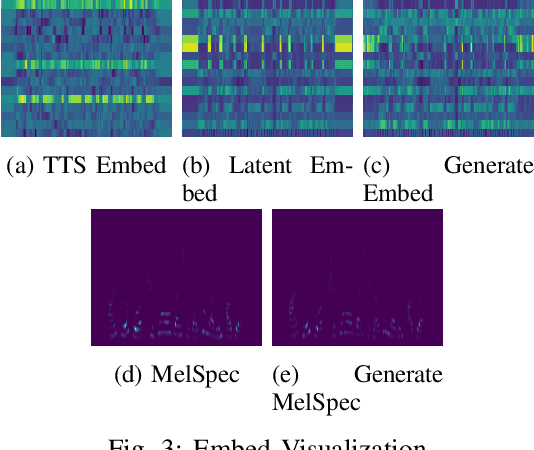

LatentSpeech: Latent Diffusion for Text-To-Speech Generation

Dec 11, 2024

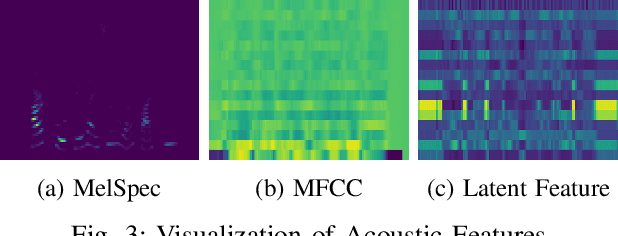

Abstract:Diffusion-based Generative AI has gained significant attention for its superior performance over other generative techniques like Generative Adversarial Networks and Variational Autoencoders. While it has achieved notable advancements in fields such as computer vision and natural language processing, its application in speech generation remains under-explored. Mainstream Text-to-Speech systems primarily map outputs to Mel-Spectrograms in the spectral space, leading to high computational loads due to the sparsity of MelSpecs. To address these limitations, we propose LatentSpeech, a novel TTS generation approach utilizing latent diffusion models. By using latent embeddings as the intermediate representation, LatentSpeech reduces the target dimension to 5% of what is required for MelSpecs, simplifying the processing for the TTS encoder and vocoder and enabling efficient high-quality speech generation. This study marks the first integration of latent diffusion models in TTS, enhancing the accuracy and naturalness of generated speech. Experimental results on benchmark datasets demonstrate that LatentSpeech achieves a 25% improvement in Word Error Rate and a 24% improvement in Mel Cepstral Distortion compared to existing models, with further improvements rising to 49.5% and 26%, respectively, with additional training data. These findings highlight the potential of LatentSpeech to advance the state-of-the-art in TTS technology.

Aligner-Guided Training Paradigm: Advancing Text-to-Speech Models with Aligner Guided Duration

Dec 11, 2024

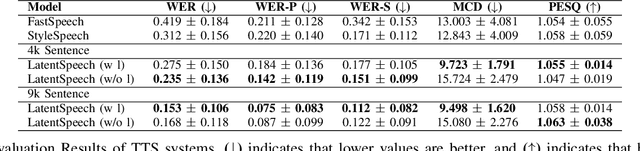

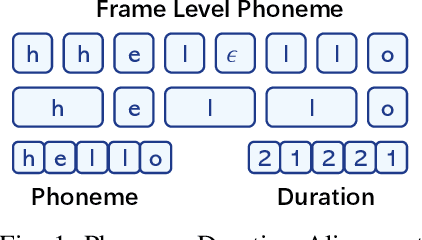

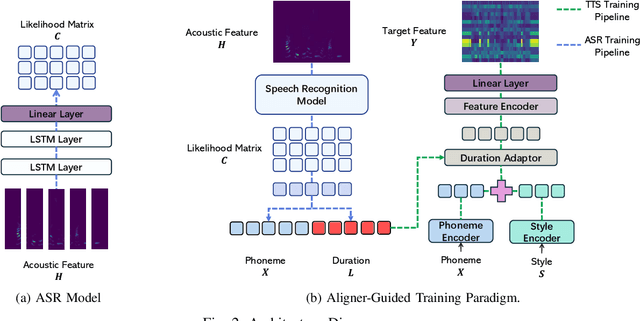

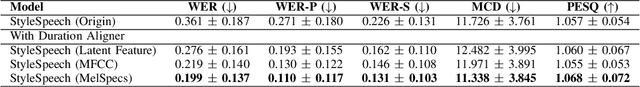

Abstract:Recent advancements in text-to-speech (TTS) systems, such as FastSpeech and StyleSpeech, have significantly improved speech generation quality. However, these models often rely on durations generated by external tools like the Montreal Forced Aligner, which can be time-consuming and lack flexibility. The importance of accurate duration is often underestimated, despite its crucial role in achieving natural prosody and intelligibility. To address these limitations, we propose a novel Aligner-Guided Training Paradigm that prioritizes accurate duration labelling by training an aligner before the TTS model. This approach reduces dependence on external tools and enhances alignment accuracy. We further explore the impact of different acoustic features, including Mel-Spectrograms, MFCCs, and latent features, on TTS model performance. Our experimental results show that aligner-guided duration labelling can achieve up to a 16% improvement in word error rate and significantly enhance phoneme and tone alignment. These findings highlight the effectiveness of our approach in optimizing TTS systems for more natural and intelligible speech generation.
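
For readers unfamiliar with the metric quoted above, the following is a minimal sketch of how word error rate (WER) is commonly computed: the word-level edit distance between a reference transcript and a recognized transcript, divided by the number of reference words. The function names are hypothetical, the sample sentences are made up for illustration, and the abstract does not state whether its 16% figure is a relative or an absolute improvement, so `relative_improvement` shows only one possible reading.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference prefix
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # deleting all i reference words matches an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


def relative_improvement(baseline: float, improved: float) -> float:
    """Fractional reduction of an error metric relative to a baseline (assumed reading)."""
    return (baseline - improved) / baseline


if __name__ == "__main__":
    # Illustrative transcripts only; not data from the paper.
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

In TTS evaluation, the hypothesis is typically the output of a speech recognizer run on the synthesized audio, so a lower WER indicates more intelligible speech.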

StyleSpeech: Parameter-efficient Fine Tuning for Pre-trained Controllable Text-to-Speech

Aug 27, 2024

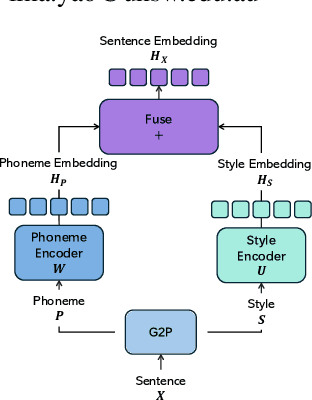

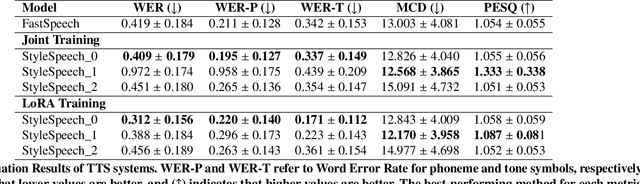

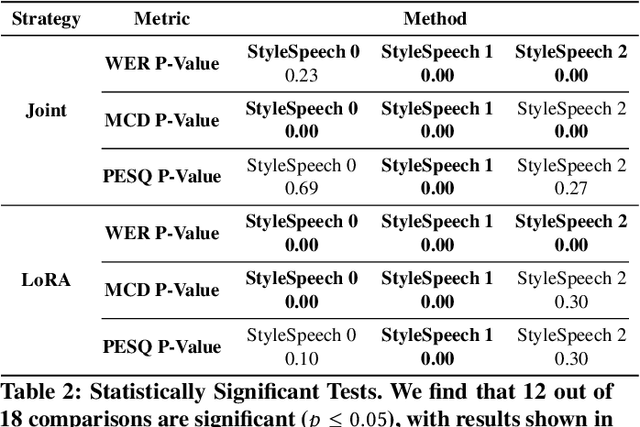

Abstract:This paper introduces StyleSpeech, a novel Text-to-Speech (TTS) system that enhances the naturalness and accuracy of synthesized speech. Building upon existing TTS technologies, StyleSpeech incorporates a unique Style Decorator structure that enables deep learning models to simultaneously learn style and phoneme features, improving adaptability and efficiency through the principles of Low-Rank Adaptation (LoRA). LoRA allows efficient adaptation of style features in pre-trained models. Additionally, we introduce a novel automatic evaluation metric, the LLM-Guided Mean Opinion Score (LLM-MOS), which employs large language models to offer an objective and robust protocol for automatically assessing TTS system performance. Extensive testing on benchmark datasets shows that our approach markedly outperforms existing state-of-the-art baseline methods in producing natural, accurate, and high-quality speech. These advancements not only push the boundaries of current TTS system capabilities, but also facilitate the application of TTS systems in more dynamic and specialized settings, such as interactive virtual assistants, adaptive audiobooks, and customized voices for gaming. Speech samples can be found at https://style-speech.vercel.app
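
As background for the LoRA principle mentioned above, here is a minimal sketch of the generic low-rank update: a frozen weight matrix `W` is adapted by adding a scaled product of two small trainable matrices, `W + (alpha / r) * B @ A`. This is the standard LoRA formulation, not the paper's Style Decorator, whose internals the abstract does not describe. All function names and the toy values are hypothetical, and plain Python lists are used so the sketch runs without dependencies.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A.

    w: frozen pre-trained weights, shape (d_out, d_in)
    a: trainable down-projection, shape (r, d_in)
    b: trainable up-projection, shape (d_out, r)
    """
    r = len(a)  # the adaptation rank
    scale = alpha / r
    delta = matmul(b, a)  # low-rank update, shape (d_out, d_in)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def lora_parameter_counts(d_out, d_in, r):
    """Return (full fine-tuning parameters, LoRA parameters) for one weight matrix."""
    return d_out * d_in, r * (d_out + d_in)


if __name__ == "__main__":
    w = [[1, 0, 0], [0, 1, 0]]   # toy frozen weights (d_out=2, d_in=3)
    a = [[1, 0, 1]]              # rank r=1
    b = [[1], [2]]
    print(lora_effective_weight(w, a, b, alpha=2))
    print(lora_parameter_counts(512, 512, 8))
```

Because only `A` and `B` are trained, the number of updated parameters grows with `r * (d_out + d_in)` rather than `d_out * d_in`, which is the source of the parameter efficiency the title refers to.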
