Hamed Ahmadi

Enhancing Open RAN Digital Twin Through Power Consumption Measurement

Jul 01, 2025

Abstract:The increasing demand for high-speed, ultra-reliable and low-latency communications in 5G and beyond networks has led to a significant increase in power consumption, particularly within the Radio Access Network (RAN). This growing energy demand raises operational and sustainability challenges for mobile network operators, requiring novel solutions to enhance energy efficiency while maintaining Quality of Service (QoS). 5G networks are evolving towards disaggregated, programmable, and intelligent architectures, with Open Radio Access Network (O-RAN), spearheaded by the O-RAN Alliance, enabling greater flexibility, interoperability, and cost-effectiveness. However, this disaggregated approach introduces new complexities, especially in terms of power consumption across different network components, including Open Radio Units (RUs), Open Distributed Units (DUs) and Open Central Units (CUs). Understanding the power efficiency of different O-RAN functional splits is crucial for optimising energy consumption and network sustainability. In this paper, we present a comprehensive measurement study of power consumption in RUs, DUs and CUs under varying network loads, specifically analysing the impact of Physical Resource Block (PRB) utilisation in Split 8 and Split 7.2b. The measurements were conducted on both software-defined radio (SDR)-based RUs and commercial indoor and outdoor RUs, as well as their corresponding DUs and CUs. By evaluating real-world hardware deployments under different operational conditions, this study provides empirical insights into the power efficiency of various O-RAN configurations. The results highlight that power consumption does not scale significantly with network load, suggesting that a large portion of energy consumption remains constant regardless of traffic demand.

An Explainable AI Framework for Dynamic Resource Management in Vehicular Network Slicing

Jun 13, 2025

Abstract:Effective resource management and network slicing are essential to meet the diverse service demands of vehicular networks, including Enhanced Mobile Broadband (eMBB) and Ultra-Reliable and Low-Latency Communications (URLLC). This paper introduces an Explainable Deep Reinforcement Learning (XRL) framework for dynamic network slicing and resource allocation in vehicular networks, built upon a near-real-time RAN intelligent controller. By integrating a feature-based approach that leverages Shapley values and an attention mechanism, we interpret and refine the decisions of our reinforcement learning agents, addressing key reliability challenges in vehicular communication systems. Simulation results demonstrate that our approach provides clear, real-time insights into the resource allocation process and achieves higher interpretability precision than a pure attention mechanism. Furthermore, the Quality of Service (QoS) satisfaction for URLLC services increased from 78.0% to 80.13%, while that for eMBB services improved from 71.44% to 73.21%.
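
As a rough illustration of the Shapley-value attribution the abstract refers to, the sketch below computes exact Shapley values for a tiny feature set. It is not the paper's framework: the feature names, the additive toy value function and the `shapley_values` helper are all assumptions made for illustration, and exact enumeration is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(features, value):
    """Exact Shapley values; `value` maps a frozenset of features to a number."""
    n = len(features)
    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(n):
            # Weight of every coalition of size k that excludes f.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                # Marginal contribution of f when it joins coalition s.
                total += weight * (value(s | {f}) - value(s))
        phi[f] = total
    return phi


# Hypothetical observation features of a slicing agent (illustrative only).
CONTRIB = {"urllc_queue": 0.5, "embb_queue": 0.25, "channel_quality": 0.25}


def toy_value(subset):
    """Toy stand-in for the agent's score when only `subset` is observed."""
    return sum(CONTRIB[f] for f in subset)


phi = shapley_values(list(CONTRIB), toy_value)
```

Because the toy value function is additive, each feature's Shapley value equals its own contribution, which makes the sketch easy to check by hand. A learned Q-function is not additive, and practical tools approximate these sums by sampling rather than enumerating every coalition.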

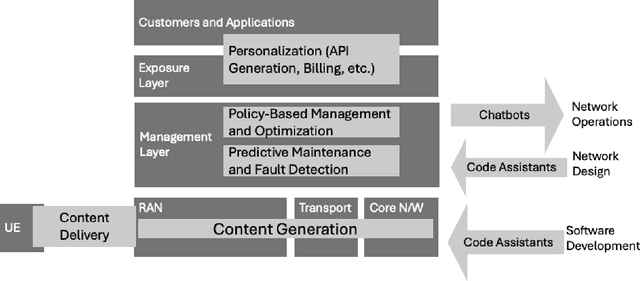

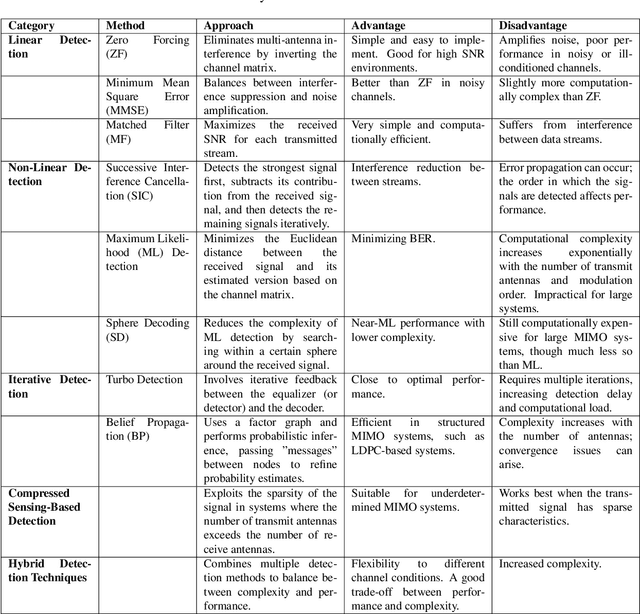

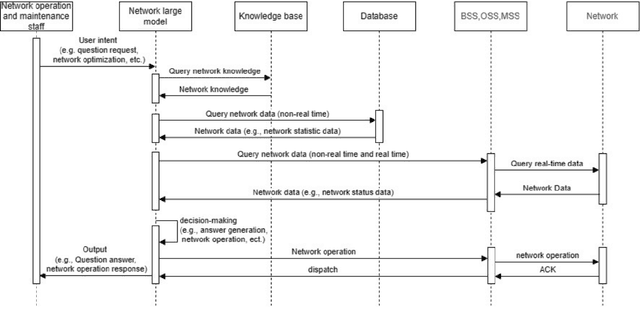

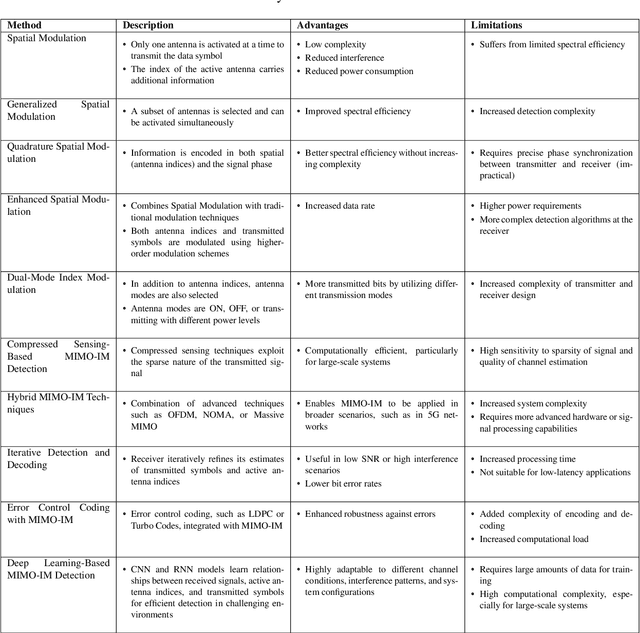

Large-Scale AI in Telecom: Charting the Roadmap for Innovation, Scalability, and Enhanced Digital Experiences

Mar 06, 2025

Abstract:This white paper discusses the role of large-scale AI in the telecommunications industry, with a specific focus on the potential of generative AI to revolutionize network functions and user experiences, especially in the context of 6G systems. It highlights the development and deployment of Large Telecom Models (LTMs), which are tailored AI models designed to address the complex challenges faced by modern telecom networks. The paper covers a wide range of topics, from the architecture and deployment strategies of LTMs to their applications in network management, resource allocation, and optimization. It also explores the regulatory, ethical, and standardization considerations for LTMs, offering insights into their future integration into telecom infrastructure. The goal is to provide a comprehensive roadmap for the adoption of LTMs to enhance scalability, performance, and user-centric innovation in telecom networks.

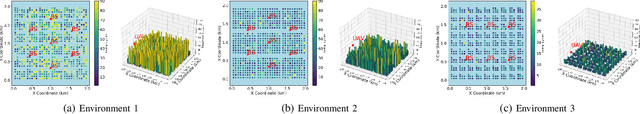

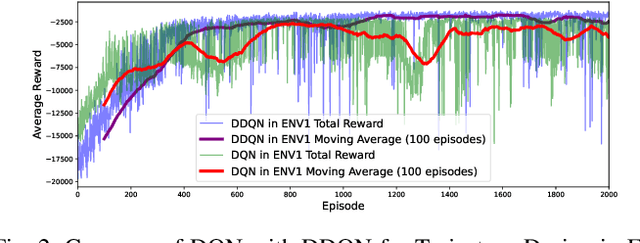

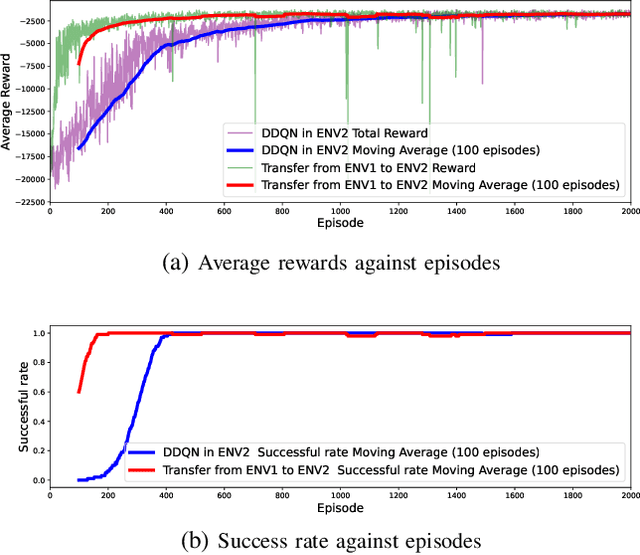

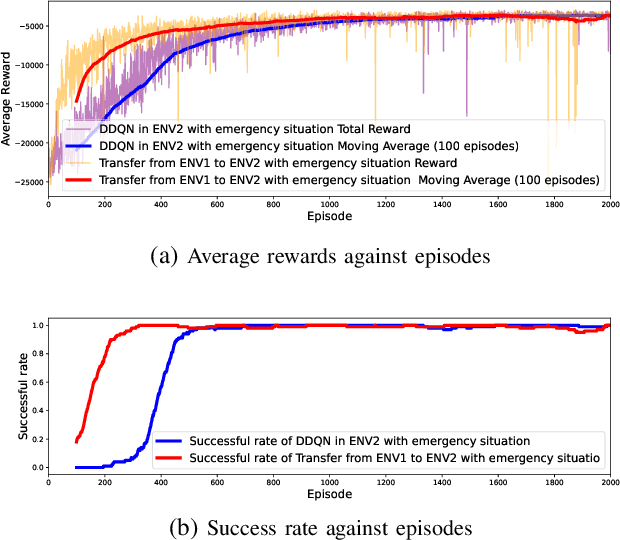

Energy Consumption Reduction for UAV Trajectory Training: A Transfer Learning Approach

Jan 20, 2025

Abstract:The advent of 6G technology demands flexible, scalable wireless architectures to support ultra-low latency, high connectivity, and high device density. The Open Radio Access Network (O-RAN) framework, with its open interfaces and virtualized functions, provides a promising foundation for such architectures. However, traditional fixed base stations alone are not sufficient to fully capitalize on the benefits of O-RAN due to their limited flexibility in responding to dynamic network demands. The integration of Unmanned Aerial Vehicles (UAVs) as mobile RUs within the O-RAN architecture offers a solution by leveraging the flexibility of drones to dynamically extend coverage. However, UAVs operating in diverse environments require frequent retraining, leading to significant energy waste. We propose a transfer learning approach based on a Dueling Double Deep Q-Network (DDQN) with multi-step learning, which significantly reduces the training time and energy consumption required for UAVs to adapt to new environments. We designed simulation environments and conducted ray tracing experiments using Wireless InSite with real-world map data. In the two simulated environments, training energy consumption was reduced by 30.52% and 58.51%, respectively. Furthermore, tests on real-world maps of Ottawa and Rosslyn showed energy reductions of 44.85% and 36.97%, respectively.

Optimized Resource Allocation for Cloud-Native 6G Networks: Zero-Touch ML Models in Microservices-based VNF Deployments

Oct 09, 2024

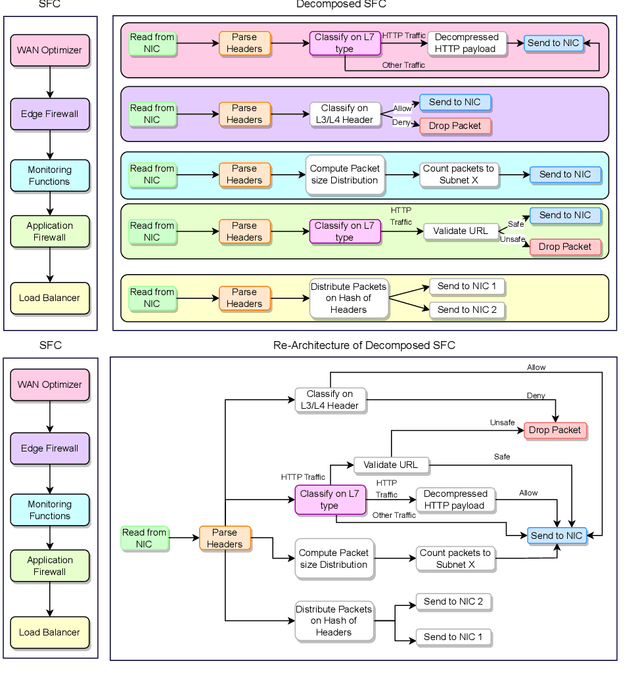

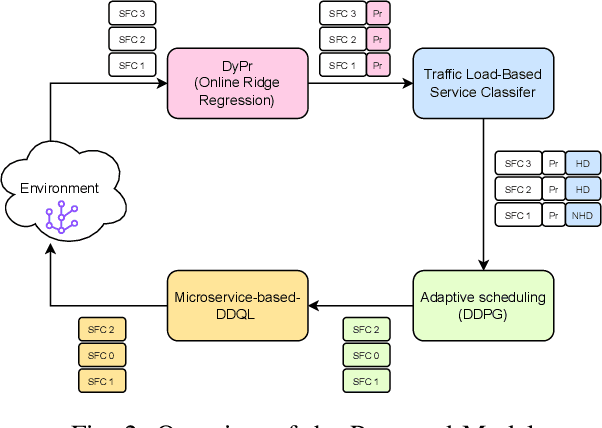

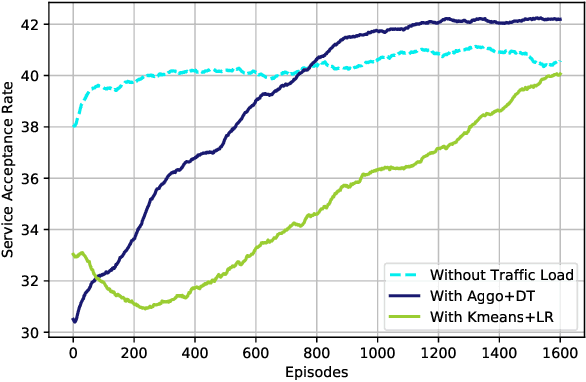

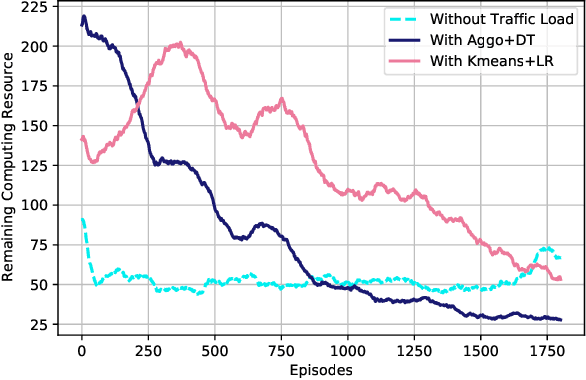

Abstract:6G, the next generation of mobile networks, is set to offer even higher data rates, ultra-reliability, and lower latency than 5G. New 6G services will increase the load and dynamism of the network. Network Function Virtualization (NFV) helps to handle this increased load and dynamism by eliminating hardware dependency. It aims to boost the flexibility and scalability of network deployment services by separating network functions from their specific proprietary forms so that they can run as virtual network functions (VNFs) on commodity hardware. It is essential to design an NFV orchestration and management framework to support these services. However, deploying bulky monolithic VNFs on the network is difficult, especially when underlying resources are scarce, resulting in ineffective resource management. To address this, microservices-based NFV approaches are proposed. In this approach, monolithic VNFs are decomposed into micro VNFs, increasing the likelihood of their successful placement and resulting in more efficient resource management. This article discusses the proposed framework for resource allocation for microservices-based services to provide end-to-end Quality of Service (QoS) using the Double Deep Q Learning (DDQL) approach. Furthermore, to enhance this resource allocation approach, we discuss and address two crucial sub-problems: the need for a dynamic priority technique and the presence of the low-priority starvation problem. Using the Deep Deterministic Policy Gradient (DDPG) model, an Adaptive Scheduling model is developed that effectively mitigates the starvation problem. Additionally, the impact of incorporating traffic load considerations into deployment and scheduling is thoroughly investigated.

Enhancing Energy Efficiency in O-RAN Through Intelligent xApps Deployment

May 16, 2024

Abstract:The proliferation of 5G technology presents an unprecedented challenge in managing the energy consumption of densely deployed network infrastructures, particularly Base Stations (BSs), which account for the majority of power usage in mobile networks. The O-RAN architecture, with its emphasis on open and intelligent design, offers a promising framework to address the Energy Efficiency (EE) demands of modern telecommunication systems. This paper introduces two xApps designed for the O-RAN architecture to optimize power savings without compromising the Quality of Service (QoS). Utilizing a commercial RAN Intelligent Controller (RIC) simulator, we demonstrate the effectiveness of our proposed xApps through extensive simulations that reflect real-world operational conditions. Our results show a significant reduction in power consumption, achieving up to 50% power savings with a minimal number of User Equipments (UEs), by intelligently managing the operational state of Radio Cards (RCs), particularly through switching between active and sleep modes based on network resource block usage conditions.
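
To make the idea of load-driven sleep modes concrete, here is a minimal sketch of a rule that decides how many Radio Cards stay active for a given resource block usage. It is not the logic of the paper's xApps: the function name, the per-card PRB capacity and the headroom margin are all assumptions chosen for illustration.

```python
def active_radio_cards(prb_used, prb_per_card, total_cards, headroom_pct=20):
    """Return how many radio cards to keep active; the others may sleep.

    All parameters are hypothetical: `prb_per_card` is the number of PRBs
    one card can serve and `headroom_pct` is a safety margin on the load.
    """
    if prb_used < 0 or prb_per_card <= 0 or total_cards < 1:
        raise ValueError("invalid load or capacity")
    demand = prb_used * (100 + headroom_pct)
    capacity = 100 * prb_per_card
    needed = -(-demand // capacity)  # integer ceiling division
    # Keep at least one card awake so the cell stays reachable.
    return max(1, min(total_cards, needed))


# Example: 4 cards of 100 PRBs each, 100 PRBs currently in use.
awake = active_radio_cards(prb_used=100, prb_per_card=100, total_cards=4)
asleep = 4 - awake
```

With these assumed numbers, two cards stay active and two can sleep. A real controller would also need hysteresis so that cards do not toggle on every small load change.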

Continuous Transfer Learning for UAV Communication-aware Trajectory Design

May 16, 2024

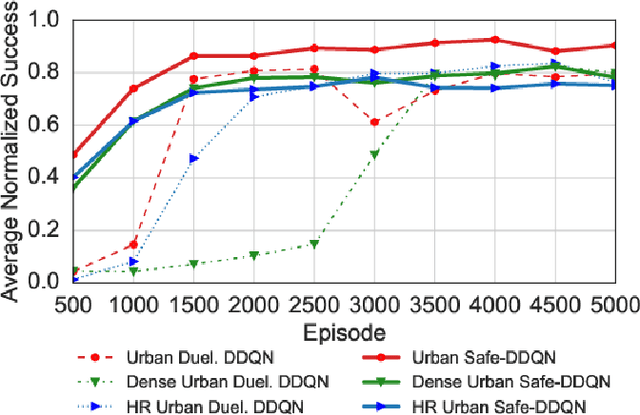

Abstract:Deep Reinforcement Learning (DRL) emerges as a prime solution for Unmanned Aerial Vehicle (UAV) trajectory planning, offering proficiency in navigating high-dimensional spaces, adaptability to dynamic environments, and the ability to make sequential decisions based on real-time feedback. Despite these advantages, the use of DRL for UAV trajectory planning requires significant retraining when the UAV is confronted with a new environment, resulting in wasted resources and time. Therefore, it is essential to develop techniques that can reduce the overhead of retraining DRL models, enabling them to adapt to constantly changing environments. This paper presents a novel method to reduce the need for extensive retraining using a double deep Q network (DDQN) model as a pretrained base, which is subsequently adapted to different urban environments through Continuous Transfer Learning (CTL). Our method involves transferring the learned model weights and adapting the learning parameters, including the learning and exploration rates, to suit each new environment's specific characteristics. The effectiveness of our approach is validated in three scenarios, each with different levels of similarity. CTL significantly improves learning speed and success rates compared to DDQN models initiated from scratch. Transfer Learning (TL) improved stability and accelerated convergence by 65% in similar environments, and facilitated 35% faster adaptation in dissimilar settings.

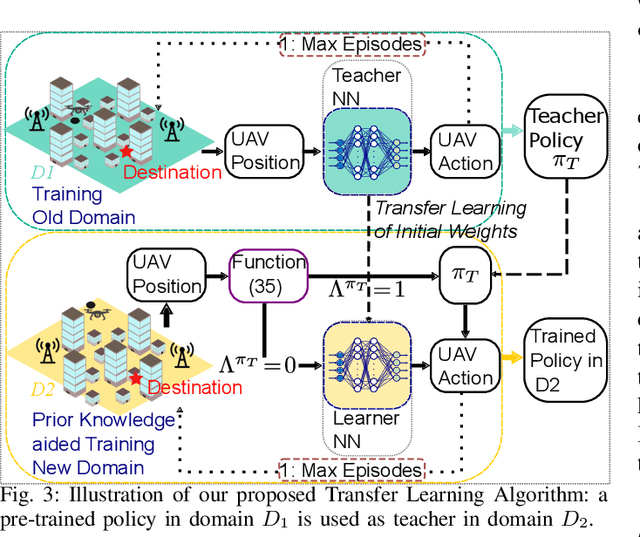

A Transfer Learning Approach for UAV Path Design with Connectivity Outage Constraint

Nov 07, 2022

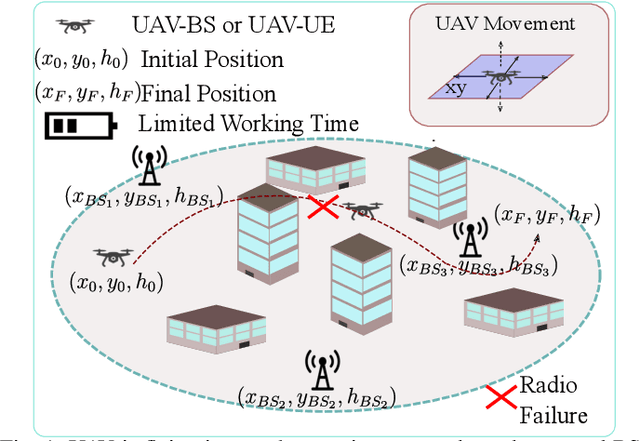

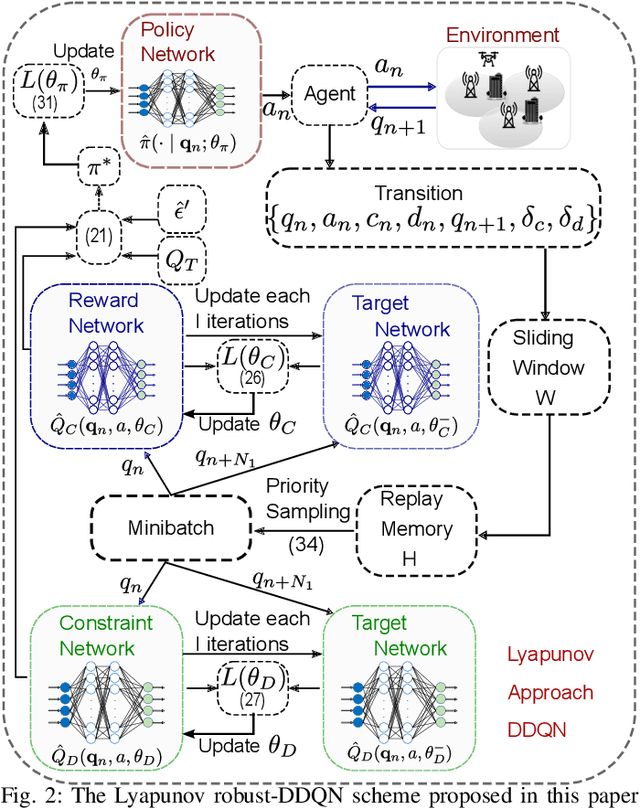

Abstract:Connectivity-aware path design is crucial to the effective deployment of autonomous Unmanned Aerial Vehicles (UAVs). Recently, Reinforcement Learning (RL) algorithms have become the popular approach to solving this type of complex problem, but RL algorithms suffer from slow convergence. In this paper, we propose a Transfer Learning (TL) approach, where we use a teacher policy previously trained in an old domain to boost the path learning of the agent in the new domain. As the exploration processes and the training continue, the agent refines the path design in the new domain based on the subsequent interactions with the environment. We evaluate our approach considering an old domain at sub-6 GHz and a new domain at millimeter Wave (mmWave). The teacher path policy, previously trained at sub-6 GHz, is the solution to a connectivity-aware path problem that we formulate as a constrained Markov Decision Process (CMDP). We employ a Lyapunov-based model-free Deep Q-Network (DQN) to solve the path design at sub-6 GHz that guarantees connectivity constraint satisfaction. We empirically demonstrate the effectiveness of our approach for different urban environment scenarios. The results demonstrate that our proposed approach is capable of reducing the training time considerably at mmWave.

Deep Reinforcement Learning for Dynamic Band Switch in Cellular-Connected UAV

Aug 26, 2021

Abstract:The choice of the transmitting frequency to provide cellular-connected Unmanned Aerial Vehicles (UAVs) with reliable connectivity and mobility support introduces several challenges. Conventional sub-6 GHz networks are optimized for ground users (UEs). Operating at the millimeter Wave (mmWave) band would provide high-capacity but highly intermittent links. To reach the destination while minimizing a weighted function of traveling time and number of radio failures, we propose in this paper a UAV joint trajectory and band switch approach. By leveraging Double Deep Q-Learning, we develop two different approaches to learn a trajectory besides managing the band switch. A first blind approach switches the band along the trajectory anytime the UAV-UE throughput is below a predefined threshold. In addition, we propose a smart approach for simultaneous learning-based path planning of UAV and band switch. The two approaches are compared with an optimal band switch strategy in terms of radio failure and band switches for different thresholds. Results reveal that the smart approach is able, in a high-threshold regime, to reduce the number of radio failures and band switches while reaching the desired destination.
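
The blind rule described in the abstract (switch band whenever throughput drops below a threshold) and the weighted objective can be sketched as follows. This is only an illustration under stated assumptions: the band labels, the units, the function names and the convex-combination form of the weighting are not taken from the paper.

```python
BANDS = ("sub6", "mmwave")  # assumed labels for the two bands


def blind_band_switch(band, throughput_mbps, threshold_mbps):
    """Switch to the other band whenever throughput falls below the threshold."""
    if band not in BANDS:
        raise ValueError(f"unknown band: {band}")
    if throughput_mbps < threshold_mbps:
        return "mmwave" if band == "sub6" else "sub6"
    return band


def weighted_cost(travel_time, radio_failures, weight=0.5):
    """Assumed form of the objective: a convex combination of both terms."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return weight * travel_time + (1.0 - weight) * radio_failures


# Walk a short, made-up throughput trace and count the band switches.
trace = [40.0, 12.0, 55.0, 8.0]
band, switches = "sub6", 0
for throughput in trace:
    new_band = blind_band_switch(band, throughput, threshold_mbps=20.0)
    switches += new_band != band
    band = new_band
```

On this made-up trace the rule switches twice and ends back on the sub-6 GHz band. The smart approach in the abstract instead learns when to switch jointly with the trajectory, which this sketch does not attempt.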
