Chenrui Sun

Energy Consumption Reduction for UAV Trajectory Training: A Transfer Learning Approach

Jan 20, 2025

Abstract:The advent of 6G technology demands flexible, scalable wireless architectures to support ultra-low latency, high connectivity, and high device density. The Open Radio Access Network (O-RAN) framework, with its open interfaces and virtualized functions, provides a promising foundation for such architectures. However, traditional fixed base stations alone are not sufficient to fully capitalize on the benefits of O-RAN due to their limited flexibility in responding to dynamic network demands. The integration of Unmanned Aerial Vehicles (UAVs) as mobile Radio Units (RUs) within the O-RAN architecture offers a solution by leveraging the flexibility of drones to dynamically extend coverage. However, UAVs operating in diverse environments require frequent retraining, leading to significant energy waste. We propose a transfer learning approach based on a Dueling Double Deep Q-Network (DDQN) with multi-step learning, which significantly reduces the training time and energy consumption required for UAVs to adapt to new environments. We designed simulation environments and conducted ray tracing experiments using Wireless InSite with real-world map data. In the two simulated environments, training energy consumption was reduced by 30.52% and 58.51%, respectively. Furthermore, tests on real-world maps of Ottawa and Rosslyn showed energy reductions of 44.85% and 36.97%, respectively.
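
The abstract names a Double DQN with multi-step learning but gives no formulas. As a rough illustration only, the sketch below shows the textbook form of an n-step target with Double-DQN action selection; the function name `n_step_double_dqn_target` and its arguments are hypothetical and are not taken from the paper, and the dueling network head is not shown.

```python
def n_step_double_dqn_target(rewards, gamma, q_online_next, q_target_next, done):
    """Textbook n-step Double-DQN target (illustrative, not the authors' code).

    rewards        -- the n rewards observed after the current state
    gamma          -- discount factor
    q_online_next  -- per-action Q-values from the online network at the state
                      reached after n steps
    q_target_next  -- per-action Q-values from the target network at that state
    done           -- True if the episode ended within the n steps
    """
    # Discounted sum of the n observed rewards.
    n_step_return = sum((gamma ** k) * r for k, r in enumerate(rewards))
    if done:
        return n_step_return
    # Double DQN: the online network picks the action,
    # the target network evaluates it.
    best_action = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    return n_step_return + (gamma ** len(rewards)) * q_target_next[best_action]
```

Separating action selection from action evaluation is what distinguishes the Double-DQN target from the plain DQN target, which takes the maximum of the target network's own values.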

Continuous Transfer Learning for UAV Communication-aware Trajectory Design

May 16, 2024

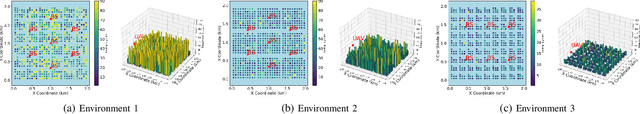

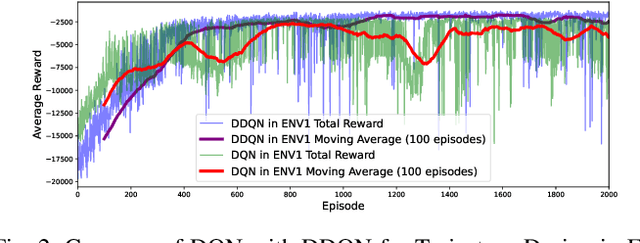

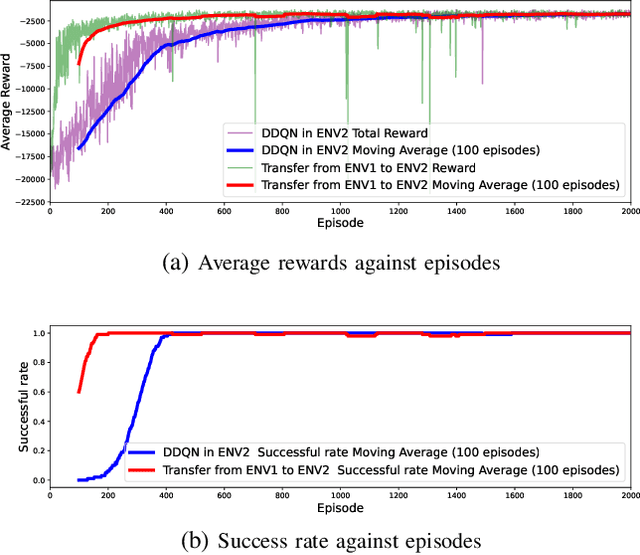

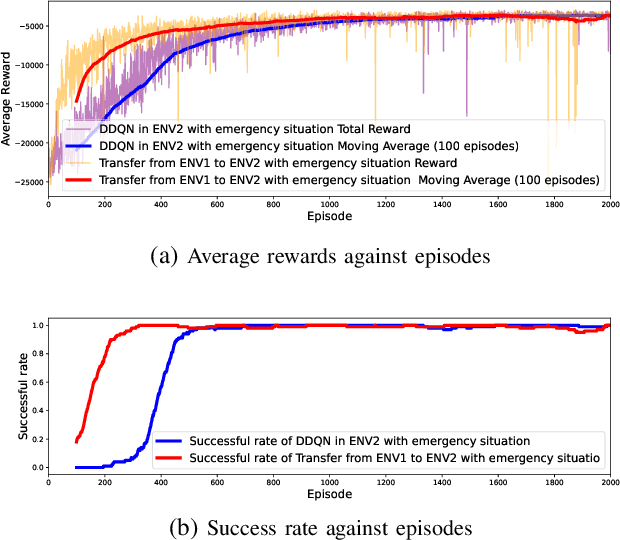

Abstract:Deep Reinforcement Learning (DRL) emerges as a prime solution for Unmanned Aerial Vehicle (UAV) trajectory planning, offering proficiency in navigating high-dimensional spaces, adaptability to dynamic environments, and the ability to make sequential decisions based on real-time feedback. Despite these advantages, the use of DRL for UAV trajectory planning requires significant retraining when the UAV is confronted with a new environment, resulting in wasted resources and time. Therefore, it is essential to develop techniques that can reduce the overhead of retraining DRL models, enabling them to adapt to constantly changing environments. This paper presents a novel method to reduce the need for extensive retraining using a double deep Q network (DDQN) model as a pretrained base, which is subsequently adapted to different urban environments through Continuous Transfer Learning (CTL). Our method involves transferring the learned model weights and adapting the learning parameters, including the learning and exploration rates, to suit each new environment's specific characteristics. The effectiveness of our approach is validated in three scenarios, each with a different level of similarity. CTL significantly improves learning speed and success rates compared to DDQN models initiated from scratch. In similar environments, Transfer Learning (TL) improved stability and accelerated convergence by 65%; in dissimilar settings, it facilitated 35% faster adaptation.
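
The abstract describes transferring learned weights and then adapting the learning and exploration rates to the new environment, without stating how those rates are chosen. The sketch below is one possible way to express that idea. The `similarity` score, the scaling rule, the floor values, and the function name `warm_start` are all assumptions made for illustration, not the paper's method.

```python
import copy


def warm_start(pretrained_weights, similarity, base_lr=1e-3, base_epsilon=1.0):
    """Hypothetical warm start for a new environment (illustrative only).

    pretrained_weights -- any nested structure of weights from the base model
    similarity         -- assumed score in [0, 1]; 1 means the new environment
                          closely resembles the one used for pretraining
    Returns (weights, learning_rate, exploration_rate).
    """
    if not 0.0 <= similarity <= 1.0:
        raise ValueError("similarity must lie in [0, 1]")
    # Copy so that fine-tuning does not overwrite the pretrained base.
    weights = copy.deepcopy(pretrained_weights)
    dissimilarity = 1.0 - similarity
    # Assumed rule: the more familiar the environment, the smaller the
    # updates and the less exploration are needed. Floors keep both positive.
    learning_rate = base_lr * max(dissimilarity, 0.1)
    exploration_rate = base_epsilon * max(dissimilarity, 0.05)
    return weights, learning_rate, exploration_rate
```

For example, `warm_start(weights, similarity=0.5)` would halve both base rates, while a from-scratch baseline would keep the base rates and start from freshly initialized weights.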

Enhancing Energy Efficiency in O-RAN Through Intelligent xApps Deployment

May 16, 2024

Abstract:The proliferation of 5G technology presents an unprecedented challenge in managing the energy consumption of densely deployed network infrastructures, particularly Base Stations (BSs), which account for the majority of power usage in mobile networks. The O-RAN architecture, with its emphasis on open and intelligent design, offers a promising framework to address the Energy Efficiency (EE) demands of modern telecommunication systems. This paper introduces two xApps designed for the O-RAN architecture to optimize power savings without compromising the Quality of Service (QoS). Utilizing a commercial RAN Intelligent Controller (RIC) simulator, we demonstrate the effectiveness of our proposed xApps through extensive simulations that reflect real-world operational conditions. Our results show a significant reduction in power consumption, achieving up to 50% power savings with a minimal number of User Equipments (UEs), by intelligently managing the operational state of Radio Cards (RCs), particularly through switching between active and sleep modes based on network resource block usage conditions.
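
The abstract states that Radio Cards are switched between active and sleep modes according to resource block usage, but does not give the decision rule. A minimal sketch of such a rule follows. The thresholds, the use of two separate thresholds (hysteresis), and the function name `next_radio_card_state` are assumptions for illustration and do not reflect the xApps' actual logic or any RIC API.

```python
def next_radio_card_state(state, prb_utilization, sleep_below=0.2, wake_above=0.5):
    """Hypothetical sleep/active decision for one Radio Card.

    state           -- current state, either "active" or "sleep"
    prb_utilization -- fraction of resource blocks in use, in [0, 1]
    sleep_below     -- assumed threshold under which an active card sleeps
    wake_above      -- assumed threshold over which a sleeping card wakes
    """
    if state not in ("active", "sleep"):
        raise ValueError("state must be 'active' or 'sleep'")
    if state == "active" and prb_utilization < sleep_below:
        return "sleep"
    if state == "sleep" and prb_utilization > wake_above:
        return "active"
    # Between the two thresholds the card keeps its current state,
    # which avoids rapid toggling when load hovers near one value.
    return state
```

Using two thresholds rather than one is a common way to prevent a card from oscillating between modes; whether the proposed xApps do this is not stated in the abstract.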
