Berk Canberk

JamShield: A Machine Learning Detection System for Over-the-Air Jamming Attacks

Jul 15, 2025

Abstract:Wireless networks are vulnerable to jamming attacks due to the shared communication medium, which can severely degrade performance and disrupt services. Despite extensive research, current jamming detection methods often rely on simulated data or proprietary over-the-air datasets with limited cross-layer features, failing to accurately represent the real state of a network and thus limiting their effectiveness in real-world scenarios. To address these challenges, we introduce JamShield, a dynamic jamming detection system trained on our own over-the-air dataset, which is publicly available. It utilizes hybrid feature selection to prioritize relevant features for accurate and efficient detection. Additionally, it includes an auto-classification module that dynamically adjusts the classification algorithm in real time based on current network conditions. Our experimental results demonstrate significant improvements in detection rate, precision, and recall, along with reduced false alarms and misdetections compared to state-of-the-art detection algorithms, making JamShield a robust and reliable solution for detecting jamming attacks in real-world wireless networks.

Lightweight Authenticated Task Offloading in 6G-Cloud Vehicular Twin Networks

Feb 05, 2025

Abstract:Task offloading management in 6G vehicular networks is crucial for maintaining network efficiency, particularly as vehicles generate substantial data. Integrating secure communication through authentication introduces additional computational and communication overhead, significantly impacting offloading efficiency and latency. This paper presents a unified framework incorporating lightweight Identity-Based Cryptographic (IBC) authentication into task offloading within cloud-based 6G Vehicular Twin Networks (VTNs). Utilizing Proximal Policy Optimization (PPO) in Deep Reinforcement Learning (DRL), our approach optimizes authenticated offloading decisions to minimize latency and enhance resource allocation. Performance evaluation under varying network sizes, task sizes, and data rates reveals that IBC authentication can reduce offloading efficiency by up to 50% due to the added overhead. In addition, increasing network size and task size can further reduce offloading efficiency by up to 91.7%. As a countermeasure, increasing the transmission data rate can improve the offloading performance by as much as 63%, even in the presence of authentication overhead. The code for the simulations and experiments detailed in this paper is available on GitHub for further reference and reproducibility [1].

Accurate AI-Driven Emergency Vehicle Location Tracking in Healthcare ITS Digital Twin

Feb 05, 2025

Abstract:Creating a Digital Twin (DT) for Healthcare Intelligent Transportation Systems (HITS) is a hot research trend focusing on enhancing HITS management, particularly in emergencies where ambulance vehicles must arrive at the crash scene on time and tracking their real-time location is crucial to the medical authorities. Despite the claim of real-time representation, a temporal misalignment persists between the physical and virtual domains, leading to discrepancies in the ambulance's location representation. This study proposes integrating AI predictive models, specifically Support Vector Regression (SVR) and Deep Neural Networks (DNN), within a constructed mock DT data pipeline framework to anticipate the medical vehicle's next location in the virtual world. These models align virtual representations with their physical counterparts, i.e., they offset the synchronization delay between the two worlds. Trained on a historical geospatial dataset, SVR and DNN exhibit exceptional prediction accuracy in MATLAB and Python environments. Through various testing scenarios, we visually demonstrate the efficacy of our methodology, showcasing SVR and DNN's key role in significantly reducing the observed gap within the HITS's DT. This approach enhances real-time synchronization in emergency HITS by approximately 88% to 93%.
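
The idea of predicting ahead to offset the synchronization delay can be illustrated with a minimal sketch. It does not use the paper's SVR or DNN models; constant-velocity extrapolation is swapped in as a simple stand-in, and the function name `predict_next_location`, the `(t, lat, lon)` sample layout, and the `delay_s` parameter are all assumptions made for illustration.

```python
def predict_next_location(track, delay_s):
    """Extrapolate the latest position forward by delay_s seconds.

    track: list of (t_seconds, lat, lon) samples, oldest first (assumed layout).
    Constant-velocity extrapolation is a stand-in here, not the paper's
    SVR/DNN predictors.
    """
    if len(track) < 2:
        raise ValueError("need at least two samples")
    (t0, lat0, lon0), (t1, lat1, lon1) = track[-2], track[-1]
    dt = t1 - t0
    if dt <= 0:
        raise ValueError("timestamps must be strictly increasing")
    # Per-second rate of change estimated from the last two samples.
    v_lat = (lat1 - lat0) / dt
    v_lon = (lon1 - lon0) / dt
    # Position the twin should show once the known delay has elapsed.
    return lat1 + v_lat * delay_s, lon1 + v_lon * delay_s
```

A learned regressor would replace the two-sample velocity estimate with a model fitted on historical trajectories, but the interface (recent samples in, position at `now + delay` out) would stay the same under these assumptions.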

AI-based traffic analysis in digital twin networks

Nov 01, 2024

Abstract:In today's networked world, Digital Twin Networks (DTNs) are revolutionizing how we understand and optimize physical networks. These networks, also known as 'Network Digital Twins (NDTs),' encompass many physical networks, from cellular and wireless to optical and satellite. They leverage computational power and AI capabilities to provide virtual representations, leading to highly refined recommendations for real-world network challenges. Within DTNs, tasks include network performance enhancement, latency optimization, energy efficiency, and more. To achieve these goals, DTNs utilize AI tools such as Machine Learning (ML), Deep Learning (DL), Reinforcement Learning (RL), Federated Learning (FL), and graph-based approaches. However, data quality, scalability, interpretability, and security challenges necessitate strategies prioritizing transparency, fairness, privacy, and accountability. This chapter delves into the world of AI-driven traffic analysis within DTNs. It explores DTNs' development efforts, tasks, AI models, and challenges while offering insights into how AI can enhance these dynamic networks. Through this journey, readers will gain a deeper understanding of the pivotal role AI plays in the ever-evolving landscape of networked systems.

Optimized Resource Allocation for Cloud-Native 6G Networks: Zero-Touch ML Models in Microservices-based VNF Deployments

Oct 09, 2024

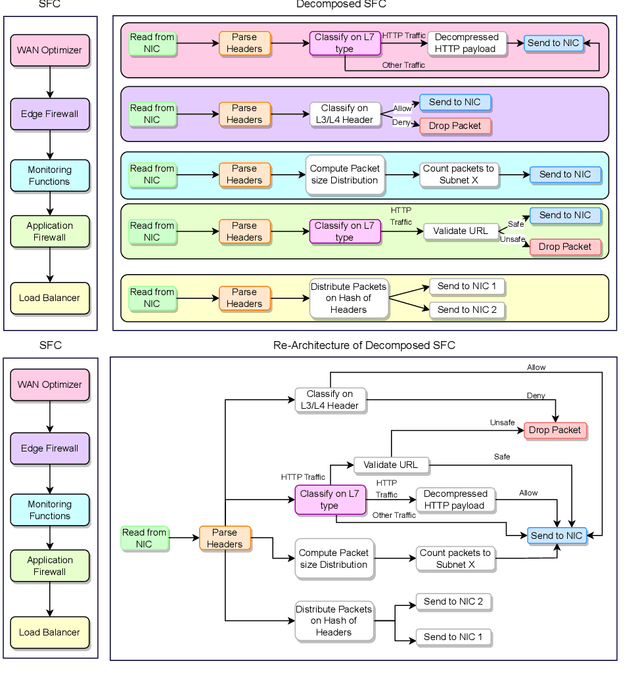

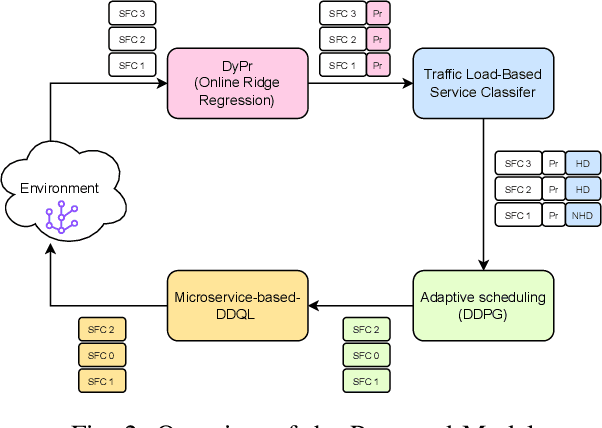

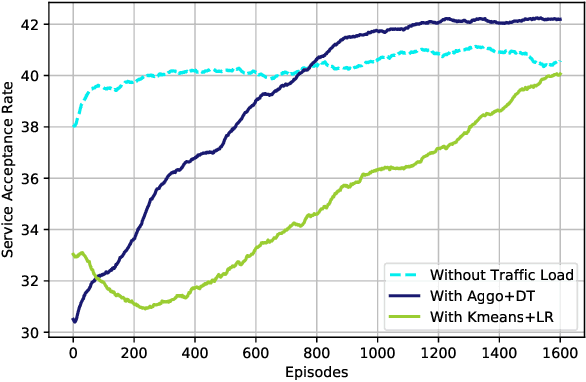

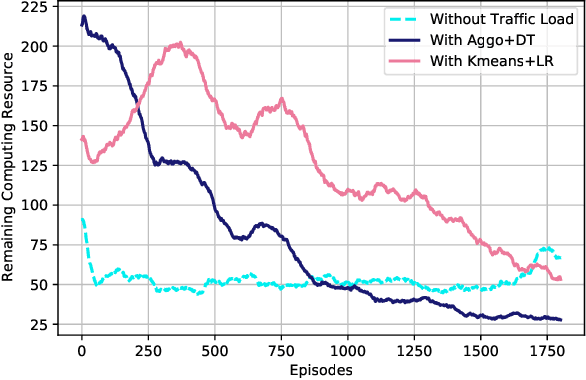

Abstract:6G, the next generation of mobile networks, is set to offer even higher data rates, ultra-reliability, and lower latency than 5G. New 6G services will increase the load and dynamism of the network. Network Function Virtualization (NFV) aids with this increased load and dynamism by eliminating hardware dependency. It aims to boost the flexibility and scalability of network deployment services by separating network functions from their specific proprietary forms so that they can run as virtual network functions (VNFs) on commodity hardware. It is essential to design an NFV orchestration and management framework to support these services. However, deploying bulky monolithic VNFs on the network is difficult, especially when underlying resources are scarce, resulting in ineffective resource management. To address this, microservices-based NFV approaches are proposed. In this approach, monolithic VNFs are decomposed into micro VNFs, increasing the likelihood of their successful placement and resulting in more efficient resource management. This article discusses the proposed framework for resource allocation for microservices-based services to provide end-to-end Quality of Service (QoS) using the Double Deep Q Learning (DDQL) approach. Furthermore, to enhance this resource allocation approach, we discuss and address two crucial sub-problems: the need for a dynamic priority technique and the presence of the low-priority starvation problem. Using the Deep Deterministic Policy Gradient (DDPG) model, an Adaptive Scheduling model is developed that effectively mitigates the starvation problem. Additionally, the impact of incorporating traffic load considerations into deployment and scheduling is thoroughly investigated.

Continuous Transfer Learning for UAV Communication-aware Trajectory Design

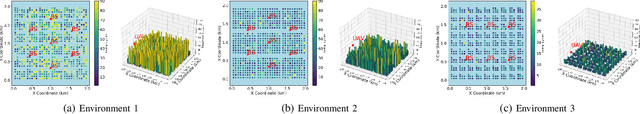

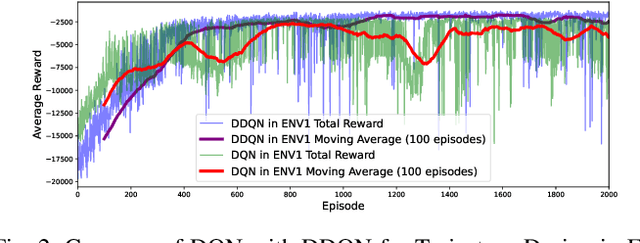

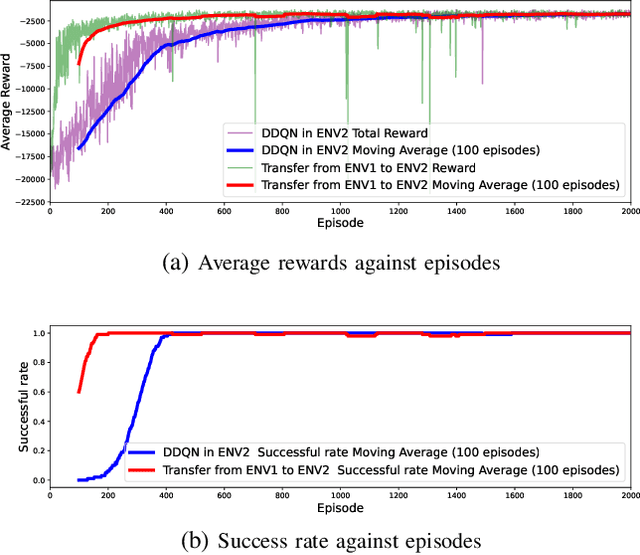

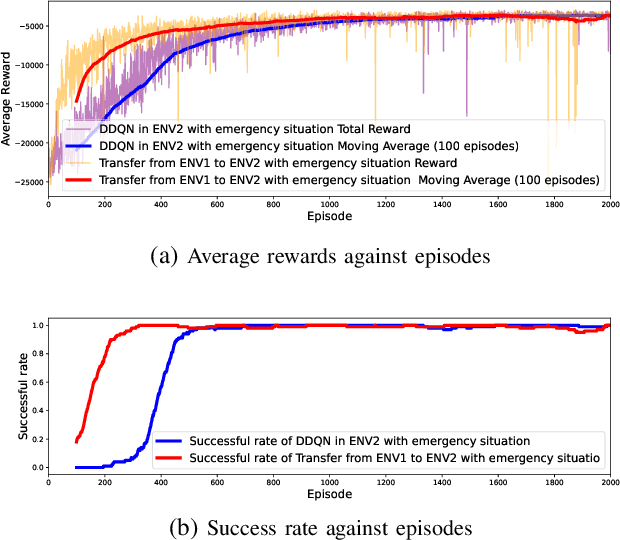

May 16, 2024

Abstract:Deep Reinforcement Learning (DRL) emerges as a prime solution for Unmanned Aerial Vehicle (UAV) trajectory planning, offering proficiency in navigating high-dimensional spaces, adaptability to dynamic environments, and the ability to make sequential decisions based on real-time feedback. Despite these advantages, the use of DRL for UAV trajectory planning requires significant retraining when the UAV is confronted with a new environment, resulting in wasted resources and time. Therefore, it is essential to develop techniques that can reduce the overhead of retraining DRL models, enabling them to adapt to constantly changing environments. This paper presents a novel method to reduce the need for extensive retraining using a double deep Q network (DDQN) model as a pretrained base, which is subsequently adapted to different urban environments through Continuous Transfer Learning (CTL). Our method involves transferring the learned model weights and adapting the learning parameters, including the learning and exploration rates, to suit each new environment's specific characteristics. The effectiveness of our approach is validated in three scenarios, each with different levels of similarity. CTL significantly improves learning speed and success rates compared to DDQN models initiated from scratch. In similar environments, Transfer Learning (TL) improved stability and accelerated convergence by 65%; in dissimilar settings, it facilitated 35% faster adaptation.
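
The two ingredients named in this abstract, a DDQN base and a transfer step that reuses weights while adapting the learning and exploration rates, can be sketched as follows. The Double DQN target is the standard one (the online network selects the next action, the target network evaluates it). The transfer helper is hypothetical: the `similarity` score, the linear scaling, and every default value are assumptions for illustration, not the paper's settings.

```python
import copy


def double_dqn_target(reward, done, q_online_next, q_target_next, gamma=0.99):
    """Double DQN target: the online network selects the next action,
    the target network evaluates it."""
    if done:
        return reward
    best_action = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    return reward + gamma * q_target_next[best_action]


def transfer_to_new_environment(pretrained_weights, similarity,
                                base_lr=1e-3, base_epsilon=1.0):
    """Hypothetical transfer step: copy the pretrained weights and lower the
    learning and exploration rates as the new environment gets more similar.

    similarity is an assumed score in [0, 1]; the linear scaling below is
    illustrative only.
    """
    if not 0.0 <= similarity <= 1.0:
        raise ValueError("similarity must be in [0, 1]")
    novelty = 1.0 - similarity
    return {
        "weights": copy.deepcopy(pretrained_weights),  # never mutate the base model
        "learning_rate": base_lr * (0.1 + 0.9 * novelty),
        "epsilon": base_epsilon * (0.05 + 0.95 * novelty),
    }
```

Under these assumptions, a near-identical environment starts with little exploration and small updates, while a dissimilar one explores more and learns faster from the transferred starting point.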

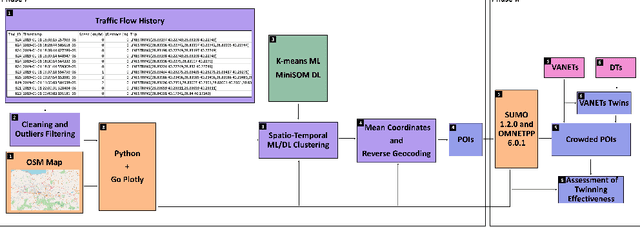

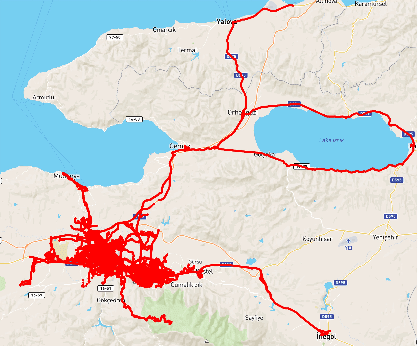

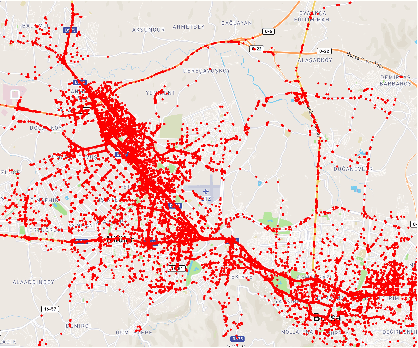

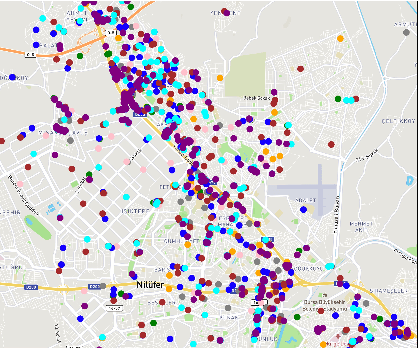

Does Twinning Vehicular Networks Enhance Their Performance in Dense Areas?

Feb 16, 2024

Abstract:This paper investigates the potential of Digital Twins (DTs) to enhance network performance in densely populated urban areas, specifically focusing on vehicular networks. The study comprises two phases. In Phase I, we utilize traffic data and AI clustering to identify critical locations, particularly in crowded urban areas with high accident rates. In Phase II, we evaluate the advantages of twinning vehicular networks through three deployment scenarios: edge-based twin, cloud-based twin, and hybrid-based twin. Our analysis demonstrates that twinning significantly reduces network delays, with virtual twins outperforming physical networks. Virtual twins maintain low delays even with increased vehicle density, such as 15.05 seconds for 300 vehicles. Moreover, they exhibit faster computational speeds, with cloud-based twins being 1.7 times faster than edge twins in certain scenarios. These findings provide insights for efficient vehicular communication and underscore the potential of virtual twins in enhancing vehicular networks in crowded areas while emphasizing the importance of considering real-world factors when making deployment decisions.
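
Phase I, clustering traffic data to find critical locations, can be illustrated with a small sketch. The abstract does not name the clustering algorithm, so plain k-means (Lloyd's algorithm) is assumed here; the function names, the 2-D point layout, and the caller-supplied initial centroids are likewise assumptions.

```python
import math


def assign(points, centroids):
    """Group each point with its nearest centroid (Euclidean distance)."""
    clusters = [[] for _ in centroids]
    for p in points:
        nearest = min(range(len(centroids)),
                      key=lambda i: math.dist(p, centroids[i]))
        clusters[nearest].append(p)
    return clusters


def kmeans(points, centroids, iterations=20):
    """Plain k-means on 2-D points, starting from caller-supplied centroids."""
    centroids = [tuple(c) for c in centroids]
    for _ in range(iterations):
        clusters = assign(points, centroids)
        # Move each centroid to the mean of its members; keep it if it has none.
        updated = [
            tuple(sum(axis) / len(members) for axis in zip(*members)) if members else old
            for old, members in zip(centroids, clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    return centroids, assign(points, centroids)
```

Given accident coordinates as `points`, the largest returned cluster would mark a candidate critical location; ranking clusters by size is one simple choice, not necessarily the paper's criterion.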

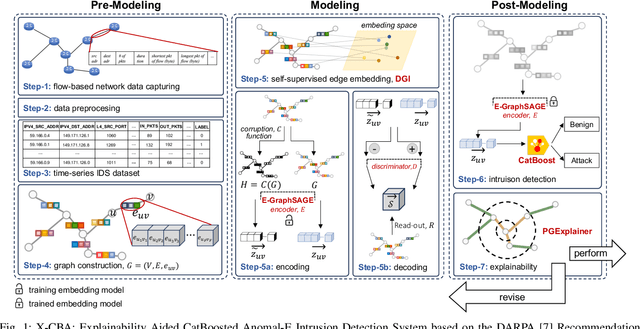

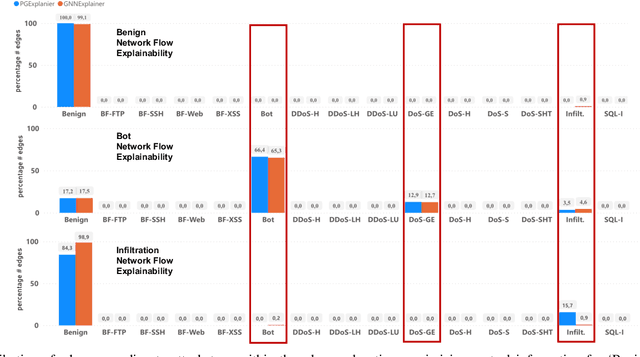

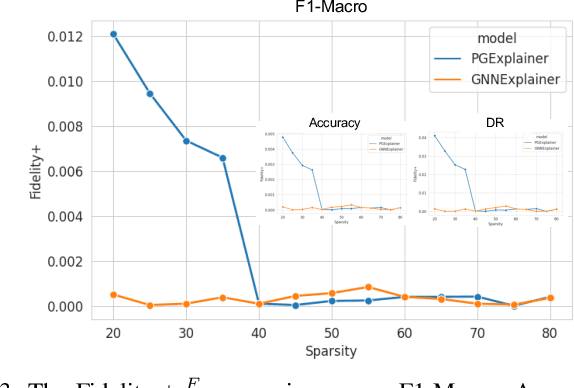

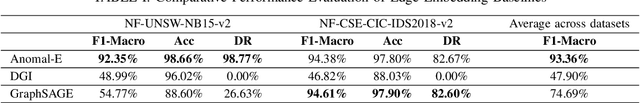

X-CBA: Explainability Aided CatBoosted Anomal-E for Intrusion Detection System

Feb 01, 2024

Abstract:The effectiveness of Intrusion Detection Systems (IDS) is critical in an era where cyber threats are becoming increasingly complex. Machine learning (ML) and deep learning (DL) models provide an efficient and accurate solution for identifying attacks and anomalies in computer networks. However, using ML and DL models in IDS has led to a trust deficit due to their non-transparent decision-making. This transparency gap in IDS research is significant, affecting confidence and accountability. To address this gap, this paper introduces a novel Explainable IDS approach, called X-CBA, that leverages the structural advantages of Graph Neural Networks (GNNs) to effectively process network traffic data, while also adapting a new Explainable AI (XAI) methodology. Unlike most GNN-based IDS that depend on labeled network traffic and node features, thereby overlooking critical packet-level information, our approach leverages a broader range of traffic data through network flows, including edge attributes, to improve detection capabilities and adapt to novel threats. Through empirical testing, we establish that our approach not only achieves a high accuracy of 99.47% in threat detection but also advances the field by providing clear, actionable explanations of its analytical outcomes. This research also aims to bridge the current gap and facilitate the broader integration of ML/DL technologies in cybersecurity defenses by offering a local and global explainability solution that is both precise and interpretable.

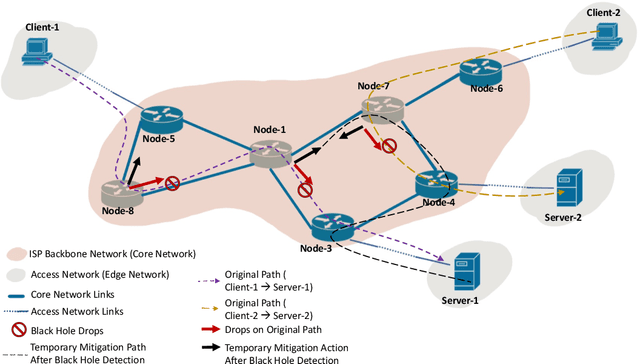

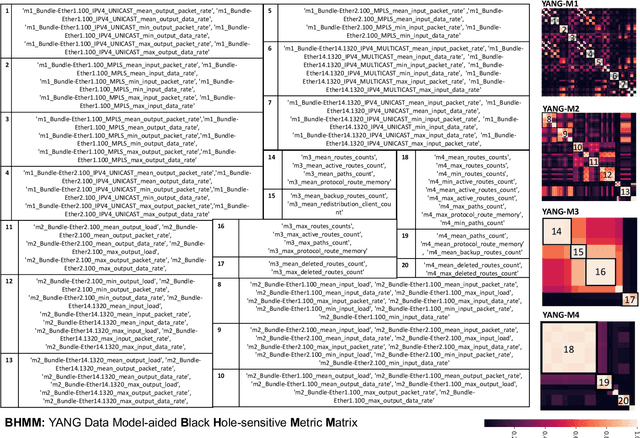

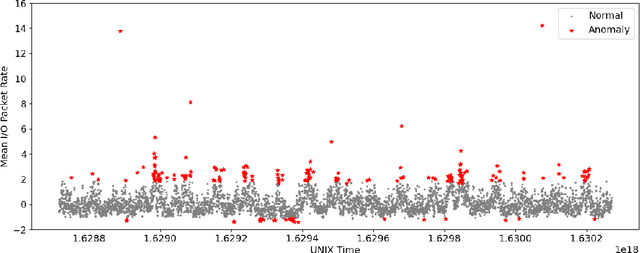

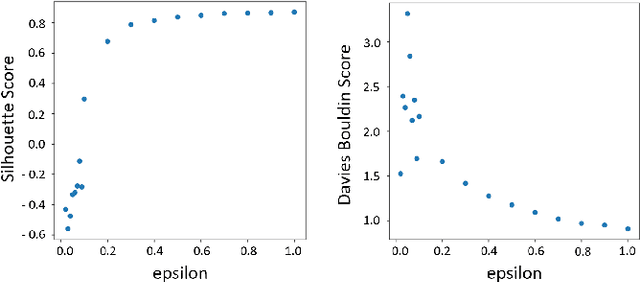

A YANG-aided Unified Strategy for Black Hole Detection for Backbone Networks

Feb 01, 2024

Abstract:Despite the crucial importance of addressing Black Hole failures in Internet backbone networks, effective detection strategies for these networks are lacking. This is largely because previous research has centered on Mobile Ad-hoc Networks (MANETs), which operate under entirely different dynamics, protocols, and topologies, making their findings not directly transferable to backbone networks. Furthermore, detecting Black Hole failures in backbone networks is particularly challenging. It requires a comprehensive range of network data due to the wide variety of conditions that need to be considered, making data collection and analysis far from straightforward. Addressing this gap, our study introduces a novel approach for Black Hole detection in backbone networks using specialized Yet Another Next Generation (YANG) data models with Black Hole-sensitive Metric Matrix (BHMM) analysis. This paper details our method of selecting and analyzing four YANG models relevant to Black Hole detection in ISP networks, focusing on routing protocols and ISP-specific configurations. Our BHMM approach derived from these models demonstrates a 10% improvement in detection accuracy and a 13% increase in packet delivery rate, highlighting the efficiency of our approach. Additionally, we evaluate the Machine Learning approach leveraged with BHMM analysis in two different network settings: a commercial ISP network and a scientific research-only network topology. This evaluation also demonstrates the practical applicability of our method, yielding significantly improved prediction outcomes in both environments.

AI in Energy Digital Twining: A Reinforcement Learning-based Adaptive Digital Twin Model for Green Cities

Jan 28, 2024

Abstract:Digital Twins (DT) have become crucial to achieving sustainable and effective smart urban solutions. However, current DT modelling techniques cannot support the dynamicity of these smart city environments. This is caused by the lack of right-time data capturing in traditional approaches, resulting in inaccurate modelling and high resource and energy consumption. To fill this gap, we explore spatiotemporal graphs and propose the Reinforcement Learning-based Adaptive Twining (RL-AT) mechanism with Deep Q Networks (DQN). By doing so, our study contributes to advancing Green Cities and showcases tangible benefits in accuracy, synchronisation, resource optimization, and energy efficiency. We find that spatiotemporal graphs offer consistent accuracy and 55% higher querying performance when implemented using graph databases. In addition, our model demonstrates right-time data capturing with 20% lower overhead and 25% lower energy consumption.
