Sumeyye Bas

Adaptive Intrusion Detection for Evolving RPL IoT Attacks Using Incremental Learning

Nov 14, 2025

Abstract: The routing protocol for low-power and lossy networks (RPL) has become the de facto routing standard for resource-constrained IoT systems, but its lightweight design exposes critical vulnerabilities to a wide range of routing-layer attacks such as hello flood, decreased rank, and version number manipulation. Traditional countermeasures, including protocol-level modifications and machine learning classifiers, can achieve high accuracy against known threats, yet they fail when confronted with novel or zero-day attacks unless fully retrained, an approach that is impractical for dynamic IoT environments. In this paper, we investigate incremental learning as a practical and adaptive strategy for intrusion detection in RPL-based networks. We systematically evaluate five model families, including ensemble models and deep learning models. Our analysis highlights that incremental learning not only restores detection performance on new attack classes but also mitigates catastrophic forgetting of previously learned threats, all while reducing training time compared to full retraining. By combining five diverse models with attack-specific analysis, forgetting behavior, and time efficiency, this study provides systematic evidence that incremental learning offers a scalable pathway to maintain resilient intrusion detection in evolving RPL-based IoT networks.

Data Augmentation in Graph Neural Networks: The Role of Generated Synthetic Graphs

Jul 20, 2024

Abstract: Graphs are crucial for representing interrelated data and aiding predictive modeling by capturing complex relationships. Achieving high-quality graph representation is important for identifying linked patterns, leading to improvements in Graph Neural Networks (GNNs) to better capture data structures. However, challenges such as data scarcity, high collection costs, and ethical concerns limit progress. As a result, generative models and data augmentation have become increasingly popular. This study explores using generated graphs for data augmentation, comparing the performance of combining generated graphs with real graphs, and examining the effect of different quantities of generated graphs on graph classification tasks. The experiments show that balancing scalability and quality requires different generators based on graph size. Our results introduce a new approach to graph data augmentation, ensuring consistent labels and enhancing classification performance.

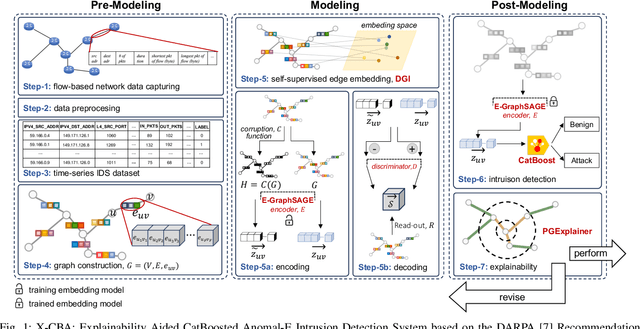

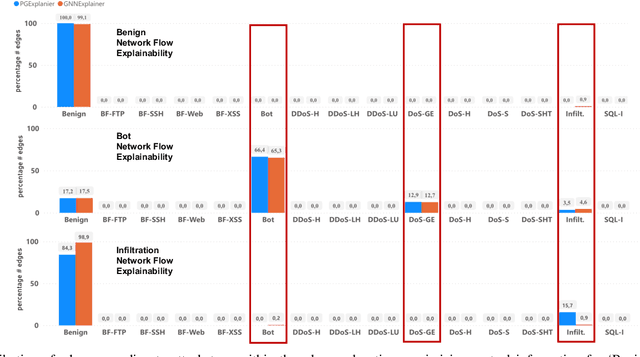

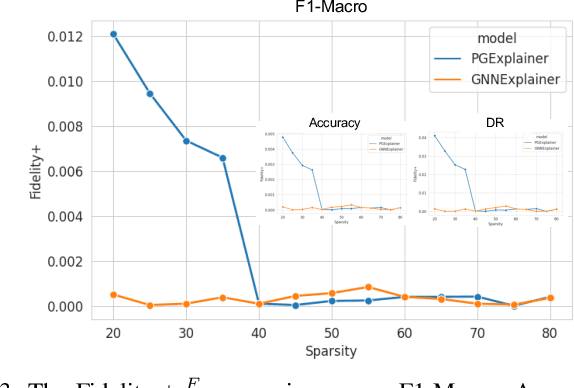

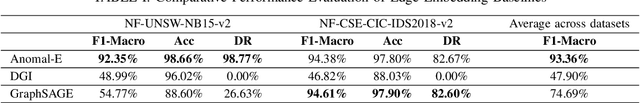

X-CBA: Explainability Aided CatBoosted Anomal-E for Intrusion Detection System

Feb 01, 2024

Abstract: The effectiveness of Intrusion Detection Systems (IDS) is critical in an era where cyber threats are becoming increasingly complex. Machine learning (ML) and deep learning (DL) models provide an efficient and accurate solution for identifying attacks and anomalies in computer networks. However, using ML and DL models in IDS has led to a trust deficit due to their non-transparent decision-making. This transparency gap in IDS research is significant, affecting confidence and accountability. To address this gap, this paper introduces a novel Explainable IDS approach, called X-CBA, that leverages the structural advantages of Graph Neural Networks (GNNs) to effectively process network traffic data, while also adapting a new Explainable AI (XAI) methodology. Unlike most GNN-based IDS that depend on labeled network traffic and node features, thereby overlooking critical packet-level information, our approach leverages a broader range of traffic data through network flows, including edge attributes, to improve detection capabilities and adapt to novel threats. Through empirical testing, we establish that our approach not only achieves a high accuracy of 99.47% in threat detection but also advances the field by providing clear, actionable explanations of its analytical outcomes. This research also aims to bridge the current gap and facilitate the broader integration of ML/DL technologies in cybersecurity defenses by offering a local and global explainability solution that is both precise and interpretable.
