Matthew Broadbent

AI in Energy Digital Twining: A Reinforcement Learning-based Adaptive Digital Twin Model for Green Cities

Jan 28, 2024

Abstract: Digital Twins (DT) have become crucial to achieve sustainable and effective smart urban solutions. However, current DT modelling techniques cannot support the dynamicity of these smart city environments. This is caused by the lack of right-time data capturing in traditional approaches, resulting in inaccurate modelling and high resource and energy consumption challenges. To fill this gap, we explore spatiotemporal graphs and propose the Reinforcement Learning-based Adaptive Twining (RL-AT) mechanism with Deep Q Networks (DQN). By doing so, our study contributes to advancing Green Cities and showcases tangible benefits in accuracy, synchronisation, resource optimization, and energy efficiency. As a result, we note the spatiotemporal graphs are able to offer a consistent accuracy and 55% higher querying performance when implemented using graph databases. In addition, our model demonstrates right-time data capturing with 20% lower overhead and 25% lower energy consumption.

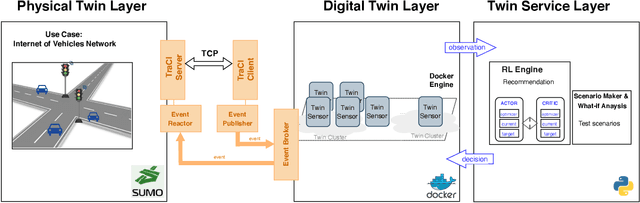

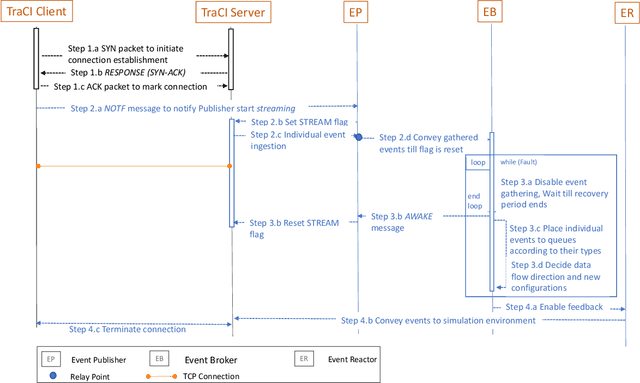

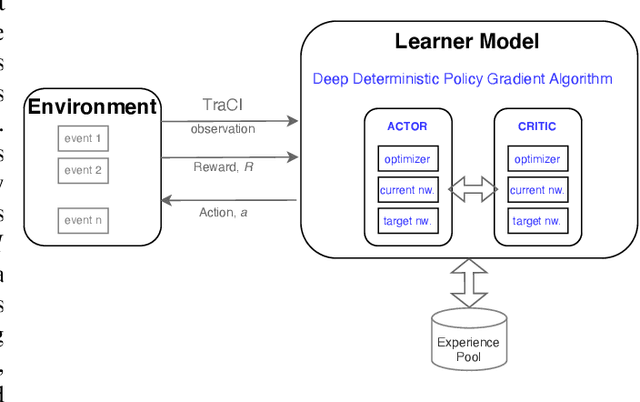

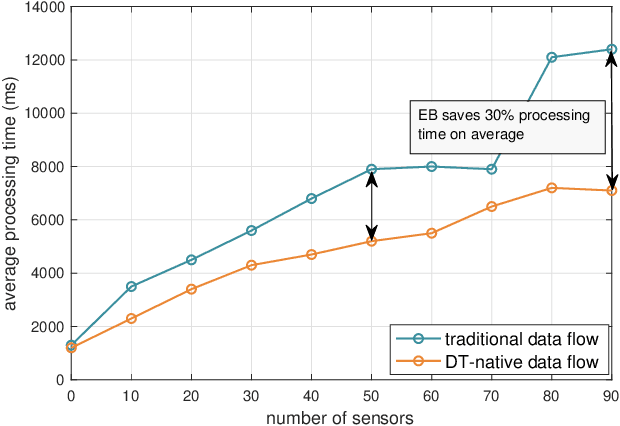

Digital Twin-Native AI-Driven Service Architecture for Industrial Networks

Nov 24, 2023

Abstract: The dramatic increase in connectivity demand results in an excessive number of Internet of Things (IoT) sensors. To meet the management needs of these large-scale networks, such as accurate monitoring and learning capabilities, Digital Twin (DT) is the key enabler. However, current attempts at DT implementations remain insufficient due to the perpetual connectivity requirements of IoT networks. Furthermore, sensor data streaming in IoT networks causes higher processing time than traditional methods. In addition, current intelligent mechanisms cannot perform well due to the spatiotemporal changes in the implemented IoT network scenario. To handle these challenges, we propose a DT-native AI-driven service architecture in support of the concept of IoT networks. Within the proposed DT-native architecture, we implement a TCP-based data flow pipeline and a Reinforcement Learning (RL)-based learner model. We apply the proposed architecture to one of the broad concepts of IoT networks, the Internet of Vehicles (IoV). We measure the efficiency of our proposed architecture and note ~30% processing time-saving thanks to the TCP-based data flow pipeline. Moreover, we test the performance of the learner model by applying several learning rate combinations for actor and critic networks and highlight the most successful model.

Contactless Human Activity Recognition using Deep Learning with Flexible and Scalable Software Define Radio

Apr 18, 2023

Abstract: Ambient computing is gaining popularity as a major technological advancement for the future. The modern era has witnessed a surge of advancement in healthcare systems, with viable radio frequency solutions proposed for remote and unobtrusive human activity recognition (HAR). Specifically, this study investigates the use of Wi-Fi channel state information (CSI) as a novel method of ambient sensing that can be employed as a contactless means of recognizing human activity in indoor environments. These methods avoid the additional costly hardware required for vision-based systems, which are privacy-intrusive, by (re)using Wi-Fi CSI for various safety and security applications. In an experiment using a Universal Software Radio Peripheral (USRP) to collect CSI samples, a subject performed six distinct activities, including no activity, standing, sitting, and leaning forward, across different areas of the room. Additional CSI samples were collected while the subject walked in two different directions. This study presents a Wi-Fi CSI-based HAR system that assesses and contrasts deep learning approaches, namely convolutional neural network (CNN), long short-term memory (LSTM), and hybrid (LSTM+CNN), employed for accurate activity recognition. The experimental results indicate that LSTM surpasses current models and, compared to the CNN and hybrid techniques, achieves an average accuracy of 95.3% in multi-activity classification. Future research needs to study the significance of resilience in diverse and dynamic environments to identify the activity of multiple users.
