Syed Ali Raza Zaidi

Vision-Language Models on the Edge for Real-Time Robotic Perception

Jan 21, 2026

Abstract: Vision-Language Models (VLMs) enable multimodal reasoning for robotic perception and interaction, but their deployment in real-world systems remains constrained by latency, limited onboard resources, and the privacy risks of cloud offloading. Edge intelligence within 6G, particularly Open RAN (ORAN) and Multi-access Edge Computing (MEC), offers a pathway to address these challenges by bringing computation closer to the data source. This work investigates the deployment of VLMs on ORAN/MEC infrastructure using the Unitree G1 humanoid robot as an embodied testbed. We design a WebRTC-based pipeline that streams multimodal data to an edge node and evaluate LLaMA-3.2-11B-Vision-Instruct deployed at the edge versus in the cloud under real-time conditions. Our results show that edge deployment preserves near-cloud accuracy while reducing end-to-end latency by 5%. We further evaluate Qwen2-VL-2B-Instruct, a compact model optimized for resource-constrained environments, which achieves sub-second responsiveness and cuts latency by more than half, at the cost of accuracy.

Agentic AI meets Neural Architecture Search: Proactive Traffic Prediction for AI-RAN

Oct 01, 2025

Abstract: Next-generation wireless networks require intelligent traffic prediction to enable autonomous resource management and handle diverse, dynamic service demands. The Open Radio Access Network (O-RAN) framework provides a promising foundation for embedding machine learning intelligence through its disaggregated architecture and programmable interfaces. This work applies a Neural Architecture Search (NAS)-based framework that dynamically selects and orchestrates efficient Long Short-Term Memory (LSTM) architectures for traffic prediction in O-RAN environments. Our approach leverages the O-RAN paradigm by separating architecture optimisation (via non-RT RIC rApps) from real-time inference (via near-RT RIC xApps), enabling adaptive model deployment based on traffic conditions and resource constraints. Experimental evaluation across six LSTM architectures demonstrates that lightweight models achieve R² ≈ 0.91 to 0.93 with high efficiency for regular traffic, while complex models reach near-perfect accuracy (R² = 0.989 to 0.996) during critical scenarios. Our NAS-based orchestration achieves a 70-75% reduction in computational complexity compared to static high-performance models, while maintaining high prediction accuracy when required, thereby enabling scalable deployment in real-world edge environments.
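The accuracy figures above are coefficients of determination, R² = 1 − SS_res / SS_tot, where SS_res is the squared forecast error and SS_tot is the squared error of always predicting the mean. A minimal sketch of the metric follows; the traffic series and both forecasts are made-up illustrative values, not data from the paper.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: R^2 = 1 - SS_res / SS_tot."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    mean = sum(y_true) / len(y_true)
    # Residual sum of squares: error left after the forecast.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: error of always predicting the mean.
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined for a constant series")
    return 1.0 - ss_res / ss_tot


# Hypothetical traffic-load samples and two made-up forecasts (not data from the paper).
observed = [10.0, 12.0, 15.0, 11.0, 9.0, 14.0]
coarse_forecast = [11.0, 11.5, 13.5, 12.0, 10.0, 13.0]
fine_forecast = [10.2, 11.9, 14.8, 11.1, 9.1, 13.9]
print(f"coarse R^2 = {r_squared(observed, coarse_forecast):.3f}")
print(f"fine   R^2 = {r_squared(observed, fine_forecast):.3f}")
```

An R² of 1 means a perfect forecast and 0 means no better than the series mean, which is why the gap between roughly 0.91 and 0.99 is meaningful when choosing between a cheap and an expensive predictor.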

An Explainable AI Framework for Dynamic Resource Management in Vehicular Network Slicing

Jun 13, 2025

Abstract: Effective resource management and network slicing are essential to meet the diverse service demands of vehicular networks, including Enhanced Mobile Broadband (eMBB) and Ultra-Reliable and Low-Latency Communications (URLLC). This paper introduces an Explainable Deep Reinforcement Learning (XRL) framework for dynamic network slicing and resource allocation in vehicular networks, built upon a near-real-time RAN intelligent controller. By integrating a feature-based approach that leverages Shapley values with an attention mechanism, we interpret and refine the decisions of our reinforcement learning agents, addressing key reliability challenges in vehicular communication systems. Simulation results demonstrate that our approach provides clear, real-time insights into the resource allocation process and achieves higher interpretability precision than a pure attention mechanism. Furthermore, Quality of Service (QoS) satisfaction increased from 78.0% to 80.13% for URLLC services and from 71.44% to 73.21% for eMBB services.
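Shapley values attribute a model's output to its input features by averaging each feature's marginal contribution over all coalitions of the other features. The abstract does not say which Shapley variant or approximation the framework uses, so the sketch below shows only the textbook exact computation. The agent score, feature names, state values, and the "replace absent features with a baseline" value function are all hypothetical choices for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, players):
    """Exact Shapley values by enumerating every coalition (cost grows as 2^n)."""
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for size in range(n):
            # Weight |S|! (n - |S| - 1)! / n! for coalitions S that exclude i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                # Marginal contribution of feature i when added to coalition S.
                total += weight * (value_fn(s | {i}) - value_fn(s))
        phi[i] = total
    return phi


# Hypothetical slicing-agent score with one interaction term (not the paper's model).
state = {"urllc_queue": 1.0, "embb_queue": 2.0, "channel_quality": 4.0}
baseline = {name: 0.0 for name in state}


def agent_score(x):
    return 2.0 * x["urllc_queue"] + x["embb_queue"] + 0.5 * x["urllc_queue"] * x["channel_quality"]


def coalition_value(present):
    # Features outside the coalition are replaced by their baseline value.
    x = {name: (state[name] if name in present else baseline[name]) for name in state}
    return agent_score(x)


phi = shapley_values(coalition_value, list(state))
print(phi)
```

Here the interaction term is split evenly between `urllc_queue` and `channel_quality`, and the attributions sum to the score difference between the full state and the baseline (the efficiency property). Exact enumeration is only practical for a handful of features; larger inputs usually call for sampling-based approximations.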

From Connectivity to Autonomy: The Dawn of Self-Evolving Communication Systems

May 29, 2025

Abstract: This paper envisions 6G as a self-evolving telecom ecosystem, where AI-driven intelligence enables dynamic adaptation beyond static connectivity. We explore the key enablers of autonomous communication systems, spanning reconfigurable infrastructure, adaptive middleware, and intelligent network functions, alongside multi-agent collaboration for distributed decision-making. We examine how these methodologies align with emerging industrial IoT frameworks, ensuring seamless integration within digital manufacturing processes. Our findings emphasize the potential for improved real-time decision-making, higher efficiency, and lower latency in networked control systems. The discussion addresses ethical challenges, research directions, and standardization efforts, concluding with a technology stack roadmap to guide future developments. By leveraging state-of-the-art 6G network management techniques, this research contributes to the next generation of intelligent automation solutions, bridging the gap between theoretical advancements and real-world industrial applications.

Generative AI on the Edge: Architecture and Performance Evaluation

Nov 18, 2024

Abstract: 6G's AI-native vision of embedding advanced intelligence in the network while bringing it closer to the user requires a systematic evaluation of Generative AI (GenAI) models on edge devices. Rapidly emerging solutions based on Open RAN (ORAN) and Network-in-a-Box strongly advocate the use of low-cost, off-the-shelf components for simpler and more efficient deployment, e.g., in provisioning rural connectivity. In this context, the conceptual architecture, hardware testbeds, and precise performance quantification of Large Language Models (LLMs) on off-the-shelf edge devices remain largely unexplored. This research investigates computationally demanding LLM inference on a single commodity Raspberry Pi serving as an edge testbed for ORAN. We investigate various LLMs, including small, medium, and large models, on a Raspberry Pi 5 cluster using a lightweight Kubernetes distribution (K3s) with a modular prompting implementation. We study its feasibility and limitations by analyzing throughput, latency, accuracy, and efficiency. Our findings indicate that CPU-only deployment of lightweight models, such as Yi, Phi, and Llama3, can effectively support edge applications, achieving a generation throughput of 5 to 12 tokens per second with less than 50% CPU and RAM usage. We conclude that GenAI on the edge offers localized inference in remote or bandwidth-constrained environments in 6G networks without reliance on cloud infrastructure.

Nearest Neighbor Methods and Their Applications in Design of 5G & Beyond Wireless Networks

Jul 16, 2021

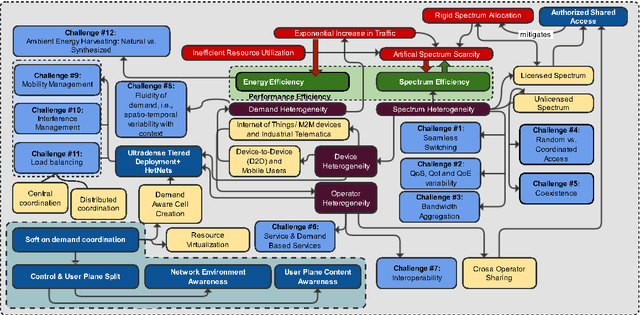

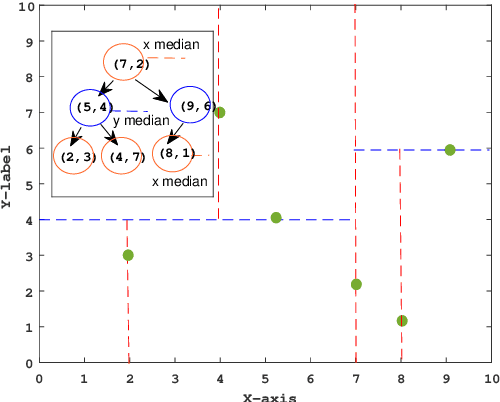

Abstract: In this paper, we present an overview of nearest neighbor (NN) methods, which are frequently employed to solve classification problems using supervised learning. The article concisely introduces the theoretical background and the algorithmic and implementation aspects, along with key applications. From an application standpoint, it explores challenges in 5G and beyond wireless networks that can be addressed using NN classification techniques.
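The core of NN classification is simple: find the k training samples closest to a query and take a majority vote over their labels. A minimal k-NN sketch with Euclidean distance follows. The two-feature LOS/NLOS link data are hypothetical, already-normalized values chosen for illustration; they do not come from the article.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Label `query` by majority vote of its k nearest training points (Euclidean distance)."""
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and len(train)")
    # Sort training samples by distance to the query and keep the k closest.
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    # most_common orders equal counts by first insertion, so ties go to the
    # label whose first vote came from the closest neighbor.
    return votes.most_common(1)[0][0]


# Hypothetical normalized features (e.g. received power, delay spread) per link.
train = [
    ((0.90, 0.10), "LOS"), ((0.80, 0.20), "LOS"), ((0.85, 0.15), "LOS"),
    ((0.20, 0.80), "NLOS"), ((0.30, 0.90), "NLOS"), ((0.25, 0.70), "NLOS"),
]
print(knn_predict(train, (0.7, 0.3)))
print(knn_predict(train, (0.3, 0.75)))
```

Because distances mix all features, inputs on very different scales (dBm versus nanoseconds, say) should be normalized first; sorting the whole training set is fine for a sketch, while larger datasets typically use spatial indexes such as k-d trees.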

Fine Timing and Frequency Synchronization for MIMO-OFDM: An Extreme Learning Approach

Jul 17, 2020

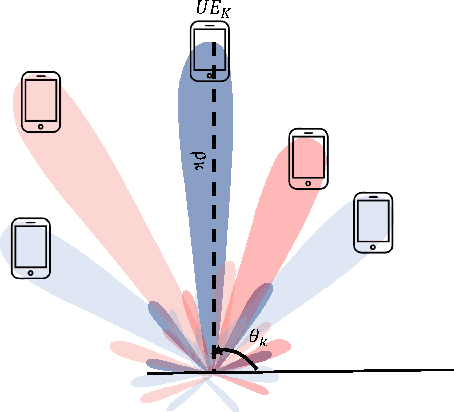

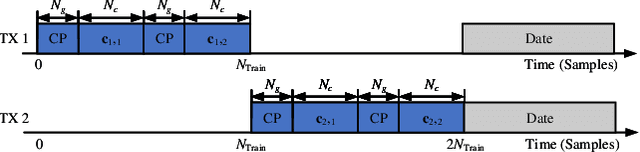

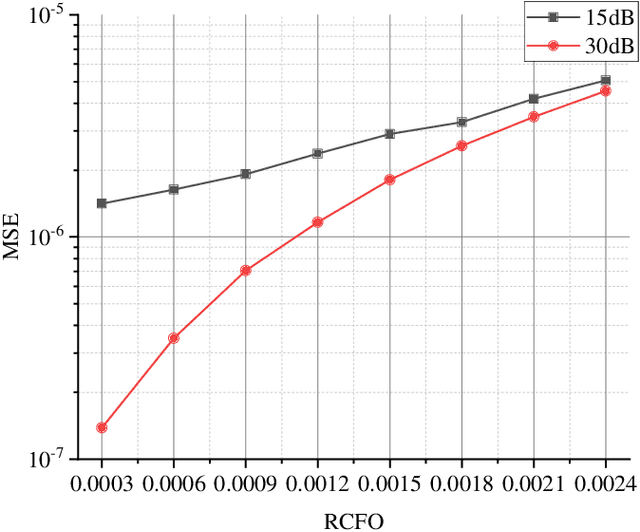

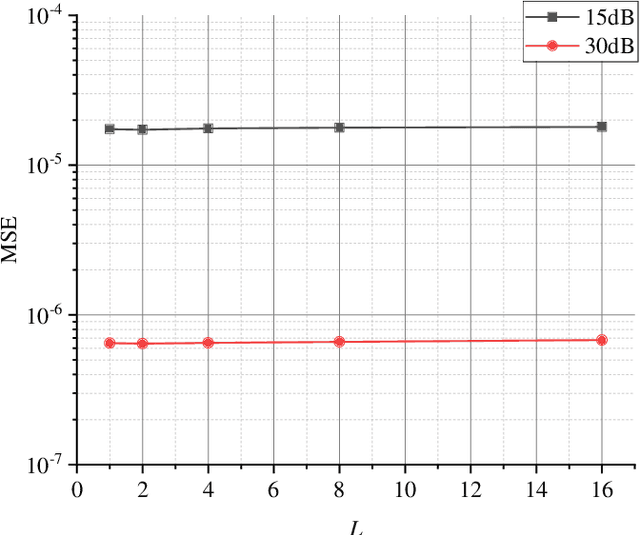

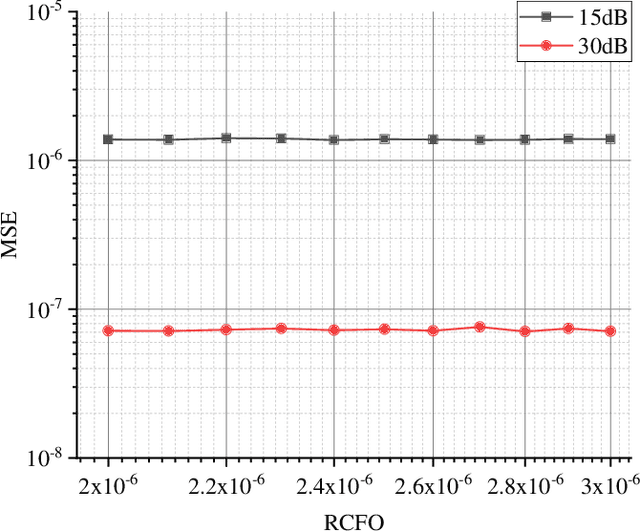

Abstract: Multiple-input multiple-output orthogonal frequency-division multiplexing (MIMO-OFDM) is a key technology component in the evolution towards next-generation communication, in which the accuracy of timing and frequency synchronization significantly impacts overall system performance. In this paper, we propose a novel scheme leveraging the extreme learning machine (ELM) to achieve high-precision timing and frequency synchronization. Specifically, two ELMs are incorporated into a traditional MIMO-OFDM system to estimate both the residual symbol timing offset (RSTO) and the residual carrier frequency offset (RCFO). Simulation results show that the ELM-based synchronization scheme outperforms the traditional method under both additive white Gaussian noise (AWGN) and frequency-selective fading channels. Finally, the proposed method is robust with respect to the choice of channel parameters (e.g., number of paths) and in terms of generalization ability from a machine learning standpoint.
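An ELM is a single-hidden-layer network whose input weights and biases are drawn at random and frozen; only the output weights are learned, in closed form by least squares. The abstract does not describe the ELM inputs used for RSTO/RCFO estimation, so the sketch below fits a toy one-dimensional function instead. The weight range, hidden-layer size, and the small ridge term (a common regularized variant of the pseudo-inverse solution) are assumptions for illustration.

```python
import math
import random


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting (a is n x n)."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


class ELM:
    """Single-hidden-layer extreme learning machine for scalar regression."""

    def __init__(self, n_inputs, n_hidden, ridge=1e-6, seed=0):
        rng = random.Random(seed)
        # Input weights and biases are drawn once at random and never trained.
        self.w = [[rng.uniform(-4, 4) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b = [rng.uniform(-4, 4) for _ in range(n_hidden)]
        self.ridge = ridge
        self.beta = None

    def _hidden(self, x):
        # Sigmoid activations of the random hidden layer.
        return [1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))
                for w, b in zip(self.w, self.b)]

    def fit(self, xs, ys):
        h = [self._hidden(x) for x in xs]
        n_hidden = len(self.b)
        # Only the output weights are learned: (H^T H + ridge * I) beta = H^T y.
        hth = [[sum(row[i] * row[j] for row in h) + (self.ridge if i == j else 0.0)
                for j in range(n_hidden)] for i in range(n_hidden)]
        hty = [sum(row[i] * y for row, y in zip(h, ys)) for i in range(n_hidden)]
        self.beta = solve(hth, hty)
        return self

    def predict(self, x):
        return sum(bi * hi for bi, hi in zip(self.beta, self._hidden(x)))


# Toy target: one period of a sine wave (a stand-in, not synchronization data).
xs = [[-1.0 + 2.0 * i / 40] for i in range(41)]
ys = [math.sin(math.pi * x[0]) for x in xs]
model = ELM(n_inputs=1, n_hidden=30, seed=1).fit(xs, ys)
mse = sum((model.predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
print(f"training MSE = {mse:.2e}")
```

Training reduces to one linear solve, with no iterative backpropagation, which is the usual argument for ELMs in latency-sensitive receiver blocks.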
