Guanliang Chen

Does the Prompt-based Large Language Model Recognize Students' Demographics and Introduce Bias in Essay Scoring?

Apr 30, 2025

Abstract:Large Language Models (LLMs) are widely used in Automated Essay Scoring (AES) due to their ability to capture semantic meaning. Traditional fine-tuning approaches required technical expertise, limiting accessibility for educators with limited technical backgrounds. However, prompt-based tools like ChatGPT have made AES more accessible, enabling educators to obtain machine-generated scores using natural-language prompts (i.e., the prompt-based paradigm). Despite advancements, prior studies have shown bias in fine-tuned LLMs, particularly against disadvantaged groups. It remains unclear whether such biases persist or are amplified in the prompt-based paradigm with cutting-edge tools. Since such biases are believed to stem from the demographic information embedded in pre-trained models (i.e., the ability of LLMs' text embeddings to predict demographic attributes), this study explores the relationship between the model's predictive power of students' demographic attributes based on their written works and its predictive bias in the scoring task in the prompt-based paradigm. Using a publicly available dataset of over 25,000 students' argumentative essays, we designed prompts to elicit demographic inferences (i.e., gender, first-language background) from GPT-4o and assessed fairness in automated scoring. Then we conducted multivariate regression analysis to explore the impact of the model's ability to predict demographics on its scoring outcomes. Our findings revealed that (i) prompt-based LLMs can somewhat infer students' demographics, particularly their first-language backgrounds, from their essays; (ii) scoring biases are more pronounced when the LLM correctly predicts students' first-language background than when it does not; and (iii) scoring error for non-native English speakers increases when the LLM correctly identifies them as non-native.

Fair Knowledge Tracing in Second Language Acquisition

Dec 23, 2024

Abstract:In second-language acquisition, predictive modeling aids educators in implementing diverse teaching strategies, attracting significant research attention. However, while model accuracy is widely explored, model fairness remains under-examined. Model fairness ensures equitable treatment of groups, preventing unintentional biases based on attributes such as gender, ethnicity, or economic background. A fair model should produce impartial outcomes that do not systematically disadvantage any group. This study evaluates the fairness of two predictive models using the Duolingo dataset's en\_es (English learners speaking Spanish), es\_en (Spanish learners speaking English), and fr\_en (French learners speaking English) tracks. We analyze: 1. Algorithmic fairness across platforms (iOS, Android, Web). 2. Algorithmic fairness between developed and developing countries. Key findings include: 1. Deep learning outperforms machine learning in second-language knowledge tracing due to improved accuracy and fairness. 2. Both models favor mobile users over non-mobile users. 3. Machine learning exhibits stronger bias against developing countries compared to deep learning. 4. Deep learning strikes a better balance of fairness and accuracy in the en\_es and es\_en tracks, while machine learning is more suitable for fr\_en. This study highlights the importance of addressing fairness in predictive models to ensure equitable educational strategies across platforms and regions.

Modifying AI, Enhancing Essays: How Active Engagement with Generative AI Boosts Writing Quality

Dec 10, 2024

Abstract:Students are increasingly relying on Generative AI (GAI) to support their writing, a key pedagogical practice in education. In GAI-assisted writing, students can delegate core cognitive tasks (e.g., generating ideas and turning them into sentences) to GAI while still producing high-quality essays. This creates new challenges for teachers in assessing and supporting student learning, as they often lack insight into whether students are engaging in meaningful cognitive processes during writing or how much of the essay's quality can be attributed to those processes. This study aimed to help teachers better assess and support student learning in GAI-assisted writing by examining how different writing behaviors, especially those indicative of meaningful learning versus those that are not, impact essay quality. Using a dataset of 1,445 GAI-assisted writing sessions, we applied the cutting-edge method, X-Learner, to quantify the causal impact of three GAI-assisted writing behavioral patterns (i.e., seeking suggestions but not accepting them, seeking suggestions and accepting them as they are, and seeking suggestions and accepting them with modification) on four measures of essay quality (i.e., lexical sophistication, syntactic complexity, text cohesion, and linguistic bias). Our analysis showed that writers who frequently modified GAI-generated text (suggesting active engagement in higher-order cognitive processes) consistently improved the quality of their essays in terms of lexical sophistication, syntactic complexity, and text cohesion. In contrast, those who often accepted GAI-generated text without changes, primarily engaging in lower-order processes, saw a decrease in essay quality. Additionally, while human writers tend to introduce linguistic bias when writing independently, incorporating GAI-generated text, even without modification, can help mitigate this bias.

Towards Detecting AI-Generated Text within Human-AI Collaborative Hybrid Texts

Mar 06, 2024

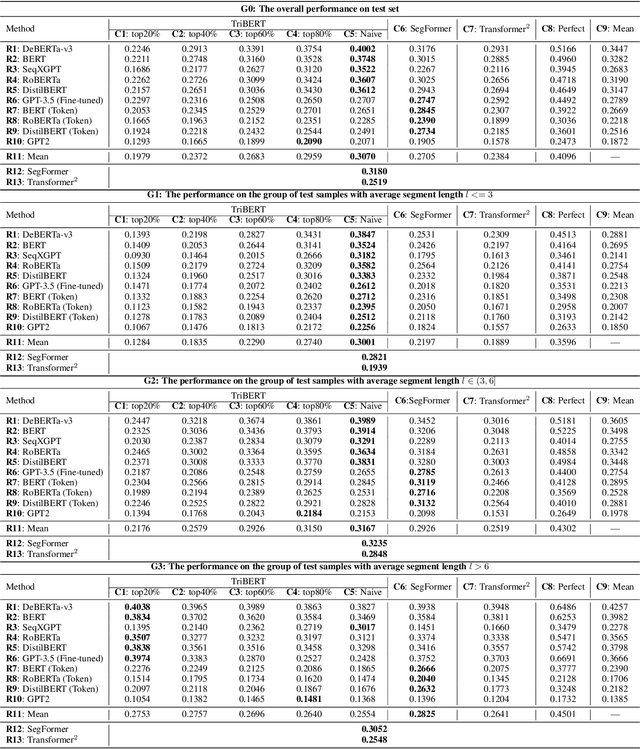

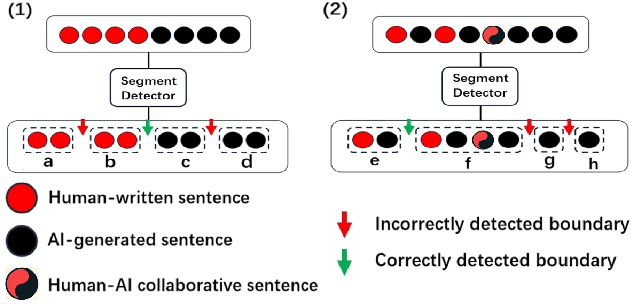

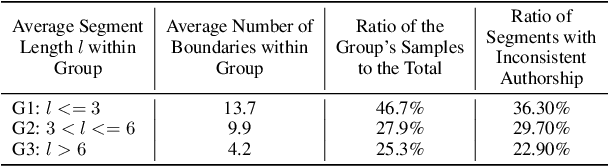

Abstract:This study explores the challenge of sentence-level AI-generated text detection within human-AI collaborative hybrid texts. Existing studies of AI-generated text detection for hybrid texts often rely on synthetic datasets. These typically involve hybrid texts with a limited number of boundaries. We contend that studies of detecting AI-generated content within hybrid texts should cover different types of hybrid texts generated in realistic settings to better inform real-world applications. Therefore, our study utilizes the CoAuthor dataset, which includes diverse, realistic hybrid texts generated through the collaboration between human writers and an intelligent writing system in multi-turn interactions. We adopt a two-step, segmentation-based pipeline: (i) detect segments within a given hybrid text where each segment contains sentences of consistent authorship, and (ii) classify the authorship of each identified segment. Our empirical findings highlight (1) detecting AI-generated sentences in hybrid texts is overall a challenging task because (1.1) human writers' selecting and even editing AI-generated sentences based on personal preferences adds difficulty in identifying the authorship of segments; (1.2) the frequent change of authorship between neighboring sentences within the hybrid text creates difficulties for segment detectors in identifying authorship-consistent segments; (1.3) the short length of text segments within hybrid texts provides limited stylistic cues for reliable authorship determination; (2) before embarking on the detection process, it is beneficial to assess the average length of segments within the hybrid text. This assessment aids in deciding whether (2.1) to employ a text segmentation-based strategy for hybrid texts with longer segments, or (2.2) to adopt a direct sentence-by-sentence classification strategy for those with shorter segments.

Unveiling the Tapestry of Automated Essay Scoring: A Comprehensive Investigation of Accuracy, Fairness, and Generalizability

Jan 11, 2024

Abstract:Automatic Essay Scoring (AES) is a well-established educational pursuit that employs machine learning to evaluate student-authored essays. While much effort has been made in this area, current research primarily focuses on either (i) boosting the predictive accuracy of an AES model for a specific prompt (i.e., developing prompt-specific models), which often heavily relies on the use of the labeled data from the same target prompt; or (ii) assessing the applicability of AES models developed on non-target prompts to the intended target prompt (i.e., developing the AES models in a cross-prompt setting). Given the inherent bias in machine learning and its potential impact on marginalized groups, it is imperative to investigate whether such bias exists in current AES methods and, if identified, how it intervenes with an AES model's accuracy and generalizability. Thus, our study aimed to uncover the intricate relationship between an AES model's accuracy, fairness, and generalizability, contributing practical insights for developing effective AES models in real-world education. To this end, we meticulously selected nine prominent AES methods and evaluated their performance using seven metrics on an open-sourced dataset, which contains over 25,000 essays and various demographic information about students such as gender, English language learner status, and economic status. Through extensive evaluations, we demonstrated that: (1) prompt-specific models tend to outperform their cross-prompt counterparts in terms of predictive accuracy; (2) prompt-specific models frequently exhibit a greater bias towards students of different economic statuses compared to cross-prompt models; (3) in the pursuit of generalizability, traditional machine learning models coupled with carefully engineered features hold greater potential for achieving both high accuracy and fairness than complex neural network models.

Towards Automatic Boundary Detection for Human-AI Collaborative Hybrid Essay in Education

Aug 16, 2023

Abstract:The recent large language models (LLMs), e.g., ChatGPT, have been able to generate human-like and fluent responses when provided with specific instructions. While admitting the convenience brought by technological advancement, educators also have concerns that students might leverage LLMs to complete their writing assignments and pass them off as their original work. Although many AI content detection studies have been conducted as a result of such concerns, most of these prior studies modeled AI content detection as a classification problem, assuming that a text is either entirely human-written or entirely AI-generated. In this study, we investigated AI content detection in a rarely explored yet realistic setting where the text to be detected is collaboratively written by human and generative LLMs (i.e., hybrid text). We first formalized the detection task as identifying the transition points between human-written content and AI-generated content from a given hybrid text (boundary detection). Then we proposed a two-step approach where we (1) separated AI-generated content from human-written content during the encoder training process; and (2) calculated the distances between every two adjacent prototypes and assumed that the boundaries exist between the two adjacent prototypes that have the furthest distance from each other. Through extensive experiments, we observed the following main findings: (1) the proposed approach consistently outperformed the baseline methods across different experiment settings; (2) the encoder training process can significantly boost the performance of the proposed approach; (3) when detecting boundaries for single-boundary hybrid essays, the proposed approach could be enhanced by adopting a relatively large prototype size, leading to a 22% improvement in the In-Domain evaluation and an 18% improvement in the Out-of-Domain evaluation.
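The second step of the approach described in this abstract (comparing adjacent prototypes and placing the boundary where they are furthest apart) can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration: the abstract does not define how prototypes are built, so the sketch takes a prototype to be the mean of up to `prototype_size` neighbouring sentence embeddings, uses Euclidean distance, and handles only the single-boundary case. The function names and the toy 2-D embeddings are hypothetical and are not taken from the paper.

```python
import math


def mean_vector(vectors):
    """Average a non-empty list of equal-length vectors (the assumed 'prototype')."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def euclidean(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def detect_boundary(sentence_embeddings, prototype_size=2):
    """Return index i such that the boundary lies between sentences i-1 and i.

    For every candidate position, build one prototype from the sentences just
    before it and one from the sentences just after it, then keep the position
    whose two prototypes are furthest apart.
    """
    best_index, best_distance = None, -1.0
    for i in range(1, len(sentence_embeddings)):
        left = sentence_embeddings[max(0, i - prototype_size):i]
        right = sentence_embeddings[i:i + prototype_size]
        distance = euclidean(mean_vector(left), mean_vector(right))
        if distance > best_distance:
            best_index, best_distance = i, distance
    return best_index


# Toy embeddings: the first three sentences cluster near (0, 0),
# the last three near (1, 1), so the boundary should fall before index 3.
toy_embeddings = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
                  [1.0, 1.0], [1.1, 1.0], [1.0, 0.9]]
print(detect_boundary(toy_embeddings, prototype_size=2))
```

In the paper the embeddings come from an encoder trained to separate AI-generated from human-written content; the toy vectors above simply stand in for that output.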

Robust Educational Dialogue Act Classifiers with Low-Resource and Imbalanced Datasets

Apr 15, 2023

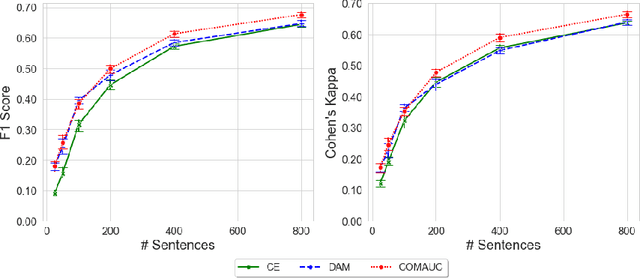

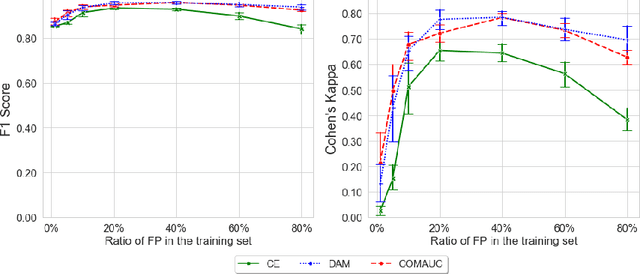

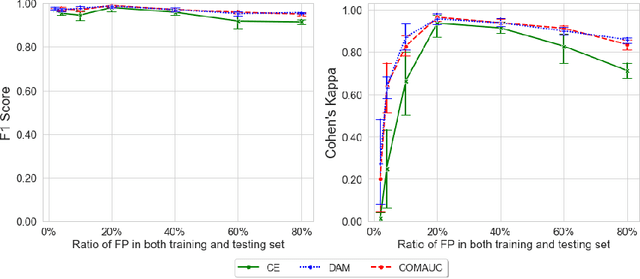

Abstract:Dialogue acts (DAs) can represent conversational actions of tutors or students that take place during tutoring dialogues. Automating the identification of DAs in tutoring dialogues is significant to the design of dialogue-based intelligent tutoring systems. Many prior studies employ machine learning models to classify DAs in tutoring dialogues and invest much effort to optimize the classification accuracy by using limited amounts of training data (i.e., low-resource data scenario). However, beyond the classification accuracy, the robustness of the classifier is also important, which can reflect the capability of the classifier on learning the patterns from different class distributions. We note that many prior studies on classifying educational DAs employ cross entropy (CE) loss to optimize DA classifiers on low-resource data with imbalanced DA distribution. The DA classifiers in these studies tend to prioritize accuracy on the majority class at the expense of the minority class which might not be robust to the data with imbalanced ratios of different DA classes. To optimize the robustness of classifiers on imbalanced class distributions, we propose to optimize the performance of the DA classifier by maximizing the area under the ROC curve (AUC) score (i.e., AUC maximization). Through extensive experiments, our study provides evidence that (i) by maximizing AUC in the training process, the DA classifier achieves significant performance improvement compared to the CE approach under low-resource data, and (ii) AUC maximization approaches can improve the robustness of the DA classifier under different class imbalance ratios.
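To make the motivation for AUC concrete, the sketch below computes AUC in its pairwise-ranking form (the fraction of positive/negative pairs in which the positive example receives the higher score) and contrasts it with accuracy on a small imbalanced toy set. This illustrates the metric only; it is not the paper's training procedure, which optimizes AUC during training. The function names and the toy labels and scores are hypothetical.

```python
def pairwise_auc(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def accuracy(labels, scores, threshold=0.5):
    """Share of examples whose thresholded score matches the label."""
    predictions = [1 if s >= threshold else 0 for s in scores]
    return sum(int(p == y) for p, y in zip(predictions, labels)) / len(labels)


# Toy imbalanced data: 8 majority-class (0) and 2 minority-class (1) examples.
# Every score is below 0.5, so the classifier always predicts the majority class.
toy_labels = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
toy_scores = [0.1, 0.2, 0.3, 0.4, 0.1, 0.2, 0.3, 0.4, 0.35, 0.15]

print(accuracy(toy_labels, toy_scores))      # high, driven by the majority class
print(pairwise_auc(toy_labels, toy_scores))  # chance level: no real ranking ability
```

On this toy set accuracy is 0.8 while AUC is 0.5, which is the kind of majority-class masking that the abstract attributes to accuracy-oriented training on imbalanced data.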

Does Informativeness Matter? Active Learning for Educational Dialogue Act Classification

Apr 12, 2023

Abstract:Dialogue Acts (DAs) can be used to explain what expert tutors do and what students know during the tutoring process. Most empirical studies adopt the random sampling method to obtain sentence samples for manual annotation of DAs, which are then used to train DA classifiers. However, these studies have paid little attention to sample informativeness, which can reflect the information quantity of the selected samples and inform the extent to which a classifier can learn patterns. Notably, the informativeness level may vary among the samples and the classifier might only need a small amount of low informative samples to learn the patterns. Random sampling may overlook sample informativeness, which consumes human labelling costs and contributes less to training the classifiers. As an alternative, researchers suggest employing statistical sampling methods of Active Learning (AL) to identify the informative samples for training the classifiers. However, the use of AL methods in educational DA classification tasks is under-explored. In this paper, we examine the informativeness of annotated sentence samples. Then, the study investigates how the AL methods can select informative samples to support DA classifiers in the AL sampling process. The results reveal that most annotated sentences present low informativeness in the training dataset and the patterns of these sentences can be easily captured by the DA classifier. We also demonstrate how AL methods can reduce the cost of manual annotation in the AL sampling process.
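The abstract does not name the specific AL sampling methods used, so the following is only a minimal sketch of one common strategy, least-confidence sampling, offered as an assumed example of how "informative" samples might be picked for annotation. The function names and the toy probability vectors are hypothetical.

```python
def least_confidence(probabilities):
    """Uncertainty score: 1 minus the probability of the most likely class."""
    return 1.0 - max(probabilities)


def select_most_informative(pool_probabilities, budget):
    """Return indices of the `budget` unlabeled samples the classifier is least sure about."""
    ranked = sorted(
        range(len(pool_probabilities)),
        key=lambda i: least_confidence(pool_probabilities[i]),
        reverse=True,
    )
    return ranked[:budget]


# Toy predicted class probabilities for four unlabeled sentences (three DA classes).
toy_pool = [
    [0.90, 0.05, 0.05],  # confident prediction: low informativeness
    [0.40, 0.35, 0.25],  # uncertain
    [0.60, 0.30, 0.10],
    [0.34, 0.33, 0.33],  # most uncertain
]
print(select_most_informative(toy_pool, budget=2))
```

In an AL loop, the selected sentences would be sent for manual annotation, added to the training set, and the classifier retrained before the next selection round.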

Practical and Ethical Challenges of Large Language Models in Education: A Systematic Literature Review

Mar 17, 2023

Abstract:Educational technology innovations that have been developed based on large language models (LLMs) have shown the potential to automate the laborious process of generating and analysing textual content. While various innovations have been developed to automate a range of educational tasks (e.g., question generation, feedback provision, and essay grading), there are concerns regarding the practicality and ethicality of these innovations. Such concerns may hinder future research and the adoption of LLMs-based innovations in authentic educational contexts. To address this, we conducted a systematic literature review of 118 peer-reviewed papers published since 2017 to pinpoint the current state of research on using LLMs to automate and support educational tasks. The practical and ethical challenges of LLMs-based innovations were also identified by assessing their technological readiness, model performance, replicability, system transparency, privacy, equality, and beneficence. The findings were summarised into three recommendations for future studies, including updating existing innovations with state-of-the-art models (e.g., GPT-3), embracing the initiative of open-sourcing models/systems, and adopting a human-centred approach throughout the developmental process. These recommendations could support future research to develop practical and ethical innovations for supporting diverse educational tasks and benefiting students, teachers, and institutions.
