Guangquan Xu

Agent Skills in the Wild: An Empirical Study of Security Vulnerabilities at Scale

Jan 15, 2026

Abstract:The rise of AI agent frameworks has introduced agent skills, modular packages containing instructions and executable code that dynamically extend agent capabilities. While this architecture enables powerful customization, skills execute with implicit trust and minimal vetting, creating a significant yet uncharacterized attack surface. We conduct the first large-scale empirical security analysis of this emerging ecosystem, collecting 42,447 skills from two major marketplaces and systematically analyzing 31,132 using SkillScan, a multi-stage detection framework integrating static analysis with LLM-based semantic classification. Our findings reveal pervasive security risks: 26.1% of skills contain at least one vulnerability, spanning 14 distinct patterns across four categories: prompt injection, data exfiltration, privilege escalation, and supply chain risks. Data exfiltration (13.3%) and privilege escalation (11.8%) are most prevalent, while 5.2% of skills exhibit high-severity patterns strongly suggesting malicious intent. We find that skills bundling executable scripts are 2.12x more likely to contain vulnerabilities than instruction-only skills (OR=2.12, p<0.001). Our contributions include: (1) a grounded vulnerability taxonomy derived from 8,126 vulnerable skills, (2) a validated detection methodology achieving 86.7% precision and 82.5% recall, and (3) an open dataset and detection toolkit to support future research. These results demonstrate an urgent need for capability-based permission systems and mandatory security vetting before this attack vector is further exploited.
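The headline figures in this abstract can be cross-checked with a little arithmetic. The Python sketch below recomputes the prevalence from the reported counts, derives an F1 score from the reported precision and recall (F1 is not stated in the abstract; it is computed here), and shows the standard odds-ratio formula for a 2x2 table. The function names are hypothetical, and the abstract does not report the per-group counts behind OR=2.12, so the odds-ratio function is shown without the study's data.

```python
def prevalence(vulnerable: int, analyzed: int) -> float:
    """Fraction of analyzed skills flagged as vulnerable."""
    return vulnerable / analyzed


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def odds_ratio(exposed_pos: int, exposed_neg: int,
               unexposed_pos: int, unexposed_neg: int) -> float:
    """Odds ratio for a 2x2 table.

    'Exposed' would be skills bundling scripts and 'unexposed' would be
    instruction-only skills; 'pos' means vulnerable. These labels are an
    assumption about how the reported OR was set up.
    """
    return (exposed_pos * unexposed_neg) / (exposed_neg * unexposed_pos)


# Counts reported in the abstract: 8,126 vulnerable out of 31,132 analyzed.
rate = prevalence(8126, 31132)
print(f"prevalence: {rate:.1%}")  # about 26.1%, matching the abstract

# Precision and recall reported in the abstract.
print(f"derived F1: {f1_score(0.867, 0.825):.3f}")
```

Note that an odds ratio compares odds rather than probabilities, so OR=2.12 and "2.12x more likely" coincide only approximately, and the gap widens as prevalence grows.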

Lurking in the shadows: Unveiling Stealthy Backdoor Attacks against Personalized Federated Learning

Jun 10, 2024

Abstract:Federated Learning (FL) is a collaborative machine learning technique where multiple clients work together with a central server to train a global model without sharing their private data. However, the distribution shift across clients' non-IID datasets poses a challenge to this one-model-fits-all method, hindering the ability of the global model to adapt effectively to each client's unique local data. To address this challenge, personalized FL (PFL) is designed to allow each client to create a personalized local model tailored to its private data. While extensive research has scrutinized backdoor risks in FL, they have remained underexplored in PFL applications. In this study, we delve deep into the vulnerabilities of PFL to backdoor attacks. Our analysis showcases a tale of two cities. On the one hand, the personalization process in PFL can dilute the backdoor poisoning effects injected into the personalized local models. Furthermore, PFL systems can also deploy both server-end and client-end defense mechanisms to strengthen the barrier against backdoor attacks. On the other hand, our study shows that PFL fortified with these defense methods may offer a false sense of security. We propose PFedBA, a stealthy and effective backdoor attack strategy applicable to PFL systems. PFedBA aligns the backdoor learning task with the main learning task of PFL by optimizing the trigger generation process. Our comprehensive experiments demonstrate the effectiveness of PFedBA in seamlessly embedding triggers into personalized local models. PFedBA yields outstanding attack performance across 10 state-of-the-art PFL algorithms, defeating the 6 existing defense mechanisms. Our study sheds light on the subtle yet potent backdoor threats to PFL systems, urging the community to bolster defenses against emerging backdoor challenges.

Graph Agent Network: Empowering Nodes with Decentralized Communications Capabilities for Adversarial Resilience

Jun 12, 2023

Abstract:End-to-end training with global optimization has popularized graph neural networks (GNNs) for node classification, yet inadvertently introduced vulnerabilities to adversarial edge-perturbing attacks. Adversaries can exploit the inherently open interfaces of GNNs' input and output, perturbing critical edges and thus manipulating the classification results. Current defenses, because they continue to rely on global-optimization-based end-to-end training schemes, inherit the vulnerabilities of GNNs. This is specifically evidenced in their inability to defend against targeted secondary attacks. In this paper, we propose the Graph Agent Network (GAgN) to address the aforementioned vulnerabilities of GNNs. GAgN is a graph-structured agent network in which each node is designed as a 1-hop-view agent. Through decentralized interactions, the agents can learn to infer global perceptions to perform tasks including inferring embeddings, degrees and neighbor relationships for given nodes. This empowers nodes to filter adversarial edges while carrying out classification tasks. Furthermore, agents' limited view prevents malicious messages from propagating globally in GAgN, thereby resisting global-optimization-based secondary attacks. We prove that single-hidden-layer multilayer perceptrons (MLPs) are theoretically sufficient to achieve these functionalities. Experimental results show that GAgN effectively implements all its intended capabilities and, compared to state-of-the-art defenses, achieves optimal classification accuracy on the perturbed datasets.

OFEI: A Semi-black-box Android Adversarial Sample Attack Framework Against DLaaS

May 25, 2021

Abstract:With the growing popularity of Android devices, Android malware is seriously threatening the safety of users. Although such threats can be detected by deep learning as a service (DLaaS), deep neural networks, as the weakest part of DLaaS, are often deceived by adversarial samples crafted by attackers. In this paper, we propose a new semi-black-box attack framework called one-feature-each-iteration (OFEI) to craft Android adversarial samples. This framework modifies as few features as possible and requires less classifier information to fool the classifier. We conduct a controlled experiment to evaluate our OFEI framework by comparing it with the benchmark methods JSMF, GenAttack and pointwise attack. The experimental results show that OFEI achieves a higher misclassification rate of 98.25%. Furthermore, OFEI can extend traditional white-box attack methods from the image field, such as the fast gradient sign method (FGSM) and DeepFool, to craft adversarial samples for Android. Finally, to enhance the security of DLaaS, we use two uncertainties of the Bayesian neural network to construct a combined uncertainty, which is used to detect adversarial samples and achieves a high detection rate of 99.28%.
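The idea of changing one feature per iteration using only limited classifier information can be illustrated with a generic greedy search. The Python sketch below is not the OFEI algorithm from the paper, whose details the abstract does not give; it is a minimal illustration under stated assumptions: features are binary, the attacker may only switch features on (a common constraint so the app keeps working), and the classifier exposes only a malware score. The toy linear classifier, its weights, and all function names are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple


def toy_malware_score(x: Sequence[int], weights: Sequence[float], bias: float) -> float:
    """Hypothetical stand-in classifier: logistic score in (0, 1)."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))


def greedy_one_feature_attack(
    x: Sequence[int],
    score_fn: Callable[[Sequence[int]], float],
    max_iters: int = 10,
    threshold: float = 0.5,
) -> Tuple[List[int], List[int]]:
    """Switch on one feature per iteration, picking the one that lowers
    the score most, until the sample scores below the threshold."""
    adv = list(x)
    changed: List[int] = []
    for _ in range(max_iters):
        current = score_fn(adv)
        if current < threshold:
            break  # already classified as benign
        best_idx, best_score = None, current
        for i, value in enumerate(adv):
            if value == 1:
                continue  # only additions are allowed
            adv[i] = 1
            candidate = score_fn(adv)
            adv[i] = 0
            if candidate < best_score:
                best_idx, best_score = i, candidate
        if best_idx is None:
            break  # no single addition helps
        adv[best_idx] = 1
        changed.append(best_idx)
    return adv, changed


# Hypothetical weights: positive pushes toward "malware", negative toward "benign".
WEIGHTS = [2.0, -1.5, -3.0, 0.5]
BIAS = 0.5


def score(x: Sequence[int]) -> float:
    return toy_malware_score(x, WEIGHTS, BIAS)


adversarial, flipped = greedy_one_feature_attack([1, 0, 0, 0], score)
print(flipped, round(score(adversarial), 3))
```

Each iteration costs one score query per candidate feature, so the number of modified features stays small at the price of more queries; a real attack would need to budget both.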

DynaComm: Accelerating Distributed CNN Training between Edges and Clouds through Dynamic Communication Scheduling

Jan 20, 2021

Abstract:To reduce upload bandwidth and address privacy concerns, deep learning at the network edge has become an emerging topic. Typically, edge devices collaboratively train a shared model using data generated in real time through the Parameter Server framework. Although all the edge devices can share the computing workloads, distributed training over edge networks is still time-consuming because of the transmission of parameters and gradients between parameter servers and edge devices. Focusing on accelerating distributed Convolutional Neural Network (CNN) training at the network edge, we present DynaComm, a novel scheduler that dynamically decomposes each transmission procedure into several segments to achieve an optimal overlap of communication and computation at run time. Through experiments, we verify that DynaComm achieves optimal scheduling in all cases compared to competing strategies while model accuracy remains unaffected.

SFE-GACN: A Novel Unknown Attack Detection Method Using Intra Categories Generation in Embedding Space

Apr 12, 2020

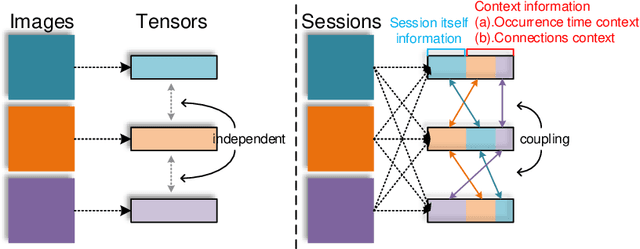

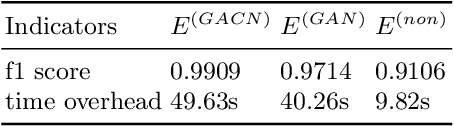

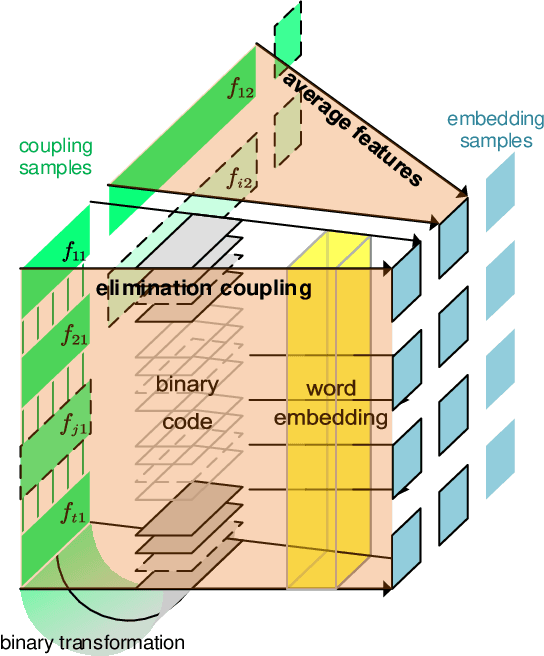

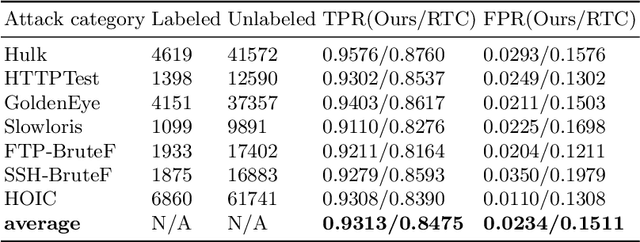

Abstract:In encrypted network traffic intrusion detection, deep learning based schemes have attracted a lot of attention. However, in real-world scenarios, data is often insufficient (few-shot), which leads to various deviations between the model's prediction and the ground truth. Consequently, downstream tasks such as few-shot unknown attack detection are limited by insufficient data. In this paper, we propose a novel unknown attack detection method based on Intra Categories Generation in Embedding Space, namely SFE-GACN, which may offer a solution to the few-shot problem. Concretely, we first propose Session Feature Embedding (SFE) to summarize the context of sessions (the session is the basic granularity of network traffic), bringing the insufficient data into the pre-trained embedding space. In this way, we achieve the goal of preliminary information extension in the few-shot case. Second, we further propose the Generative Adversarial Cooperative Network (GACN), which improves the conventional Generative Adversarial Network by supervising the generated samples to avoid falling into similar categories, and thus enables intra-category sample generation. Our proposed SFE-GACN can accurately generate session samples in the few-shot case and preserve the difference between categories during data augmentation. The detection results show that, compared to the state-of-the-art method, the average TPR is 8.38% higher and the average FPR is 12.77% lower. In addition, we evaluated the graphics generation capabilities of GACN on a graphics dataset; the results show that our proposed GACN can be generalized to generating easily confused multi-category graphics.

Big Data Analytics for Manufacturing Internet of Things: Opportunities, Challenges and Enabling Technologies

Sep 01, 2019

Abstract:Recent advances in information and communication technology (ICT) have promoted the evolution of the conventional computer-aided manufacturing industry into smart data-driven manufacturing. Data analytics on massive manufacturing data can extract huge business value, but it also raises research challenges due to the heterogeneous data types, enormous volume and real-time velocity of manufacturing data. This paper provides an overview of big data analytics in the manufacturing Internet of Things (MIoT). It starts with a discussion of the necessities and challenges of big data analytics on MIoT manufacturing data. Then, the enabling technologies for big data analytics of manufacturing data are surveyed and discussed. Finally, the paper outlines future directions in this promising area.

* 14 pages, 6 figures, 3 tables
