Jianting Ning

Beyond Denial-of-Service: The Puppeteer's Attack for Fine-Grained Control in Ranking-Based Federated Learning

Jan 21, 2026

Abstract: Federated Rank Learning (FRL) is a promising Federated Learning (FL) paradigm designed to be resilient against model poisoning attacks due to its discrete, ranking-based update mechanism. Unlike traditional FL methods that rely on model updates, FRL leverages discrete rankings as a communication parameter between clients and the server. This approach significantly reduces communication costs and limits an adversary's ability to scale or optimize malicious updates in the continuous space, thereby enhancing its robustness. This makes FRL particularly appealing for applications where system security and data privacy are crucial, such as web-based auction and bidding platforms. While FRL substantially reduces the attack surface, we demonstrate that it remains vulnerable to a new class of local model poisoning attack, i.e., fine-grained control attacks. We introduce the Edge Control Attack (ECA), the first fine-grained control attack tailored to ranking-based FL frameworks. Unlike conventional denial-of-service (DoS) attacks that cause conspicuous disruptions, ECA enables an adversary to precisely degrade a competitor's accuracy to any target level while maintaining a normal-looking convergence trajectory, thereby avoiding detection. ECA operates in two stages: (i) identifying and manipulating Ascending and Descending Edges to align the global model with the target model, and (ii) widening the selection boundary gap to stabilize the global model at the target accuracy. Extensive experiments across seven benchmark datasets and nine Byzantine-robust aggregation rules (AGRs) show that ECA achieves fine-grained accuracy control with an average error of only 0.224%, outperforming the baseline by up to 17x. Our findings highlight the need for stronger defenses against advanced poisoning attacks. Our code is available at: https://github.com/Chenzh0205/ECA
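To make the ranking-based update mechanism concrete, the following is a minimal sketch of how a server might aggregate discrete edge rankings by voting and then select the top-ranked edges. It is an illustration under stated assumptions, not the authors' code: the function names `aggregate_rankings` and `select_top_edges`, the sum-of-positions voting rule, and the toy rankings are all hypothetical, and the ECA attack itself is not reproduced here.

```python
from typing import List, Set


def aggregate_rankings(client_rankings: List[List[int]]) -> List[int]:
    """Combine client rankings into one global ranking by summing positions.

    Assumption: each ranking lists edge ids from least to most important,
    so an edge's position in a client's list acts as that client's vote.
    """
    num_edges = len(client_rankings[0])
    scores = [0] * num_edges
    for ranking in client_rankings:
        for position, edge in enumerate(ranking):
            scores[edge] += position
    # Sort edge ids by total score; ties keep the lower edge id first.
    return sorted(range(num_edges), key=lambda edge: scores[edge])


def select_top_edges(global_ranking: List[int], k: int) -> Set[int]:
    """Return the k highest-ranked edges (the end of the global ranking)."""
    return set(global_ranking[-k:])


# Three hypothetical honest clients ranking four edges.
honest = [[0, 1, 2, 3], [0, 1, 3, 2], [1, 0, 2, 3]]
clean_ranking = aggregate_rankings(honest)
clean_selection = select_top_edges(clean_ranking, k=2)

# Two hypothetical malicious clients submit the fully reversed ranking.
malicious = [[3, 2, 1, 0], [3, 2, 1, 0]]
poisoned_ranking = aggregate_rankings(honest + malicious)
poisoned_selection = select_top_edges(poisoned_ranking, k=2)

print(clean_ranking, clean_selection)
print(poisoned_ranking, poisoned_selection)
```

In this toy run the reversed votes reorder the global ranking but do not change which two edges are selected, because each client's vote is bounded by the ranking length. Edges whose scores sit close to the cut between selected and unselected are the ones a small set of votes can move, which is the region the abstract's "selection boundary" refers to.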

Fluent: Round-efficient Secure Aggregation for Private Federated Learning

Mar 10, 2024

Abstract: Federated learning (FL) facilitates collaborative training of machine learning models among a large number of clients while safeguarding the privacy of their local datasets. However, FL remains susceptible to vulnerabilities such as privacy inference and inversion attacks. Single-server secure aggregation schemes were proposed to address these threats. Nonetheless, they encounter practical constraints due to their round and communication complexities. This work introduces Fluent, a round and communication-efficient secure aggregation scheme for private FL. Fluent has several improvements compared to state-of-the-art solutions like Bell et al. (CCS 2020) and Ma et al. (SP 2023): (1) it eliminates frequent handshakes and secret sharing operations by efficiently reusing the shares across multiple training iterations without leaking any private information; (2) it accomplishes both the consistency check and gradient unmasking in one logical step, thereby reducing another round of communication. With these innovations, Fluent achieves the fewest communication rounds (i.e., two in the collection phase) in the malicious server setting, in contrast to at least three rounds in existing schemes. This significantly minimizes the latency for geographically distributed clients; (3) Fluent also introduces Fluent-Dynamic with a participant selection algorithm and an alternative secret sharing scheme. This can facilitate dynamic client joining and enhance the system flexibility and scalability. We implemented Fluent and compared it with existing solutions. Experimental results show that Fluent improves the computational cost by at least 75% and communication overhead by at least 25% for normal clients. Fluent also reduces the communication overhead for the server at the expense of a marginal increase in computational cost.
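For readers unfamiliar with single-server secure aggregation, the sketch below illustrates the generic pairwise-masking idea that such schemes build on: each pair of clients shares a seed, one adds the derived mask and the other subtracts it, so the masks cancel in the server's sum. This is not Fluent's protocol. Share reuse, the consistency check, and dropout handling are omitted, and the names `pairwise_mask`, `mask_update`, `aggregate`, the seeds, and the toy updates are assumptions made for illustration.

```python
import random
from typing import Dict, List

MODULUS = 2**32  # all arithmetic is done modulo this value


def pairwise_mask(seed: int, length: int) -> List[int]:
    """Expand a shared seed into a mask vector.

    random.Random is NOT cryptographically secure; a real scheme would use
    a cryptographic PRG keyed by an agreed secret.
    """
    rng = random.Random(seed)
    return [rng.randrange(MODULUS) for _ in range(length)]


def mask_update(client_id: int, update: List[int],
                seeds: Dict[int, int]) -> List[int]:
    """Mask one client's update using the seeds it shares with its peers."""
    masked = [value % MODULUS for value in update]
    for other_id, seed in seeds.items():
        mask = pairwise_mask(seed, len(update))
        # The lower id adds the mask and the higher id subtracts it.
        sign = 1 if client_id < other_id else -1
        masked = [(m + sign * r) % MODULUS for m, r in zip(masked, mask)]
    return masked


def aggregate(masked_updates: List[List[int]]) -> List[int]:
    """Sum masked updates coordinate-wise; the pairwise masks cancel."""
    return [sum(column) % MODULUS for column in zip(*masked_updates)]


# Hypothetical integer-encoded updates from three clients.
updates = {0: [1, 2, 3], 1: [10, 20, 30], 2: [100, 200, 300]}
# Hypothetical seeds, one per unordered pair of clients.
pair_seeds = {(0, 1): 11, (0, 2): 22, (1, 2): 33}

masked_updates = []
for cid, update in updates.items():
    seeds = {other: pair_seeds[(min(cid, other), max(cid, other))]
             for other in updates if other != cid}
    masked_updates.append(mask_update(cid, update, seeds))

total = aggregate(masked_updates)
print(total)
```

The server sees only the masked vectors, yet their sum equals the sum of the plain updates. Establishing fresh pairwise secrets and secret-sharing them each iteration is the kind of per-round handshake cost that the abstract says Fluent avoids by reusing shares.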

SFE-GACN: A Novel Unknown Attack Detection Method Using Intra Categories Generation in Embedding Space

Apr 12, 2020

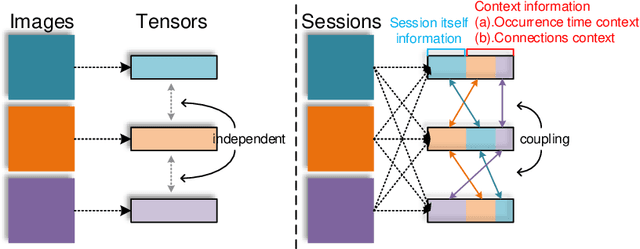

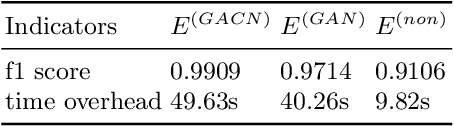

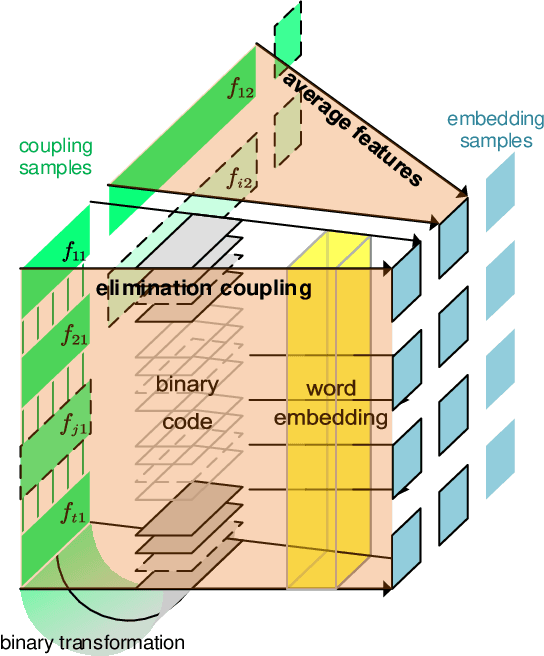

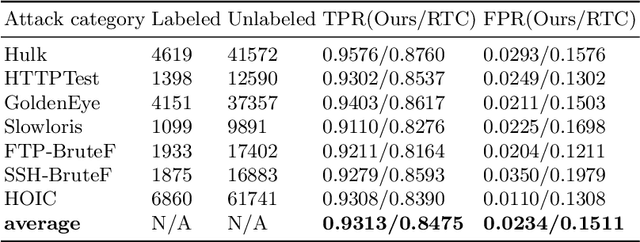

Abstract: In encrypted network traffic intrusion detection, deep learning-based schemes have attracted considerable attention. However, in real-world scenarios, data is often insufficient (few-shot), which leads to deviations between the model's prediction and the ground truth. Consequently, downstream tasks such as unknown attack detection in few-shot settings are limited by insufficient data. In this paper, we propose a novel unknown attack detection method based on Intra Categories Generation in Embedding Space, namely SFE-GACN, as a possible solution to the few-shot problem. Concretely, we first propose Session Feature Embedding (SFE) to summarize the context of sessions (the session is the basic granularity of network traffic), bringing the insufficient data into a pre-trained embedding space. In this way, we achieve a preliminary extension of the available information in the few-shot case. Second, we propose the Generative Adversarial Cooperative Network (GACN), which improves on the conventional Generative Adversarial Network by supervising the generated samples so that they do not fall into similar categories, and thus enables intra-category sample generation. Our proposed SFE-GACN can accurately generate session samples in the few-shot case and preserve the differences between categories during data augmentation. The detection results show that, compared to the state-of-the-art method, the average TPR is 8.38% higher and the average FPR is 12.77% lower. In addition, we evaluated the graphics generation capabilities of GACN on a graphics dataset; the results show that GACN can be generalized to generating easily confused multi-category graphics.
