Efi Psomopoulou

NeuralTouch: Neural Descriptors for Precise Sim-to-Real Tactile Robot Control

Oct 23, 2025

Abstract:Grasping accuracy is a critical prerequisite for precise object manipulation, often requiring careful alignment between the robot hand and object. Neural Descriptor Fields (NDF) offer a promising vision-based method to generate grasping poses that generalize across object categories. However, NDF alone can produce inaccurate poses due to imperfect camera calibration, incomplete point clouds, and object variability. Meanwhile, tactile sensing enables more precise contact, but existing approaches typically learn policies limited to simple, predefined contact geometries. In this work, we introduce NeuralTouch, a multimodal framework that integrates NDF and tactile sensing to enable accurate, generalizable grasping through gentle physical interaction. Our approach leverages NDF to implicitly represent the target contact geometry, from which a deep reinforcement learning (RL) policy is trained to refine the grasp using tactile feedback. This policy is conditioned on the neural descriptors and does not require explicit specification of contact types. We validate NeuralTouch through ablation studies in simulation and zero-shot transfer to real-world manipulation tasks--such as peg-out-in-hole and bottle lid opening--without additional fine-tuning. Results show that NeuralTouch significantly improves grasping accuracy and robustness over baseline methods, offering a general framework for precise, contact-rich robotic manipulation.

A Neuromorphic Incipient Slip Detection System using Papillae Morphology

Sep 11, 2025

Abstract:Detecting incipient slip enables early intervention to prevent object slippage and enhance robotic manipulation safety. However, deploying such systems on edge platforms remains challenging, particularly due to energy constraints. This work presents a neuromorphic tactile sensing system based on the NeuroTac sensor with an extruding papillae-based skin and a spiking convolutional neural network (SCNN) for slip-state classification. The SCNN model achieves 94.33% classification accuracy across three classes (no slip, incipient slip, and gross slip) in slip conditions induced by sensor motion. Under the dynamic gravity-induced slip validation conditions, after temporal smoothing of the SCNN's final-layer spike counts, the system detects incipient slip at least 360 ms prior to gross slip across all trials, consistently identifying incipient slip before gross slip occurs. These results demonstrate that this neuromorphic system has stable and responsive incipient slip detection capability.
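The temporal smoothing step mentioned in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a plain moving average over per-class spike counts followed by an argmax, and the window length, the time step, the class names, and the helper names `smooth_and_classify` and `lead_time_ms` are all hypothetical choices made for illustration.

```python
from collections import deque

# Class order is an assumption for this sketch; the abstract only names the three states.
CLASSES = ("no_slip", "incipient_slip", "gross_slip")


def smooth_and_classify(spike_counts, window=3):
    """Moving-average the per-class spike counts over time, then pick the
    class with the highest smoothed count at every time step.

    spike_counts: list of [no_slip, incipient_slip, gross_slip] counts,
    one entry per time step (hypothetical input layout).
    """
    history = deque(maxlen=window)  # keeps only the most recent `window` steps
    labels = []
    for counts in spike_counts:
        history.append(counts)
        n = len(history)
        means = [sum(step[c] for step in history) / n for c in range(len(CLASSES))]
        labels.append(CLASSES[means.index(max(means))])
    return labels


def lead_time_ms(labels, step_ms):
    """Time between the first incipient-slip label and the first gross-slip
    label, or None if either state never appears."""
    if "incipient_slip" not in labels or "gross_slip" not in labels:
        return None
    return (labels.index("gross_slip") - labels.index("incipient_slip")) * step_ms


if __name__ == "__main__":
    # Made-up spike counts, purely to exercise the functions.
    demo = [[9, 1, 0], [8, 2, 0], [1, 9, 0], [2, 8, 1],
            [1, 9, 2], [0, 3, 9], [0, 1, 9], [0, 0, 9]]
    labels = smooth_and_classify(demo, window=3)
    print(labels)
    print(lead_time_ms(labels, step_ms=10))
```

Smoothing trades a short delay in each label for fewer spurious state flips, which is why a lead time is measured on the smoothed labels rather than on raw per-step counts.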

Text2Touch: Tactile In-Hand Manipulation with LLM-Designed Reward Functions

Sep 09, 2025

Abstract:Large language models (LLMs) are beginning to automate reward design for dexterous manipulation. However, no prior work has considered tactile sensing, which is known to be critical for human-like dexterity. We present Text2Touch, bringing LLM-crafted rewards to the challenging task of multi-axis in-hand object rotation with real-world vision-based tactile sensing in palm-up and palm-down configurations. Our prompt engineering strategy scales to over 70 environment variables, and sim-to-real distillation enables successful policy transfer to a tactile-enabled fully actuated four-fingered dexterous robot hand. Text2Touch significantly outperforms a carefully tuned human-engineered baseline, demonstrating superior rotation speed and stability while relying on reward functions that are an order of magnitude shorter and simpler. These results illustrate how LLM-designed rewards can significantly reduce the time from concept to deployable dexterous tactile skills, supporting more rapid and scalable multimodal robot learning. Project website: https://hpfield.github.io/text2touch-website

Tactile SoftHand-A: 3D-Printed, Tactile, Highly-underactuated, Anthropomorphic Robot Hand with an Antagonistic Tendon Mechanism

Jun 18, 2024

Abstract:For tendon-driven multi-fingered robotic hands, ensuring grasp adaptability while minimizing the number of actuators needed to provide human-like functionality is a challenging problem. Inspired by the Pisa/IIT SoftHand, this paper introduces a 3D-printed, highly-underactuated, five-finger robotic hand named the Tactile SoftHand-A, which features only two actuators. The dual-tendon design allows for the active control of specific (distal or proximal interphalangeal) joints to adjust the hand's grasp gesture. We have also developed a new design of fully 3D-printed tactile sensor that requires no hand assembly and is printed directly as part of the robotic finger. This sensor is integrated into the fingertips and combined with the antagonistic tendon mechanism to develop a human-hand-guided tactile feedback grasping system. The system can actively mirror human hand gestures, adaptively stabilize grasp gestures upon contact, and adjust grasp gestures to prevent object movement after detecting slippage. Finally, we designed four different experiments to evaluate the novel fingers coupled with the antagonistic mechanism for controlling the robotic hand's gestures, adaptive grasping ability, and human-hand-guided tactile feedback grasping capability. The experimental results demonstrate that the Tactile SoftHand-A can adaptively grasp objects of a wide range of shapes and automatically adjust its gripping gestures upon detecting contact and slippage. Overall, this study points the way towards a class of low-cost, accessible, 3D-printable, underactuated human-like robotic hands, and we openly release the designs to help others build upon this work. This work is open-sourced at github.com/SoutheastWind/Tactile_SoftHand_A

AnyRotate: Gravity-Invariant In-Hand Object Rotation with Sim-to-Real Touch

May 12, 2024

Abstract:In-hand manipulation is an integral component of human dexterity. Our hands rely on tactile feedback for stable and reactive motions to ensure objects do not slip away unintentionally during manipulation. For a robot hand, this level of dexterity requires extracting and utilizing rich contact information for precise motor control. In this paper, we present AnyRotate, a system for gravity-invariant multi-axis in-hand object rotation using dense featured sim-to-real touch. We construct a continuous contact feature representation to provide tactile feedback for training a policy in simulation and introduce an approach to perform zero-shot policy transfer by training an observation model to bridge the sim-to-real gap. Our experiments highlight the benefit of detailed contact information when handling objects with varying properties. In the real world, we demonstrate successful sim-to-real transfer of the dense tactile policy, generalizing to a diverse range of objects for various rotation axes and hand directions and outperforming other forms of low-dimensional touch. Interestingly, despite not having explicit slip detection, rich multi-fingered tactile sensing can implicitly detect object movement within grasp and provide a reactive behavior that improves the robustness of the policy, highlighting the importance of information-rich tactile sensing for in-hand manipulation.

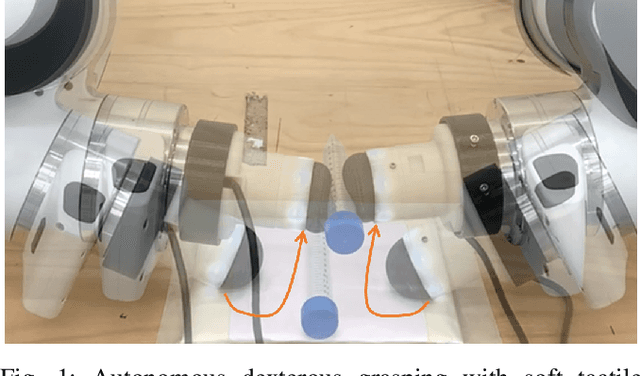

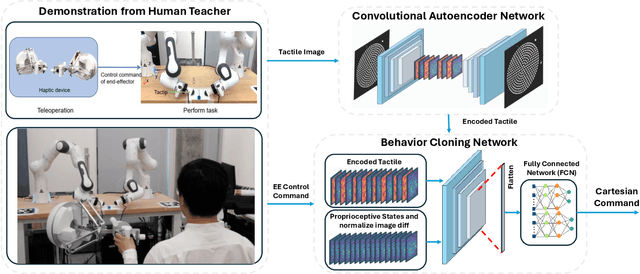

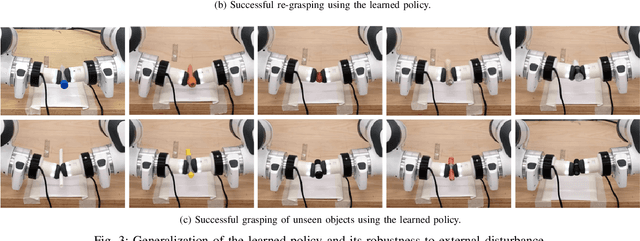

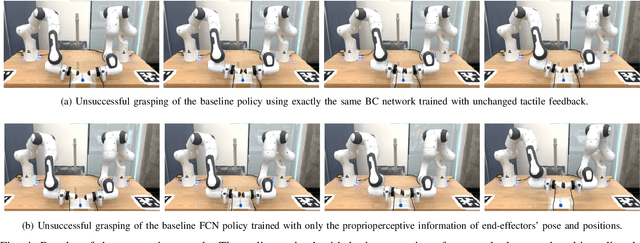

Learning Fine Pinch-Grasp Skills using Tactile Sensing from Real Demonstration Data

Jul 10, 2023

Abstract:This work develops a data-efficient learning from demonstration framework which exploits the use of rich tactile sensing and achieves fine dexterous bimanual manipulation. Specifically, we formulated a convolutional autoencoder network that can effectively extract and encode high-dimensional tactile information. Further, we developed a behaviour cloning network that can learn human-like sensorimotor skills demonstrated directly on the robot hardware in the task space by fusing both proprioceptive and tactile feedback. Our comparison study with the baseline method revealed the effectiveness of the contact information, which enabled successful extraction and replication of the demonstrated motor skills. Extensive experiments on real dual-arm robots demonstrated the robustness and effectiveness of the fine pinch grasp policy directly learned from one-shot demonstration, including grasping of the same object with different initial poses, generalizing to ten unseen new objects, robust and firm grasping against external pushes, as well as contact-aware and reactive re-grasping in case of dropping objects under very large perturbations. Moreover, the saliency map method is employed to describe the weight distribution across various modalities during pinch grasping. The video is available online at: https://youtu.be/4Pg29bUBKqs

Tactile-Driven Gentle Grasping for Human-Robot Collaborative Tasks

Mar 16, 2023

Abstract:This paper presents a control scheme for force sensitive, gentle grasping with a Pisa/IIT anthropomorphic SoftHand equipped with a miniaturised version of the TacTip optical tactile sensor on all five fingertips. The tactile sensors provide high-resolution information about a grasp and how the fingers interact with held objects. We first describe a series of hardware developments for performing asynchronous sensor data acquisition and processing, resulting in a fast control loop sufficient for real-time grasp control. We then develop a novel grasp controller that uses tactile feedback from all five fingertip sensors simultaneously to gently and stably grasp 43 objects of varying geometry and stiffness, which is then applied to a human-to-robot handover task. These developments open the door to more advanced manipulation with underactuated hands via fast reflexive control using high-resolution tactile sensing.

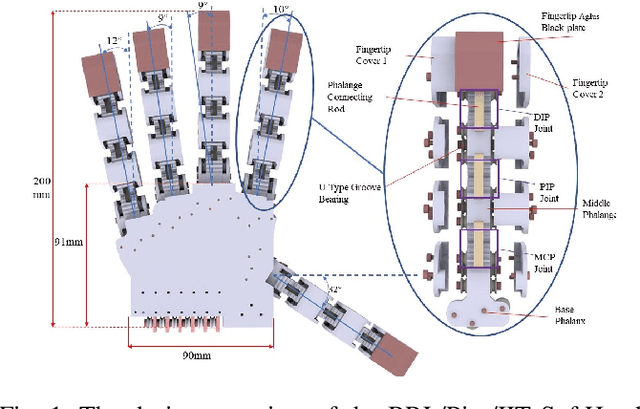

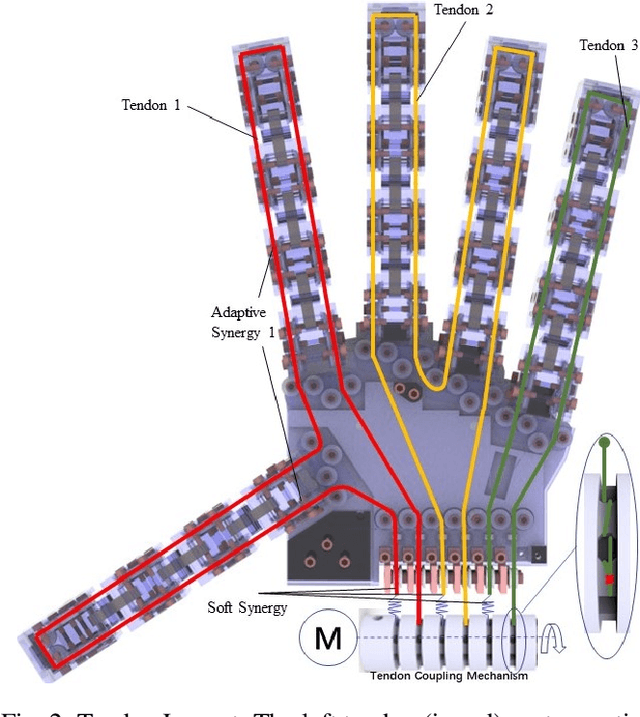

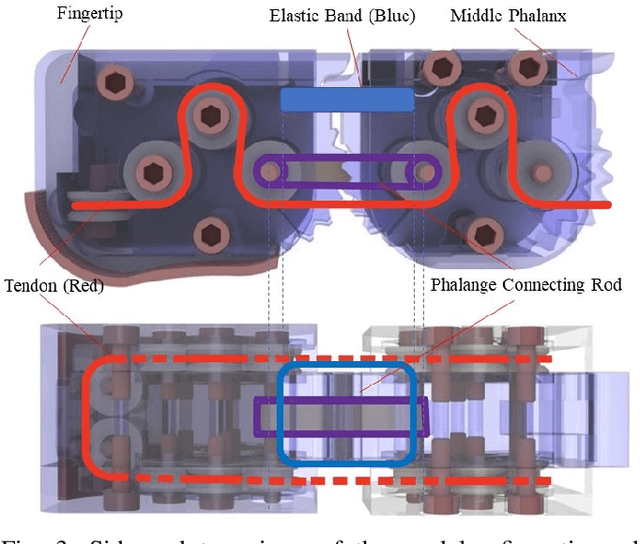

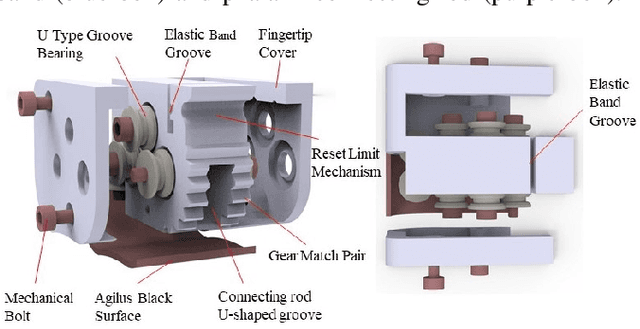

BRL/Pisa/IIT SoftHand: A Low-cost, 3D-Printed, Underactuated, Tendon-Driven Hand with Soft and Adaptive Synergies

Jun 25, 2022

Abstract:This paper introduces the BRL/Pisa/IIT (BPI) SoftHand: a single actuator-driven, low-cost, 3D-printed, tendon-driven, underactuated robot hand that can be used to perform a range of grasping tasks. Based on the adaptive synergies of the Pisa/IIT SoftHand, we design a new joint system and tendon routing to facilitate the inclusion of both soft and adaptive synergies, which helps us balance durability, affordability and grasping performance of the hand. The focus of this work is on the design, simulation, synergies and grasping tests of this SoftHand. The novel phalanges are designed and printed based on linkages, gear pairs and geometric restraint mechanisms, and can be applied to most tendon-driven robotic hands. We show that the robot hand can successfully grasp and lift various target objects and adapt to hold complex geometric shapes, reflecting the successful adoption of the soft and adaptive synergies. We intend to open-source the design of the hand so that it can be built cheaply on a home 3D-printer. For more detail: https://sites.google.com/view/bpi-softhandtactile-group-bri/brlpisaiit-softhand-design

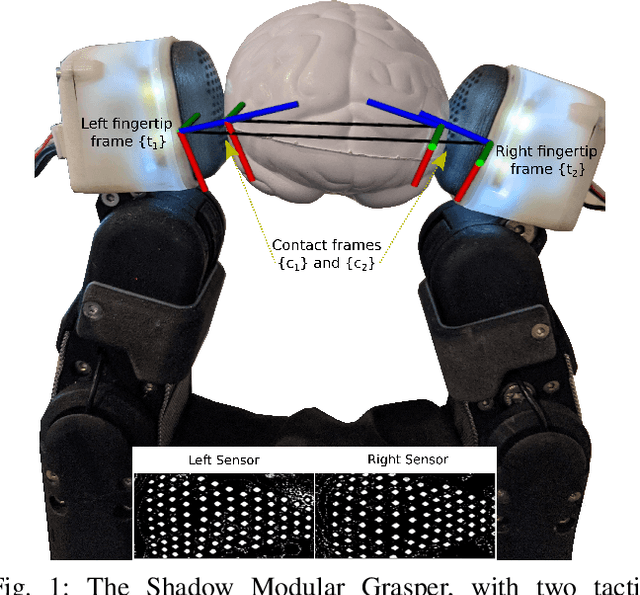

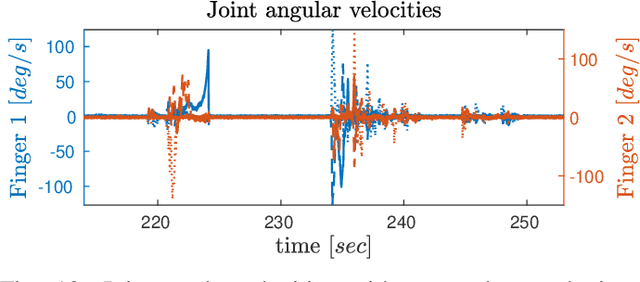

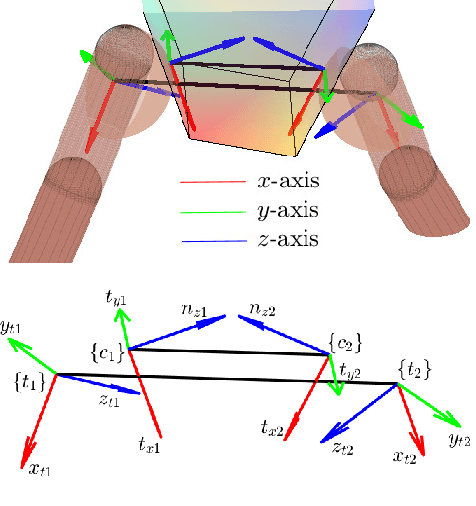

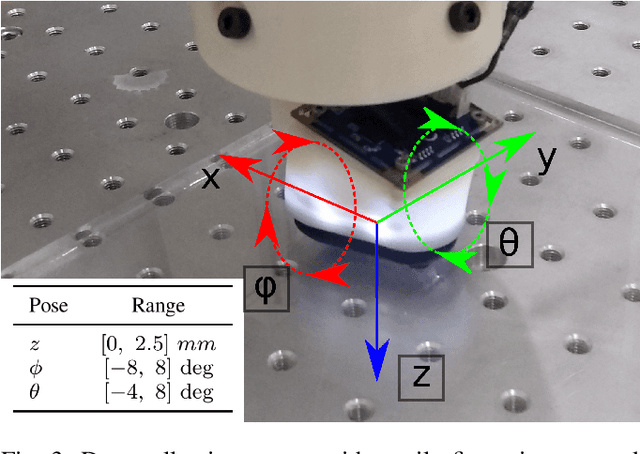

A robust controller for stable 3D pinching using tactile sensing

Jun 02, 2021

Abstract:This paper proposes a controller for stable grasping of unknown-shaped objects by two robotic fingers with tactile fingertips. The grasp is stabilised by rolling the fingertips on the contact surface and applying a desired grasping force to reach an equilibrium state. The validation is both in simulation and on a fully-actuated robot hand (the Shadow Modular Grasper) fitted with custom-built optical tactile sensors (based on the BRL TacTip). The controller requires the orientations of the contact surfaces, which are estimated by regressing a deep convolutional neural network over the tactile images. Overall, the grasp system is demonstrated to achieve stable equilibrium poses on a range of objects varying in shape and softness, with the system being robust to perturbations and measurement errors. This approach also has promise to extend beyond grasping to stable in-hand object manipulation with multiple fingers.
