David A. W. Barton

Towards Foundational Models for Dynamical System Reconstruction: Hierarchical Meta-Learning via Mixture of Experts

Feb 07, 2025

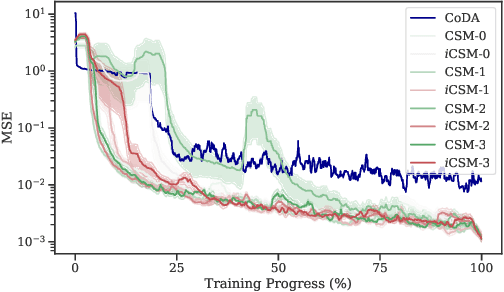

Abstract: As foundational models reshape scientific discovery, a bottleneck persists in dynamical system reconstruction (DSR): the ability to learn across system hierarchies. Many meta-learning approaches have been applied successfully to single systems, but falter when confronted with sparse, loosely related datasets requiring multiple hierarchies to be learned. Mixture of Experts (MoE) offers a natural paradigm to address these challenges. Despite their potential, we demonstrate that naive MoEs are inadequate for the nuanced demands of hierarchical DSR, largely due to their gradient descent-based gating update mechanism which leads to slow updates and conflicted routing during training. To overcome this limitation, we introduce MixER: Mixture of Expert Reconstructors, a novel sparse top-1 MoE layer employing a custom gating update algorithm based on $K$-means and least squares. Extensive experiments validate MixER's capabilities, demonstrating efficient training and scalability to systems of up to ten parametric ordinary differential equations. However, our layer underperforms state-of-the-art meta-learners in high-data regimes, particularly when each expert is constrained to process only a fraction of a dataset composed of highly related data points. Further analysis with synthetic and neuroscientific time series suggests that the quality of the contextual representations generated by MixER is closely linked to the presence of hierarchical structure in the data.
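To make the idea of a sparse top-1 gate with a $K$-means-style update concrete, here is a minimal sketch in plain Python. It is not the MixER algorithm: the abstract does not detail the gating update, and the least-squares step is omitted entirely. The function names (`top1_route`, `update_centroids`) and the toy data are hypothetical and chosen only for illustration.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def top1_route(context, centroids):
    """Hard top-1 gate: return the index of the centroid nearest to `context`.

    Assumption: each expert is represented by one centroid in context space.
    """
    return min(range(len(centroids)),
               key=lambda k: squared_distance(context, centroids[k]))


def update_centroids(contexts, assignments, centroids):
    """One K-means update: move each centroid to the mean of its members.

    A centroid with no assigned contexts is left unchanged.
    """
    updated = []
    for k, old in enumerate(centroids):
        members = [c for c, a in zip(contexts, assignments) if a == k]
        if not members:
            updated.append(list(old))
            continue
        updated.append([sum(m[d] for m in members) / len(members)
                        for d in range(len(old))])
    return updated


# Toy data (hypothetical): four 2-D context vectors forming two clusters.
contexts = [[0.0, 0.1], [0.2, 0.0], [5.0, 5.1], [4.9, 5.0]]
centroids = [[0.0, 0.0], [1.0, 1.0]]

# Route every context to exactly one expert, then refresh the gate.
assignments = [top1_route(c, centroids) for c in contexts]
centroids = update_centroids(contexts, assignments, centroids)
```

Because the gate is updated by reassignment and averaging rather than by gradient descent, each update moves the routing directly to the current cluster structure, which is the kind of behaviour the abstract contrasts with slow gradient-based gating.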

Extending Contextual Self-Modulation: Meta-Learning Across Modalities, Task Dimensionalities, and Data Regimes

Oct 02, 2024

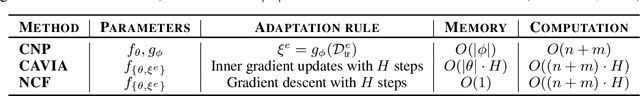

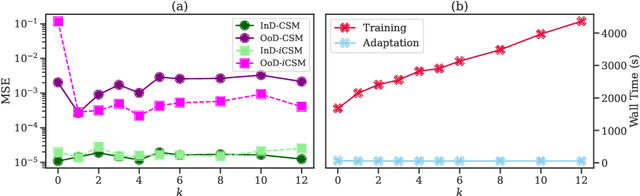

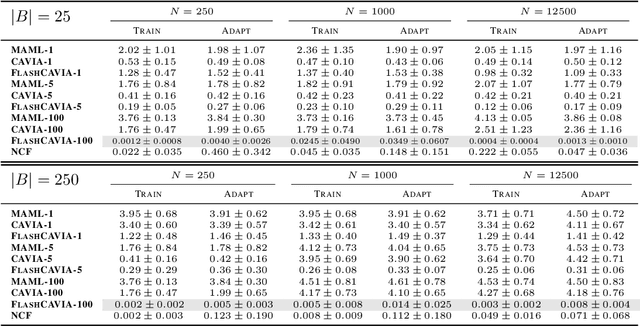

Abstract: Contextual Self-Modulation (CSM) is a potent regularization mechanism for the Neural Context Flow (NCF) framework which demonstrates powerful meta-learning of physical systems. However, CSM has limitations in its applicability across different modalities and in high-data regimes. In this work, we introduce two extensions: $i$CSM, which expands CSM to infinite-dimensional tasks, and StochasticNCF, which improves scalability. These extensions are demonstrated through comprehensive experimentation on a range of tasks, including dynamical systems with parameter variations, computer vision challenges, and curve fitting problems. $i$CSM embeds the contexts into an infinite-dimensional function space, as opposed to CSM which uses finite-dimensional context vectors. StochasticNCF enables the application of both CSM and $i$CSM to high-data scenarios by providing an unbiased approximation of meta-gradient updates through a sampled set of nearest environments. Additionally, we incorporate higher-order Taylor expansions via Taylor-Mode automatic differentiation, revealing that higher-order approximations do not necessarily enhance generalization. Finally, we demonstrate how CSM can be integrated into other meta-learning frameworks with FlashCAVIA, a computationally efficient extension of the CAVIA meta-learning framework (Zintgraf et al. 2019). FlashCAVIA outperforms its predecessor across various benchmarks and reinforces the utility of bi-level optimization techniques. Together, these contributions establish a robust framework for tackling an expanded spectrum of meta-learning tasks, offering practical insights for out-of-distribution generalization. Our open-sourced library, designed for flexible integration of self-modulation into contextual meta-learning workflows, is available at github.com/ddrous/self-mod.

AnyRotate: Gravity-Invariant In-Hand Object Rotation with Sim-to-Real Touch

May 12, 2024

Abstract: In-hand manipulation is an integral component of human dexterity. Our hands rely on tactile feedback for stable and reactive motions to ensure objects do not slip away unintentionally during manipulation. For a robot hand, this level of dexterity requires extracting and utilizing rich contact information for precise motor control. In this paper, we present AnyRotate, a system for gravity-invariant multi-axis in-hand object rotation using dense featured sim-to-real touch. We construct a continuous contact feature representation to provide tactile feedback for training a policy in simulation and introduce an approach to perform zero-shot policy transfer by training an observation model to bridge the sim-to-real gap. Our experiments highlight the benefit of detailed contact information when handling objects with varying properties. In the real world, we demonstrate successful sim-to-real transfer of the dense tactile policy, generalizing to a diverse range of objects for various rotation axes and hand directions and outperforming other forms of low-dimensional touch. Interestingly, despite not having explicit slip detection, rich multi-fingered tactile sensing can implicitly detect object movement within grasp and provide a reactive behavior that improves the robustness of the policy, highlighting the importance of information-rich tactile sensing for in-hand manipulation.

Neural Context Flows for Learning Generalizable Dynamical Systems

May 03, 2024

Abstract: Neural Ordinary Differential Equations typically struggle to generalize to new dynamical behaviors created by parameter changes in the underlying system, even when the dynamics are close to previously seen behaviors. The issue gets worse when the changing parameters are unobserved, i.e., their value or influence is not directly measurable when collecting data. We introduce Neural Context Flow (NCF), a framework that encodes said unobserved parameters in a latent context vector as input to a vector field. NCFs leverage differentiability of the vector field with respect to the parameters, along with first-order Taylor expansion to allow any context vector to influence trajectories from other parameters. We validate our method and compare it to established Multi-Task and Meta-Learning alternatives, showing competitive performance in mean squared error for in-domain and out-of-distribution evaluation on the Lotka-Volterra, Glycolytic Oscillator, and Gray-Scott problems. This study holds practical implications for foundational models in science and related areas that benefit from conditional neural ODEs. Our code is openly available at https://github.com/ddrous/ncflow.
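The first-order Taylor expansion mentioned in this abstract can be written out generically as below. The notation is an assumption made for illustration and may differ from the paper's: $f_\theta$ denotes the vector field, $x$ the state, and $\xi^e$, $\xi^j$ the context vectors of two environments.

$$
f_\theta(x, \xi^e) \approx f_\theta(x, \xi^j) + \nabla_\xi f_\theta(x, \xi^j)\,(\xi^e - \xi^j)
$$

Read this way, the context $\xi^j$ of another environment contributes to the vector field evaluated for environment $e$, with the approximation being accurate when $\xi^e$ and $\xi^j$ are close.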

A Comparison of Mesh-Free Differentiable Programming and Data-Driven Strategies for Optimal Control under PDE Constraints

Oct 02, 2023

Abstract: The field of Optimal Control under Partial Differential Equations (PDE) constraints is rapidly changing under the influence of Deep Learning and the accompanying automatic differentiation libraries. Novel techniques like Physics-Informed Neural Networks (PINNs) and Differentiable Programming (DP) are to be contrasted with established numerical schemes like Direct-Adjoint Looping (DAL). We present a comprehensive comparison of DAL, PINN, and DP using a general-purpose mesh-free differentiable PDE solver based on Radial Basis Functions. Under Laplace and Navier-Stokes equations, we found DP to be extremely effective as it produces the most accurate gradients, thriving even when DAL fails and PINNs struggle. Additionally, we provide a detailed benchmark highlighting the limited conditions under which any of those methods can be efficiently used. Our work provides a guide to Optimal Control practitioners and connects them further to the Deep Learning community.

Sim-to-Real Model-Based and Model-Free Deep Reinforcement Learning for Tactile Pushing

Jul 26, 2023

Abstract: Object pushing presents a key non-prehensile manipulation problem that is illustrative of more complex robotic manipulation tasks. While deep reinforcement learning (RL) methods have demonstrated impressive learning capabilities using visual input, a lack of tactile sensing limits their capability for fine and reliable control during manipulation. Here we propose a deep RL approach to object pushing using tactile sensing without visual input, namely tactile pushing. We present a goal-conditioned formulation that allows both model-free and model-based RL to obtain accurate policies for pushing an object to a goal. To achieve real-world performance, we adopt a sim-to-real approach. Our results demonstrate that it is possible to train on a single object and a limited sample of goals to produce precise and reliable policies that can generalize to a variety of unseen objects and pushing scenarios without domain randomization. We experiment with the trained agents in harsh pushing conditions, and show that with significantly more training samples, a model-free policy can outperform a model-based planner, generating shorter and more reliable pushing trajectories despite large disturbances. The simplicity of our training environment and effective real-world performance highlight the value of rich tactile information for fine manipulation. Code and videos are available at https://sites.google.com/view/tactile-rl-pushing/.

Using scientific machine learning for experimental bifurcation analysis of dynamic systems

Oct 22, 2021

Abstract: Augmenting mechanistic ordinary differential equation (ODE) models with machine-learnable structures is a novel approach to create highly accurate, low-dimensional models of engineering systems incorporating both expert knowledge and reality through measurement data. Our exploratory study focuses on training universal differential equation (UDE) models for physical nonlinear dynamical systems with limit cycles: an aerofoil undergoing flutter oscillations and an electrodynamic nonlinear oscillator. We consider examples where training data is generated by numerical simulations, and we also apply the proposed modelling concept to physical experiments, allowing us to investigate problems with a wide range of complexity. To collect the training data, the method of control-based continuation is used as it captures not just the stable but also the unstable limit cycles of the observed system. This feature makes it possible to extract more information about the observed system than the standard, open-loop approach would allow. We use both neural networks and Gaussian processes as universal approximators alongside the mechanistic models to give a critical assessment of the accuracy and robustness of the UDE modelling approach. We also highlight the potential issues one may run into during the training procedure, indicating the limits of the current modelling framework.

Walking on TacTip toes: A tactile sensing foot for walking robots

Aug 12, 2020

Abstract: Little research into tactile feet has been done for walking robots despite the benefits such feedback could give when walking on uneven terrain. This paper describes the development of a simple, robust and inexpensive tactile foot for legged robots based on a high-resolution biomimetic TacTip tactile sensor. Several design improvements were made to facilitate tactile sensing while walking, including the use of phosphorescent markers to remove the need for internal LED lighting. The usefulness of the foot is verified on a quadrupedal robot performing a beam walking task and it is found the sensor prevents the robot falling off the beam. Further, this capability also enables the robot to walk along the edge of a curved table. This tactile foot design can be easily modified for use with any legged robot, including much larger walking robots, enabling stable walking in challenging terrain.

Learning to Live Life on the Edge: Online Learning for Data-Efficient Tactile Contour Following

Sep 12, 2019

Abstract: Tactile sensing has been used for a variety of robotic exploration and manipulation tasks but a common constraint is a requirement for a large amount of training data. This paper addresses the issue of data-efficiency by proposing a novel method for online learning based on a Gaussian Process Latent Variable Model (GP-LVM), whereby the robot learns from tactile data whilst performing a contour following task thus enabling generalisation to a wide variety of stimuli. The results show that contour following is successful with very little data and is robust to novel stimuli. This work highlights that even with a simple learning architecture there are significant advantages to be gained in efficient and robust task performance by using latent variable models and online learning for tactile sensing tasks. This paves the way for a new generation of robust, fast, and data-efficient tactile systems.

Shear-invariant Sliding Contact Perception with a Soft Tactile Sensor

May 02, 2019

Abstract: Manipulation tasks often require robots to be continuously in contact with an object. Therefore, tactile perception systems need to handle continuous contact data. Shear deformation causes the tactile sensor to output path-dependent readings in contrast to discrete contact readings. As such, in some continuous-contact tasks, sliding can be regarded as a disturbance over the sensor signal. Here we present a shear-invariant perception method based on principal component analysis (PCA) which outputs the required information about the environment despite sliding motion. A compliant tactile sensor (the TacTip) is used to investigate continuous tactile contact. First, we evaluate the method offline using test data collected whilst the sensor slides over an edge. Then, the method is used within a contour-following task applied to 6 objects with varying curvatures; all contours are successfully traced. The method demonstrates generalisation capabilities and could underlie a more sophisticated controller for challenging manipulation or exploration tasks in unstructured environments. A video showing the work described in the paper can be found at https://youtu.be/wrTM61-pieU
