Zoe Doulgeri

From RGB images to Dynamic Movement Primitives for planar tasks

Mar 06, 2023

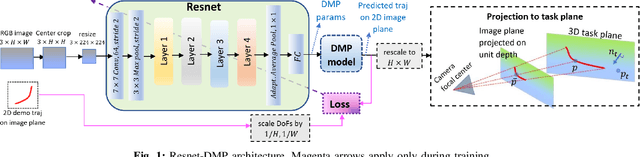

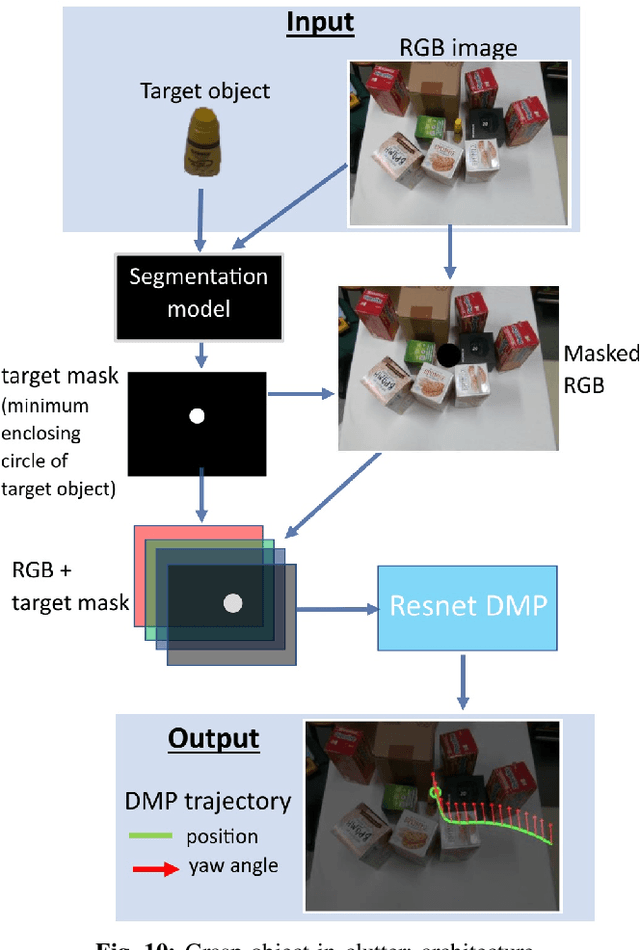

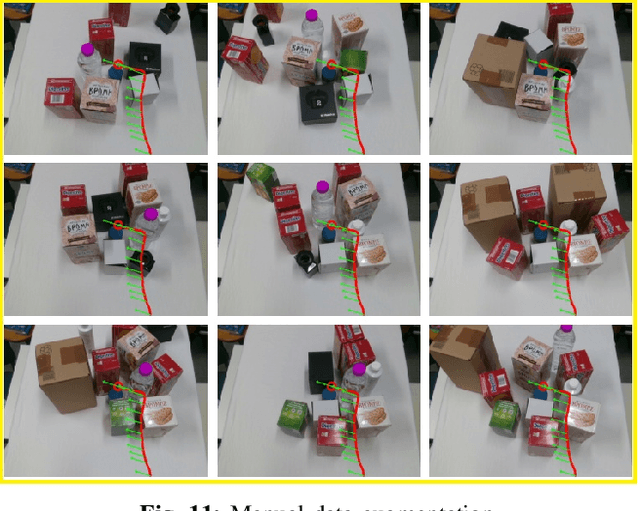

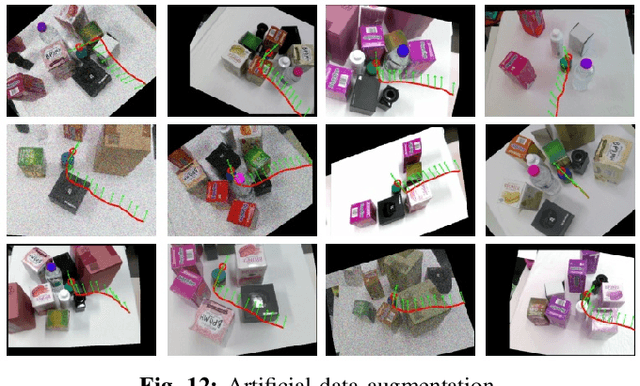

Abstract: DMP have been extensively applied in various robotic tasks thanks to their generalization and robustness properties. However, the successful execution of a given task may require different motion patterns that take into account not only the initial and target positions but also features of the overall structure and layout of the scene. To make DMP applicable to a wider range of tasks and further automate their use, in this work we design a framework that combines deep residual networks with DMP and can encapsulate different motion patterns of a planar task, provided through human demonstrations on the RGB image plane. From new raw RGB visual input we can then automatically infer the appropriate DMP parameters, i.e. the weights that determine the motion pattern and the initial/target positions. We experimentally validate our method on the task of unveiling the stem of a grape bunch from occluding leaves using a mock-up vine setup, and compare it with another state-of-the-art (SoA) method for inferring DMP from images.
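
The abstract treats the DMP weights and the initial/target positions as the parameters to be inferred. To make that parameterization concrete, here is a minimal sketch of a classical one-dimensional discrete DMP (Ijspeert-style formulation) learned from a single demonstration and then replayed towards a new target. The class name `DMP1D`, the gains, and the basis placement are illustrative assumptions; the residual network that predicts these parameters from images is not reproduced.

```python
import numpy as np


class DMP1D:
    """Minimal 1-D discrete DMP in the classical (Ijspeert-style) form.

    Illustrative sketch only: gains, basis placement and the class itself
    are assumptions, not taken from the paper.
    """

    def __init__(self, n_basis=30, alpha_z=25.0, alpha_x=4.0):
        self.alpha_z = alpha_z
        self.beta_z = alpha_z / 4.0  # critically damped spring-damper
        self.alpha_x = alpha_x
        # Centres evenly spaced in time, mapped onto the phase x = exp(-alpha_x t / tau)
        self.c = np.exp(-alpha_x * np.linspace(0.0, 1.0, n_basis))
        spacing = np.diff(self.c)
        self.h = 1.0 / np.append(spacing, spacing[-1]) ** 2  # basis widths
        self.w = np.zeros(n_basis)

    def _psi(self, x):
        # Gaussian basis activations, shape (len(x), n_basis)
        return np.exp(-self.h * (np.atleast_1d(x)[:, None] - self.c) ** 2)

    def fit(self, t, y):
        """Learn the weights from one demonstration y(t) that starts and ends at rest."""
        self.tau = t[-1] - t[0]
        y0_d, g_d = y[0], y[-1]
        yd = np.gradient(y, t)
        ydd = np.gradient(yd, t)
        x = np.exp(-self.alpha_x * (t - t[0]) / self.tau)
        # Forcing term the demonstration requires from the transformation system
        f_target = self.tau**2 * ydd - self.alpha_z * (
            self.beta_z * (g_d - y) - self.tau * yd
        )
        s = x * (g_d - y0_d)  # classical spatial scaling by the goal displacement
        psi = self._psi(x)
        # Locally weighted regression: one weight per basis function
        self.w = (s * f_target) @ psi / ((s**2) @ psi + 1e-10)
        return self

    def rollout(self, y0, g, dt=0.001):
        """Euler-integrate the DMP from y0 (at rest) towards goal g over duration tau."""
        y, z, x = y0, 0.0, 1.0
        n_steps = int(round(self.tau / dt)) + 1
        ys = np.empty(n_steps)
        for k in range(n_steps):
            ys[k] = y
            psi = self._psi(x)[0]
            f = psi @ self.w / (psi.sum() + 1e-10) * x * (g - y0)
            dz = (self.alpha_z * (self.beta_z * (g - y) - z) + f) / self.tau
            dy = z / self.tau
            dx = -self.alpha_x * x / self.tau
            y, z, x = y + dy * dt, z + dz * dt, x + dx * dt
        return ys


# Minimum-jerk demonstration from 0 to 1 over 1 s
t = np.linspace(0.0, 1.0, 1001)
s = t / t[-1]
y_demo = 10 * s**3 - 15 * s**4 + 6 * s**5
dmp = DMP1D().fit(t, y_demo)
y_same = dmp.rollout(0.0, 1.0)  # reproduces the demonstration
y_new = dmp.rollout(0.0, 2.0)   # same learned weights, new target
```

In this sketch the weights `w` carry the motion pattern while `y0` and `g` are supplied at rollout time, which mirrors the split between shape parameters and endpoints described in the abstract.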

Efficient DMP generalization to time-varying targets, external signals and via-points

Dec 27, 2022

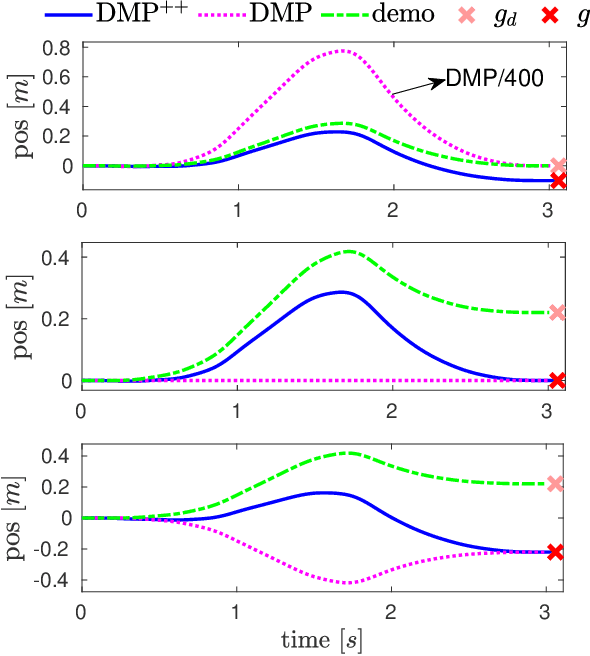

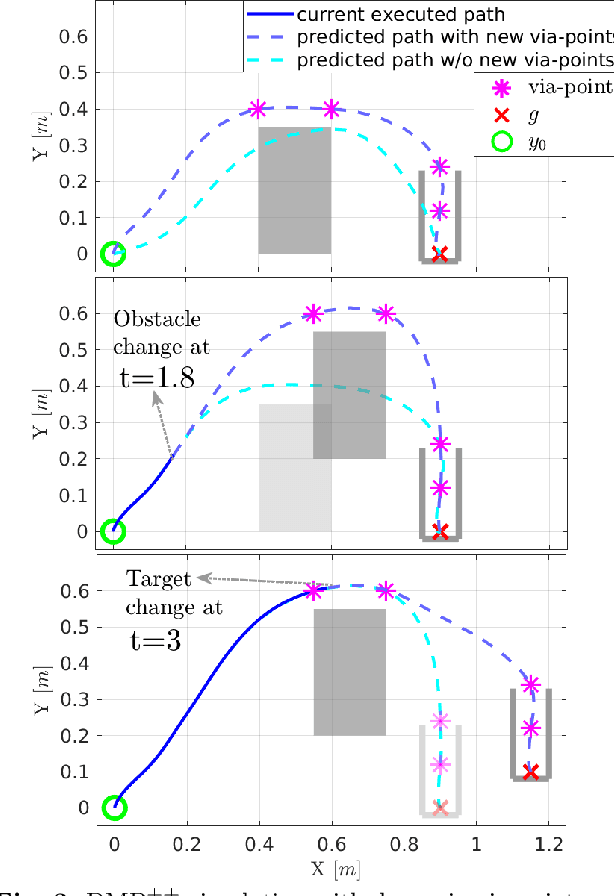

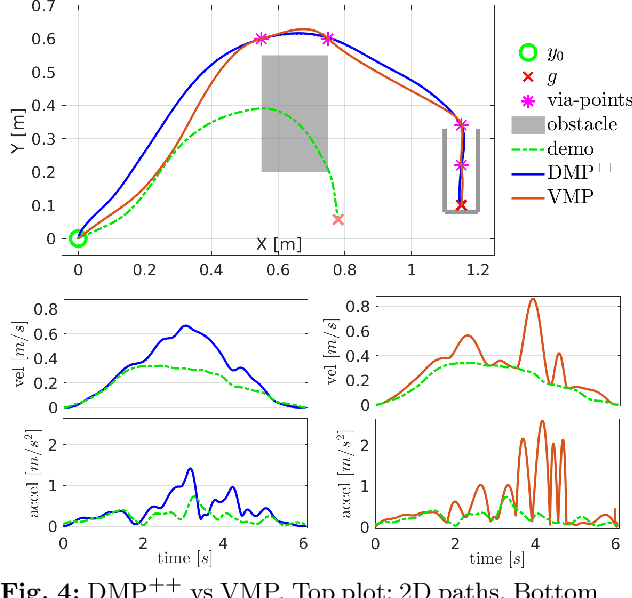

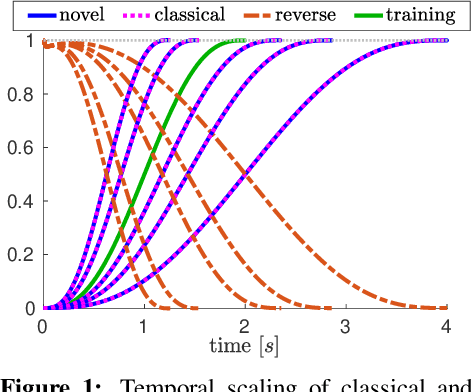

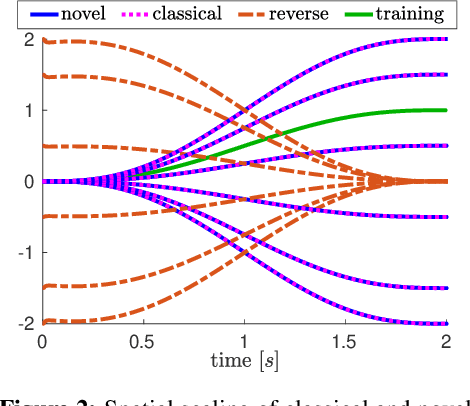

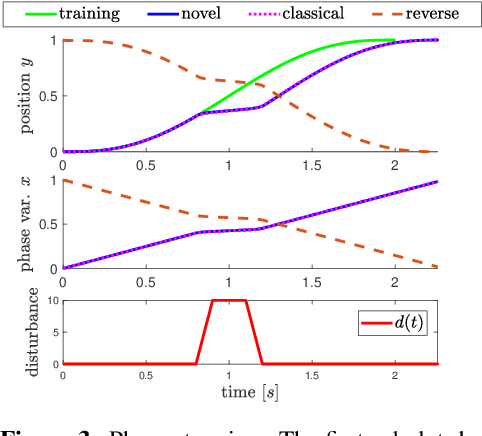

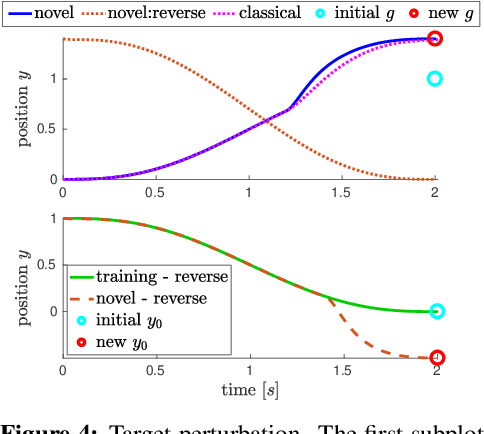

Abstract: Dynamic Movement Primitives (DMP) have found remarkable applicability and success in various robotic tasks, which can be mainly attributed to their generalization and robustness properties. Nevertheless, their generalization is based only on the trajectory endpoints (initial and target position). Moreover, the spatial generalization of DMP is known to suffer from shortcomings such as over-scaling and mirroring of the motion. In this work we propose a novel generalization scheme, based on optimizing the DMP weights online so that the acceleration profile, and hence the underlying training trajectory pattern, is preserved. This approach remedies the shortcomings of classical DMP scaling and additionally allows the DMP to also generalize to intermediate points (via-points) and external signals (coupling terms), while preserving the training trajectory pattern. Extensive comparative simulations with the classical DMP and other variants are conducted, while experimental results validate the applicability and efficacy of the proposed method.
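
A short derivation shows where over-scaling and mirroring come from in the classical scheme. It assumes the common formulation in which the forcing term is multiplied by the goal displacement $g - y_0$, the duration $\tau$ is kept fixed, and the motion starts at rest; the notation is the usual one from the DMP literature rather than the paper's. For one degree of freedom,

$$
\tau^2 \ddot y = \alpha_z\big(\beta_z (g - y) - \tau \dot y\big) + f(x)\,(g - y_0), \qquad \tau \dot x = -\alpha_x x .
$$

Writing $e = y - y_0$ gives

$$
\tau^2 \ddot e = \alpha_z\big(\beta_z\,((g - y_0) - e) - \tau \dot e\big) + f(x)\,(g - y_0), \qquad e(0) = \dot e(0) = 0,
$$

a linear system with zero initial conditions whose every input is proportional to $g - y_0$ (the phase $x$ does not depend on $g$), so $e(t)$ is proportional to $g - y_0$. Comparing with the reproduction $\hat y_d$ of the demonstration (start $y_{0,d}$, goal $g_d$) yields

$$
y(t) = y_0 + k\,\big(\hat y_d(t) - y_{0,d}\big), \qquad k = \frac{g - y_0}{g_d - y_{0,d}} .
$$

Per dimension the learned pattern is therefore only stretched by $k$: a negative $k$ mirrors the motion, and a demonstration whose displacement $g_d - y_{0,d}$ is close to zero in some dimension produces a very large $|k|$, i.e. over-scaling.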

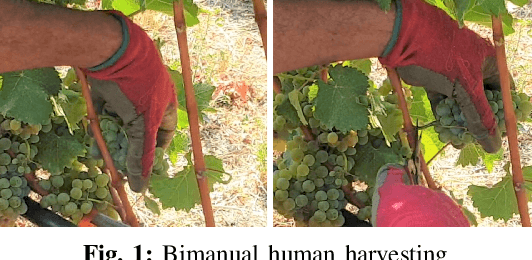

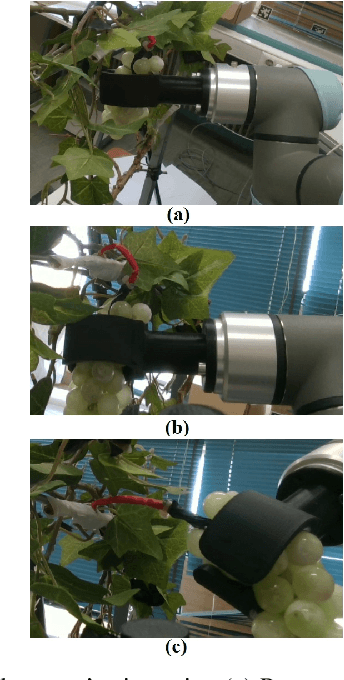

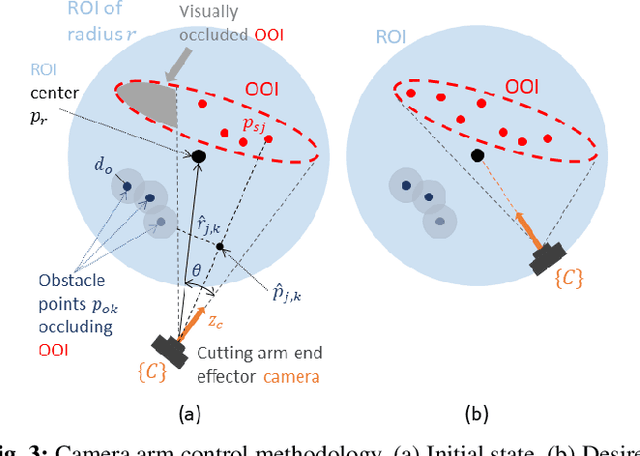

Bimanual crop manipulation for human-inspired robotic harvesting

Sep 13, 2022

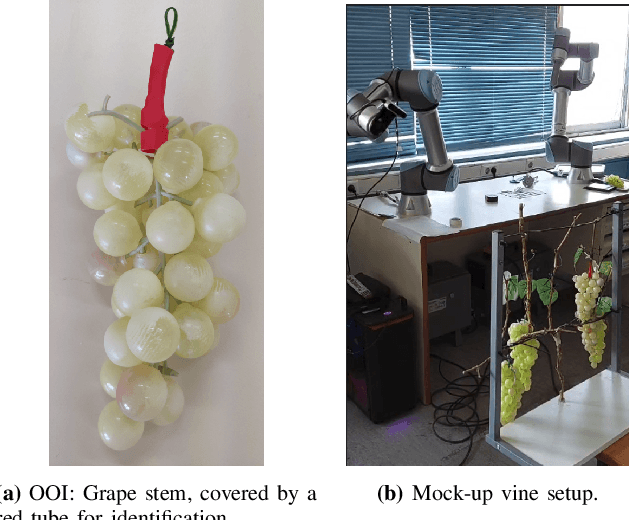

Abstract: Most existing robotic harvesters use a unimanual approach: a single arm grasps the crop and detaches it, either via a detachment movement or by cutting its stem with a specially designed gripper/cutter end-effector. However, such unimanual solutions cannot be applied to sensitive crops in cluttered environments, such as grapes in a vineyard, where obstacles may occlude the stem and leave no space for placing the cutter. In such cases, a bimanual robot is required to visually unveil the stem and manipulate the grasped crop to create cutting affordances, similar to the practice used by humans. In this work, a dual-arm coordinated motion control methodology for reaching a stem pre-cut state is proposed. The camera-equipped arm carrying the cutter reaches the stem and unveils it as much as possible, while the second arm moves the grasped crop towards the surrounding free space to facilitate cutting its stem. The proposed methodology is evaluated through lab experiments on a mock-up vine setup with a plastic grape cluster, involving two UR5e robotic arms and a RealSense D415 camera.

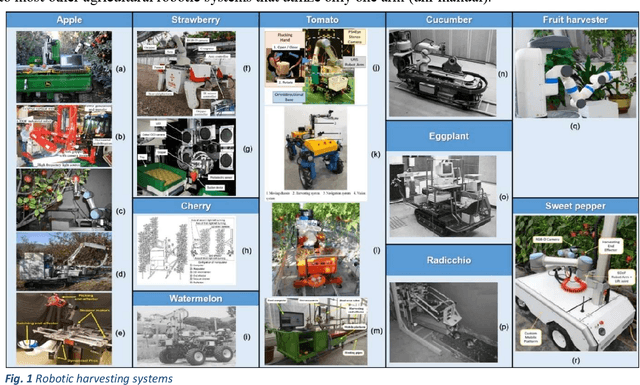

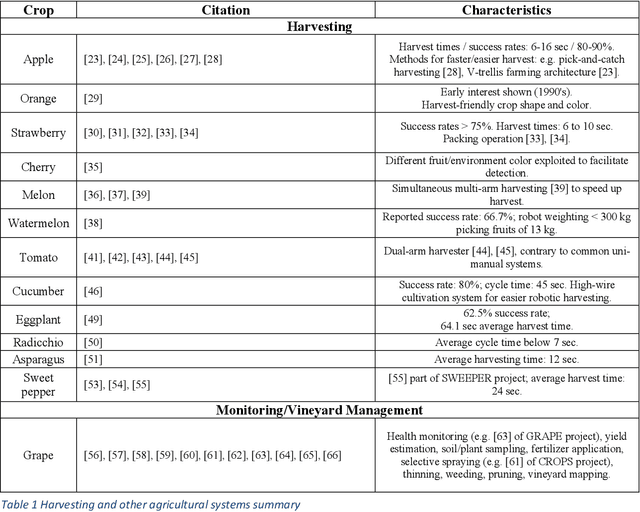

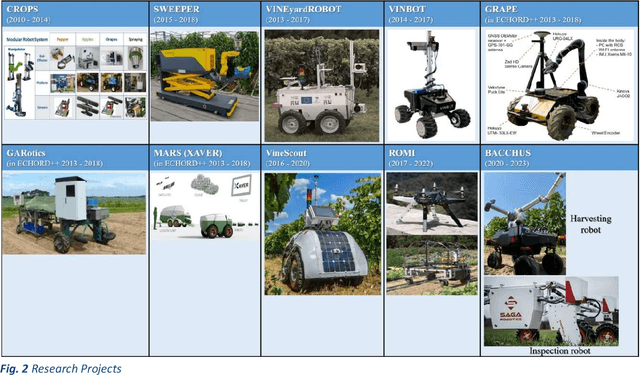

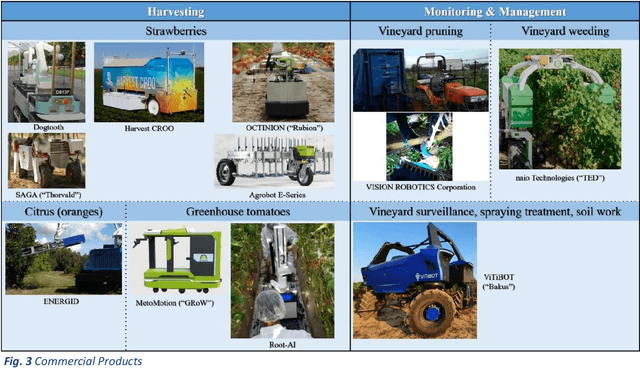

A Survey of Robotic Harvesting Systems and Enabling Technologies

Jul 22, 2022

Abstract: This paper presents a comprehensive review of ground agricultural robotic systems and applications, with a special focus on harvesting, spanning research and commercial products and results, as well as their enabling technologies. Most of the literature concerns the development of crop detection and vision-based field navigation, along with their related challenges. Health monitoring, yield estimation, water status inspection, seed planting and weed removal are frequently encountered tasks. Regarding robotic harvesting, the crops mainly considered in publications, research projects and commercial products are apples, strawberries, tomatoes and sweet peppers. The reported robotic harvesting solutions typically consist of a mobile platform, a single robotic arm/manipulator and various navigation/vision systems. This paper reviews the reported development of specific functionalities and hardware typically required by an operating agricultural robot harvester, including (a) vision systems, (b) motion planning/navigation methodologies (for the robotic platform and/or arm), (c) Human-Robot Interaction (HRI) strategies with 3D visualization, (d) system operation planning and grasping strategies, and (e) robotic end-effector/gripper design. Clearly, automated agriculture, and specifically autonomous harvesting via robotic systems, is a research area that remains wide open, offering several challenges where new contributions can be made.

A passive admittance controller to enforce Remote Center of Motion and Tool Spatial constraints with application in hands-on surgical procedures

Mar 03, 2022

Abstract: Restricting the feasible motions of a manipulator link constrained to move through an entry port is a common problem in minimally invasive surgery. Additional spatial restrictions are required to protect sensitive regions from unintentional damage. In this work, we design a target admittance model that is proven to enforce the human's manipulation of the robot tool through a remote center of motion (RCM) and to guarantee that the tool never enters or touches forbidden regions. The control scheme is proven passive under the exertion of a human force, ensuring manipulation stability and smooth, natural motion in hands-on surgical procedures, which enhances the user's feeling of control over the task. Its performance is demonstrated by experiments with a setup mimicking a hands-on surgical procedure, comprising a KUKA LWR4+ and a virtual intraoperative environment.
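
The abstract does not give the admittance model itself. As a hedged illustration of two geometric quantities that constraints of this kind are commonly built on, the sketch below computes (i) the distance between the entry port and the tool axis, which is zero when the remote center of motion is respected, and (ii) the signed clearance of the tool tip from a forbidden region, here assumed to be a sphere. The function names `rcm_error` and `sphere_clearance` and the spherical region are hypothetical.

```python
import numpy as np


def rcm_error(p_base, p_tip, p_rcm):
    """Distance from the entry port p_rcm to the tool axis through p_base and p_tip.

    Also returns the normalized position of the closest axis point
    (0 at p_base, 1 at p_tip). Hypothetical helper for illustration.
    """
    p_base, p_tip, p_rcm = (np.asarray(p, dtype=float) for p in (p_base, p_tip, p_rcm))
    axis = p_tip - p_base
    length = np.linalg.norm(axis)
    u = axis / length  # unit vector along the tool shaft
    s = np.dot(p_rcm - p_base, u)  # projection of the port onto the axis
    closest = p_base + s * u
    return np.linalg.norm(p_rcm - closest), s / length


def sphere_clearance(p_tip, center, radius):
    """Signed distance of the tool tip from a spherical forbidden region (negative = inside)."""
    p_tip = np.asarray(p_tip, dtype=float)
    center = np.asarray(center, dtype=float)
    return np.linalg.norm(p_tip - center) - radius


# Tool shaft from (0, 0, 1) down to the tip at the origin; port slightly off-axis
err, ratio = rcm_error([0, 0, 1], [0, 0, 0], [0.1, 0, 0.5])
clearance = sphere_clearance([0, 0, 0], [0, 0, -0.2], 0.1)
```

How these quantities enter the paper's admittance model, and its passivity proof, is not reproduced here.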

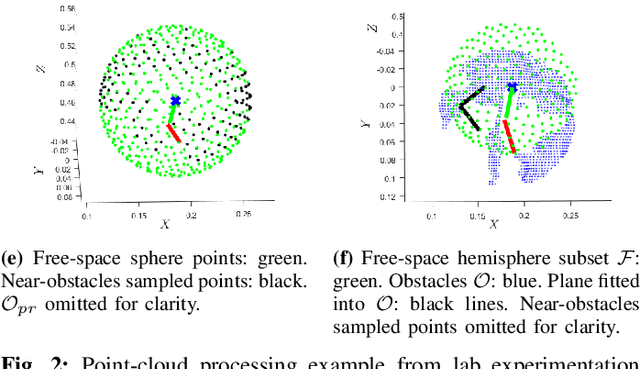

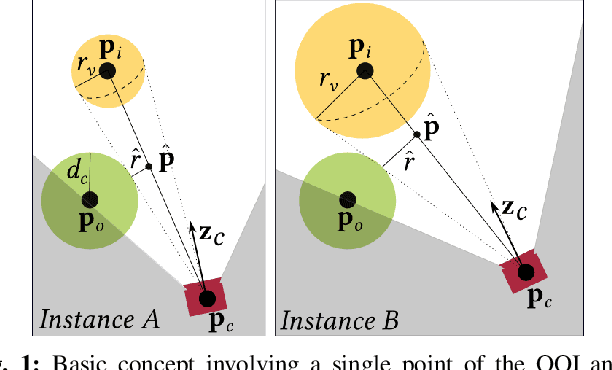

A controller for reaching and unveiling a partially occluded object of interest with an eye-in-hand robot

Dec 17, 2021

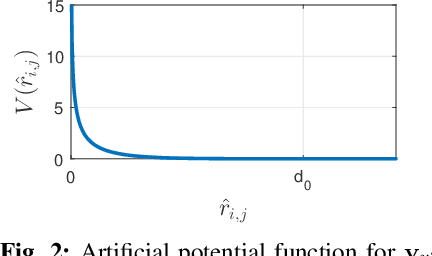

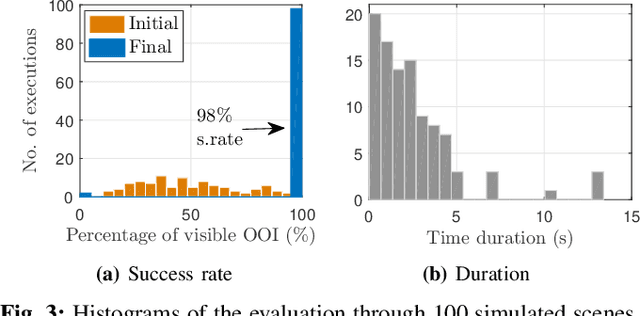

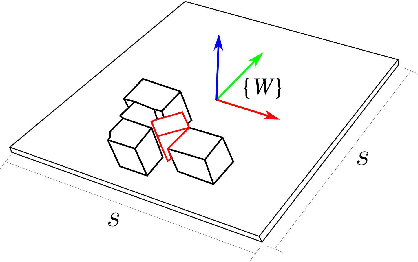

Abstract: In this work, a control scheme for approaching and unveiling a partially occluded object of interest is proposed. The control scheme relies only on the classified point cloud obtained by the in-hand camera attached to the robot's end-effector. It is shown that the proposed controller reaches the vicinity of the object, progressively unveiling the neighborhood of each visible point of the object of interest. It can therefore potentially achieve complete unveiling of the object. The proposed control scheme is evaluated through simulations and experiments with a UR5e robot with an in-hand RealSense camera on a mock-up vine setup for unveiling the stem of a grape.

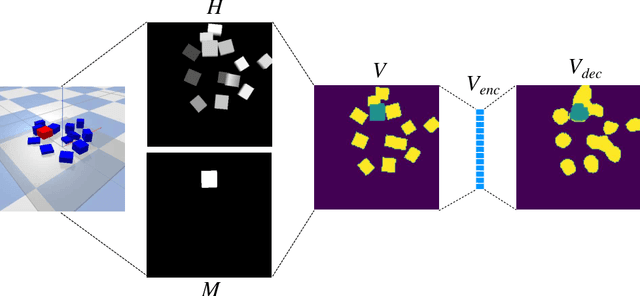

Bridging the gap between learning and heuristic based pushing policies

Nov 22, 2021

Abstract: Non-prehensile pushing actions have the potential to singulate a target object from its surrounding clutter in order to facilitate robotic grasping of the target. To address this problem, we use a heuristic rule that moves the target object towards the empty space of the workspace and demonstrate that this simple rule achieves singulation. Furthermore, we incorporate this heuristic rule into the reward in order to train reinforcement learning (RL) agents for singulation more efficiently. Simulation experiments demonstrate that this insight increases performance. Finally, our results show that the RL-based policy implicitly learns something similar to one of the heuristics used, in terms of decision making.
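
The abstract states the heuristic only as moving the target towards the workspace's empty space. One hypothetical way to turn that idea into a push direction on a 2-D occupancy grid is to try a few candidate directions and pick the one with the longest obstacle-free run from the target. The grid representation, the eight-direction discretization and the names `free_run` and `push_direction` are all assumptions, not the paper's rule.

```python
import numpy as np


def free_run(occ, start, d, max_steps=1000):
    """Count unit steps from `start` along direction d before hitting clutter or the border.

    occ is a boolean grid (True = occupied by other objects); the target's own
    cells are assumed to be marked free. Hypothetical helper.
    """
    rows, cols = occ.shape
    n = 0
    while n < max_steps:
        r = int(round(start[0] + (n + 1) * d[0]))
        c = int(round(start[1] + (n + 1) * d[1]))
        if not (0 <= r < rows and 0 <= c < cols) or occ[r, c]:
            break
        n += 1
    return n


def push_direction(occ, target, n_dirs=8):
    """Return the unit (d_row, d_col) direction with the longest free run from the target."""
    angles = np.linspace(0.0, 2.0 * np.pi, n_dirs, endpoint=False)
    dirs = np.stack([np.sin(angles), np.cos(angles)], axis=1)
    runs = [free_run(occ, target, d) for d in dirs]
    return dirs[int(np.argmax(runs))]


# Clutter on the left and along the top and bottom; only the right side is open
occ = np.zeros((20, 20), dtype=bool)
occ[:, :8] = True
occ[:5, :] = True
occ[15:, :] = True
direction = push_direction(occ, (10, 9))
```

A rule of this form is cheap to evaluate, which is what would make it usable as a shaping term inside an RL reward, as the abstract describes; the exact reward used in the paper is not given here.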

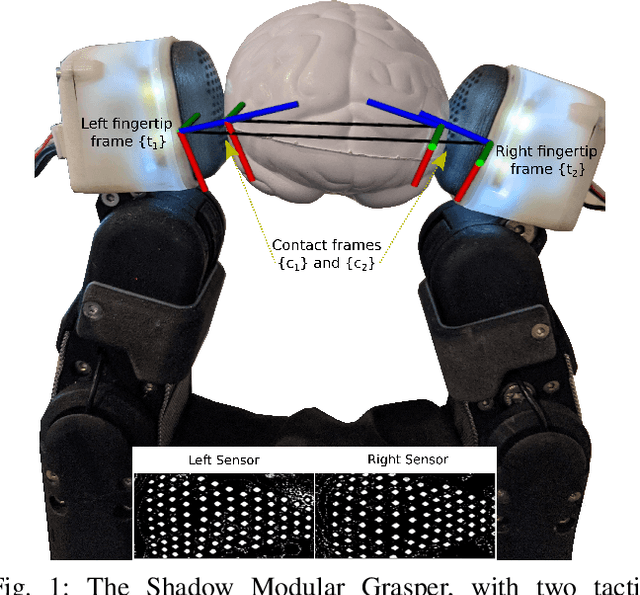

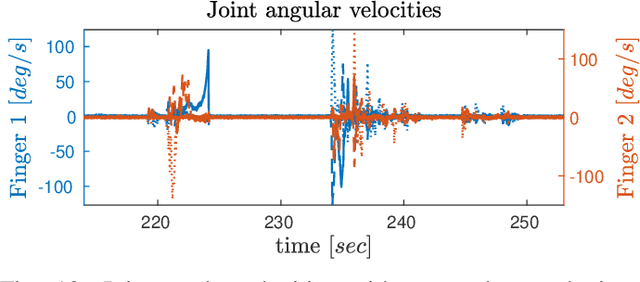

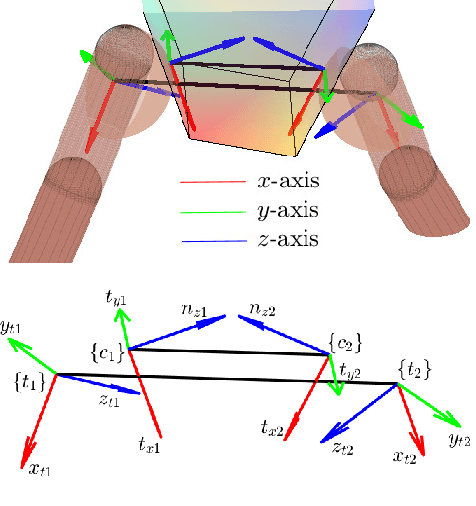

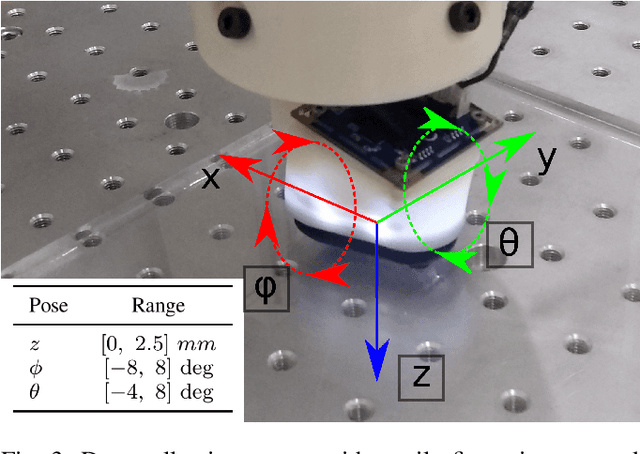

A robust controller for stable 3D pinching using tactile sensing

Jun 02, 2021

Abstract: This paper proposes a controller for stable grasping of unknown-shaped objects by two robotic fingers with tactile fingertips. The grasp is stabilised by rolling the fingertips on the contact surface and applying a desired grasping force to reach an equilibrium state. The validation is both in simulation and on a fully-actuated robot hand (the Shadow Modular Grasper) fitted with custom-built optical tactile sensors (based on the BRL TacTip). The controller requires the orientations of the contact surfaces, which are estimated by regressing a deep convolutional neural network over the tactile images. Overall, the grasp system is demonstrated to achieve stable equilibrium poses on a range of objects varying in shape and softness, with the system being robust to perturbations and measurement errors. This approach also has promise to extend beyond grasping to stable in-hand object manipulation with multiple fingers.

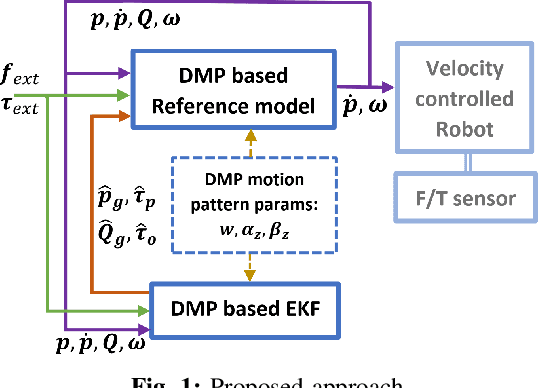

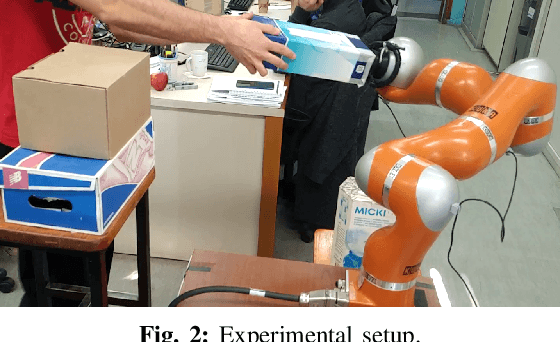

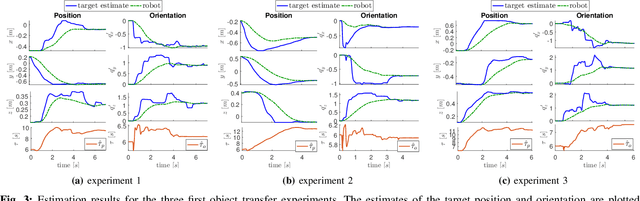

Human-robot collaborative object transfer using human motion prediction based on Cartesian pose Dynamic Movement Primitives

Apr 07, 2021

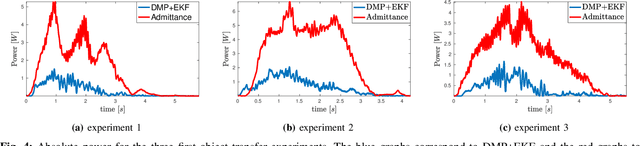

Abstract: In this work, the problem of human-robot collaborative object transfer to unknown target poses is addressed. The desired pattern of the end-effector pose trajectory to a known target pose is encoded using DMPs (Dynamic Movement Primitives). For transporting the object to new, unknown targets, a DMP-based reference model and an EKF (Extended Kalman Filter) for estimating the target pose and time duration of the human's intended motion are proposed. A stability analysis of the overall scheme is provided. Experiments using a KUKA LWR4+ robot equipped with an ATI sensor at its end-effector validate the efficacy of the scheme with respect to the required human effort and compare it with an admittance control scheme.

A Reversible Dynamic Movement Primitive formulation

Oct 15, 2020

Abstract: In this work, a novel Dynamic Movement Primitive (DMP) formulation is proposed which supports reversibility, i.e. backwards reproduction of a learned trajectory, while also sharing all favourable properties of classical DMP. Classical DMP have been extensively used for encoding and reproducing a desired motion pattern in several robotic applications. However, they lack reversibility, which is a useful and expedient property that can be leveraged in many scenarios. The proposed formulation is analyzed theoretically and is validated through simulations and experiments.
