Eduardo Pignatelli

Department of Bioengineering, Imperial College London

The impact of intrinsic rewards on exploration in Reinforcement Learning

Jan 20, 2025

Abstract: One of the open challenges in Reinforcement Learning is the hard exploration problem in sparse reward environments. Various types of intrinsic rewards have been proposed to address this challenge by pushing towards diversity. This diversity might be imposed at different levels, encouraging the agent to explore different states, policies or behaviours (State, Policy and Skill level diversity, respectively). However, the impact of diversity on the agent's behaviour remains unclear. In this work, we aim to fill this gap by studying the effect of different levels of diversity imposed by intrinsic rewards on the exploration patterns of RL agents. We select four intrinsic rewards (State Count, Intrinsic Curiosity Module (ICM), Maximum Entropy, and Diversity is all you need (DIAYN)), each pushing for a different diversity level. We conduct an empirical study on MiniGrid environments to compare their impact on exploration, considering various metrics related to the agent's exploration, namely: episodic return, observation coverage, agent's position coverage, policy entropy, and timeframes to reach the sparse reward. The main outcome of the study is that State Count leads to the best exploration performance in the case of low-dimensional observations. However, in the case of RGB observations, the performance of State Count is highly degraded, mostly due to representation learning challenges. Conversely, Maximum Entropy is less impacted, resulting in more robust exploration, despite not always being optimal. Lastly, our empirical study revealed that learning diverse skills with DIAYN, often linked to improved robustness and generalisation, does not promote exploration in MiniGrid environments. This is because: i) learning the skill space itself can be challenging, and ii) exploration within the skill space prioritises differentiating between behaviours rather than achieving uniform state visitation.
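
As an illustration of the State Count idea mentioned in the abstract above, here is a minimal sketch of a count-based intrinsic reward. The abstract does not state the exact formula used in the paper; the `scale / sqrt(N(s))` bonus, the class name `StateCountBonus`, and the `scale` parameter are assumptions chosen for illustration, and states are assumed to be hashable (for example, grid coordinates).

```python
import math
from collections import defaultdict


class StateCountBonus:
    """Hypothetical count-based bonus: r_int(s) = scale / sqrt(N(s))."""

    def __init__(self, scale=1.0):
        self.scale = scale
        # Visit counter; unseen states start at zero.
        self.counts = defaultdict(int)

    def __call__(self, state):
        # Increment the visit count first, so a first visit yields `scale`.
        self.counts[state] += 1
        return self.scale / math.sqrt(self.counts[state])


bonus = StateCountBonus()
# The state (0, 0) is visited three times, (0, 1) once.
rewards = [bonus(s) for s in [(0, 0), (0, 1), (0, 0), (0, 0)]]
```

The bonus shrinks as a state is revisited, so rarely visited states stay attractive. A table of counts like this only works when observations can be used as dictionary keys, which is one reason high-dimensional inputs such as RGB images typically need a learned or hashed representation first.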

BALROG: Benchmarking Agentic LLM and VLM Reasoning On Games

Nov 20, 2024

Abstract: Large Language Models (LLMs) and Vision Language Models (VLMs) possess extensive knowledge and exhibit promising reasoning abilities; however, they still struggle to perform well in complex, dynamic environments. Real-world tasks require handling intricate interactions, advanced spatial reasoning, long-term planning, and continuous exploration of new strategies, yet we lack effective methodologies for comprehensively evaluating these capabilities. To address this gap, we introduce BALROG, a novel benchmark designed to assess the agentic capabilities of LLMs and VLMs through a diverse set of challenging games. Our benchmark incorporates a range of existing reinforcement learning environments with varying levels of difficulty, from tasks that are solvable by non-expert humans in seconds to extremely challenging ones that may take years to master (e.g., the NetHack Learning Environment). We devise fine-grained metrics to measure performance and conduct an extensive evaluation of several popular open-source and closed-source LLMs and VLMs. Our findings indicate that while current models achieve partial success in the easier games, they struggle significantly with more challenging tasks. Notably, we observe severe deficiencies in vision-based decision-making, as models perform worse when visual representations of the environments are provided. We release BALROG as an open and user-friendly benchmark to facilitate future research and development in the agentic community.

NAVIX: Scaling MiniGrid Environments with JAX

Jul 28, 2024

Abstract: As Deep Reinforcement Learning (Deep RL) research moves towards solving large-scale worlds, efficient environment simulations become crucial for rapid experimentation. However, most existing environments struggle to scale to high throughput, setting back meaningful progress. Interactions are typically computed on the CPU, limiting training speed and throughput, due to slower computation and communication overhead when distributing the task across multiple machines. Ultimately, Deep RL training is CPU-bound, and developing batched, fast, and scalable environments has become a frontier for progress. Among the most used Reinforcement Learning (RL) environments, MiniGrid is at the foundation of several studies on exploration, curriculum learning, representation learning, diversity, meta-learning, credit assignment, and language-conditioned RL, and still suffers from the limitations described above. In this work, we introduce NAVIX, a re-implementation of MiniGrid in JAX. NAVIX achieves over 200 000x speed improvements in batch mode, supporting up to 2048 agents in parallel on a single Nvidia A100 80 GB. This reduces experiment times from one week to 15 minutes, promoting faster design iterations and more scalable RL model development.

A Survey of Temporal Credit Assignment in Deep Reinforcement Learning

Dec 02, 2023

Abstract: The Credit Assignment Problem (CAP) refers to the longstanding challenge of Reinforcement Learning (RL) agents to associate actions with their long-term consequences. Solving the CAP is a crucial step towards the successful deployment of RL in the real world since most decision problems provide feedback that is noisy, delayed, and with little or no information about the causes. These conditions make it hard to distinguish serendipitous outcomes from those caused by informed decision-making. However, the mathematical nature of credit and the CAP remains poorly understood and defined. In this survey, we review the state of the art of Temporal Credit Assignment (CA) in deep RL. We propose a unifying formalism for credit that enables equitable comparisons of state-of-the-art algorithms and improves our understanding of the trade-offs between the various methods. We cast the CAP as the problem of learning the influence of an action over an outcome from a finite amount of experience. We discuss the challenges posed by delayed effects, transpositions, and a lack of action influence, and analyse how existing methods aim to address them. Finally, we survey the protocols to evaluate a credit assignment method, and suggest ways to diagnose the sources of struggle for different credit assignment methods. Overall, this survey provides an overview of the field for new-entry practitioners and researchers, offers a coherent perspective for scholars looking to expedite the starting stages of a new study on the CAP, and suggests potential directions for future research.

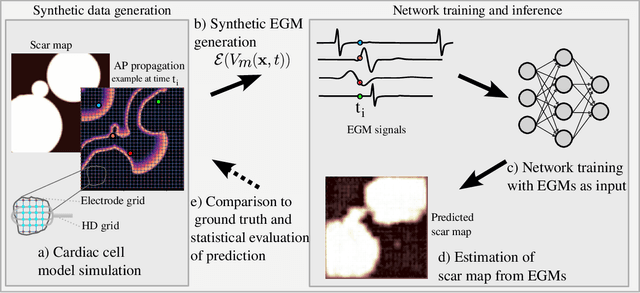

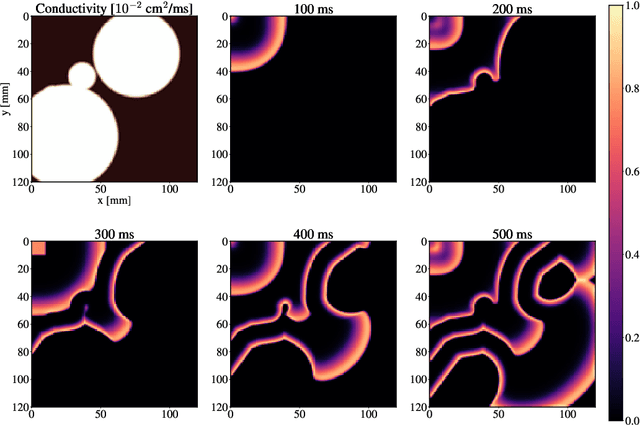

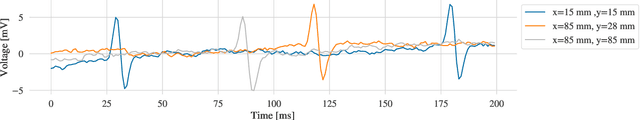

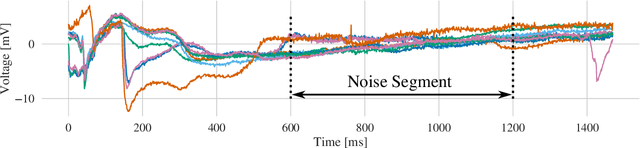

Estimating Cardiac Tissue Conductivity from Electrograms with Fully Convolutional Networks

Dec 06, 2022

Abstract: Atrial Fibrillation (AF) is characterized by disorganised electrical activity in the atria and is known to be sustained by the presence of regions of fibrosis (scars) or functional cellular remodeling, both of which may lead to areas of slow conduction. Estimating the effective conductivity of the myocardium and identifying regions of abnormal propagation is therefore crucial for the effective treatment of AF. We hypothesise that the spatial distribution of tissue conductivity can be directly inferred from an array of concurrently acquired contact electrograms (EGMs). We generate a dataset of simulated cardiac AP propagation using randomised scar distributions and a phenomenological cardiac model and calculate contact electrograms at various positions on the field. A deep neural network, based on a modified U-net architecture, is trained to estimate the location of the scar and quantify the conductivity of the tissue with a Jaccard index of 91%. We adapt a wavelet-based surrogate testing analysis to confirm that the inferred conductivity distribution is an accurate representation of the ground truth input to the model. We find that the root mean square error (RMSE) between the ground truth and our predictions is significantly smaller ($p_{val}=0.007$) than the RMSE between the ground truth and surrogate samples.
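
For readers unfamiliar with the Jaccard index reported in the abstract above, the following is a minimal sketch of how it is computed for two binary masks (intersection over union). The function name `jaccard_index`, the nested-list mask format, and the toy masks are assumptions for illustration; the paper's own evaluation code is not shown in this listing.

```python
def jaccard_index(pred, truth):
    """Intersection over union of two equally sized binary masks (nested lists of 0/1)."""
    intersection = 0
    union = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Two empty masks are treated as a perfect match by convention here.
    return intersection / union if union else 1.0


# Toy 2x3 masks: 1 marks a pixel labelled as scar.
pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]
score = jaccard_index(pred, truth)  # 2 shared pixels / 4 pixels in the union
```

A score of 1 means the predicted and true scar regions coincide exactly, and 0 means they do not overlap at all.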

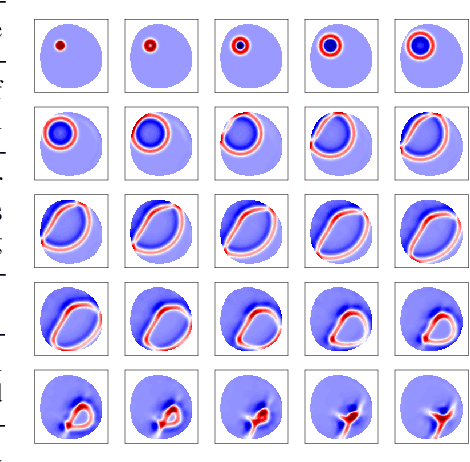

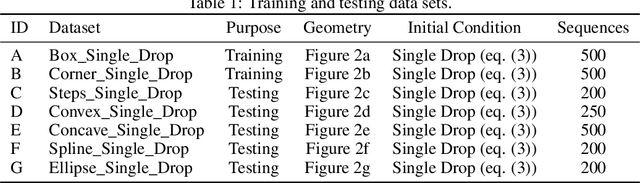

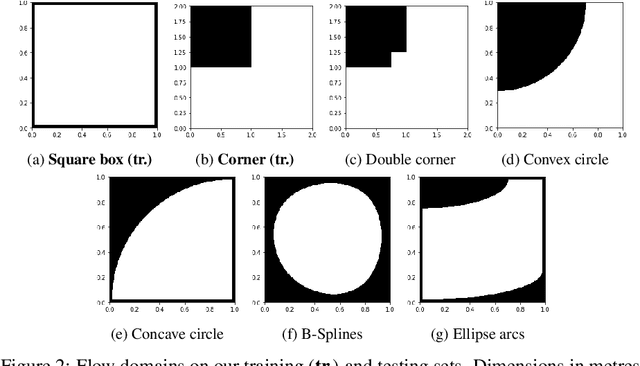

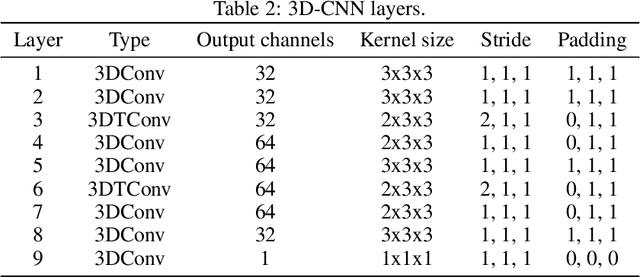

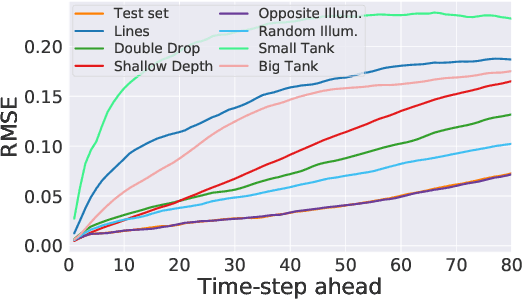

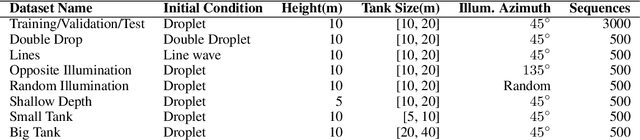

Simulating Surface Wave Dynamics with Convolutional Networks

Dec 01, 2020

Abstract: We investigate the performance of fully convolutional networks to simulate the motion and interaction of surface waves in open and closed complex geometries. We focus on a U-Net architecture and analyse how well it generalises to geometric configurations not seen during training. We demonstrate that a modified U-Net architecture is capable of accurately predicting the height distribution of waves on a liquid surface within curved and multi-faceted open and closed geometries, when only simple box and right-angled corner geometries were seen during training. We also consider a separate and independent 3D CNN for performing time-interpolation on the predictions produced by our U-Net. This allows generating simulations with a smaller time-step size than the one the U-Net has been trained for.

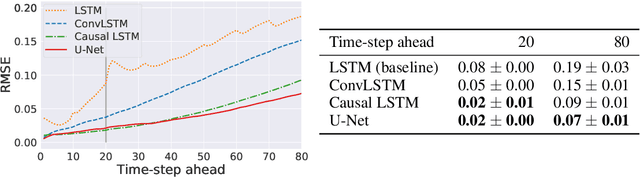

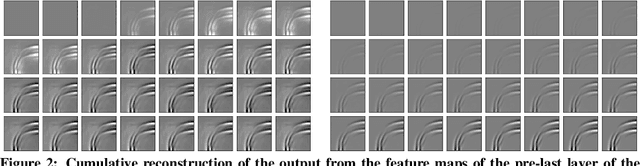

Comparing recurrent and convolutional neural networks for predicting wave propagation

Mar 09, 2020

Abstract: Dynamical systems can be modelled by partial differential equations, and numerical computations are used everywhere in science and engineering. In this work, we investigate the performance of recurrent and convolutional deep neural network architectures in predicting surface waves. The system is governed by the Saint-Venant equations. We improve on the long-term prediction over previous methods while keeping the inference time at a fraction of that of numerical simulations. We also show that convolutional networks perform at least as well as recurrent networks in this task. Finally, we assess the generalisation capability of each network by extrapolating over longer time-frames and to different physical settings.
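
For reference, the Saint-Venant (shallow water) equations named in the abstract above can be written in one spatial dimension as follows. This is the standard textbook form under the assumptions of a flat bed and no friction; the abstract does not say which variant (for example, a two-dimensional form) the paper uses. Here $h$ is the water depth, $u$ the depth-averaged velocity, and $g$ the gravitational acceleration.

$$
\frac{\partial h}{\partial t} + \frac{\partial (hu)}{\partial x} = 0,
\qquad
\frac{\partial (hu)}{\partial t} + \frac{\partial}{\partial x}\left( hu^{2} + \tfrac{1}{2} g h^{2} \right) = 0
$$

The first equation expresses conservation of mass and the second conservation of momentum.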
