Dipti Srinivasan

A Bidirectional Gated Recurrent Unit Model for PUE Prediction in Data Centers

Dec 23, 2025

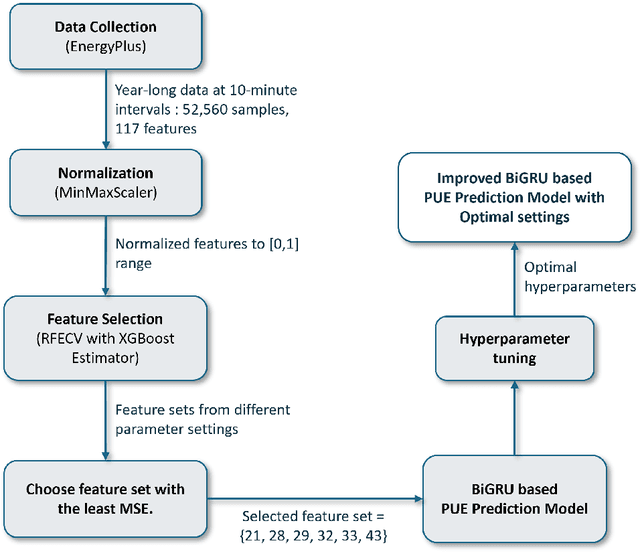

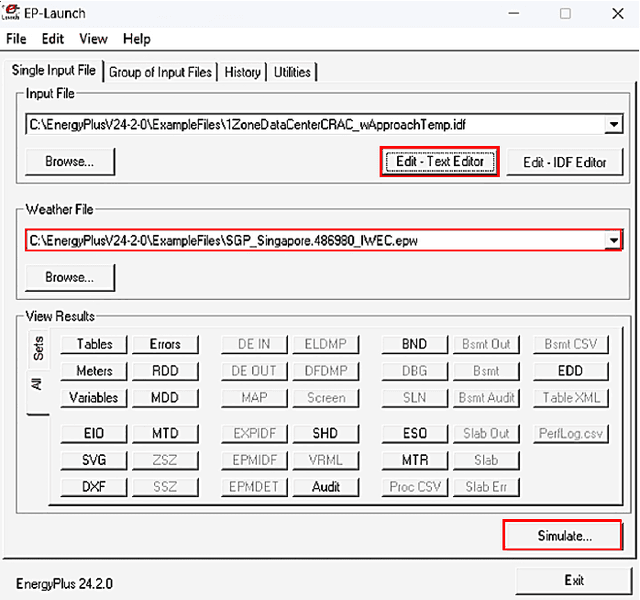

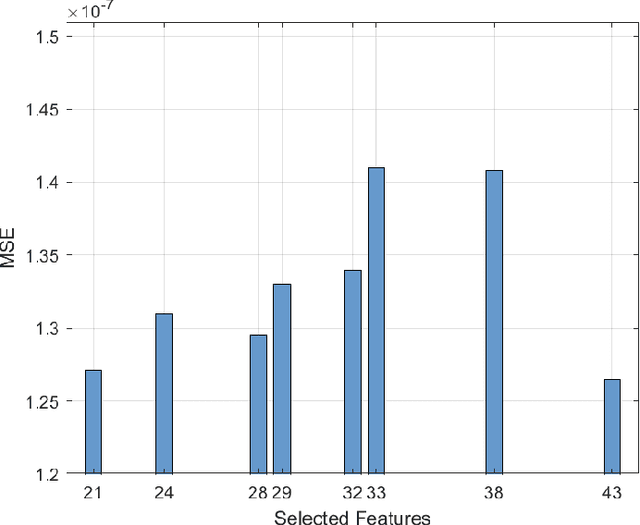

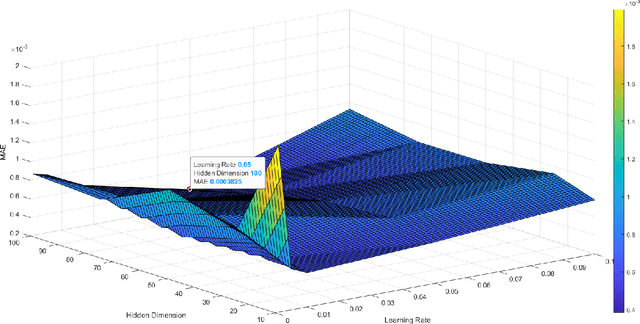

Abstract: Data centers account for a significant share of global energy consumption and carbon emissions. Rising demand for edge computing and advances in AI are driving the growth of data center storage capacity. Energy efficiency is a cost-effective way to combat climate change, cut energy costs, improve business competitiveness, and promote IT and environmental sustainability. Optimizing data center energy management is therefore a key factor in global sustainability. Power Usage Effectiveness (PUE) is used to represent the operational efficiency of a data center. Predicting PUE with neural networks provides an understanding of the effect of each feature on energy consumption, thus enabling targeted modifications of key features to improve energy efficiency. In this paper, we develop a Bidirectional Gated Recurrent Unit (BiGRU) based PUE prediction model and compare its performance with that of a GRU. The data set comprises 52,560 samples with 117 features, generated using EnergyPlus to simulate a data center (DC) in Singapore. Sets of the most relevant features are selected using the Recursive Feature Elimination with Cross-Validation (RFECV) algorithm for different parameter settings. These feature sets are used to find the optimal hyperparameter configuration and train the BiGRU model. The performance of the optimized BiGRU-based PUE prediction model is then compared with that of the GRU using mean squared error (MSE), mean absolute error (MAE), and R-squared metrics.
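As a rough illustration of the quantities named in this abstract, the sketch below computes PUE from its standard definition (total facility energy divided by IT equipment energy) and the three evaluation metrics. The function names and sample values are hypothetical and are not taken from the paper.

```python
def pue(total_facility_energy, it_equipment_energy):
    """Standard PUE definition: total facility energy / IT equipment energy."""
    return total_facility_energy / it_equipment_energy


def regression_metrics(y_true, y_pred):
    """Return (MSE, MAE, R-squared) for two equal-length sequences."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                  # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)   # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    return mse, mae, r2


if __name__ == "__main__":
    # Hypothetical PUE targets and predictions, for illustration only.
    observed = [1.0, 2.0, 3.0]
    predicted = [1.0, 2.0, 4.0]
    print(regression_metrics(observed, predicted))
    print(pue(150.0, 100.0))
```

A PUE of 1.0 would mean all energy goes to IT equipment; larger values indicate more overhead from cooling, power distribution, and other facility loads.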

Disentangled Interleaving Variational Encoding

Jan 16, 2025

Abstract: Conflicting objectives present a considerable challenge in interleaving multi-task learning, necessitating meticulous design and balance to ensure effective learning of a representative latent data space across all tasks without mutual negative impact. Drawing inspiration from the concept of marginal and conditional probability distributions in probability theory, we design a principled and well-founded approach to disentangle the original input into marginal and conditional probability distributions in the latent space of a variational autoencoder. Our proposed model, Deep Disentangled Interleaving Variational Encoding (DeepDIVE), learns disentangled features from the original input to form clusters in the embedding space and unifies these features via the cross-attention mechanism in the fusion stage. We theoretically prove that combining the objectives for reconstruction and forecasting fully captures the lower bound and mathematically derive a loss function for disentanglement using Naïve Bayes. Under the assumption that the prior is a mixture of log-concave distributions, we also establish that the Kullback-Leibler divergence between the prior and the posterior is upper bounded by a function minimized by the minimizer of the cross entropy loss, informing our adoption of radial basis functions (RBF) and cross entropy with interleaving training for DeepDIVE to provide a justified basis for convergence. Experiments on two public datasets show that DeepDIVE disentangles the original input and yields forecast accuracies better than the original VAE and comparable to existing state-of-the-art baselines.

Objective Evaluation of Deep Uncertainty Predictions for COVID-19 Detection

Dec 22, 2020

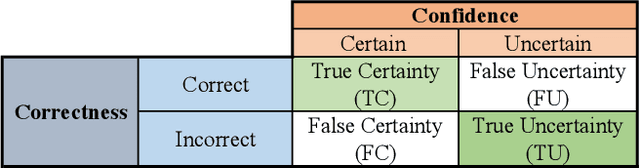

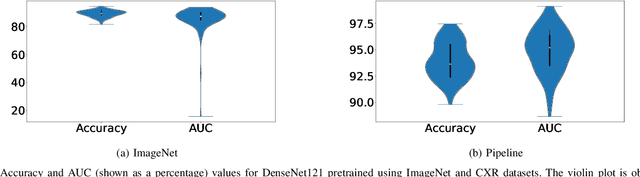

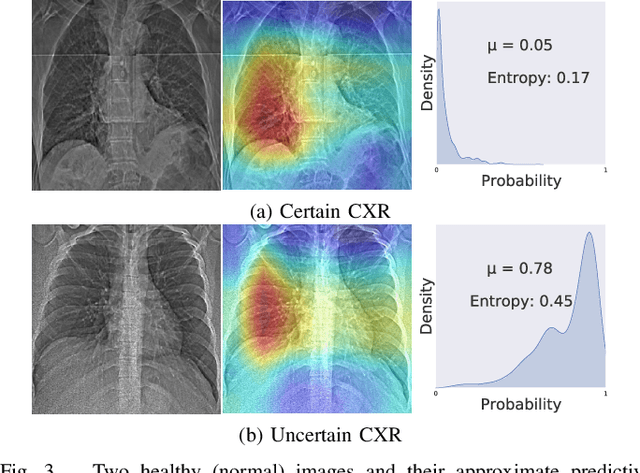

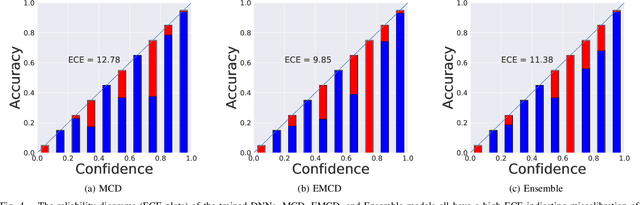

Abstract: Deep neural networks (DNNs) have been widely applied for detecting COVID-19 in medical images. Existing studies mainly apply transfer learning and other data representation strategies to generate accurate point estimates. The generalization power of these networks is always questionable because they are developed using small datasets and do not report their predictive confidence. Quantifying uncertainties associated with DNN predictions is a prerequisite for their trusted deployment in medical settings. Here we apply and evaluate three uncertainty quantification techniques for COVID-19 detection using chest X-Ray (CXR) images. The novel concept of uncertainty confusion matrix is proposed and new performance metrics for the objective evaluation of uncertainty estimates are introduced. Through comprehensive experiments, it is shown that networks pretrained on CXR images outperform networks pretrained on natural image datasets such as ImageNet. Qualitative and quantitative evaluations also reveal that the predictive uncertainty estimates are statistically higher for erroneous predictions than for correct predictions. Accordingly, uncertainty quantification methods are capable of flagging risky predictions with high uncertainty estimates. We also observe that ensemble methods more reliably capture uncertainties during inference.
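The abstract does not say how uncertainty is scored, so the following is only a generic sketch of one common choice for ensembles: the entropy of the averaged class probabilities. The function name and the toy probabilities are hypothetical, and this is not claimed to be the measure used in the paper.

```python
import math


def ensemble_predictive_entropy(member_probs):
    """Average per-member class probabilities and return (mean_probs, entropy).

    member_probs: list of probability vectors, one per ensemble member,
    all for the same input. Entropy is in nats; higher means less certain.
    """
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    mean_probs = [
        sum(member[c] for member in member_probs) / n_members
        for c in range(n_classes)
    ]
    # Skip zero-probability classes, since p * log(p) tends to 0 as p -> 0.
    entropy = -sum(p * math.log(p) for p in mean_probs if p > 0.0)
    return mean_probs, entropy


if __name__ == "__main__":
    # Members that disagree give a high-entropy (uncertain) prediction.
    print(ensemble_predictive_entropy([[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]))
    # Members that agree give a low-entropy (confident) prediction.
    print(ensemble_predictive_entropy([[0.98, 0.02], [0.97, 0.03]]))
```

Thresholding such a score is one way a prediction could be flagged as risky, in the spirit of the flagging behavior the abstract describes.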

Handling of uncertainty in medical data using machine learning and probability theory techniques: A review of 30 years

Aug 23, 2020

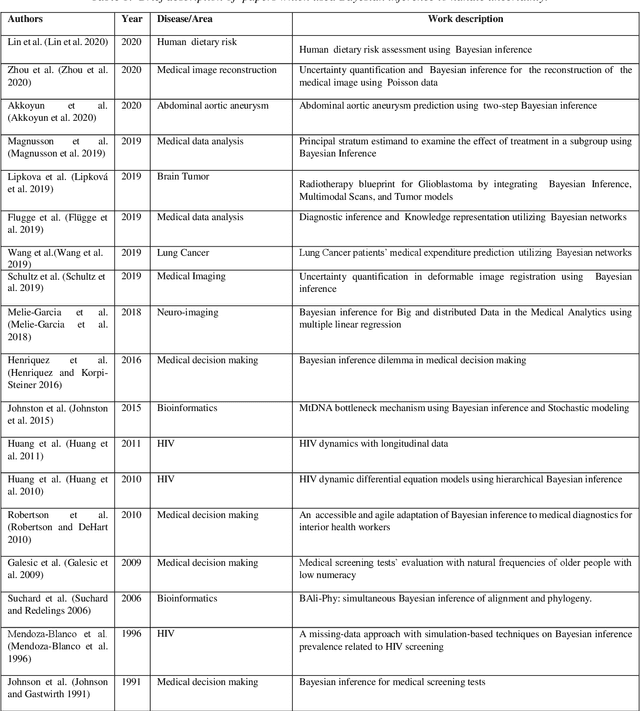

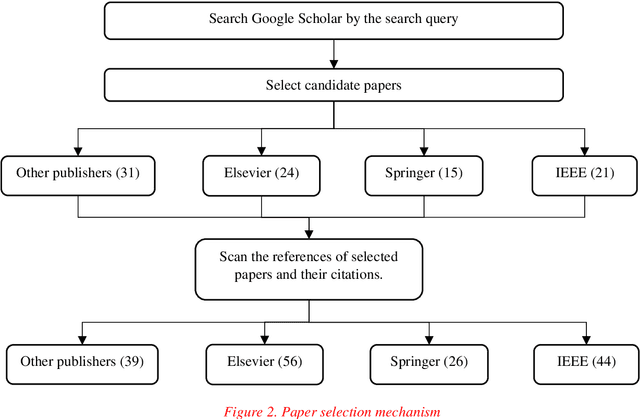

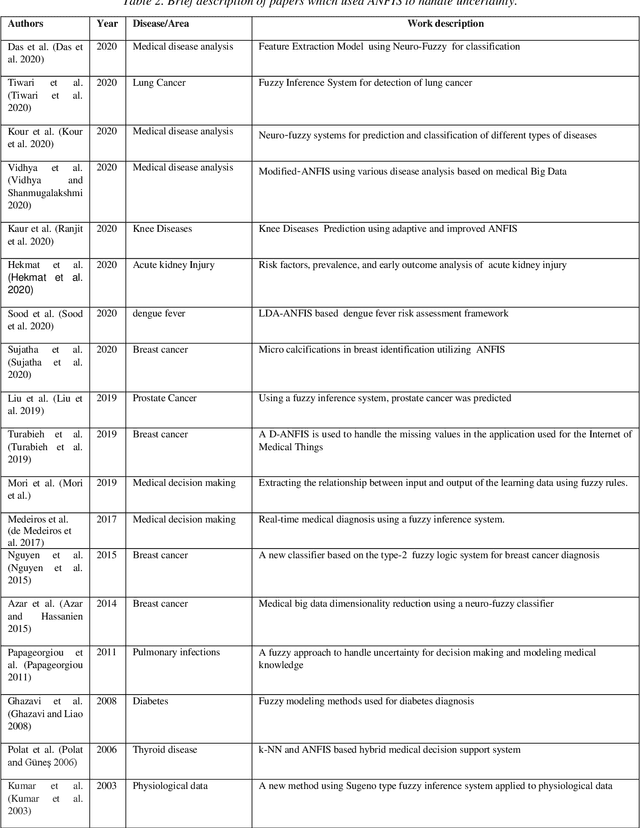

Abstract: Understanding data and reaching valid conclusions are of paramount importance in the present era of big data. Machine learning and probability theory methods have widespread application for this purpose in different fields. One critically important yet less explored aspect is how data and model uncertainties are captured and analyzed. Proper quantification of uncertainty provides valuable information for optimal decision making. This paper reviews related studies conducted in the last 30 years (from 1991 to 2020) on handling uncertainties in medical data using probability theory and machine learning techniques. Medical data is more prone to uncertainty due to the presence of noise in the data, so it is very important to have clean medical data without noise to obtain an accurate diagnosis. The sources of noise in medical data need to be known to address this issue. The diagnosis of disease and the treatment plan are prescribed based on the medical data obtained by the physician. Hence, uncertainty is growing in healthcare and there is limited knowledge to address these problems. We have little knowledge about the optimal treatment methods as there are many sources of uncertainty in medical science. Our findings indicate that a few challenges remain to be addressed in handling the uncertainty in raw medical data and new models. In this work, we summarize various methods employed to overcome this problem. The application of novel deep learning techniques to deal with such uncertainties has recently increased significantly.

An Uncertainty-aware Transfer Learning-based Framework for Covid-19 Diagnosis

Jul 26, 2020

Abstract: The early and reliable detection of COVID-19 infected patients is essential to prevent and limit its outbreak. PCR tests for COVID-19 detection are not available in many countries, and there are genuine concerns about their reliability and performance. Motivated by these shortcomings, this paper proposes a deep uncertainty-aware transfer learning framework for COVID-19 detection using medical images. Four popular convolutional neural networks (CNNs), namely VGG16, ResNet50, DenseNet121, and InceptionResNetV2, are first applied to extract deep features from chest X-ray and computed tomography (CT) images. Extracted features are then processed by different machine learning and statistical modelling techniques to identify COVID-19 cases. We also calculate and report the epistemic uncertainty of classification results to identify regions where the trained models are not confident about their decisions (the out-of-distribution problem). Comprehensive simulation results for X-ray and CT image datasets indicate that linear support vector machine and neural network models achieve the best results as measured by accuracy, sensitivity, specificity, and AUC. We also find that predictive uncertainty estimates are much higher for CT images than for X-ray images.

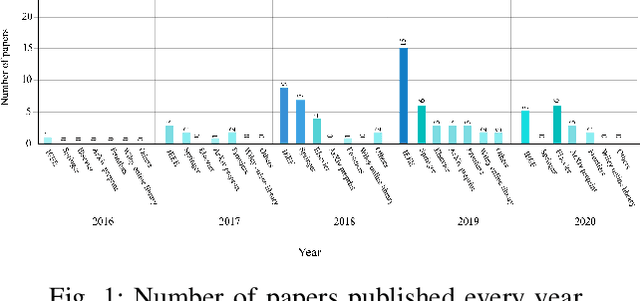

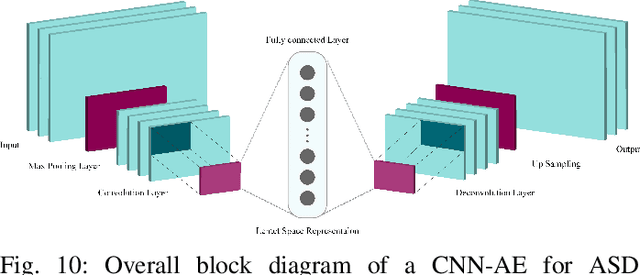

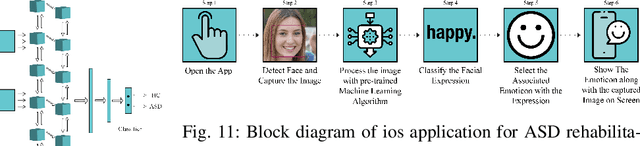

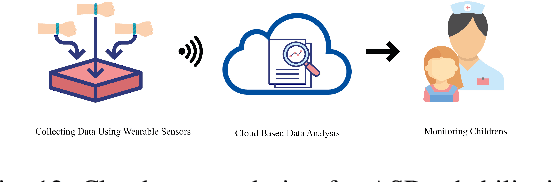

Deep Learning for Neuroimaging-based Diagnosis and Rehabilitation of Autism Spectrum Disorder: A Review

Jul 11, 2020

Abstract: Accurate diagnosis of Autism Spectrum Disorder (ASD) is essential for its management and rehabilitation. Non-invasive neuroimaging techniques provide disease markers and may be leveraged to aid ASD diagnosis. Structural and functional neuroimaging techniques provide physicians substantial information about the structure (anatomy and structural connectivity) and function (activity and functional connectivity) of the brain. Due to the intricate structure and function of the brain, diagnosing ASD with neuroimaging data without exploiting artificial intelligence (AI) techniques is extremely challenging. AI techniques comprise traditional machine learning (ML) approaches and deep learning (DL) techniques. Conventional ML methods employ various feature extraction and classification techniques, but in DL, the process of feature extraction and classification is accomplished intelligently and integrally. In this paper, studies that use DL networks to distinguish ASD are investigated. Rehabilitation tools that use DL networks to support ASD patients are also assessed. Finally, we present important challenges in the automated detection and rehabilitation of ASD.

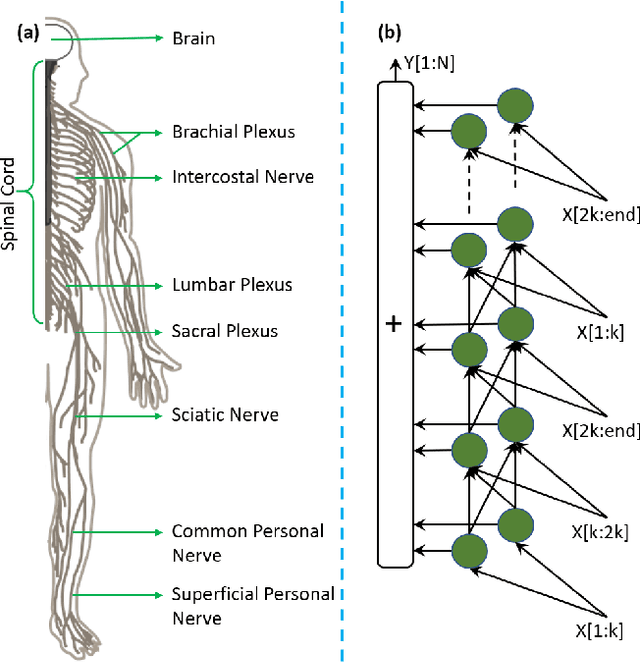

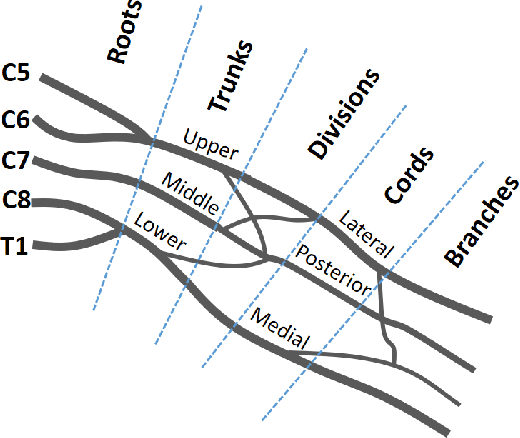

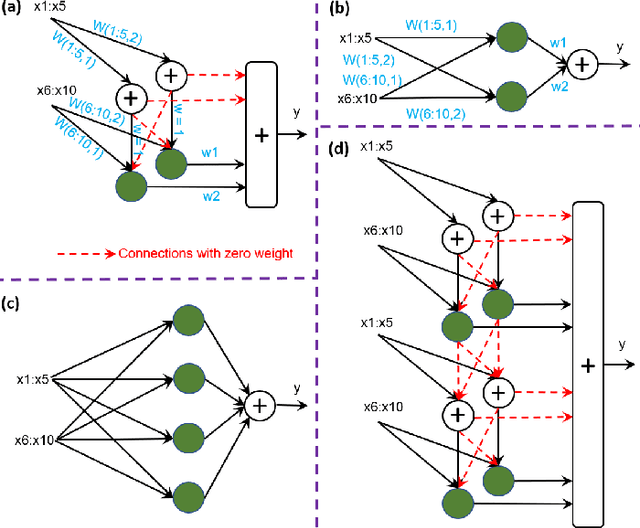

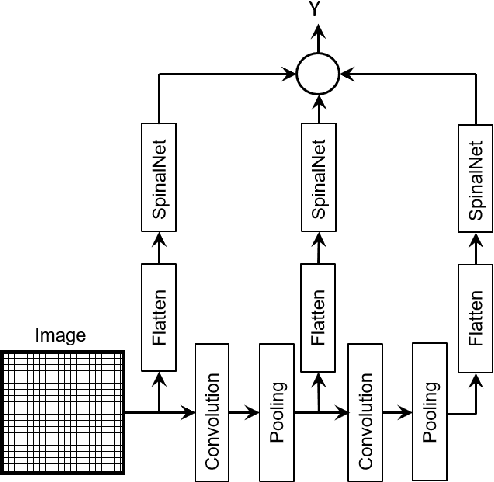

SpinalNet: Deep Neural Network with Gradual Input

Jul 07, 2020

Abstract: Deep neural networks (DNNs) have achieved state-of-the-art performance in numerous fields. However, DNNs need high computation times, and people always expect better performance with lower computation. Therefore, we study the human somatosensory system and design a neural network (SpinalNet) to achieve higher accuracy with lower computation time. This paper aims to present the SpinalNet. Hidden layers of the proposed SpinalNet consist of three parts: 1) input row, 2) intermediate row, and 3) output row. The intermediate row of the SpinalNet usually contains a small number of neurons. Input segmentation enables each hidden layer to receive a part of the input and the outputs of the previous layer. Therefore, the number of incoming weights in a hidden layer is significantly lower than in traditional DNNs. As the network directly contributes to outputs in each layer, the vanishing gradient problem of DNNs does not exist. We integrate the SpinalNet as the fully-connected layer of the convolutional neural network (CNN), residual neural network (ResNet), Dense Convolutional Network (DenseNet), and Visual Geometry Group (VGG) network. We observe a significant error reduction with lower computation in most situations. We have achieved state-of-the-art performance for the QMNIST, Kuzushiji-MNIST, and EMNIST(digits) datasets. Scripts of the proposed SpinalNet are available at the following link: https://github.com/dipuk0506/SpinalNet
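The claim about fewer incoming weights can be illustrated with a simple count. This is only a sketch under stated assumptions: each hidden layer is assumed to receive one equal-sized input segment plus the outputs of the previous hidden layer, and all sizes and function names below are hypothetical. It is not the authors' implementation, which is available at the link above.

```python
def spinal_incoming_weights(n_inputs, n_segments, layer_width, n_layers):
    """Incoming weight count per hidden layer under the assumed wiring:
    each layer sees one input segment plus the previous layer's outputs."""
    segment_size = n_inputs // n_segments
    counts = []
    for layer in range(n_layers):
        # The first hidden layer has no previous hidden layer to read from.
        previous_outputs = 0 if layer == 0 else layer_width
        counts.append((segment_size + previous_outputs) * layer_width)
    return counts


def dense_incoming_weights(n_inputs, layer_width):
    """Incoming weight count of a traditional layer that sees the full input."""
    return n_inputs * layer_width


if __name__ == "__main__":
    # Hypothetical sizes: 784 inputs split in two, four hidden layers of 8 neurons.
    print(spinal_incoming_weights(784, 2, 8, 4))
    print(dense_incoming_weights(784, 8))
```

With these illustrative sizes, each segmented layer has roughly half the incoming weights of a same-width layer connected to the full input.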

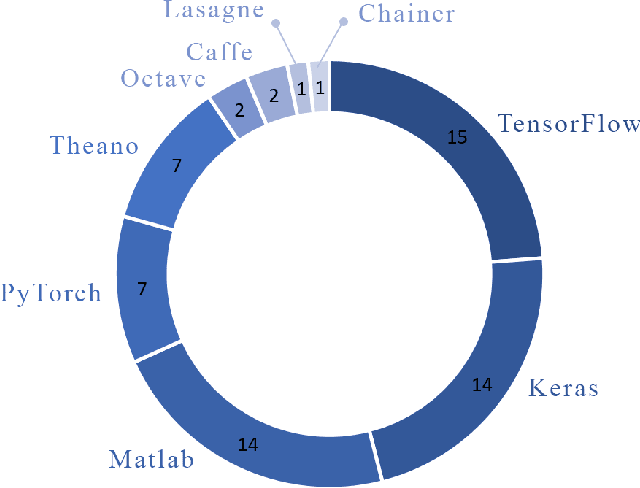

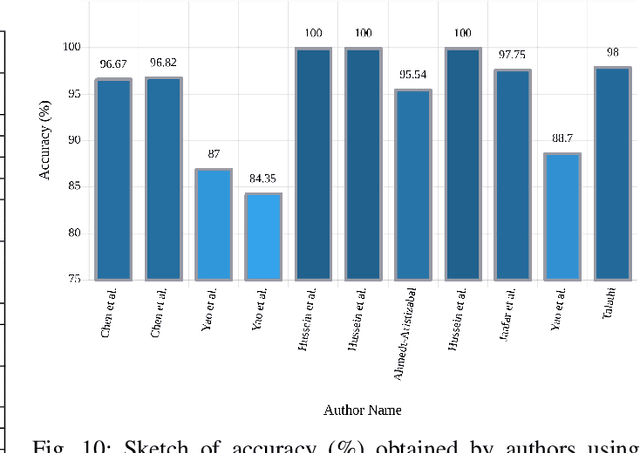

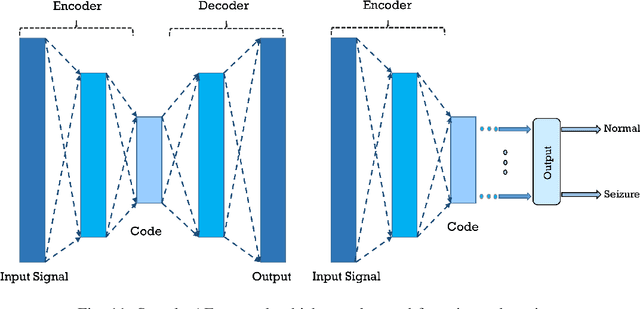

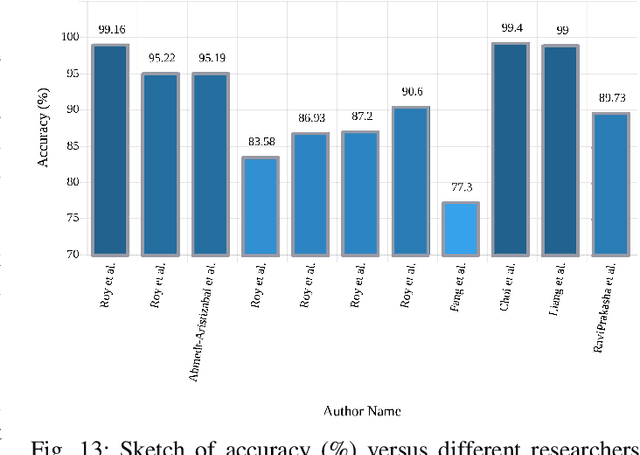

Epileptic seizure detection using deep learning techniques: A Review

Jul 02, 2020

Abstract: A variety of screening approaches have been proposed to diagnose epileptic seizures, using Electroencephalography (EEG) and Magnetic Resonance Imaging (MRI) modalities. Artificial intelligence encompasses a variety of areas, and one of its branches is deep learning. Before the rise of deep learning, conventional machine learning algorithms involving feature extraction were used. This limited their performance to the ability of those handcrafting the features. In deep learning, however, feature extraction and classification are entirely automated. The advent of these techniques has led to significant advances in many areas of medicine, such as the diagnosis of epileptic seizures. This study presents a comprehensive overview of the types of deep learning methods used to diagnose epileptic seizures from various modalities. Additionally, hardware implementations and cloud-based works are discussed, as they are most suited for applied medicine.

Optimal Uncertainty-guided Neural Network Training

Dec 30, 2019

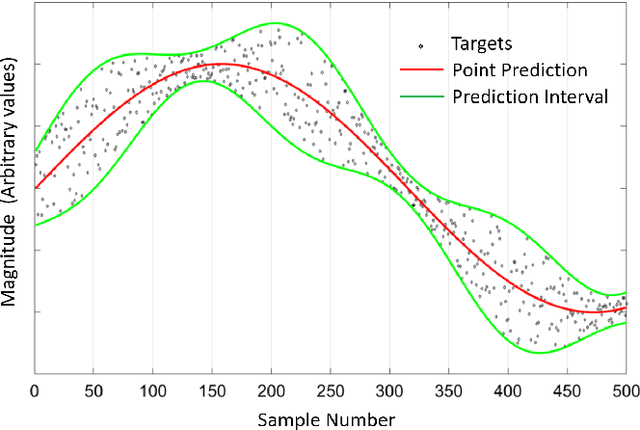

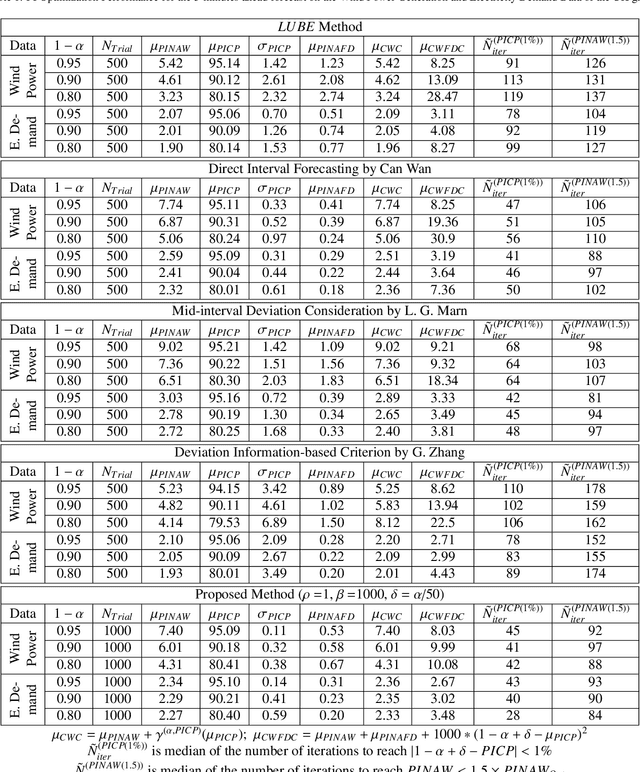

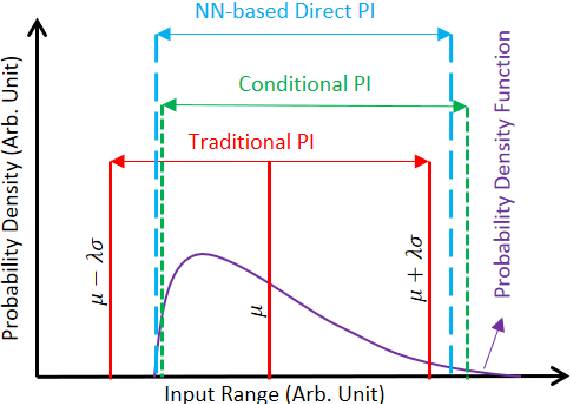

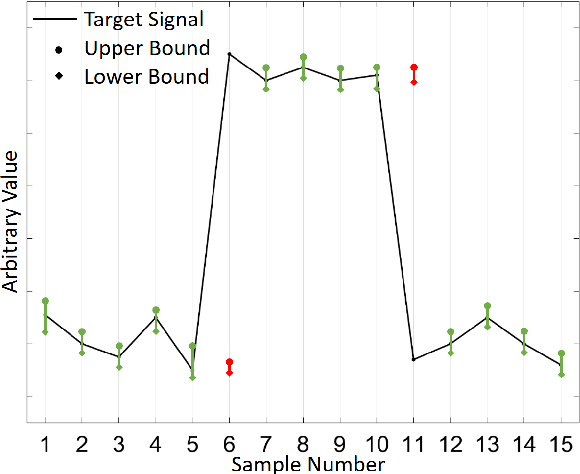

Abstract: Neural network (NN)-based direct uncertainty quantification (UQ) methods have achieved state-of-the-art performance since the first such method, known as the lower-upper-bound estimation (LUBE) method, was introduced. However, currently available cost functions for uncertainty-guided NN training do not always converge, and not all converged NNs generate optimized prediction intervals (PIs). Moreover, several groups have proposed different quality criteria for PIs, which raises a question about their relative effectiveness. Most of the existing cost functions for uncertainty-guided NN training are not customizable, and the convergence of training is uncertain. Therefore, in this paper, we propose a highly customizable smooth cost function for developing NNs to construct optimal PIs. The optimized average width of PIs, PI-failure distances, and the PI coverage probability (PICP) are computed for the test dataset. The performance of the proposed method is examined for wind power generation and electricity demand data. Results show that the proposed method reduces variation in the quality of PIs, accelerates the training, and improves convergence probability from 99.2% to 99.8%.
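Two of the PI quality measures named in this abstract have widely used definitions, sketched below: PICP as the fraction of targets that fall inside their interval, and the average PI width. The function names and sample values are hypothetical, and the PI-failure distance is left out because the abstract does not define it.

```python
def picp(targets, lower, upper):
    """PI coverage probability: fraction of targets inside [lower, upper]."""
    covered = sum(1 for t, lo, hi in zip(targets, lower, upper) if lo <= t <= hi)
    return covered / len(targets)


def mean_pi_width(lower, upper):
    """Average width of the prediction intervals."""
    return sum(hi - lo for lo, hi in zip(lower, upper)) / len(lower)


if __name__ == "__main__":
    # Hypothetical targets and interval bounds, for illustration only.
    y = [1.0, 2.0, 3.0, 4.0]
    lo = [0.0, 2.5, 2.0, 3.0]
    hi = [2.0, 3.0, 4.0, 5.0]
    print(picp(y, lo, hi))        # the second target lies below its lower bound
    print(mean_pi_width(lo, hi))
```

The two measures pull in opposite directions: wider intervals raise coverage but are less informative, which is why cost functions for PI construction have to balance them.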

Enhanced Multiobjective Evolutionary Algorithm based on Decomposition for Solving the Unit Commitment Problem

Oct 23, 2014

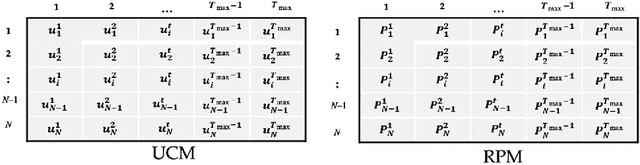

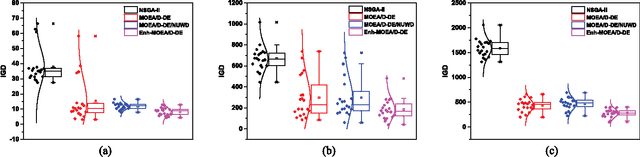

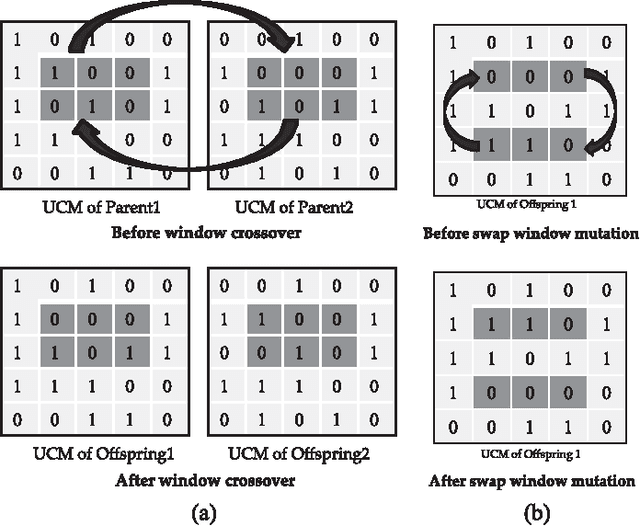

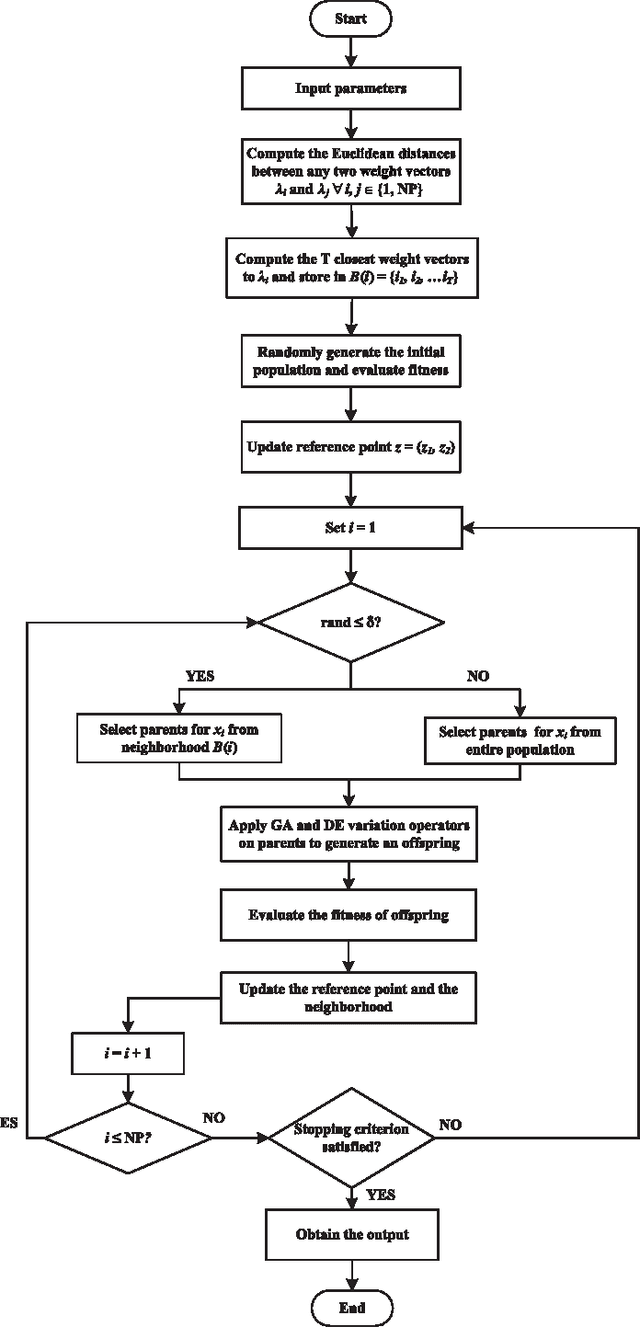

Abstract: The unit commitment (UC) problem is a nonlinear, high-dimensional, highly constrained, mixed-integer power system optimization problem and is generally solved in the literature with minimization of the system operation cost as the only objective. However, due to increasing environmental concerns, recent attention has shifted to incorporating emission in the problem formulation. In this paper, a multi-objective evolutionary algorithm based on decomposition (MOEA/D) is proposed to solve the UC problem as a multi-objective optimization problem with minimization of cost and emission as the objectives. Since the UC problem is a mixed-integer optimization problem consisting of binary UC variables and continuous power dispatch variables, a novel hybridization strategy is proposed within the framework of MOEA/D such that a genetic algorithm (GA) evolves the binary variables while differential evolution (DE) evolves the continuous variables. Further, a novel non-uniform weight vector distribution strategy is proposed, and a parallel island model based on a combination of MOEA/D with uniform and non-uniform weight vector distribution strategies is implemented to enhance the performance of the presented algorithm. Extensive case studies are presented on different test systems, and the effectiveness of the proposed hybridization strategy, the non-uniform weight vector distribution strategy, and the parallel island model is verified through stringent simulated results. Further, exhaustive benchmarking against the algorithms proposed in the literature is presented to demonstrate the superiority of the proposed algorithm in obtaining significantly better converged and uniformly distributed trade-off solutions.
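MOEA/D turns a multi-objective problem into a set of scalar subproblems, one per weight vector. The sketch below shows uniformly spaced weight vectors for two objectives (such as cost and emission) and the Tchebycheff scalarization, which is one commonly used decomposition. The abstract does not state which scalarization the paper uses or how its non-uniform weights are built, so both the choice of function and all values here are assumptions for illustration.

```python
def uniform_weights(n_vectors):
    """Evenly spaced two-objective weight vectors (w1, w2) with w1 + w2 = 1."""
    step = 1.0 / (n_vectors - 1)
    return [(i * step, 1.0 - i * step) for i in range(n_vectors)]


def tchebycheff(objectives, weights, ideal_point):
    """Tchebycheff scalarization: max over objectives of w_i * |f_i - z_i|.

    Minimizing this value for a given weight vector defines one subproblem.
    """
    return max(
        w * abs(f - z) for f, w, z in zip(objectives, weights, ideal_point)
    )


if __name__ == "__main__":
    # Hypothetical (cost, emission) values and ideal point, for illustration only.
    candidate = (10.0, 4.0)
    ideal = (8.0, 1.0)
    for w in uniform_weights(3):
        print(w, tchebycheff(candidate, w, ideal))
```

Each weight vector steers its subproblem toward a different region of the cost-emission trade-off, which is why the spacing of the weight vectors affects how evenly the resulting solutions are distributed.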
