Chiranjib Saha

Determinantal Learning for Subset Selection in Wireless Networks

Mar 05, 2025

Abstract:Subset selection is central to many wireless communication problems, including link scheduling, power allocation, and spectrum management. However, these problems are often NP-complete, so the heuristic algorithms used to solve them scale poorly in large networks. To address this, we propose a determinantal point process-based learning (DPPL) framework for efficiently solving general subset selection problems in massive networks. The key idea is to model the optimal subset as a realization of a determinantal point process (DPP), which balances the trade-off between quality (signal strength) and similarity (mutual interference) by enforcing negative correlation in the selection of similar links (those that create significant mutual interference). However, conventional methods for constructing similarity matrices in DPP impose decomposability and symmetry constraints that often do not hold in practice. To overcome this, we introduce a new method based on the Gershgorin Circle Theorem for constructing valid similarity matrices. The effectiveness of the proposed approach is demonstrated by applying it to two canonical wireless network settings: an ad hoc network in 2D and a cellular network serving drones in 3D. Simulation results show that DPPL selects near-optimal subsets that maximize network sum-rate while significantly reducing computational complexity compared to traditional optimization methods, demonstrating its scalability for large-scale networks.
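As a rough illustration of how the Gershgorin Circle Theorem can yield a valid kernel, the sketch below symmetrizes a raw interference matrix and raises each diagonal entry to at least the sum of the absolute off-diagonal entries in its row. Every Gershgorin disc then lies on the non-negative real axis, so the symmetric matrix is positive semidefinite. This is an assumed construction for illustration only and may differ from the one in the paper; the function names and the example matrix are hypothetical.

```python
def gershgorin_psd(sim):
    """Return a symmetric, diagonally dominant copy of `sim`.

    Assumption: symmetrize by averaging, then lift each diagonal entry to at
    least its Gershgorin radius (sum of absolute off-diagonal row entries).
    """
    n = len(sim)
    sym = [[0.5 * (sim[i][j] + sim[j][i]) for j in range(n)] for i in range(n)]
    for i in range(n):
        radius = sum(abs(sym[i][j]) for j in range(n) if j != i)
        sym[i][i] = max(sym[i][i], radius)
    return sym


def min_gershgorin_bound(mat):
    """Smallest left endpoint over all Gershgorin discs of `mat`."""
    n = len(mat)
    return min(
        mat[i][i] - sum(abs(mat[i][j]) for j in range(n) if j != i)
        for i in range(n)
    )


# Hypothetical asymmetric interference levels between three links.
interference = [
    [1.0, 0.9, 0.2],
    [0.4, 1.0, 0.8],
    [0.1, 0.3, 1.0],
]
kernel = gershgorin_psd(interference)
```

A non-negative `min_gershgorin_bound(kernel)` certifies that no eigenvalue of the symmetric kernel is negative, without computing an eigendecomposition.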

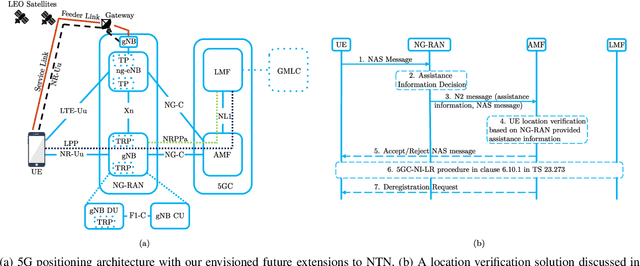

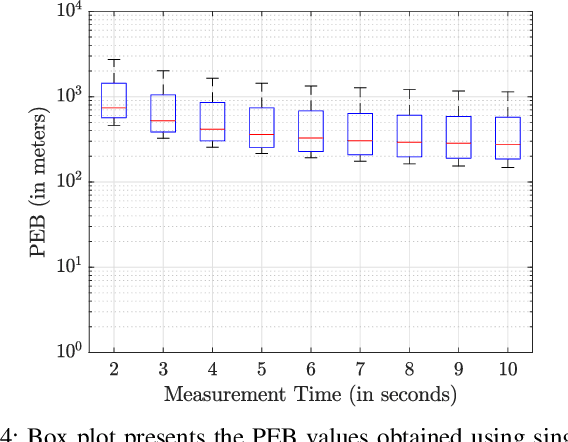

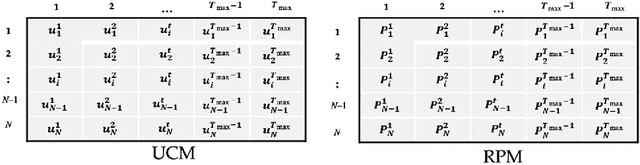

LEO-based Positioning: Foundations, Signal Design, and Receiver Enhancements for 6G NTN

Oct 23, 2024

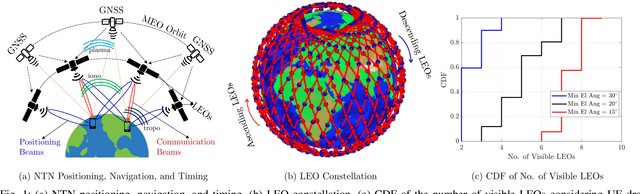

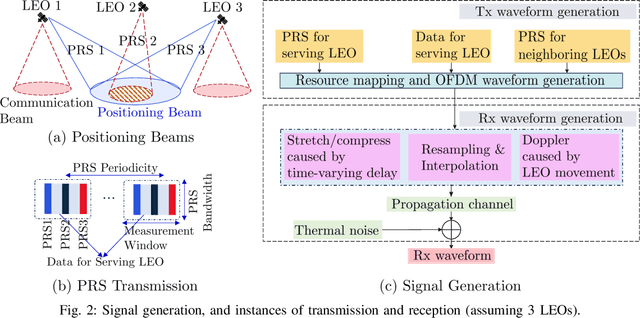

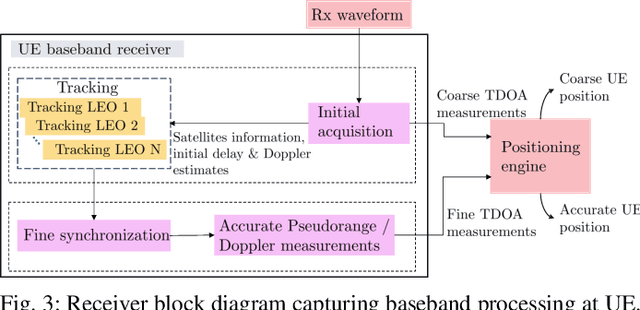

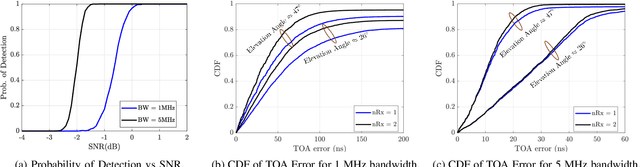

Abstract:The integration of non-terrestrial networks (NTN) into 5G new radio (NR) has opened up the possibility of developing a new positioning infrastructure using NR signals from Low-Earth Orbit (LEO) satellites. LEO-based cellular positioning offers several advantages, such as a superior link budget, higher operating bandwidth, and large forthcoming constellations. Due to these factors, LEO-based positioning, navigation, and timing (PNT) is a potential enhancement for NTN in 6G cellular networks. However, extending the existing terrestrial cellular positioning methods to LEO-based NTN positioning requires considering key fundamental enhancements. These include creating broad positioning beams orthogonal to conventional communication beams, time-domain processing at the user equipment (UE) to resolve large delay and Doppler uncertainties, and efficiently accommodating positioning reference signals (PRS) from multiple satellites within the communication resource grid. In this paper, we present the first set of design insights by incorporating these enhancements and thoroughly evaluating LEO-based positioning, considering the constraints and capabilities of the NR-NTN physical layer. To evaluate the performance of LEO-based NTN positioning, we develop a comprehensive NR-compliant simulation framework, including LEO orbit simulation, transmission (Tx) and receiver (Rx) architectures, and a positioning engine incorporating the necessary enhancements. Our findings suggest that LEO-based NTN positioning could serve as a complementary infrastructure to existing Global Navigation Satellite Systems (GNSS) and, with appropriate enhancements, may also offer a viable alternative.

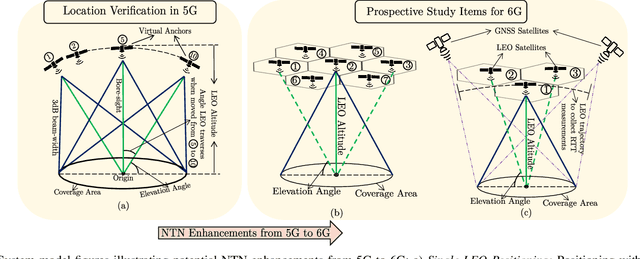

NTN-based 6G Localization: Vision, Role of LEOs, and Open Problems

May 20, 2023

Abstract:Since the introduction of 5G Release 18, non-terrestrial network (NTN)-based positioning has garnered significant interest due to its numerous applications, including emergency services, lawful intercept, and charging and tariff services. This release considers single low-earth-orbit (LEO) positioning explicitly for location verification purposes, which requires only a fairly coarse location estimate. To understand the future trajectory of NTN-based localization in 6G, we first provide a comprehensive overview of the evolution of 3rd Generation Partnership Project (3GPP) localization techniques, with specific emphasis on the current activities in 5G related to NTN location verification. To support more accurate positioning in 6G using LEOs, we identify two NTN positioning systems that are likely study items for 6G: (i) multi-LEO positioning, and (ii) augmenting the single-LEO based location verification setup with the Global Navigation Satellite System (GNSS), especially when an insufficient number of GNSS satellites (for example, two) are visible. We evaluate the accuracy of both systems through a 3GPP-compliant simulation study using a Cramér-Rao lower bound (CRLB) analysis. Our findings suggest that NTN technology has significant potential to provide accurate positioning of UEs in scenarios where GNSS signals may be weak or unavailable, but there are technical challenges in accommodating these solutions in 3GPP. We conclude with a discussion on the research landscape and key open problems related to NTN-based positioning.
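To give a feel for what a CRLB analysis measures, here is a deliberately simplified sketch. It assumes a 2D geometry, range-only measurements with independent Gaussian noise of standard deviation `sigma`, and perfectly known transmitter positions; none of these assumptions come from the abstract, and the function name is hypothetical. Under this model the Fisher information matrix is the sum of outer products of the unit line-of-sight vectors divided by `sigma**2`, and the position error bound is the square root of the trace of its inverse.

```python
import math


def position_error_bound(ue, anchors, sigma):
    """sqrt(trace(J^-1)) for 2D range measurements (toy model, see lead-in)."""
    jxx = jxy = jyy = 0.0
    for ax, ay in anchors:
        dx, dy = ue[0] - ax, ue[1] - ay
        dist = math.hypot(dx, dy)
        ux, uy = dx / dist, dy / dist  # unit line-of-sight vector
        jxx += ux * ux / sigma ** 2
        jxy += ux * uy / sigma ** 2
        jyy += uy * uy / sigma ** 2
    det = jxx * jyy - jxy * jxy
    if det < 1e-12:
        return math.inf  # degenerate geometry: position not identifiable
    return math.sqrt((jxx + jyy) / det)


# Two transmitters seen from orthogonal directions versus the same direction.
good = position_error_bound((0.0, 0.0), [(-10.0, 0.0), (0.0, -10.0)], sigma=1.0)
bad = position_error_bound((0.0, 0.0), [(-10.0, 0.0), (-20.0, 0.0)], sigma=1.0)
```

The second call returns infinity because both line-of-sight vectors are parallel, which is the toy-model analogue of having too few usefully placed satellites.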

Tensor Learning-based Precoder Codebooks for FD-MIMO Systems

Jun 21, 2021

Abstract:This paper develops an efficient procedure for designing low-complexity codebooks for precoding in a full-dimension (FD) multiple-input multiple-output (MIMO) system with a uniform planar array (UPA) antenna at the transmitter (Tx) using tensor learning. In particular, instead of using statistical channel models, we utilize a model-free data-driven approach with foundations in machine learning to generate codebooks that adapt to the surrounding propagation conditions. We use a tensor representation of the FD-MIMO channel and exploit its properties to design quantized versions of the channel precoders. We find the best representation of the optimal precoder as a function of the Kronecker product (KP) of two low-dimensional precoders, corresponding to the horizontal and vertical dimensions of the UPA respectively, obtained from the tensor decomposition of the channel. We then quantize this precoder to design product codebooks such that the average loss in mutual information due to quantization of channel state information (CSI) is minimized. The key technical contribution lies in exploiting the constraints on the precoders to reduce the product codebook design problem to an unsupervised clustering problem on a Cartesian Product Grassmann manifold (CPM), where the cluster centroids form a finite-sized precoder codebook. This codebook can be found efficiently by running $K$-means clustering on the CPM. With a suitable induced distance metric on the CPM, we show that the construction of product codebooks is equivalent to finding the optimal set of centroids on the factor manifolds corresponding to the horizontal and vertical dimensions. Simulation results are presented to demonstrate the capability of the proposed design criterion in learning the codebooks and the attractive performance of the designed codebooks.
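The reason a Kronecker-product structure lets the design split into horizontal and vertical factors can be seen in a small numerical check. The sketch below assumes rank-one, vector-valued precoders and a channel that is itself a Kronecker product of a horizontal and a vertical component; the names `a_h`, `a_v`, `w_h`, `w_v` and the 2x2 array size are hypothetical. By the mixed-product property, the joint inner product equals the product of the two per-dimension inner products.

```python
def kron(u, v):
    """Kronecker product of two vectors given as lists."""
    return [a * b for a in u for b in v]


def inner(x, y):
    """Hermitian inner product x^H y."""
    return sum(a.conjugate() * b for a, b in zip(x, y))


# Hypothetical horizontal and vertical channel components of a 2x2 UPA.
a_h = [1 + 0j, 1j]
a_v = [1 + 0j, -1 + 0j]

# Hypothetical unit-norm horizontal and vertical precoders.
w_h = [0.6 + 0j, 0.8j]
w_v = [0.8 + 0j, -0.6 + 0j]

joint = inner(kron(a_h, a_v), kron(w_h, w_v))
factored = inner(a_h, w_h) * inner(a_v, w_v)
```

Because `joint` and `factored` agree, the gain of the 4-element product precoder can be evaluated, and in this idealized case optimized, one 2-element factor at a time.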

Learning on a Grassmann Manifold: CSI Quantization for Massive MIMO Systems

May 18, 2020

Abstract:This paper focuses on the design of beamforming codebooks that maximize the average normalized beamforming gain for any underlying channel distribution. While the existing techniques use statistical channel models, we utilize a model-free data-driven approach with foundations in machine learning to generate beamforming codebooks that adapt to the surrounding propagation conditions. The key technical contribution lies in reducing the codebook design problem to an unsupervised clustering problem on a Grassmann manifold where the cluster centroids form the finite-sized beamforming codebook for the channel state information (CSI), which can be efficiently solved using K-means clustering. This approach is extended to develop a remarkably efficient procedure for designing product codebooks for full-dimension (FD) multiple-input multiple-output (MIMO) systems with uniform planar array (UPA) antennas. Simulation results demonstrate the capability of the proposed design criterion in learning the codebooks, reducing the codebook size and producing noticeably higher beamforming gains compared to the existing state-of-the-art CSI quantization techniques.
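A minimal sketch of K-means clustering on a Grassmann manifold of lines is given below, assuming unit-norm channel directions, the squared chordal distance 1 - |h^H w|^2 as the clustering metric, and a centroid update that takes the dominant eigenvector of the cluster's sample covariance (computed here by power iteration). These choices, the function names, and the synthetic Gaussian channels are assumptions for illustration and are not taken from the paper.

```python
import random


def normalize(v):
    """Scale a complex vector to unit Euclidean norm."""
    norm = sum(abs(x) ** 2 for x in v) ** 0.5
    return [x / norm for x in v]


def gain(h, w):
    """Normalized beamforming gain |h^H w|^2 for unit-norm h and w."""
    return abs(sum(hc.conjugate() * wc for hc, wc in zip(h, w))) ** 2


def dominant_direction(cluster, iters=50):
    """Power iteration on R = sum_h h h^H, the chordal-distance centroid."""
    w = cluster[0][:]
    for _ in range(iters):
        nxt = [0j] * len(w)
        for h in cluster:
            proj = sum(hc.conjugate() * wc for hc, wc in zip(h, w))  # h^H w
            nxt = [acc + hc * proj for acc, hc in zip(nxt, h)]
        w = normalize(nxt)
    return w


def grassmann_kmeans(channels, k, rounds=20, seed=0):
    """Alternate nearest-codeword assignment and centroid updates."""
    rng = random.Random(seed)
    codebook = rng.sample(channels, k)
    for _ in range(rounds):
        clusters = [[] for _ in range(k)]
        for h in channels:
            best = max(range(k), key=lambda i: gain(h, codebook[i]))
            clusters[best].append(h)
        # Keep the previous codeword if a cluster happens to be empty.
        codebook = [
            dominant_direction(c) if c else codebook[i]
            for i, c in enumerate(clusters)
        ]
    return codebook


# Synthetic 4-antenna channel directions (hypothetical training data).
rng = random.Random(1)
channels = [
    normalize([complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(4)])
    for _ in range(200)
]
codebook = grassmann_kmeans(channels, k=4)
avg_gain = sum(max(gain(h, w) for w in codebook) for h in channels) / len(channels)
```

The distance depends only on |h^H w|, so a codeword and any phase-rotated copy of it are treated as the same point, which is what makes the Grassmann manifold the natural setting for this clustering.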

Machine Learning meets Stochastic Geometry: Determinantal Subset Selection for Wireless Networks

May 01, 2019

Abstract:In wireless networks, many problems can be formulated as subset selection problems, where the goal is to select a subset of the ground set that maximizes some objective function. These problems are typically NP-hard and hence solved through carefully constructed heuristics, which are themselves mostly NP-complete and thus not easily applicable to large networks. On the other hand, subset selection problems occur in a slightly different context in machine learning (ML), where the goal is to select a subset of high-quality yet diverse items from a ground set. In this paper, we introduce a novel determinantal point process (DPP)-based learning (DPPL) framework for efficiently solving subset selection problems in wireless networks. The DPPL is intended to replace the traditional optimization algorithms for subset selection by learning the quality-diversity trade-off in the optimal subsets selected by an optimization routine. As a case study, we apply DPPL to the wireless link scheduling problem, where the goal is to determine the subset of simultaneously active links that maximizes the network-wide sum-rate. We demonstrate that the proposed DPPL approaches the optimal solution with significantly lower computational complexity than the popular optimization algorithms used for this problem in the literature.
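The quality-diversity trade-off can be made concrete with the standard L-ensemble form of a DPP, in which a subset Y has probability det(L_Y) / det(L + I) and the kernel factors as L_ij = q_i S_ij q_j for item qualities q and a similarity matrix S. The sketch below uses that textbook form with hypothetical qualities and similarities for three links; it does not reproduce the learned kernel from the paper.

```python
def det(mat):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in mat]
    n = len(a)
    d = 1.0
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(a[r][c]))
        if abs(a[p][c]) < 1e-15:
            return 0.0
        if p != c:
            a[c], a[p] = a[p], a[c]
            d = -d
        d *= a[c][c]
        for r in range(c + 1, n):
            f = a[r][c] / a[c][c]
            for k in range(c, n):
                a[r][k] -= f * a[c][k]
    return d


def l_kernel(quality, similarity):
    """Quality-diversity decomposition L_ij = q_i * S_ij * q_j."""
    n = len(quality)
    return [
        [quality[i] * similarity[i][j] * quality[j] for j in range(n)]
        for i in range(n)
    ]


def subset_prob(kernel, subset):
    """P(Y = subset) = det(L_subset) / det(L + I)."""
    n = len(kernel)
    sub = [[kernel[i][j] for j in subset] for i in subset]
    plus_identity = [
        [kernel[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]
    return det(sub) / det(plus_identity)


# Links 0 and 1 are strong but interfere heavily; link 2 is weaker but isolated.
quality = [2.0, 2.0, 1.0]
similarity = [
    [1.0, 0.9, 0.1],
    [0.9, 1.0, 0.1],
    [0.1, 0.1, 1.0],
]
kernel = l_kernel(quality, similarity)
p_similar_pair = subset_prob(kernel, [0, 1])
p_diverse_pair = subset_prob(kernel, [0, 2])
```

In this example the pair containing the weaker but non-interfering link is more probable than the pair of two strong, mutually interfering links, which is the negative-correlation behavior that makes a DPP a plausible model for link scheduling.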

Enhanced Multiobjective Evolutionary Algorithm based on Decomposition for Solving the Unit Commitment Problem

Oct 23, 2014

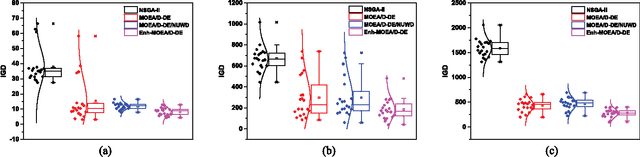

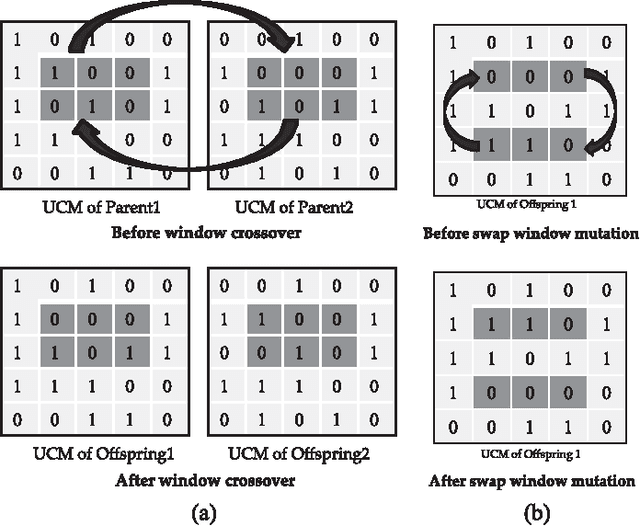

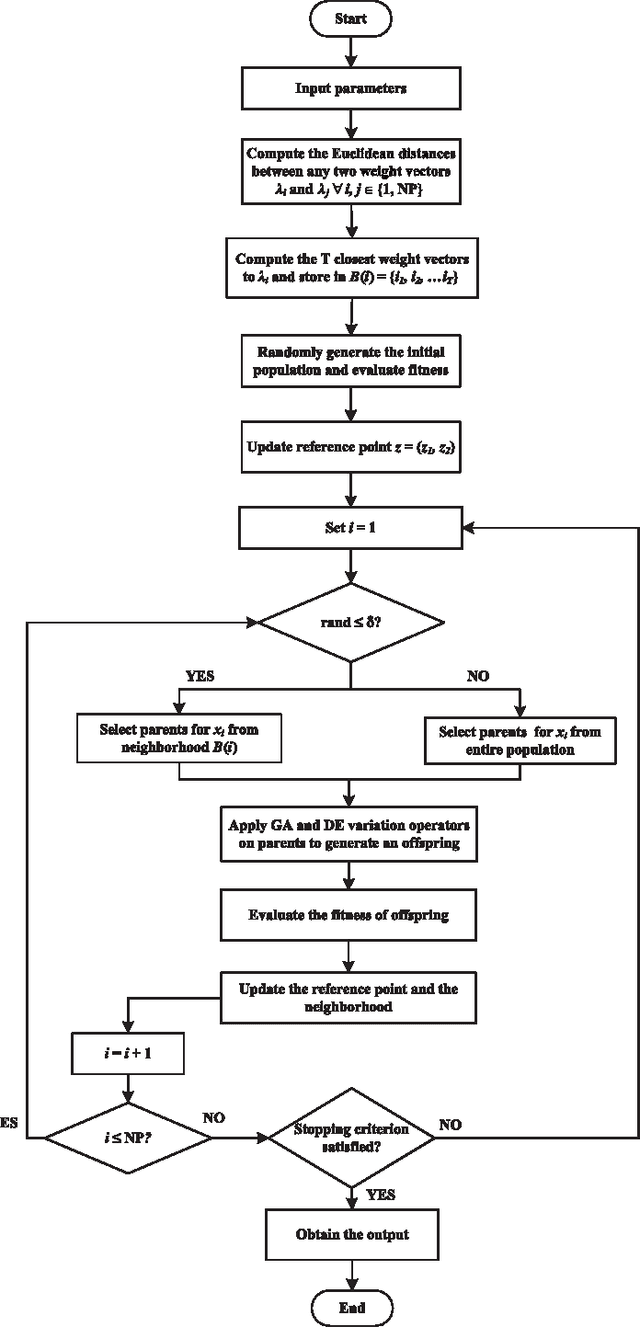

Abstract:The unit commitment (UC) problem is a nonlinear, high-dimensional, highly constrained, mixed-integer power system optimization problem and is generally solved in the literature with minimization of the system operation cost as the only objective. However, due to increasing environmental concerns, recent attention has shifted to incorporating emission in the problem formulation. In this paper, a multi-objective evolutionary algorithm based on decomposition (MOEA/D) is proposed to solve the UC problem as a multi-objective optimization problem with cost and emission minimization as the objectives. Since the UC problem is a mixed-integer optimization problem consisting of binary UC variables and continuous power dispatch variables, a novel hybridization strategy is proposed within the framework of MOEA/D such that a genetic algorithm (GA) evolves the binary variables while differential evolution (DE) evolves the continuous variables. Further, a novel non-uniform weight vector distribution strategy is proposed, and a parallel island model based on a combination of MOEA/D with uniform and non-uniform weight vector distribution strategies is implemented to enhance the performance of the presented algorithm. Extensive case studies are presented on different test systems, and the effectiveness of the proposed hybridization strategy, the non-uniform weight vector distribution strategy, and the parallel island model is verified through stringent simulation results. Further, exhaustive benchmarking against the algorithms proposed in the literature is presented to demonstrate the superiority of the proposed algorithm in obtaining significantly better converged and uniformly distributed trade-off solutions.

Multi-Agent Shape Formation and Tracking Inspired from a Social Foraging Dynamics

Oct 16, 2014

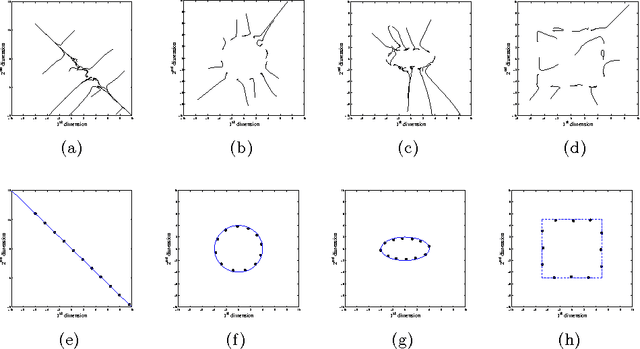

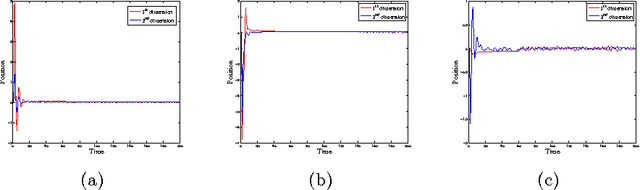

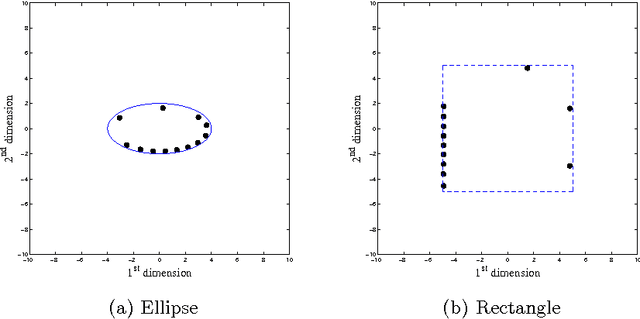

Abstract:The principles of swarm intelligence have recently found widespread application in formation control and automated tracking by multi-agent systems. This article proposes an elegant and effective method, inspired by foraging dynamics, for producing geometric patterns with the search agents. Starting from a random initial orientation, we investigate how the foraging dynamics can be modified so that the agents converge on the desired pattern with almost uniform density. Guided by the proposed dynamics, the agents can also track a moving point by continuously circulating around it. An analytical treatment supported by computer simulation results is provided to better understand the convergence behaviour of the system.
