Hamzeh Asgharnezhad

Uncertainty-Aware Deep Learning for Automated Skin Cancer Classification: A Comprehensive Evaluation

Jun 12, 2025

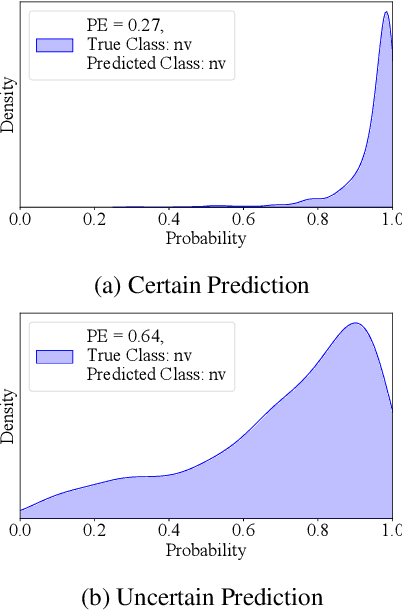

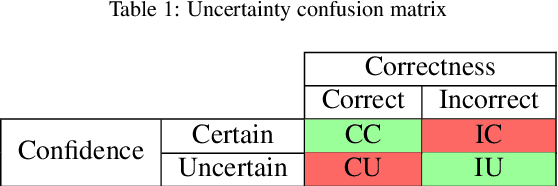

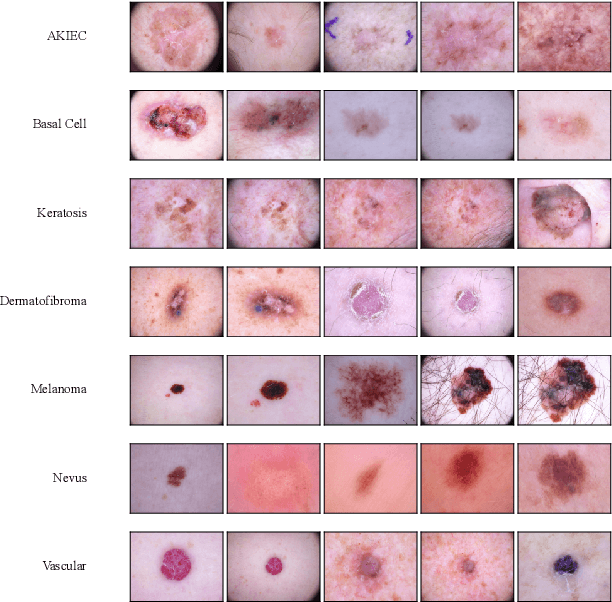

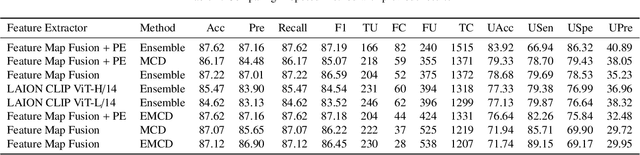

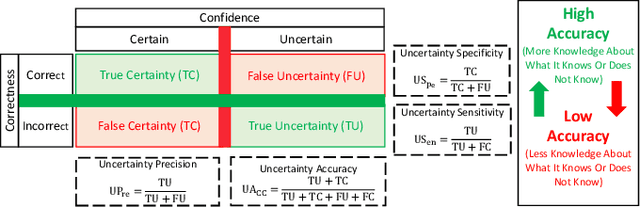

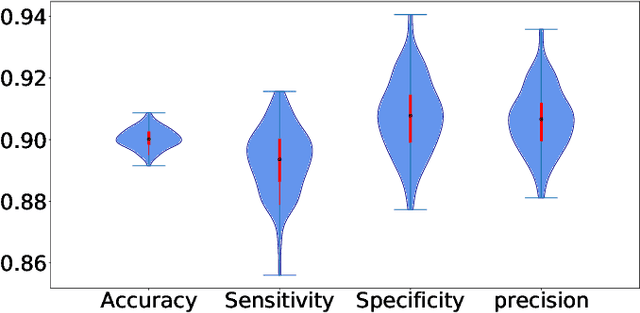

Abstract: Accurate and reliable skin cancer diagnosis is critical for early treatment and improved patient outcomes. Deep learning (DL) models have shown promise in automating skin cancer classification, but their performance can be limited by data scarcity and a lack of uncertainty awareness. In this study, we present a comprehensive evaluation of DL-based skin lesion classification using transfer learning and uncertainty quantification (UQ) on the HAM10000 dataset. In the first phase, we benchmarked several pre-trained feature extractors, including Contrastive Language-Image Pretraining (CLIP) variants, Residual Network-50 (ResNet50), Densely Connected Convolutional Network (DenseNet121), Visual Geometry Group network (VGG16), and EfficientNet-V2-Large, combined with a range of traditional classifiers such as Support Vector Machine (SVM), eXtreme Gradient Boosting (XGBoost), and logistic regression. Our results show that CLIP-based vision transformers, particularly LAION CLIP ViT-H/14 with SVM, deliver the highest classification performance. In the second phase, we incorporated UQ using Monte Carlo Dropout (MCD), Ensemble, and Ensemble Monte Carlo Dropout (EMCD) to assess not only prediction accuracy but also the reliability of model outputs. We evaluated these models using uncertainty-aware metrics such as uncertainty accuracy (UAcc), uncertainty sensitivity (USen), uncertainty specificity (USpe), and uncertainty precision (UPre). The results demonstrate that ensemble methods offer a good trade-off between accuracy and uncertainty handling, while EMCD is more sensitive to uncertain predictions. This study highlights the importance of integrating UQ into DL-based medical diagnosis to enhance both performance and trustworthiness in real-world clinical applications.
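The abstract names four uncertainty-aware metrics without defining them. The sketch below is an illustration only. It assumes the definitions commonly used with an uncertainty confusion matrix, where each prediction is cross-tabulated as correct or incorrect against certain or uncertain. The function name `uncertainty_metrics` and the toy inputs are hypothetical and do not come from the paper.

```python
def uncertainty_metrics(correct, uncertain):
    """Compute UAcc, USen, USpe, UPre from two parallel boolean lists.

    correct[i]   -- True if prediction i matched the label
    uncertain[i] -- True if prediction i was flagged as uncertain
    Assumed definitions (not stated in the abstract):
      TC = correct and certain,     TU = incorrect and uncertain,
      FU = correct but uncertain,   FC = incorrect but certain.
    """
    pairs = list(zip(correct, uncertain))
    tc = sum(1 for c, u in pairs if c and not u)
    tu = sum(1 for c, u in pairs if not c and u)
    fu = sum(1 for c, u in pairs if c and u)
    fc = sum(1 for c, u in pairs if not c and not u)

    def ratio(num, den):
        # Return NaN instead of raising when a denominator is empty.
        return num / den if den else float("nan")

    return {
        "UAcc": ratio(tu + tc, tu + tc + fu + fc),
        "USen": ratio(tu, tu + fc),
        "USpe": ratio(tc, tc + fu),
        "UPre": ratio(tu, tu + fu),
    }


# Toy example: six predictions, two of them wrong, two flagged uncertain.
correct = [True, True, True, False, False, True]
uncertain = [False, False, True, True, False, False]
metrics = uncertainty_metrics(correct, uncertain)
```

Under these assumed definitions, a high USen means that wrong predictions tend to be flagged as uncertain, while a high USpe means that correct predictions tend to be left unflagged.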

Enhancing Monte Carlo Dropout Performance for Uncertainty Quantification

May 21, 2025

Abstract: Knowing the uncertainty associated with the output of a deep neural network is of paramount importance in making trustworthy decisions, particularly in high-stakes fields like medical diagnosis and autonomous systems. Monte Carlo Dropout (MCD) is a widely used method for uncertainty quantification, as it can be easily integrated into various deep architectures. However, conventional MCD often struggles to provide well-calibrated uncertainty estimates. To address this, we introduce an innovative framework that enhances MCD by integrating different search solutions, namely Grey Wolf Optimizer (GWO), Bayesian Optimization (BO), and Particle Swarm Optimization (PSO), together with an uncertainty-aware loss function, thereby improving the reliability of uncertainty quantification. We conduct comprehensive experiments using different backbones, namely DenseNet121, ResNet50, and VGG16, on various datasets, including Cats vs. Dogs, Myocarditis, Wisconsin, and a synthetic dataset (Circles). Our proposed algorithm outperforms the MCD baseline by 2-3% on average in terms of both conventional accuracy and uncertainty accuracy while achieving significantly better calibration. These results highlight the potential of our approach to enhance the trustworthiness of deep learning models in safety-critical applications.
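As a rough illustration of the MCD baseline this abstract builds on, the sketch below keeps dropout active at inference, runs several stochastic forward passes, averages the softmax outputs, and reports the predictive entropy of the mean. It is a minimal standard-library sketch, not the paper's implementation: the tiny two-layer network, its weights, the dropout rate, and the number of passes are all hypothetical, and the optimizer-based search (GWO, BO, PSO) is not shown.

```python
import math
import random


def softmax(logits):
    # Subtract the maximum for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward_with_dropout(x, w_hidden, w_out, p_drop, rng):
    # Hidden layer with ReLU activation.
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    # Inverted dropout, deliberately left ON at inference time.
    keep = 1.0 - p_drop
    hidden = [h / keep if rng.random() < keep else 0.0 for h in hidden]
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in w_out]
    return softmax(logits)


def mc_dropout_predict(x, w_hidden, w_out, p_drop=0.5, n_passes=100, seed=0):
    """Return (mean class probabilities, predictive entropy) over n_passes."""
    rng = random.Random(seed)
    passes = [forward_with_dropout(x, w_hidden, w_out, p_drop, rng)
              for _ in range(n_passes)]
    n_classes = len(passes[0])
    mean_probs = [sum(p[k] for p in passes) / n_passes for k in range(n_classes)]
    entropy = -sum(p * math.log(p) for p in mean_probs if p > 0.0)
    return mean_probs, entropy


# Hypothetical weights: 2 inputs -> 3 hidden units -> 2 classes.
w_hidden = [[0.8, -0.5], [-0.3, 0.9], [0.5, 0.5]]
w_out = [[1.0, -1.0, 0.2], [-1.0, 1.0, 0.1]]
mean_probs, entropy = mc_dropout_predict([1.0, 0.5], w_hidden, w_out)
```

The entropy of the averaged distribution is one common uncertainty score. For two classes it ranges from 0 (fully confident) to ln 2 (maximally uncertain).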

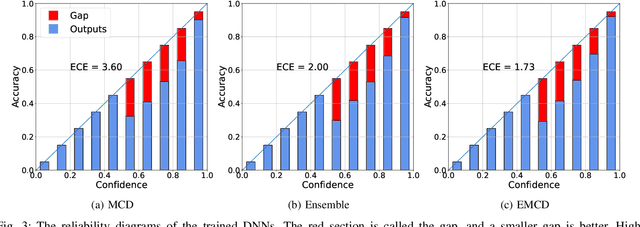

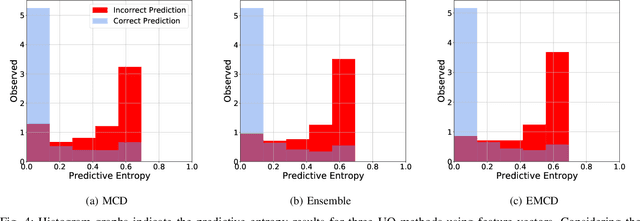

Improving MC-Dropout Uncertainty Estimates with Calibration Error-based Optimization

Oct 07, 2021

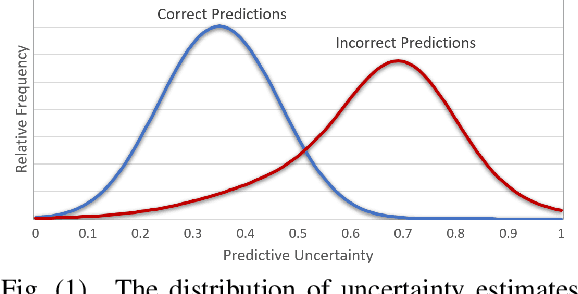

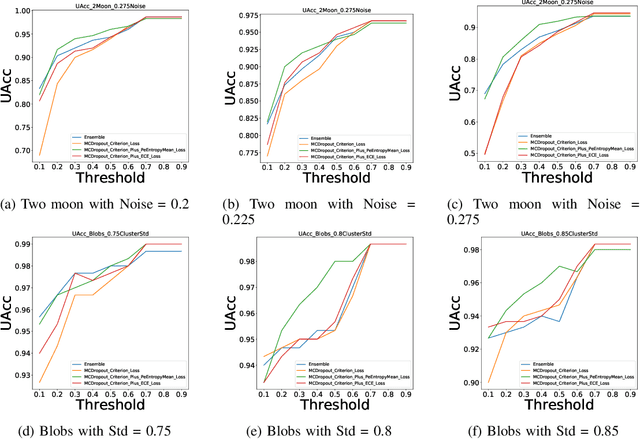

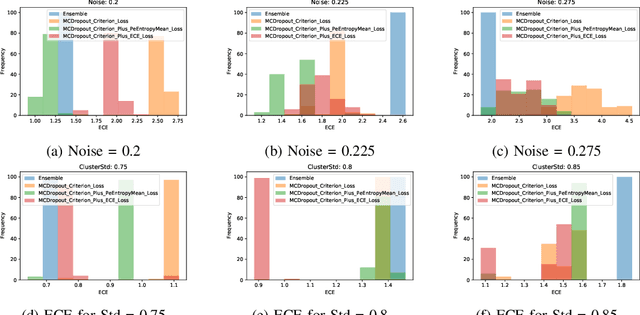

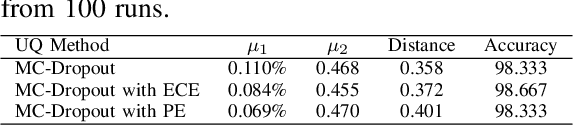

Abstract: Uncertainty quantification of machine learning and deep learning methods plays an important role in enhancing trust in the obtained results. In recent years, numerous uncertainty quantification methods have been introduced. Monte Carlo dropout (MC-Dropout) is one of the most well-known techniques to quantify uncertainty in deep learning methods. In this study, we propose two new loss functions by combining cross entropy with Expected Calibration Error (ECE) and Predictive Entropy (PE). The obtained results clearly show that the newly proposed loss functions yield a calibrated MC-Dropout method. Our results confirm the strong impact of the new hybrid loss functions in minimising the overlap between the distributions of uncertainty estimates for correct and incorrect predictions without sacrificing the model's overall performance.
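For readers unfamiliar with ECE, the sketch below computes it with equal-width confidence bins and then adds it to a cross-entropy term. The abstract does not say how the two terms are combined, so the weighted sum in `hybrid_loss` and its `weight` parameter are assumptions made purely for illustration. The function names and toy data are likewise hypothetical.

```python
import math


def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE: bin-size-weighted mean of |accuracy - average confidence|.

    Bins are equal-width over [0, 1]; a confidence of exactly 1.0 is
    placed in the last bin.
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, hit in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, hit))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, h in members if h) / len(members)
        ece += len(members) / n * abs(accuracy - avg_conf)
    return ece


def hybrid_loss(true_class_probs, confidences, correct, weight=1.0):
    # ASSUMPTION: a simple weighted sum of cross entropy and ECE.
    cross_entropy = -sum(math.log(p) for p in true_class_probs) / len(true_class_probs)
    return cross_entropy + weight * expected_calibration_error(confidences, correct)


# Toy example: four confident predictions (one wrong), two unsure (one wrong).
confidences = [0.95, 0.95, 0.95, 0.95, 0.55, 0.55]
correct = [True, True, True, False, True, False]
ece = expected_calibration_error(confidences, correct)
```

In this toy example the confident bin has 75% accuracy at 95% average confidence, so most of the calibration error comes from overconfidence.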

Uncertainty-Aware Credit Card Fraud Detection Using Deep Learning

Jul 28, 2021

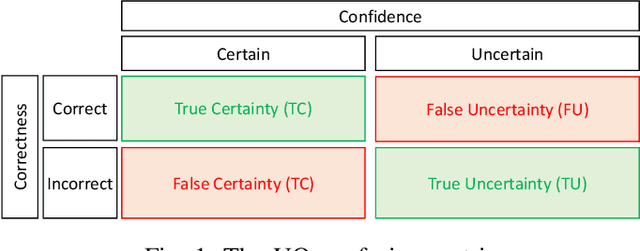

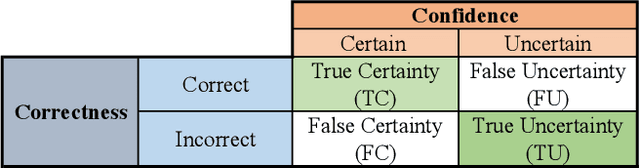

Abstract: Numerous studies applying deep neural networks (DNNs) to credit card fraud detection have focused on improving the accuracy of point predictions and mitigating unwanted biases by building different network architectures or learning models. Quantifying the uncertainty that accompanies a point estimate is essential because it mitigates model unfairness and permits practitioners to develop trustworthy systems that abstain from suboptimal decisions when confidence is low. Specifically, assessing the uncertainties associated with DNN predictions is critical in real-world card fraud detection settings for two reasons: (a) fraudsters constantly change their strategies, so DNNs encounter observations that are not generated by the same process as the training distribution, and (b) because expert review is time-consuming, very few transactions are checked by professional experts in time to update the DNNs. Therefore, this study proposes three uncertainty quantification (UQ) techniques, namely Monte Carlo dropout, ensemble, and ensemble Monte Carlo dropout, for card fraud detection applied to transaction data. Moreover, to evaluate the predictive uncertainty estimates, a UQ confusion matrix and several performance metrics are utilized. Through experimental results, we show that the ensemble is more effective in capturing the uncertainty of the generated predictions. Additionally, we demonstrate that the proposed UQ methods provide extra insight into the point predictions, thereby improving the fraud prevention process.
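The idea of abstaining when confidence is low can be sketched with a small ensemble: average the class probabilities of the members, score the average with predictive entropy, and decline to decide when the entropy exceeds a threshold. This is an illustration under stated assumptions, not the paper's pipeline. The function `ensemble_decision`, the threshold of 0.5, and the member outputs are all hypothetical.

```python
import math


def ensemble_decision(member_probs, entropy_threshold=0.5):
    """Average member probabilities; return None as the label to abstain.

    member_probs -- one probability vector per ensemble member
    Returns (label_or_None, mean_probs, entropy).
    """
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    mean_probs = [sum(m[k] for m in member_probs) / n_members
                  for k in range(n_classes)]
    entropy = -sum(p * math.log(p) for p in mean_probs if p > 0.0)
    if entropy > entropy_threshold:
        return None, mean_probs, entropy  # too uncertain: defer to an expert
    label = max(range(n_classes), key=lambda k: mean_probs[k])
    return label, mean_probs, entropy


# Members agree: class 0 (say, "legitimate") with low entropy.
agree = ensemble_decision([[0.97, 0.03], [0.95, 0.05], [0.99, 0.01]])
# Members disagree: the averaged distribution is close to uniform.
disagree = ensemble_decision([[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]])
```

In practice the threshold would be tuned on held-out data, for example to balance how many transactions are deferred against how many errors are caught.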

An Uncertainty-Aware Deep Learning Framework for Defect Detection in Casting Products

Jul 24, 2021

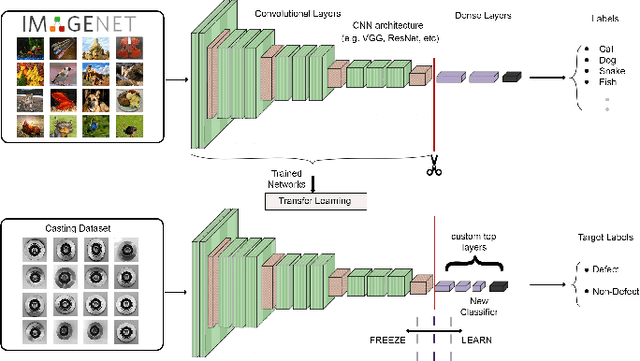

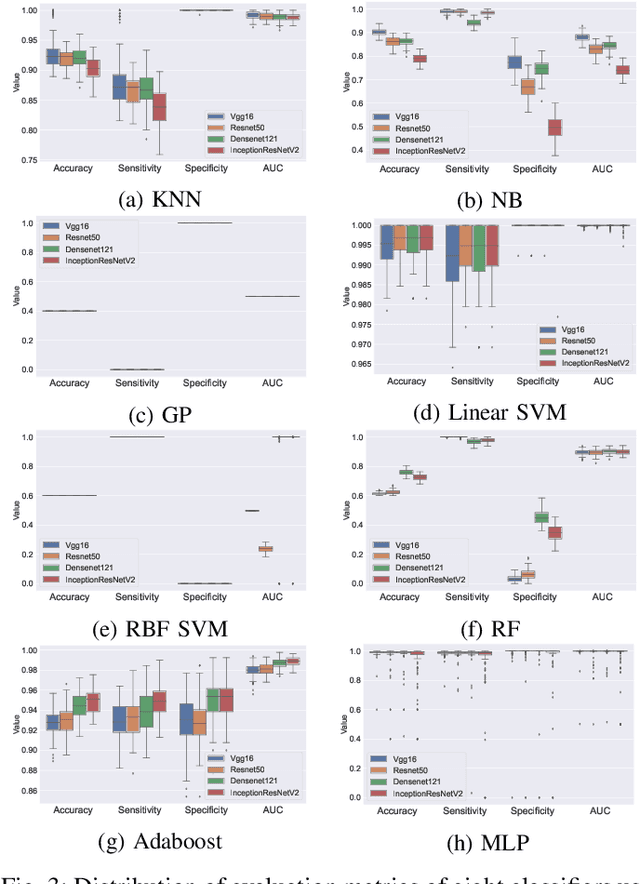

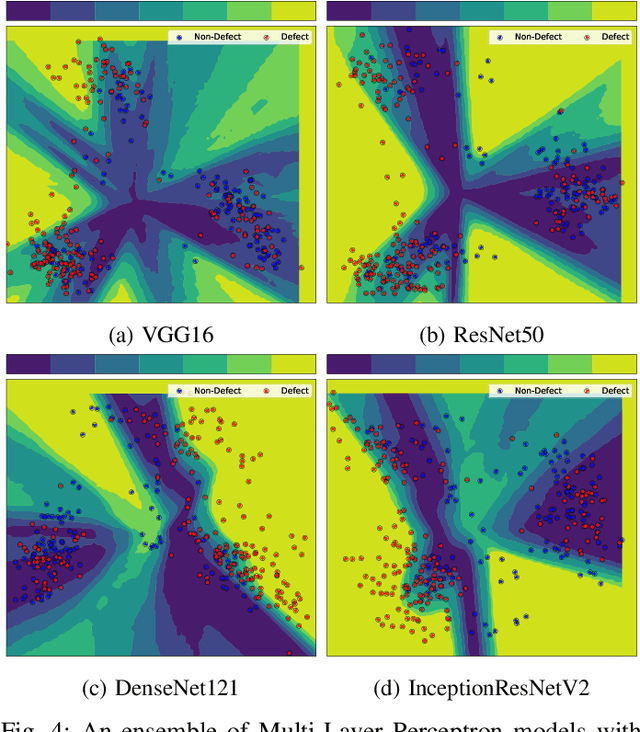

Abstract: Defects are unavoidable in casting production owing to the complexity of the casting process. While conventional human visual inspection of casting products is slow and unproductive in mass production, automatic and reliable defect detection not only enhances the quality control process but also improves productivity. However, casting defect detection is a challenging task due to the diversity and variation in the appearance of defects. Convolutional neural networks (CNNs) have been widely applied in both image classification and defect detection tasks. Nevertheless, CNNs with frequentist inference require a massive amount of training data and still fall short in reporting useful estimates of their predictive uncertainty. Accordingly, leveraging the transfer learning paradigm, we first apply four powerful CNN-based models (VGG16, ResNet50, DenseNet121, and InceptionResNetV2) to a small dataset to extract meaningful features. The extracted features are then processed by various machine learning algorithms to perform the classification task. Simulation results demonstrate that the linear support vector machine (SVM) and multi-layer perceptron (MLP) achieve the best performance in defect detection on casting images. Secondly, to achieve reliable classification and to measure epistemic uncertainty, we employ an uncertainty quantification (UQ) technique (an ensemble of MLP models) using features extracted from the four pre-trained CNNs. A UQ confusion matrix and an uncertainty accuracy metric are also used to evaluate the predictive uncertainty estimates. Comprehensive comparisons reveal that the UQ method based on VGG16 outperforms the others in capturing uncertainty. We believe an uncertainty-aware automatic defect detection solution will reinforce quality assurance in casting production.

Confidence Aware Neural Networks for Skin Cancer Detection

Jul 24, 2021

Abstract: Deep learning (DL) models have received particular attention in medical imaging due to their promising pattern recognition capabilities. However, Deep Neural Networks (DNNs) require a huge amount of data, and because sufficient data is lacking in this field, transfer learning can be a great solution. DNNs used for disease diagnosis concentrate on improving the accuracy of predictions without providing any measure of confidence in those predictions. Knowing how confident a DNN is within a computer-aided diagnosis system is necessary for gaining clinicians' confidence and trust in DL-based solutions. To address this issue, this work presents three different methods for quantifying uncertainties for skin cancer detection from images. It also comprehensively evaluates and compares the performance of these DNNs using novel uncertainty-related metrics. The obtained results reveal that the predictive uncertainty estimation methods are capable of flagging risky and erroneous predictions with a high uncertainty estimate. We also demonstrate that ensemble approaches are more reliable in capturing uncertainties during inference.

Objective Evaluation of Deep Uncertainty Predictions for COVID-19 Detection

Dec 22, 2020

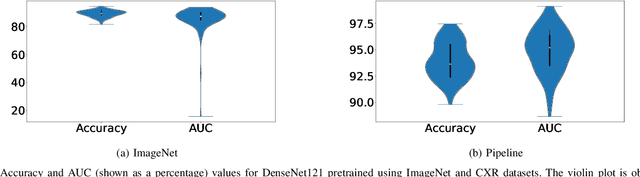

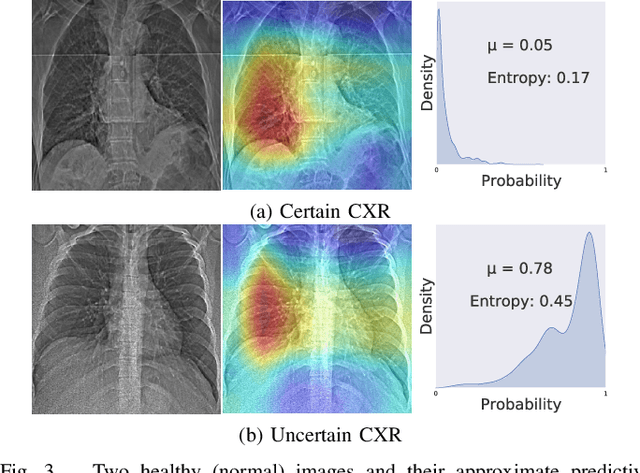

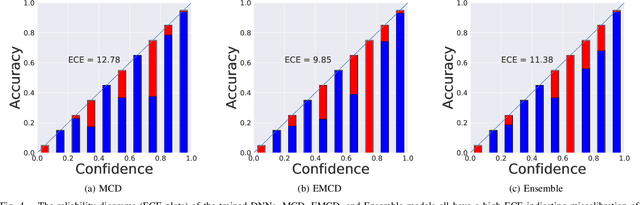

Abstract: Deep neural networks (DNNs) have been widely applied for detecting COVID-19 in medical images. Existing studies mainly apply transfer learning and other data representation strategies to generate accurate point estimates. The generalization power of these networks is always questionable because they are developed using small datasets and fail to report their predictive confidence. Quantifying uncertainties associated with DNN predictions is a prerequisite for their trusted deployment in medical settings. Here we apply and evaluate three uncertainty quantification techniques for COVID-19 detection using chest X-Ray (CXR) images. The novel concept of an uncertainty confusion matrix is proposed, and new performance metrics for the objective evaluation of uncertainty estimates are introduced. Through comprehensive experiments, it is shown that networks pretrained on CXR images outperform networks pretrained on natural image datasets such as ImageNet. Qualitative and quantitative evaluations also reveal that the predictive uncertainty estimates are statistically higher for erroneous predictions than for correct predictions. Accordingly, uncertainty quantification methods are capable of flagging risky predictions with high uncertainty estimates. We also observe that ensemble methods more reliably capture uncertainties during inference.

An Uncertainty-aware Transfer Learning-based Framework for Covid-19 Diagnosis

Jul 26, 2020

Abstract: The early and reliable detection of COVID-19-infected patients is essential to prevent and limit its outbreak. PCR tests for COVID-19 detection are not available in many countries, and there are also genuine concerns about their reliability and performance. Motivated by these shortcomings, this paper proposes a deep uncertainty-aware transfer learning framework for COVID-19 detection using medical images. Four popular convolutional neural networks (CNNs), namely VGG16, ResNet50, DenseNet121, and InceptionResNetV2, are first applied to extract deep features from chest X-ray and computed tomography (CT) images. Extracted features are then processed by different machine learning and statistical modelling techniques to identify COVID-19 cases. We also calculate and report the epistemic uncertainty of classification results to identify regions where the trained models are not confident about their decisions (the out-of-distribution problem). Comprehensive simulation results for X-ray and CT image datasets indicate that linear support vector machine and neural network models achieve the best results as measured by accuracy, sensitivity, specificity, and AUC. We also find that predictive uncertainty estimates are much higher for CT images than for X-ray images.
