Donya Khaledyan

WATUNet: A Deep Neural Network for Segmentation of Volumetric Sweep Imaging Ultrasound

Nov 17, 2023

Abstract: Objective. Limited access to breast cancer diagnosis globally leads to delayed treatment. Ultrasound is effective yet underutilized because it requires sonographers with specialized training, which hinders its widespread use. Approach. Volume sweep imaging (VSI) is an approach that enables untrained operators to capture high-quality ultrasound images. Combined with deep learning methods such as convolutional neural networks (CNNs), it could transform breast cancer diagnosis by enhancing accuracy, saving time and costs, and improving patient outcomes. The widely used UNet architecture for medical image segmentation has limitations, such as vanishing gradients and a lack of multi-scale feature extraction and selective region attention. In this study, we present a novel segmentation model, Wavelet_Attention_UNet (WATUNet). It replaces the simple connections between the encoder and decoder with wavelet gates (WGs) and attention gates (AGs) to overcome these limitations and improve model performance. Main results. Two datasets are used for the analysis: the public "Breast Ultrasound Images" (BUSI) dataset of 780 images and a VSI dataset of 3818 images. Both datasets contain segmented lesions categorized into three types: no mass, benign mass, and malignant mass. Our segmentation results show superior performance compared to other deep networks. The proposed algorithm attained a Dice coefficient of 0.94 and an F1 score of 0.94 on the VSI dataset, and 0.93 and 0.94, respectively, on the public dataset.
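For readers unfamiliar with the Dice coefficient reported above, the following is a minimal sketch of how it can be computed for one pair of binary masks. It is not the paper's evaluation code: the function names, the flat 0/1 mask representation, and the convention for two empty masks are all assumptions made for illustration. For a single mask pair the pixel-wise F1 score equals the Dice coefficient; the abstract reports the two separately, so the paper's F1 is presumably computed or averaged differently.

```python
def dice_coefficient(pred, target):
    """Dice = 2|A and B| / (|A| + |B|) for two binary masks given as flat 0/1 sequences."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


def pixel_f1(pred, target):
    """Pixel-wise F1 = 2TP / (2TP + FP + FN) for the same pair of masks."""
    tp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, target) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, target) if p == 0 and t == 1)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2.0 * tp / denom


# Hypothetical 8-pixel masks: 3 overlapping pixels, 4 foreground pixels in each mask.
pred = [0, 1, 1, 1, 0, 0, 1, 0]
target = [0, 1, 1, 0, 0, 0, 1, 1]

print(dice_coefficient(pred, target))  # 0.75
print(pixel_f1(pred, target))          # 0.75
```

In practice the masks would be flattened 2D arrays, and the score would be averaged over a test set.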

Confidence Aware Neural Networks for Skin Cancer Detection

Jul 24, 2021

Abstract: Deep learning (DL) models have received particular attention in medical imaging due to their promising pattern recognition capabilities. However, deep neural networks (DNNs) require a huge amount of data, and because sufficient data is lacking in this field, transfer learning can be a good solution. DNNs used for disease diagnosis concentrate on improving prediction accuracy without reporting how confident they are in their predictions. Knowing how confident a DNN is within a computer-aided diagnosis system is necessary for gaining clinicians' trust in DL-based solutions. To address this issue, this work presents three different methods for quantifying uncertainty in skin cancer detection from images. It also comprehensively evaluates and compares the performance of these DNNs using novel uncertainty-related metrics. The results show that the predictive uncertainty estimation methods can flag risky and erroneous predictions with a high uncertainty estimate. We also demonstrate that ensemble approaches capture uncertainty more reliably during inference.
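The abstract does not name its three methods or its metrics, so the following is only a generic sketch of one common way an ensemble can yield an uncertainty estimate: average the class probabilities of the members and take the entropy of the result. The function names, the two-class setup, and the probability values are hypothetical.

```python
import math


def mean_prediction(member_probs):
    """Average the class-probability vectors produced by the ensemble members."""
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    return [sum(m[c] for m in member_probs) / n_members for c in range(n_classes)]


def predictive_entropy(probs):
    """Shannon entropy (in nats) of a probability vector; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


# Hypothetical softmax outputs of three ensemble members for two images.
members_agree = [[0.95, 0.05], [0.90, 0.10], [0.97, 0.03]]
members_disagree = [[0.90, 0.10], [0.20, 0.80], [0.55, 0.45]]

h_agree = predictive_entropy(mean_prediction(members_agree))
h_disagree = predictive_entropy(mean_prediction(members_disagree))

# The image on which the members disagree receives the higher uncertainty score,
# so it would be the one flagged for review under a threshold rule.
print(h_agree < h_disagree)  # True
```

A prediction whose entropy exceeds a chosen threshold could then be referred to a clinician rather than accepted automatically.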

Deep learning denoising for EOG artifacts removal from EEG signals

Sep 12, 2020

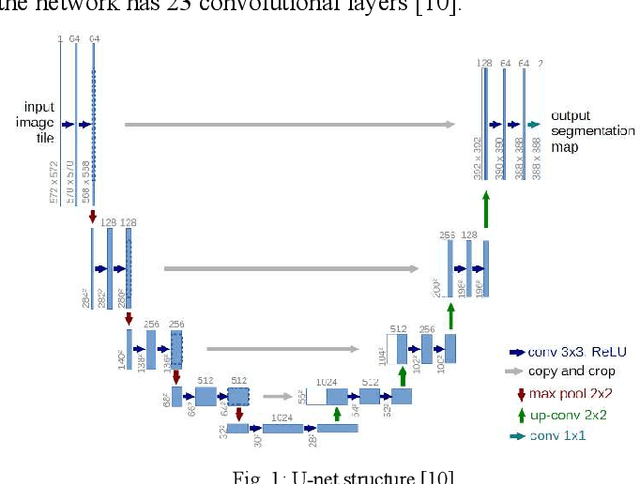

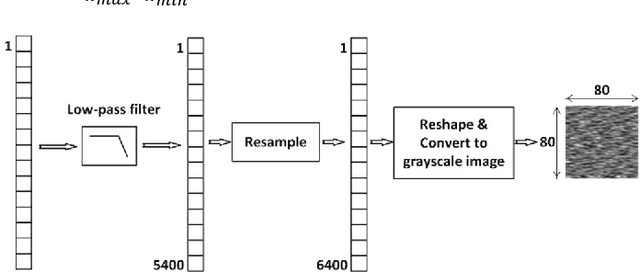

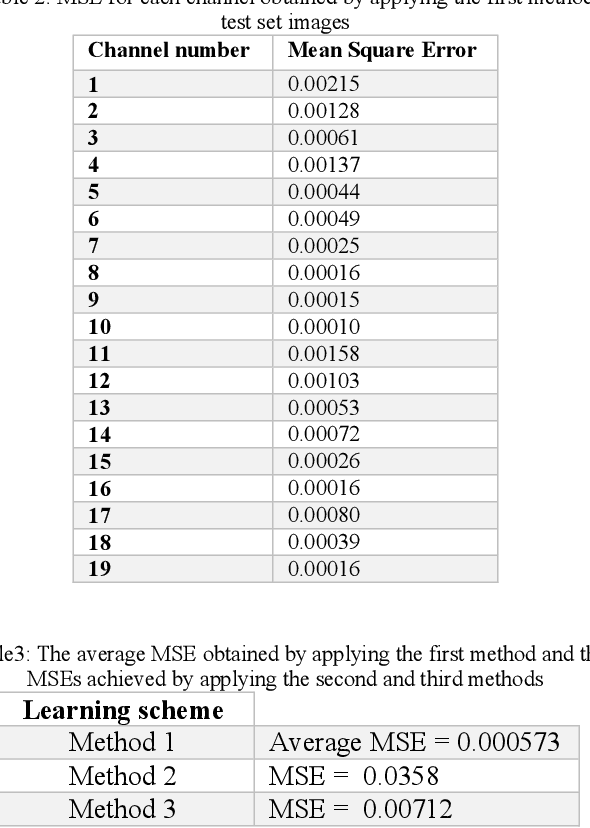

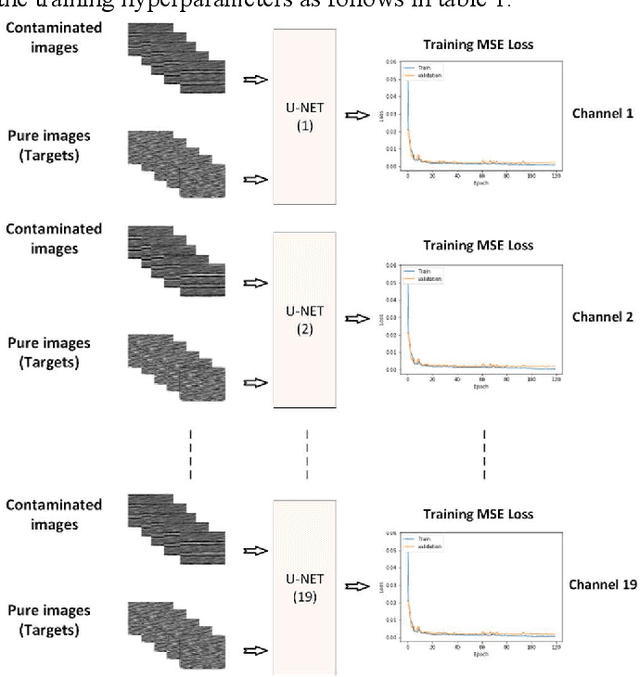

Abstract: Electroencephalogram (EEG) recordings encounter many sources of interference, specifically ocular, muscular, and cardiac artifacts. Rejecting these artifacts is an essential step because they cause many problems in EEG signal analysis. One of the most challenging issues in EEG denoising is removing ocular artifacts, where electrooculographic (EOG) and EEG signals overlap in both the frequency and time domains. In this paper, we build and train a deep learning model to address this challenge and remove ocular artifacts effectively. In the proposed scheme, we convert each EEG signal to an image that is fed to a U-NET model, a deep learning model usually used in image segmentation tasks. We propose three different schemes and train our U-NET-based models to purify contaminated EEG signals in a manner similar to image segmentation. The results confirm that one of our schemes achieves reliable and promising accuracy in reducing the mean square error between the target signal (pure EEGs) and the predicted signal (purified EEGs).

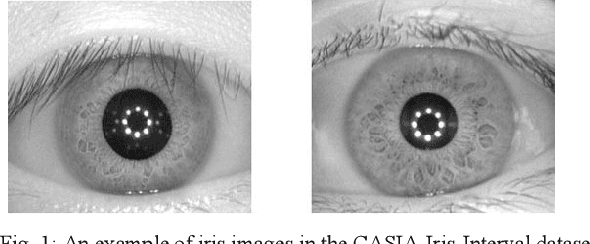

An approach to human iris recognition using quantitative analysis of image features and machine learning

Sep 12, 2020

Abstract: The iris pattern is a unique biological feature for each individual, making it a valuable and powerful tool for human identification. In this paper, an efficient four-step framework for iris recognition is proposed: (1) iris segmentation (using relative total variation combined with coarse iris localization), (2) feature extraction (using shape and density, FFT, GLCM, GLDM, and wavelet features), (3) feature reduction (employing Kernel-PCA), and (4) classification (applying a multi-layer neural network). The framework is used to classify 2000 iris images from the CASIA-Iris-Interval dataset, obtained from 200 volunteers. The results confirm that the proposed scheme can provide reliable predictions with an accuracy of up to 99.64%.
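Of the feature families listed in step (2), the gray-level co-occurrence matrix (GLCM) is easy to illustrate. The sketch below builds a normalized GLCM for one pixel offset and derives the contrast statistic from it. It is an illustration only: the offset, the number of gray levels, the choice of contrast, and the 4x4 patch are assumptions, and the abstract does not say which GLCM settings or statistics the paper uses.

```python
def glcm(image, levels, dx=1, dy=0):
    """Normalized co-occurrence matrix of gray levels (i, j) at pixel offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            # Only count pairs whose second pixel lies inside the image.
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
    total = sum(sum(row) for row in counts)
    if total == 0:
        raise ValueError("offset leaves no valid pixel pairs")
    return [[v / total for v in row] for row in counts]


def contrast(p):
    """GLCM contrast: sum over (i, j) of p[i][j] * (i - j)^2."""
    n = len(p)
    return sum(p[i][j] * (i - j) ** 2 for i in range(n) for j in range(n))


# Hypothetical 4x4 patch quantized to 4 gray levels.
patch = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]

p = glcm(patch, levels=4)  # horizontal neighbor pairs, 12 in total
print(contrast(p))         # 7/12, roughly 0.583
```

Statistics such as this one, computed over several offsets, would form part of the feature vector that is later reduced and classified.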
