Depu Meng

Evaluating Roadside Perception for Autonomous Vehicles: Insights from Field Testing

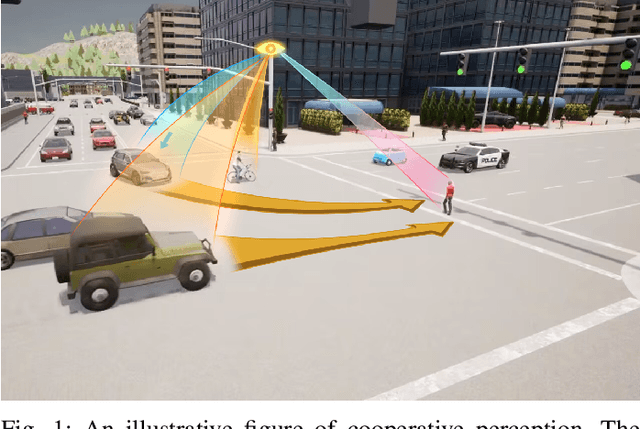

Jan 22, 2024

Abstract:Roadside perception systems are increasingly crucial in enhancing traffic safety and facilitating cooperative driving for autonomous vehicles. Despite rapid technological advancements, a major challenge persists for this newly arising field: the absence of standardized evaluation methods and benchmarks for these systems. This limitation hampers the ability to effectively assess and compare the performance of different systems, thus constraining progress in this vital field. This paper introduces a comprehensive evaluation methodology specifically designed to assess the performance of roadside perception systems. Our methodology encompasses measurement techniques, metric selection, and experimental trial design, all grounded in real-world field testing to ensure the practical applicability of our approach. We applied our methodology in Mcity (https://mcity.umich.edu/), a controlled testing environment, to evaluate various off-the-shelf perception systems. This approach allowed for an in-depth comparative analysis of their performance in realistic scenarios, offering key insights into their respective strengths and limitations. The findings of this study are poised to inform the development of industry-standard benchmarks and evaluation methods, thereby enhancing the effectiveness of roadside perception system development and deployment for autonomous vehicles. We anticipate that this paper will stimulate essential discourse on standardizing evaluation methods for roadside perception systems, thus pushing the frontiers of this technology. Furthermore, our results offer both academia and industry a comprehensive understanding of the capabilities of contemporary infrastructure-based perception systems.

MSight: An Edge-Cloud Infrastructure-based Perception System for Connected Automated Vehicles

Oct 08, 2023

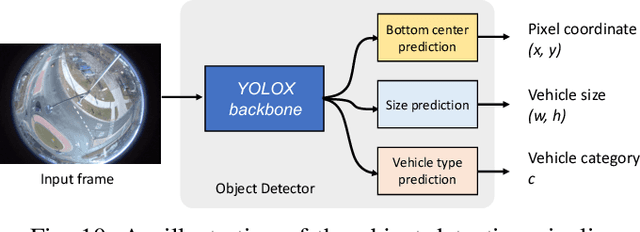

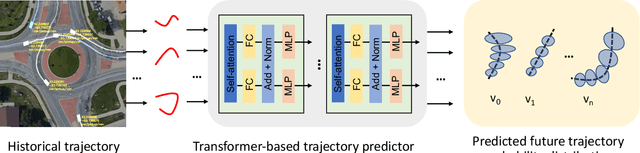

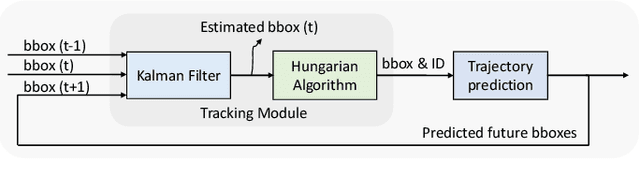

Abstract:As vehicular communication and networking technologies continue to advance, infrastructure-based roadside perception emerges as a pivotal tool for connected automated vehicle (CAV) applications. Due to their elevated positioning, roadside sensors, including cameras and lidars, often enjoy unobstructed views with diminished object occlusion. This provides them a distinct advantage over onboard perception, enabling more robust and accurate detection of road objects. This paper presents MSight, a cutting-edge roadside perception system specifically designed for CAVs. MSight offers real-time vehicle detection, localization, tracking, and short-term trajectory prediction. Evaluations underscore the system's capability to uphold lane-level accuracy with minimal latency, revealing a range of potential applications to enhance CAV safety and efficiency. Presently, MSight operates 24/7 at a two-lane roundabout in the City of Ann Arbor, Michigan.

Robust Roadside Perception for Autonomous Driving: an Annotation-free Strategy with Synthesized Data

Jun 29, 2023

Abstract:Recently, with the rapid development of vehicle-to-infrastructure communication technologies, infrastructure-based roadside perception for cooperative driving has become a rising field. This paper focuses on one of the most critical challenges: the data-insufficiency problem. The lack of high-quality, highly diverse labeled roadside sensor data leads to low robustness and low transferability of current roadside perception systems. In this paper, a novel approach is proposed to address this problem by creating synthesized training data using Augmented Reality and a Generative Adversarial Network. This method creates a synthesized dataset that can be used to train or fine-tune a roadside perception detector so that it is robust to different weather and lighting conditions, or to adapt a detector to a new deployment location. We validate our approach at two intersections: the Mcity intersection and the State St/Ellsworth Rd roundabout. Our experiments show that (1) the detector can achieve good performance in all conditions when trained on synthesized data only, and (2) the performance of an existing detector trained with labeled data can be enhanced by synthesized data in harsh conditions.

ROCO: A Roundabout Traffic Conflict Dataset

Mar 02, 2023

Abstract:Traffic conflicts have been studied by the transportation research community as a surrogate safety measure for decades. However, because traffic conflicts are rare, collecting large-scale real-world traffic conflict data is extremely challenging. In this paper, we introduce and analyze ROCO, a real-world roundabout traffic conflict dataset. The data is collected at a two-lane roundabout at the intersection of State St. and W. Ellsworth Rd. in Ann Arbor, Michigan. We use raw video captured from four fisheye cameras installed at the roundabout as our input data source. We adopt a learning-based algorithm to identify potential traffic conflicts from video, and then manually label them for dataset collection and annotation. In total, 557 traffic conflicts and 17 traffic crashes were collected from August 2021 to October 2021. We provide trajectory data of the traffic conflict scenes extracted using our roadside perception system. A taxonomy based on traffic conflict severity, the reason for the traffic conflict, and its effect on the traffic flow is provided. With the traffic conflict data collected, we discover that failure to yield to circulating vehicles when entering the roundabout is the largest contributing reason for traffic conflicts. The ROCO dataset will be made public in the near future.

CORE: Consistent Representation Learning for Face Forgery Detection

Jun 06, 2022

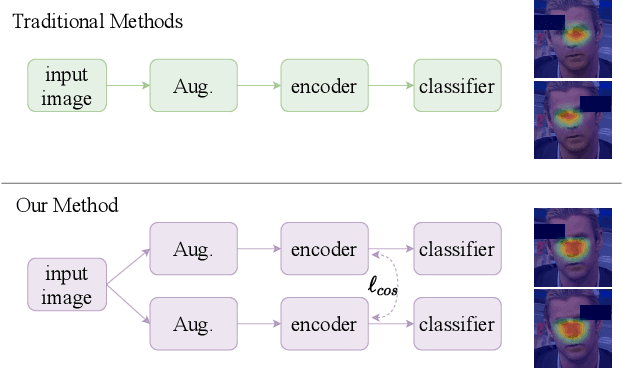

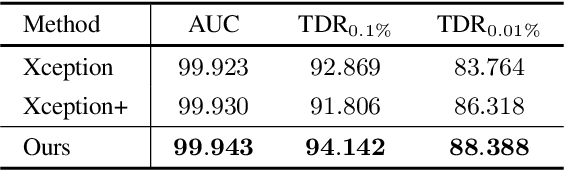

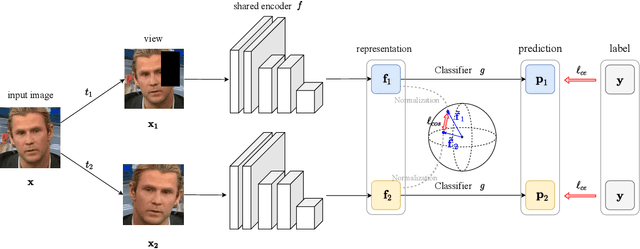

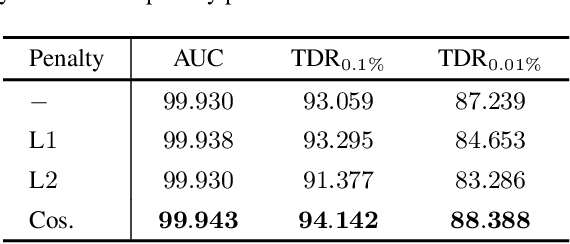

Abstract:Face manipulation techniques are developing rapidly and arousing widespread public concern. Although vanilla convolutional neural networks achieve acceptable performance, they suffer from overfitting. To relieve this issue, there is a trend to introduce erasing-based augmentations. We find that these methods implicitly attempt to induce more consistent representations across augmentations by assigning the same label to different augmented images. However, due to the lack of explicit regularization, the consistency between different representations remains unsatisfactory. Therefore, we constrain the consistency of different representations explicitly and propose a simple yet effective framework, COnsistent REpresentation Learning (CORE). Specifically, we first capture the different representations with different augmentations, then regularize the cosine distance of the representations to enhance the consistency. Extensive experiments (in-dataset and cross-dataset) demonstrate that CORE performs favorably against state-of-the-art face forgery detection methods.
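
As an illustration of the consistency idea described in this abstract, the following is a minimal sketch, not the authors' implementation. It assumes the representations of two augmented views are plain feature vectors, that the consistency term is the mean cosine distance (1 minus cosine similarity) over a batch, and that it is added to the two classification losses with a hypothetical weight `weight`. All function names are illustrative.

```python
import math

def cosine_distance(u, v, eps=1e-12):
    """Cosine distance 1 - cos(u, v) between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v + eps)

def consistency_loss(reps_a, reps_b):
    """Mean cosine distance between paired representations of two views."""
    pairs = list(zip(reps_a, reps_b))
    return sum(cosine_distance(u, v) for u, v in pairs) / len(pairs)

def total_loss(ce_a, ce_b, reps_a, reps_b, weight=1.0):
    """Assumed combination: classification losses of both views plus the
    weighted consistency term (the weighting scheme is hypothetical)."""
    return ce_a + ce_b + weight * consistency_loss(reps_a, reps_b)

# Two views of a batch of two images, each with a 3-dimensional representation.
view_a = [[1.0, 0.0, 0.0], [0.5, 0.5, 0.0]]
view_b = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
loss = total_loss(0.3, 0.4, view_a, view_b, weight=1.0)
```

In this toy batch the first pair is identical (distance near 0) and the second pair is orthogonal (distance near 1), so the consistency term is about 0.5.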

Conditional DETR for Fast Training Convergence

Aug 19, 2021

Abstract:The recently developed DETR approach applies the transformer encoder and decoder architecture to object detection and achieves promising performance. In this paper, we address a critical issue, slow training convergence, and present a conditional cross-attention mechanism for fast DETR training. Our approach is motivated by the observation that the cross-attention in DETR relies heavily on the content embeddings for localizing the four extremities and predicting the box, which increases the need for high-quality content embeddings and thus the training difficulty. Our approach, named conditional DETR, learns a conditional spatial query from the decoder embedding for decoder multi-head cross-attention. The benefit is that through the conditional spatial query, each cross-attention head is able to attend to a band containing a distinct region, e.g., one object extremity or a region inside the object box. This narrows down the spatial range for localizing the distinct regions for object classification and box regression, thus relaxing the dependence on the content embeddings and easing the training. Empirical results show that conditional DETR converges 6.7x faster for the backbones R50 and R101 and 10x faster for stronger backbones DC5-R50 and DC5-R101. Code is available at https://github.com/Atten4Vis/ConditionalDETR.
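
The abstract does not spell out how the conditional spatial query is formed. The sketch below shows one plausible reading under stated assumptions: the spatial query is the element-wise product of a scale vector (which in a real model would be predicted from the decoder embedding by a learned network, here passed in directly) and a sinusoidal embedding of a normalized 2D reference point, and the attention logit is the sum of a content dot product and a spatial dot product. All names and the embedding details are hypothetical; the linked repository is the authoritative source.

```python
import math

def sinusoidal_embedding(x, dim, temperature=10000.0):
    """Sinusoidal embedding of a scalar x in [0, 1] into `dim` values (dim even)."""
    out = []
    for i in range(dim // 2):
        freq = temperature ** (2 * i / dim)
        angle = 2 * math.pi * x / freq
        out.extend([math.sin(angle), math.cos(angle)])
    return out

def position_embedding(point, dim):
    """Embed a normalized 2D point (x, y); half of the channels per coordinate."""
    x, y = point
    return sinusoidal_embedding(x, dim // 2) + sinusoidal_embedding(y, dim // 2)

def conditional_spatial_query(scale, reference_point):
    """Element-wise product of a scale vector (assumed to come from the
    decoder embedding) and the reference point's positional embedding."""
    p_s = position_embedding(reference_point, len(scale))
    return [s * p for s, p in zip(scale, p_s)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def attention_logit(content_q, spatial_q, content_k, spatial_k):
    """Concatenating content and spatial parts of query and key makes the
    logit a sum of a content term and a spatial term."""
    return dot(content_q, content_k) + dot(spatial_q, spatial_k)

dim = 8
spatial_q = conditional_spatial_query([1.0] * dim, (0.5, 0.5))
near_key = position_embedding((0.5, 0.5), dim)
far_key = position_embedding((0.1, 0.9), dim)
content = [0.0] * dim  # zero content isolates the spatial term
near_logit = attention_logit(content, spatial_q, content, near_key)
far_logit = attention_logit(content, spatial_q, content, far_key)
```

With a uniform scale vector and zeroed content, the key at the reference point receives the highest spatial logit; a learned, non-uniform scale vector would reweight channels and so shift which region a head attends to.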

Bottom-Up Human Pose Estimation by Ranking Heatmap-Guided Adaptive Keypoint Estimates

Jun 28, 2020

Abstract:The typical bottom-up human pose estimation framework includes two stages, keypoint detection and grouping. Most existing works focus on developing grouping algorithms, e.g., associative embedding, and pixel-wise keypoint regression that we adopt in our approach. We present several schemes that have rarely or insufficiently been studied for improving keypoint detection and grouping (keypoint regression) performance. First, we exploit the keypoint heatmaps for pixel-wise keypoint regression, rather than treating the two separately, to improve keypoint regression. Second, we adopt a pixel-wise spatial transformer network to learn adaptive representations for handling scale and orientation variance, further improving keypoint regression quality. Last, we present a joint shape and heatvalue scoring scheme to promote the estimated poses that are more likely to be true poses. Together with the tradeoff heatmap estimation loss, which balances the background and keypoint pixels and thus improves heatmap estimation quality, we obtain state-of-the-art bottom-up human pose estimation results. Code is available at https://github.com/HRNet/HRNet-Bottom-up-Pose-Estimation.
