Chu Guo

Learning Generalizable Features for Tibial Plateau Fracture Segmentation Using Masked Autoencoder and Limited Annotations

Feb 05, 2025

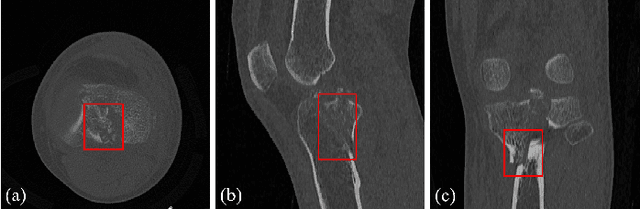

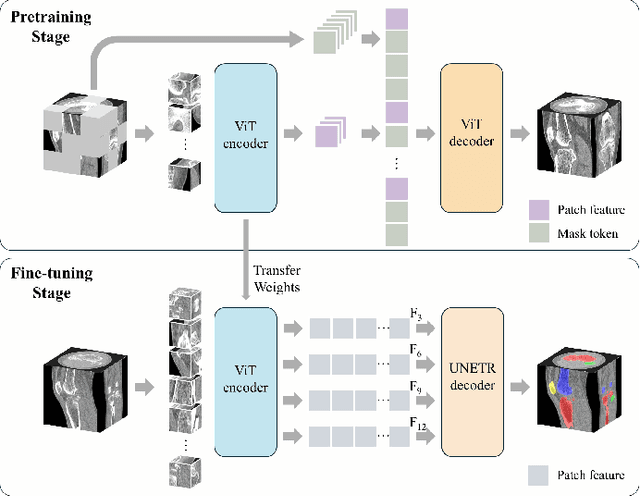

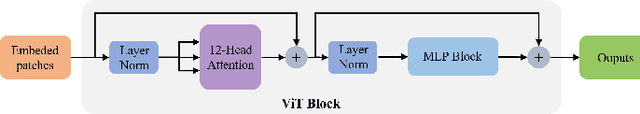

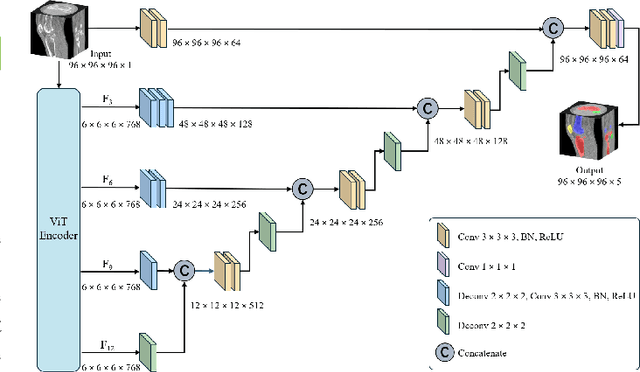

Abstract:Accurate automated segmentation of tibial plateau fractures (TPF) from computed tomography (CT) requires large amounts of annotated data to train deep learning models, but obtaining such annotations presents unique challenges. The process demands expert knowledge to identify diverse fracture patterns, assess severity, and account for individual anatomical variations, making the annotation process highly time-consuming and expensive. Although semi-supervised learning methods can utilize unlabeled data, existing approaches often struggle with the complexity and variability of fracture morphologies, as well as limited generalizability across datasets. To tackle these issues, we propose an effective training strategy based on masked autoencoder (MAE) for the accurate TPF segmentation in CT. Our method leverages MAE pretraining to capture global skeletal structures and fine-grained fracture details from unlabeled data, followed by fine-tuning with a small set of labeled data. This strategy reduces the dependence on extensive annotations while enhancing the model's ability to learn generalizable and transferable features. The proposed method is evaluated on an in-house dataset containing 180 CT scans with TPF. Experimental results demonstrate that our method consistently outperforms semi-supervised methods, achieving an average Dice similarity coefficient (DSC) of 95.81%, average symmetric surface distance (ASSD) of 1.91mm, and Hausdorff distance (95HD) of 9.42mm with only 20 annotated cases. Moreover, our method exhibits strong transferability when applying to another public pelvic CT dataset with hip fractures, highlighting its potential for broader applications in fracture segmentation tasks.

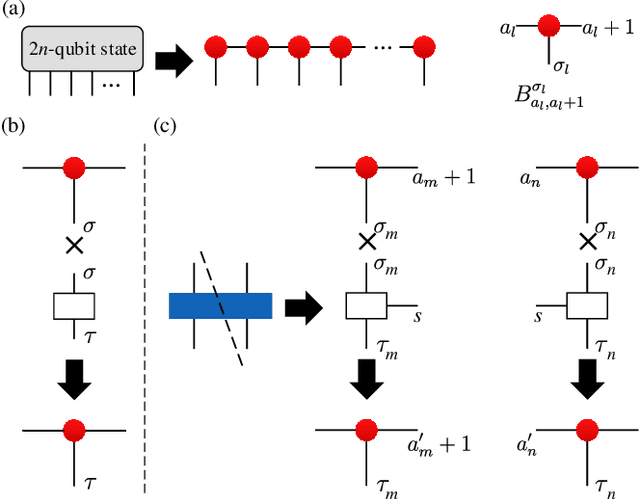

Tensor-Networks-based Learning of Probabilistic Cellular Automata Dynamics

Apr 17, 2024

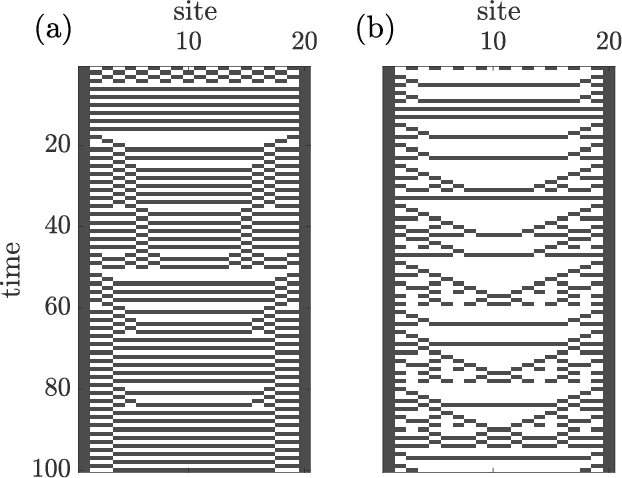

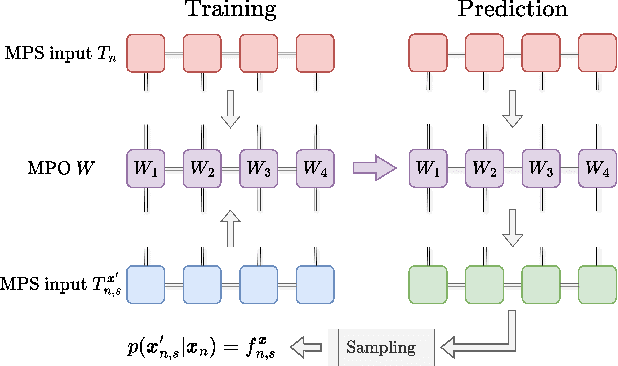

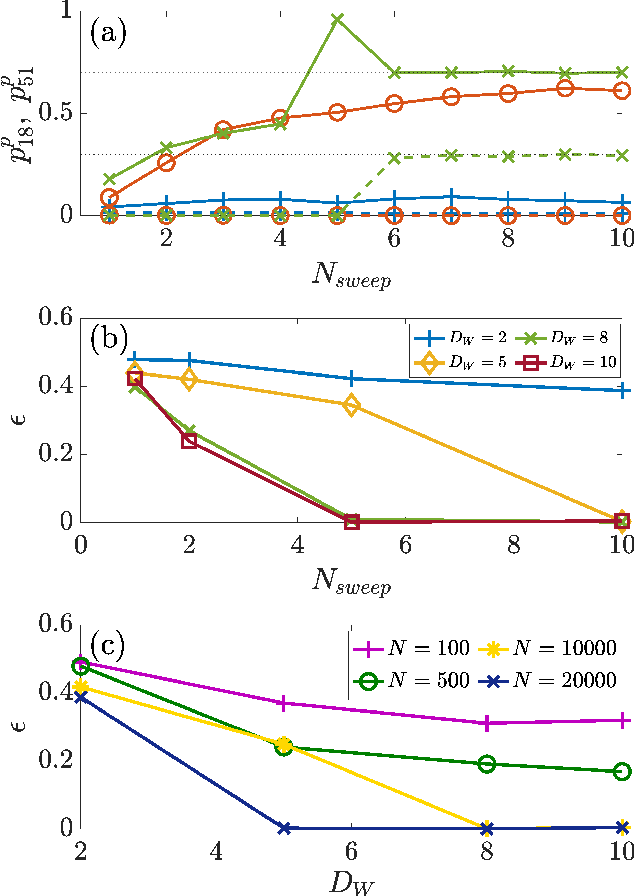

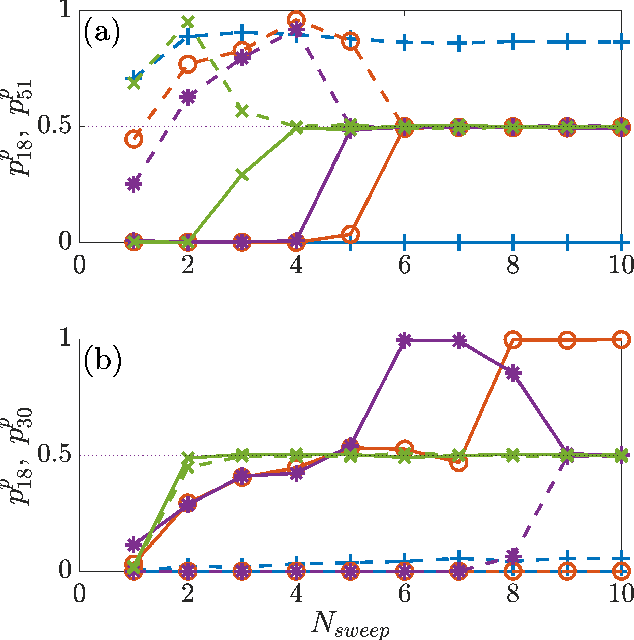

Abstract:Algorithms developed to solve many-body quantum problems, like tensor networks, can turn into powerful quantum-inspired tools to tackle problems in the classical domain. In this work, we focus on matrix product operators, a prominent numerical technique to study many-body quantum systems, especially in one dimension. It has been previously shown that such a tool can be used for classification, learning of deterministic sequence-to-sequence processes and of generic quantum processes. We further develop a matrix product operator algorithm to learn probabilistic sequence-to-sequence processes and apply this algorithm to probabilistic cellular automata. This new approach can accurately learn probabilistic cellular automata processes in different conditions, even when the process is a probabilistic mixture of different chaotic rules. In addition, we find that the ability to learn these dynamics is a function of the bit-wise difference between the rules and whether one is much more likely than the other.

NNQS-Transformer: an Efficient and Scalable Neural Network Quantum States Approach for Ab initio Quantum Chemistry

Jul 01, 2023

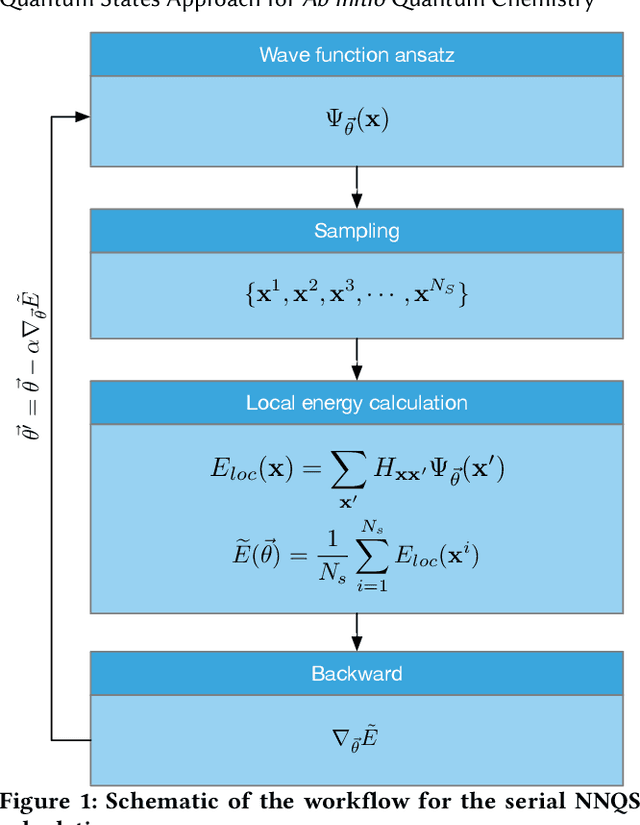

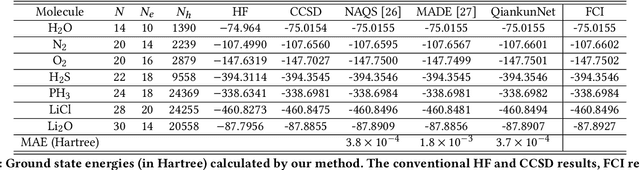

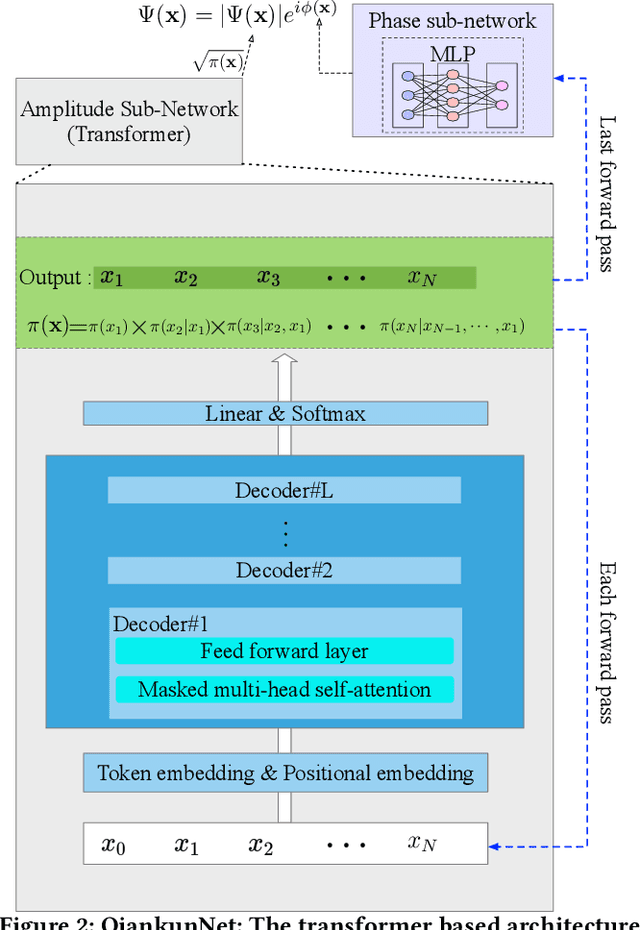

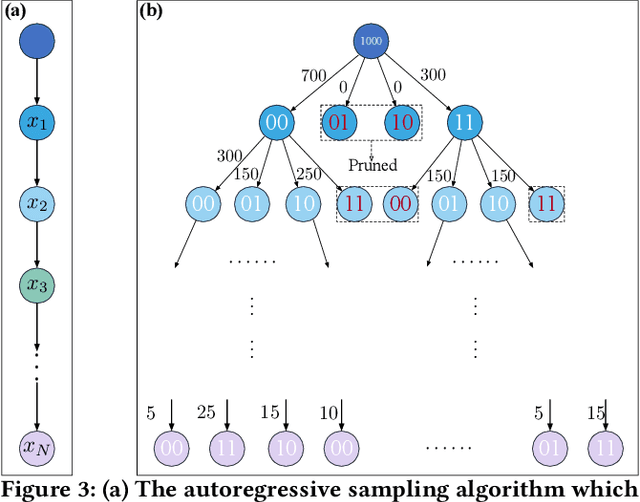

Abstract:Neural network quantum state (NNQS) has emerged as a promising candidate for quantum many-body problems, but its practical applications are often hindered by the high cost of sampling and local energy calculation. We develop a high-performance NNQS method for \textit{ab initio} electronic structure calculations. The major innovations include: (1) A transformer based architecture as the quantum wave function ansatz; (2) A data-centric parallelization scheme for the variational Monte Carlo (VMC) algorithm which preserves data locality and well adapts for different computing architectures; (3) A parallel batch sampling strategy which reduces the sampling cost and achieves good load balance; (4) A parallel local energy evaluation scheme which is both memory and computationally efficient; (5) Study of real chemical systems demonstrates both the superior accuracy of our method compared to state-of-the-art and the strong and weak scalability for large molecular systems with up to $120$ spin orbitals.

Near-Term Quantum Computing Techniques: Variational Quantum Algorithms, Error Mitigation, Circuit Compilation, Benchmarking and Classical Simulation

Nov 17, 2022Abstract:Quantum computing is a game-changing technology for global academia, research centers and industries including computational science, mathematics, finance, pharmaceutical, materials science, chemistry and cryptography. Although it has seen a major boost in the last decade, we are still a long way from reaching the maturity of a full-fledged quantum computer. That said, we will be in the Noisy-Intermediate Scale Quantum (NISQ) era for a long time, working on dozens or even thousands of qubits quantum computing systems. An outstanding challenge, then, is to come up with an application that can reliably carry out a nontrivial task of interest on the near-term quantum devices with non-negligible quantum noise. To address this challenge, several near-term quantum computing techniques, including variational quantum algorithms, error mitigation, quantum circuit compilation and benchmarking protocols, have been proposed to characterize and mitigate errors, and to implement algorithms with a certain resistance to noise, so as to enhance the capabilities of near-term quantum devices and explore the boundaries of their ability to realize useful applications. Besides, the development of near-term quantum devices is inseparable from the efficient classical simulation, which plays a vital role in quantum algorithm design and verification, error-tolerant verification and other applications. This review will provide a thorough introduction of these near-term quantum computing techniques, report on their progress, and finally discuss the future prospect of these techniques, which we hope will motivate researchers to undertake additional studies in this field.

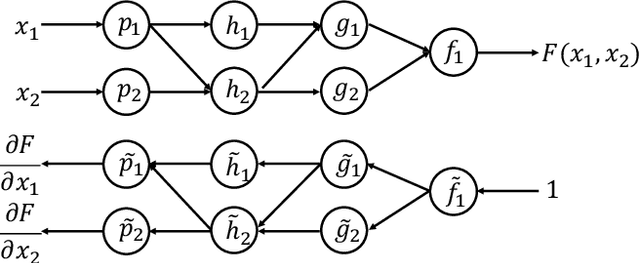

A scheme for automatic differentiation of complex loss functions

Mar 02, 2020

Abstract:For a real function, automatic differentiation is such a standard algorithm used to efficiently compute its gradient, that it is integrated in various neural network frameworks. However, despite the recent advances in using complex functions in machine learning and the well-established usefulness of automatic differentiation, the support of automatic differentiation for complex functions is not as well-established and widespread as for real functions. In this work we propose an efficient and seamless scheme to implement automatic differentiation for complex functions, which is a compatible generalization of the current scheme for real functions. This scheme can significantly simplify the implementation of neural networks which use complex numbers.

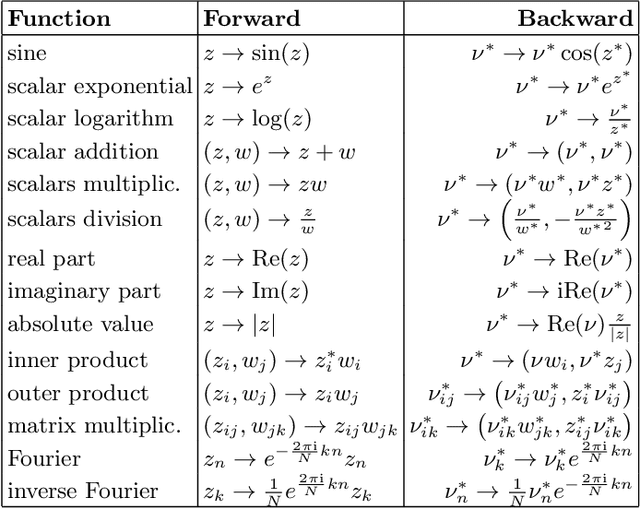

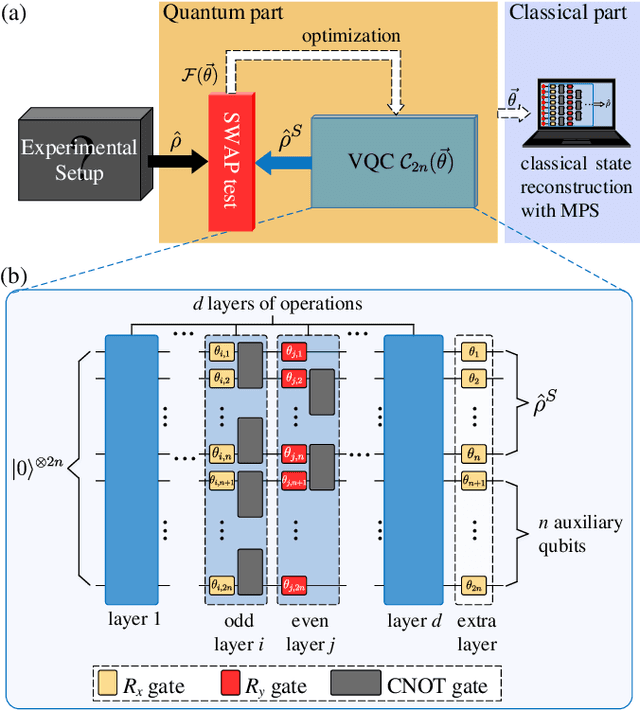

Variational Quantum Circuits for Quantum State Tomography

Dec 16, 2019

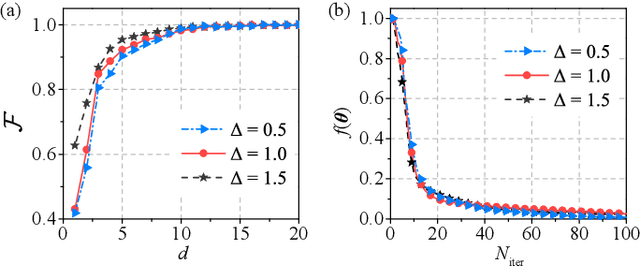

Abstract:We propose a hybrid quantum-classical algorithm for quantum state tomography. Given an unknown quantum state, a quantum machine learning algorithm is used to maximize the fidelity between the output of a variational quantum circuit and this state. The number of parameters of the variational quantum circuit grows linearly with the number of qubits and the circuit depth. After that, a subsequent classical algorithm is used to reconstruct the unknown quantum state. We demonstrate our method by performing numerical simulations to reconstruct the ground state of a one-dimensional quantum spin chain, using a variational quantum circuit simulator. Our method is suitable for near-term quantum computing platforms, and could be used for relatively large-scale quantum state tomography for experimentally relevant quantum states.

IPOD: Corpus of 190,000 Industrial Occupations

Oct 22, 2019

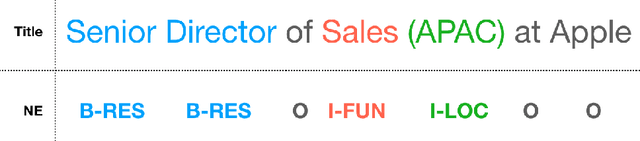

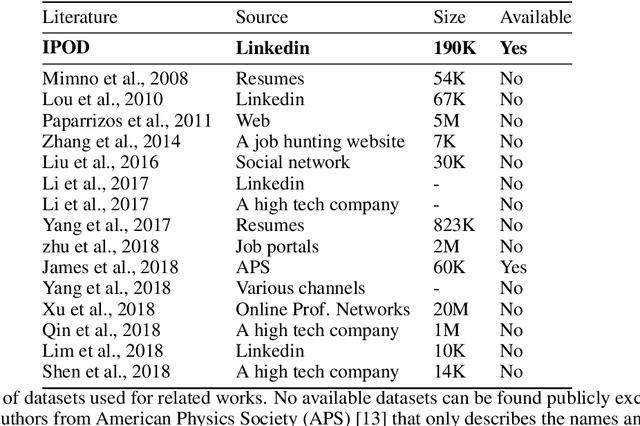

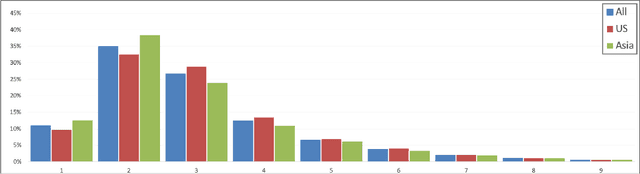

Abstract:Job titles are the most fundamental building blocks for occupational data mining tasks, such as Career Modelling and Job Recommendation. However, there are no publicly available dataset to support such efforts. In this work, we present the Industrial and Professional Occupations Dataset (IPOD), which is a comprehensive corpus that consists of over 190,000 job titles crawled from over 56,000 profiles from Linkedin. To the best of our knowledge, IPOD is the first dataset released for industrial occupations mining. We use a knowledge-based approach for sequence tagging, creating a gazzetteer with domain-specific named entities tagged by 3 experts. All title NE tags are populated by the gazetteer using BIOES scheme. Finally, We develop 4 baseline models for the dataset on NER task with several models, including Linear Regression, CRF, LSTM and the state-of-the-art bi-directional LSTM-CRF. Both CRF and LSTM-CRF outperform human in both exact-match accuracy and f1 scores.

Crowd-aware itinerary recommendation: a game-theoretic approach to optimize social welfare

Oct 06, 2019

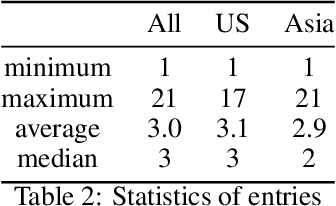

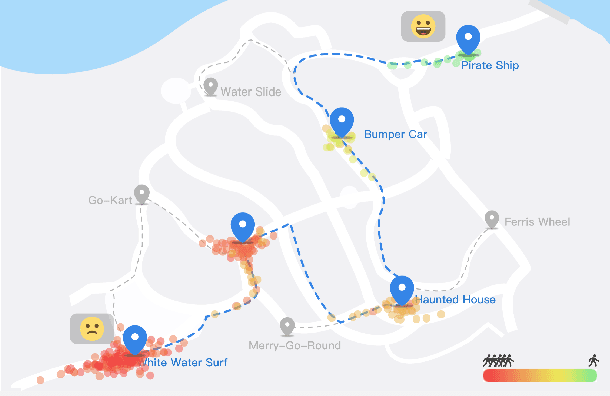

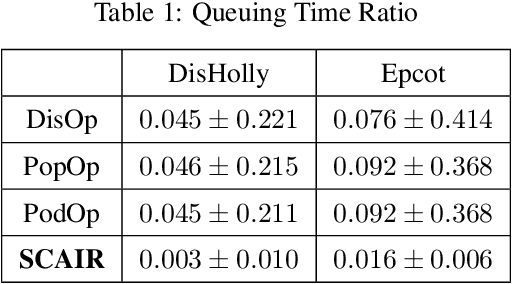

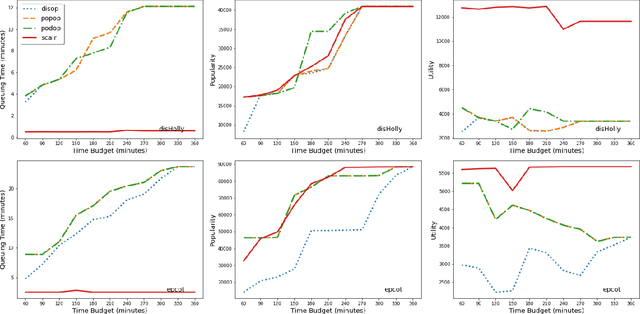

Abstract:The demand for Itinerary Planning grows rapidly in recent years as the economy and standard of living are improving globally. Nonetheless, itinerary recommendation remains a complex and difficult task, especially for one that is queuing time- and crowd-aware. This difficulty is due to the large amount of parameters involved, i.e., attraction popularity, queuing time, walking time, operating hours, etc. Many recent or existing works adopt a data-driven approach and propose solutions with single-person perspectives, but do not address real-world problems as a result of natural crowd behavior, such as the Selfish Routing problem, which describes the consequence of ineffective network and sub-optimal social outcome by leaving agents to decide freely. In this work, we propose the Strategic and Crowd-Aware Itinerary Recommendation (SCAIR) algorithm which takes a game-theoretic approach to address the Selfish Routing problem and optimize social welfare in real-world situations. To address the NP-hardness of the social welfare optimization problem, we further propose a Markov Decision Process (MDP) approach which enables our simulations to be carried out in poly-time. We then use real-world data to evaluate the proposed algorithm, with benchmarks of two intuitive strategies commonly adopted in real life, and a recent algorithm published in the literature. Our simulation results highlight the existence of the Selfish Routing problem and show that SCAIR outperforms the benchmarks in handling this issue with real-world data.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge