Chaoran Huang

Beyond Terabit/s Integrated Neuromorphic Photonic Processor for DSP-Free Optical Interconnects

Apr 21, 2025

Abstract:The rapid expansion of generative AI drives unprecedented demands for high-performance computing. Training large-scale AI models now requires vast interconnected GPU clusters across multiple data centers. Multi-scale AI training and inference demand uniform, ultra-low latency, and energy-efficient links to enable massive GPUs to function as a single cohesive unit. However, traditional electrical and optical interconnects, relying on conventional digital signal processors (DSPs) for signal distortion compensation, increasingly fail to meet these stringent requirements. To overcome these limitations, we present an integrated neuromorphic optical signal processor (OSP) that leverages deep reservoir computing and achieves DSP-free, all-optical, real-time processing. Experimentally, our OSP achieves a 100 Gbaud PAM4 per lane, 1.6 Tbit/s data center interconnect over a 5 km optical fiber in the C-band (equivalent to over 80 km in the O-band), far exceeding the reach of state-of-the-art DSP solutions, which are fundamentally constrained by chromatic dispersion in IMDD systems. Simultaneously, it reduces processing latency by four orders of magnitude and energy consumption by three orders of magnitude. Unlike DSPs, which introduce increased latency at high data rates, our OSP maintains consistent, ultra-low latency regardless of data rate scaling, making it ideal for future optical interconnects. Moreover, the OSP retains full optical field information for better impairment compensation and adapts to various modulation formats, data rates, and wavelengths. Fabricated using a mature silicon photonic process, the OSP can be monolithically integrated with silicon photonic transceivers, enhancing the compactness and reliability of all-optical interconnects. This research provides a highly scalable, energy-efficient, and high-speed solution, paving the way for next-generation AI infrastructure.
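As a rough check of the headline figures in the abstract above, the following sketch works out the per-lane bit rate and the lane count implied by the aggregate rate. It assumes ideal PAM4 (2 bits per symbol) with no FEC or framing overhead. The lane count is inferred from this arithmetic and is not stated in the abstract, and all function and variable names are illustrative.

```python
import math


def bits_per_symbol(levels: int) -> float:
    """Bits carried by one symbol of an M-level PAM signal."""
    return math.log2(levels)


def lane_rate_gbps(symbol_rate_gbaud: float, levels: int) -> float:
    """Raw line rate of one lane in Gbit/s (overhead ignored, an assumption)."""
    return symbol_rate_gbaud * bits_per_symbol(levels)


# Figures quoted in the abstract: 100 Gbaud PAM4 per lane, 1.6 Tbit/s aggregate.
per_lane_gbps = lane_rate_gbps(100.0, 4)
aggregate_gbps = 1600.0

# Lane count implied by the two figures (inferred, not stated in the abstract).
implied_lanes = aggregate_gbps / per_lane_gbps
```

Under these assumptions each lane carries 200 Gbit/s raw, so the quoted aggregate corresponds to eight such lanes.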

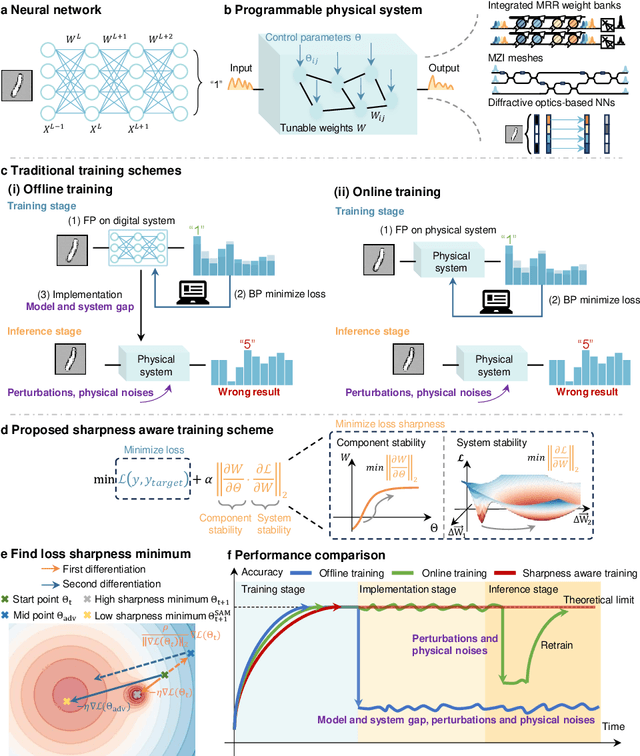

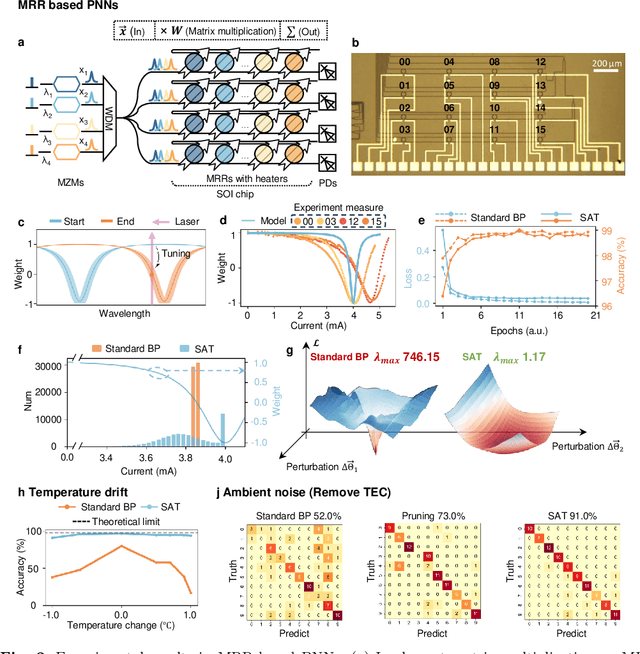

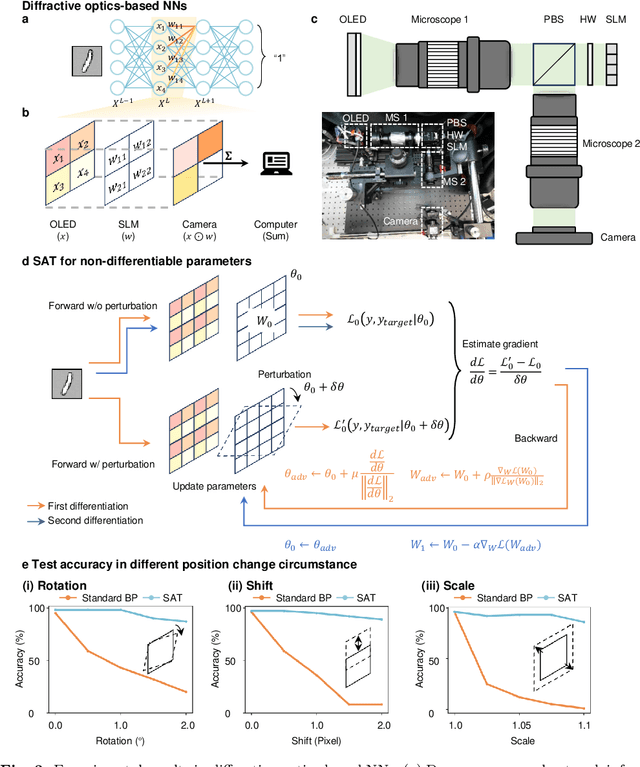

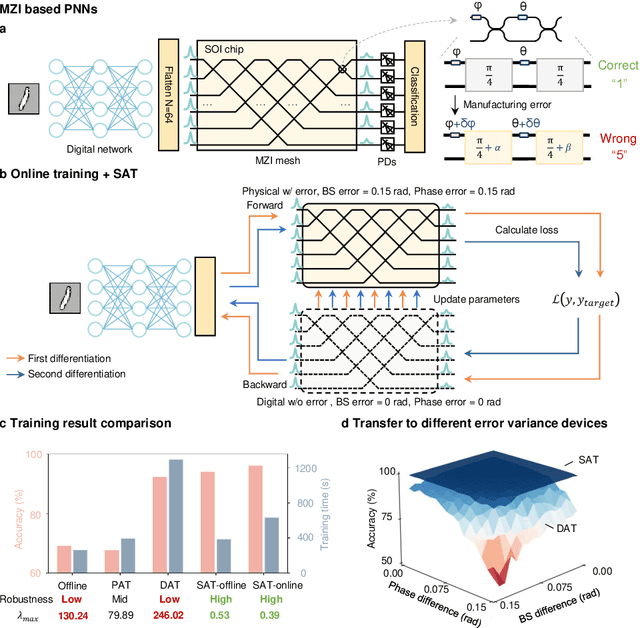

Perfecting Imperfect Physical Neural Networks with Transferable Robustness using Sharpness-Aware Training

Nov 19, 2024

Abstract:AI models are essential in science and engineering, but recent advances are pushing the limits of traditional digital hardware. To address these limitations, physical neural networks (PNNs), which use physical substrates for computation, have gained increasing attention. However, developing effective training methods for PNNs remains a significant challenge. Current approaches, whether offline or online, suffer from significant accuracy loss. Offline training is hindered by imprecise modeling, while online training yields device-specific models that cannot be transferred to other devices due to manufacturing variances. Both methods face challenges from perturbations after deployment, such as thermal drift or alignment errors, which invalidate trained models and require retraining. Here, we address the challenges with both offline and online training through a novel technique called Sharpness-Aware Training (SAT), which leverages the geometry of the loss landscape to tackle the problems in training physical systems. SAT enables accurate training using efficient backpropagation algorithms, even with imprecise models. PNNs trained by SAT offline even outperform those trained online, despite modeling and fabrication errors. SAT also overcomes online training limitations by enabling reliable transfer of models between devices. Finally, SAT is highly resilient to perturbations after deployment, allowing PNNs to continuously operate accurately under perturbations without retraining. We demonstrate SAT across three types of PNNs, showing it is universally applicable, regardless of whether the models are explicitly known. This work offers a transformative, efficient approach to training PNNs, addressing critical challenges in analog computing and enabling real-world deployment.
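The abstract above does not give SAT's update rule. To illustrate the general idea of using loss-landscape geometry, the sketch below shows a generic sharpness-aware update in the style of sharpness-aware minimization: the gradient is evaluated at a nearby worst-case point and then applied to the original weights. This is an assumption about the family of methods involved, not the authors' algorithm. The toy quadratic loss, `sam_step`, `rho`, and `lr` are all hypothetical.

```python
import numpy as np


def sam_step(w, grad_fn, lr=0.05, rho=0.05):
    """One generic sharpness-aware update (illustrative, not the paper's SAT)."""
    g = grad_fn(w)
    # Step of length rho along the gradient direction: a first-order
    # approximation of the worst-case perturbation in a small ball around w.
    eps = rho * g / (np.linalg.norm(g) + 1e-12)
    # Gradient at the perturbed point, applied to the original weights.
    g_sharp = grad_fn(w + eps)
    return w - lr * g_sharp


# Toy quadratic loss standing in for a (possibly imprecise) model of a device.
A = np.diag([1.0, 10.0])


def loss(w):
    return 0.5 * w @ A @ w


def grad(w):
    return A @ w


w = np.array([1.0, 1.0])
initial_loss = loss(w)
for _ in range(200):
    w = sam_step(w, grad)
final_loss = loss(w)
```

Because the update uses the gradient at a perturbed point, the weights settle in a small neighborhood of the minimum (of size on the order of `rho`) instead of converging to it exactly. The intuition is that solutions found this way sit in flatter regions and tolerate small parameter errors.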

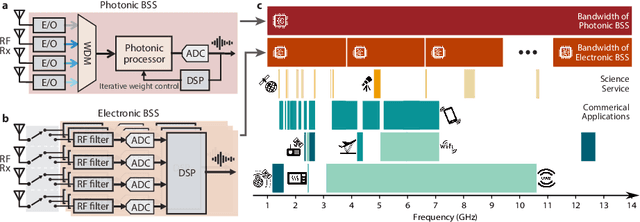

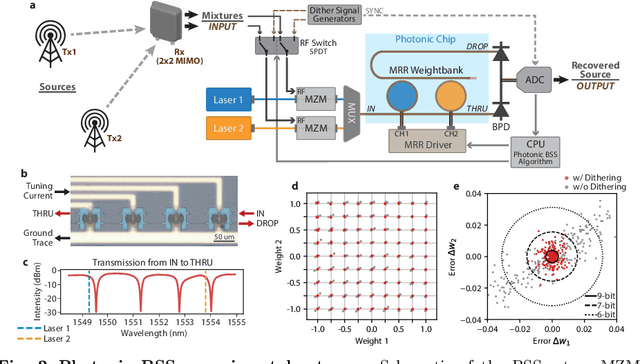

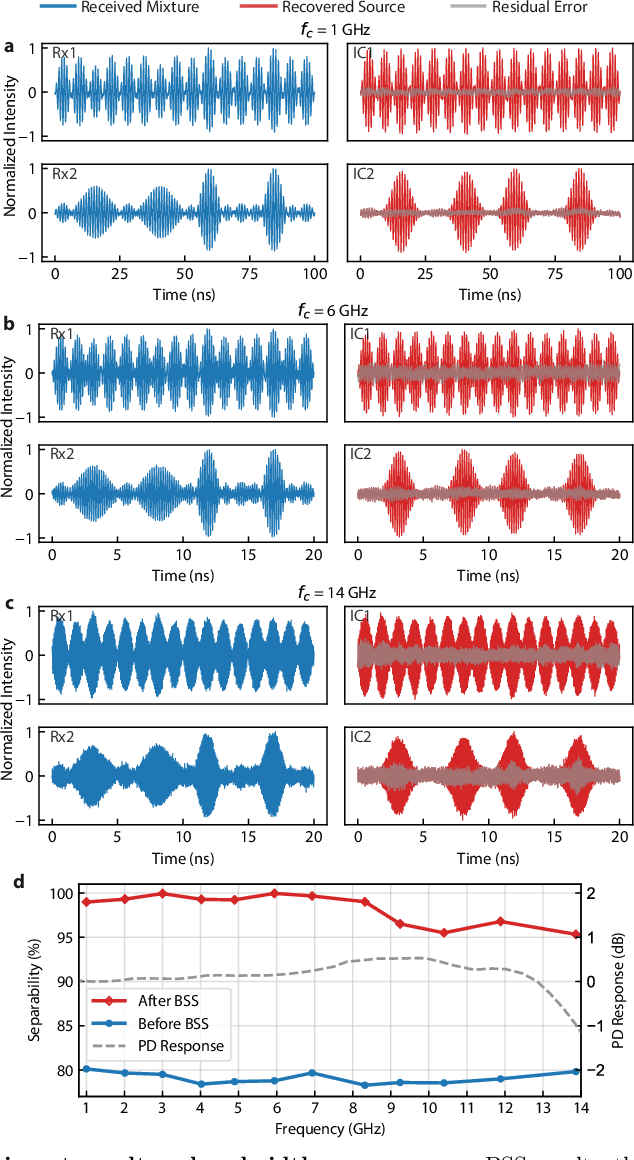

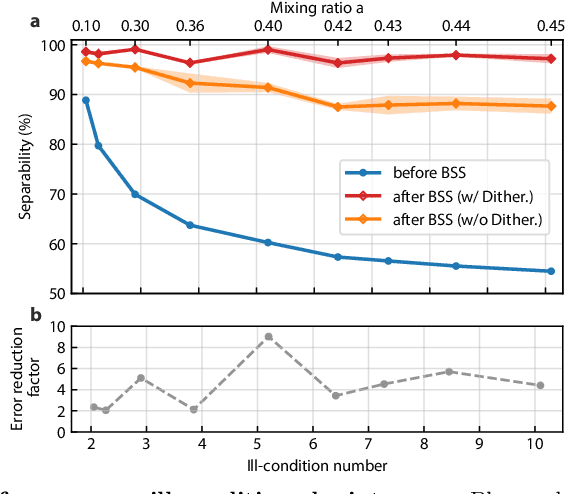

Wideband physical layer cognitive radio with an integrated photonic processor for blind source separation

May 17, 2022

Abstract:The expansion of telecommunications incurs increasingly severe crosstalk and interference, and a physical layer cognitive method, called blind source separation (BSS), can effectively address these issues. BSS requires minimal prior knowledge to recover signals from their mixtures, agnostic to carrier frequency, signal format, and channel conditions. However, previous BSS implementations in electronics did not achieve this versatility due to the inherently narrow bandwidth of radio-frequency (RF) components, the high energy consumption of digital signal processors (DSPs), and their shared weakness of low scalability. Here, we report a photonic BSS approach that inherits the advantages of optical devices and can fully fulfill its "blindness" aspect. Using a microring weight bank integrated on a photonic chip, we demonstrate energy-efficient, WDM-scalable BSS across 13.8 GHz of bandwidth, covering many standard frequency bands. Our system also has high (9-bit) accuracy for signal demixing thanks to a recently developed dithering control method, resulting in higher signal-to-interference ratios (SIR) even for ill-conditioned mixtures.
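For readers unfamiliar with BSS, the sketch below shows a minimal software analogue for two sources: whiten the mixtures, then search for the rotation that makes the outputs most non-Gaussian, measured by kurtosis. It is a textbook-style illustration under stated assumptions, not the photonic method of the paper. The test signals, the mixing matrix `A`, and all function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5000
t = np.arange(n)

# Two independent, non-Gaussian sources (illustrative test signals).
S = np.vstack([np.sin(2 * np.pi * 0.013 * t), rng.uniform(-1.0, 1.0, n)])

# Mixing matrix, unknown to the separation routine.
A = np.array([[1.0, 0.6], [0.4, 1.0]])
X = A @ S


def whiten(X):
    """Remove the mean and decorrelate the mixtures to unit variance."""
    Xc = X - X.mean(axis=1, keepdims=True)
    cov = Xc @ Xc.T / Xc.shape[1]
    vals, vecs = np.linalg.eigh(cov)
    return np.diag(vals ** -0.5) @ vecs.T @ Xc


def excess_kurtosis(y):
    """Excess kurtosis, zero for a Gaussian signal."""
    y = (y - y.mean()) / y.std()
    return np.mean(y ** 4) - 3.0


def rotation(theta):
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])


def separate(X, n_angles=360):
    """Grid-search the rotation of the whitened data that maximizes non-Gaussianity."""
    Z = whiten(X)
    angles = np.linspace(0.0, np.pi / 2, n_angles, endpoint=False)
    best = max(
        angles,
        key=lambda th: sum(abs(excess_kurtosis(r)) for r in rotation(th) @ Z),
    )
    return rotation(best) @ Z


Y = separate(X)

# Absolute correlation between each true source (rows) and each output (columns).
C = np.abs(np.corrcoef(np.vstack([S, Y]))[:2, 2:])
```

The recovered signals match the sources only up to ordering, sign, and scale, which is the usual ambiguity in BSS. This is why the check uses absolute correlations instead of a direct comparison.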

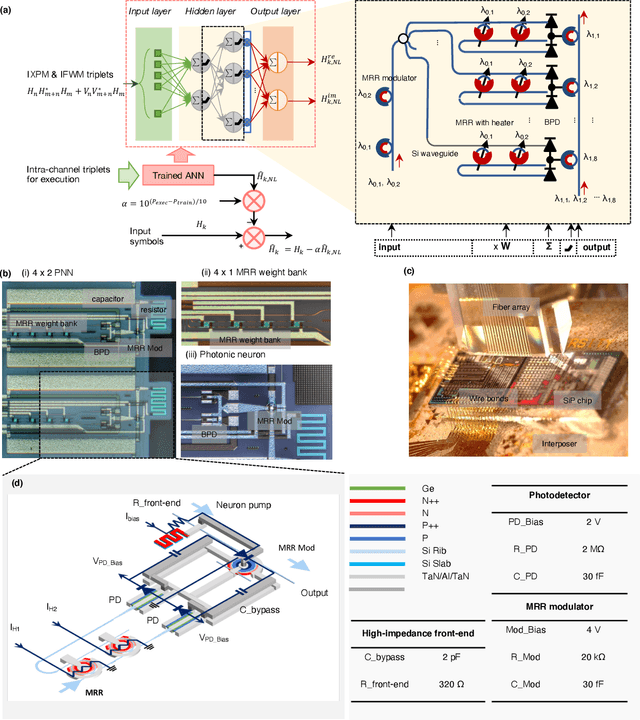

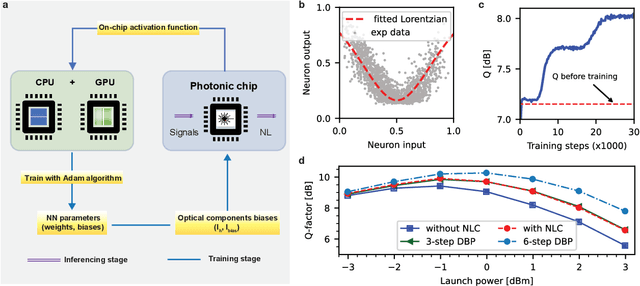

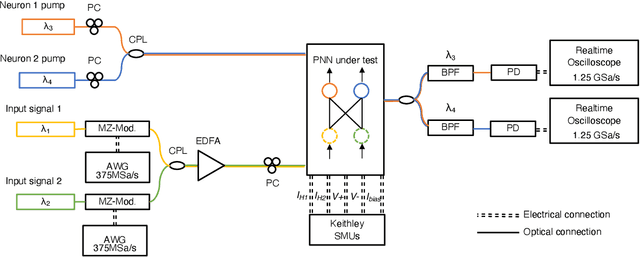

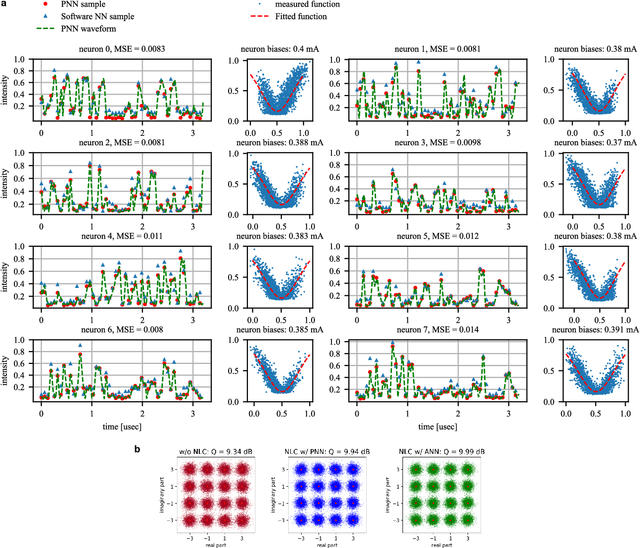

Silicon photonic-electronic neural network for fibre nonlinearity compensation

Oct 11, 2021

Abstract:In optical communication systems, fibre nonlinearity is the major obstacle in increasing the transmission capacity. Typically, digital signal processing techniques and hardware are used to deal with optical communication signals, but increasing speed and computational complexity create challenges for such approaches. Highly parallel, ultrafast neural networks using photonic devices have the potential to ease the requirements placed on the digital signal processing circuits by processing the optical signals in the analogue domain. Here we report a silicon photonic-electronic neural network for solving fibre nonlinearity compensation of submarine optical fibre transmission systems. Our approach uses a photonic neural network based on wavelength-division multiplexing built on a CMOS-compatible silicon photonic platform. We show that the platform can be used to compensate optical fibre nonlinearities and improve the signal quality (Q)-factor in a 10,080 km submarine fibre communication system. The Q-factor improvement is comparable to that of a software-based neural network implemented on a 32-bit graphic processing unit-assisted workstation. Our reconfigurable photonic-electronic integrated neural network promises to address pressing challenges in high-speed intelligent signal processing.

A Photonic-Circuits-Inspired Compact Network: Toward Real-Time Wireless Signal Classification at the Edge

Jun 25, 2021

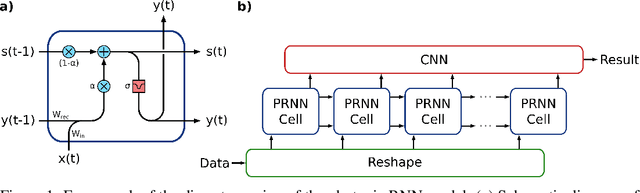

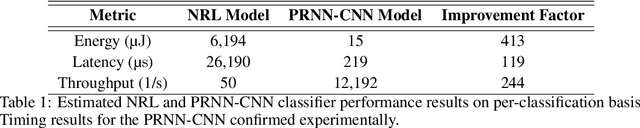

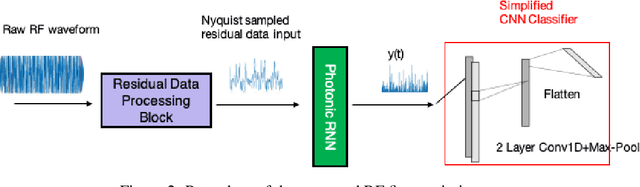

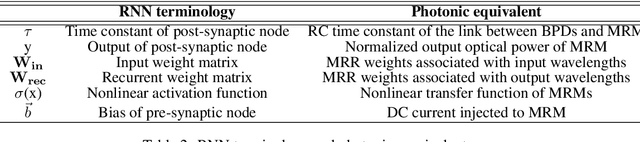

Abstract:Machine learning (ML) methods are ubiquitous in wireless communication systems and have proven powerful for applications including radio-frequency (RF) fingerprinting, automatic modulation classification, and cognitive radio. However, the large size of ML models can make them difficult to implement on edge devices for latency-sensitive downstream tasks. In wireless communication systems, ML data processing at a sub-millisecond scale will enable real-time network monitoring to improve security and prevent infiltration. In addition, compact and integratable hardware platforms which can implement ML models at the chip scale will find much broader application to wireless communication networks. Toward real-time wireless signal classification at the edge, we propose a novel compact deep network that consists of a photonic-hardware-inspired recurrent neural network model in combination with a simplified convolutional classifier, and we demonstrate its application to the identification of RF emitters by their random transmissions. With the proposed model, we achieve 96.32% classification accuracy over a set of 30 identical ZigBee devices when using 50 times fewer training parameters than an existing state-of-the-art CNN classifier. Thanks to the large reduction in network size, we demonstrate real-time RF fingerprinting with 0.219 ms latency using a small-scale FPGA board, the PYNQ-Z1.

Recommender Systems for the Internet of Things: A Survey

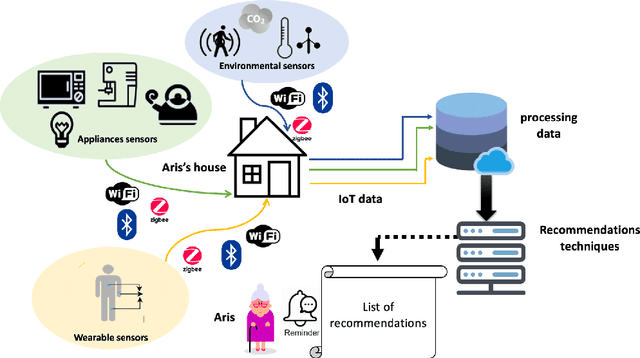

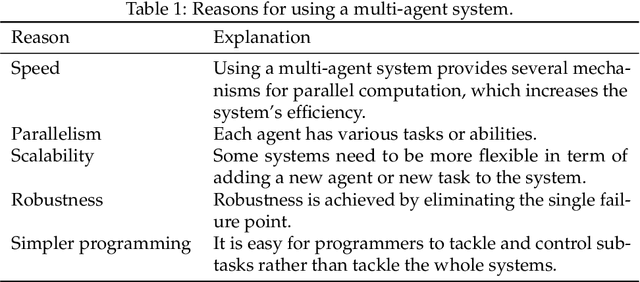

Jul 14, 2020

Abstract:Recommendation represents a vital stage in developing and promoting the benefits of the Internet of Things (IoT). Traditional recommender systems fail to exploit ever-growing, dynamic, and heterogeneous IoT data. This paper presents a comprehensive review of state-of-the-art recommender systems, as well as related techniques and applications in the vibrant field of IoT. We discuss several limitations of applying recommendation systems to IoT and propose a reference framework for comparing existing studies to guide future research and practices.

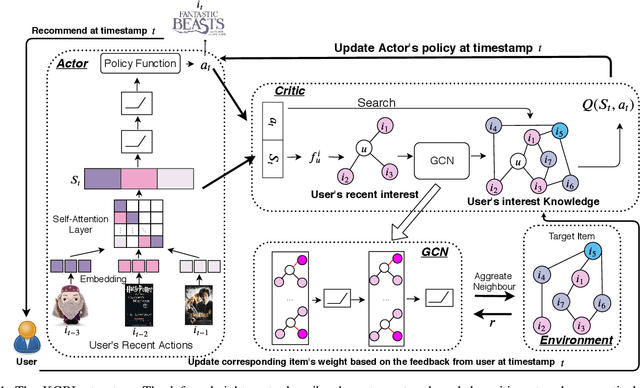

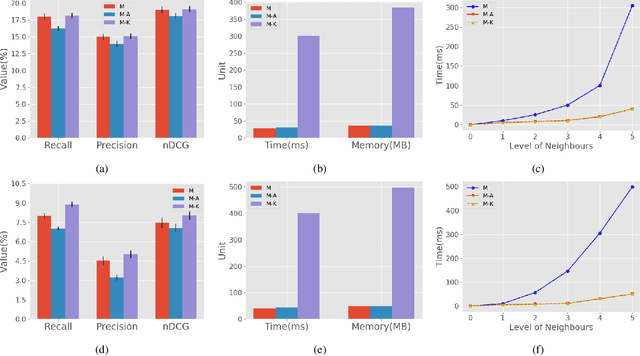

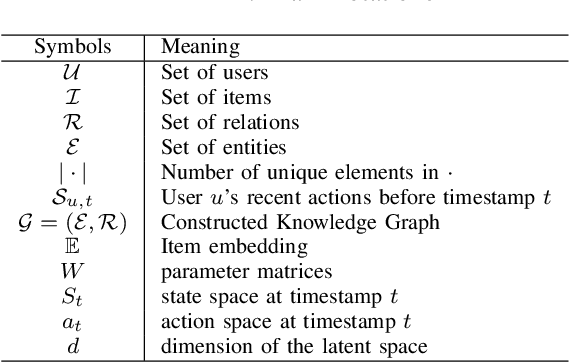

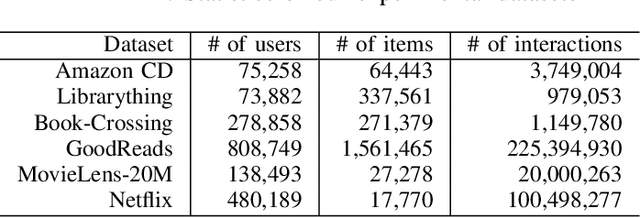

Knowledge-guided Deep Reinforcement Learning for Interactive Recommendation

Apr 17, 2020

Abstract:Interactive recommendation aims to learn from dynamic interactions between items and users to achieve responsiveness and accuracy. Reinforcement learning is inherently advantageous for coping with dynamic environments and thus has attracted increasing attention in interactive recommendation research. Inspired by knowledge-aware recommendation, we proposed Knowledge-Guided deep Reinforcement learning (KGRL) to harness the advantages of both reinforcement learning and knowledge graphs for interactive recommendation. This model is implemented upon the actor-critic network framework. It maintains a local knowledge network to guide decision-making and employs the attention mechanism to capture long-term semantics between items. We have conducted comprehensive experiments in a simulated online environment with six public real-world datasets and demonstrated the superiority of our model over several state-of-the-art methods.

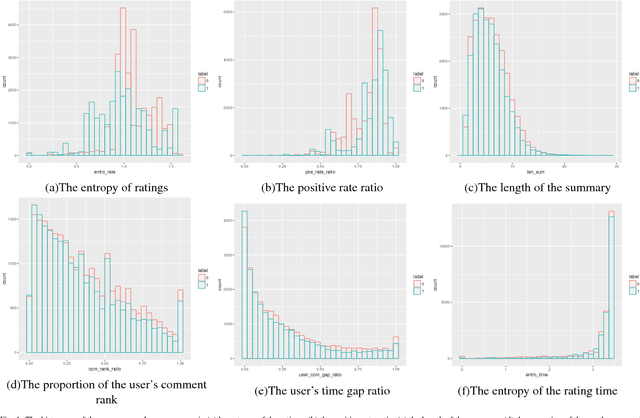

Opinion Fraud Detection via Neural Autoencoder Decision Forest

May 09, 2018

Abstract:Online reviews play an important role in influencing buyers' daily purchase decisions. However, fake and meaningless reviews, which cannot reflect users' genuine purchase experience and opinions, widely exist on the Web and pose great challenges for users to make the right choices. Therefore, it is desirable to build a fair model that evaluates the quality of products by distinguishing spamming reviews. We present an end-to-end trainable unified model to leverage the appealing properties from Autoencoder and random forest. A stochastic decision tree model is implemented to guide the global parameter learning process. Extensive experiments were conducted on a large Amazon review dataset. The proposed model consistently outperforms a series of compared methods.

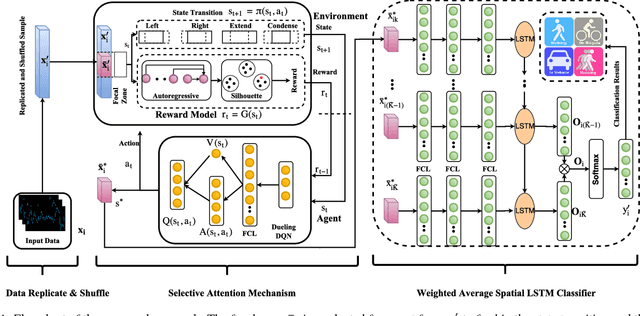

Multi-modality Sensor Data Classification with Selective Attention

May 01, 2018

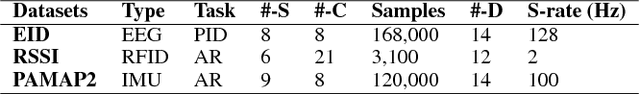

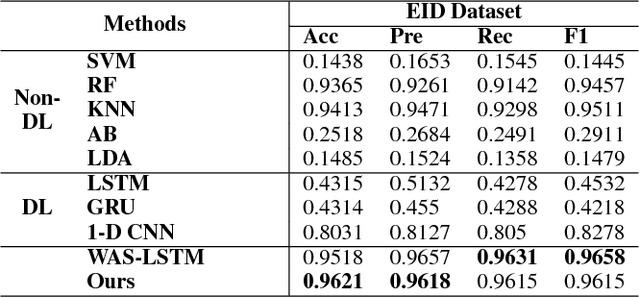

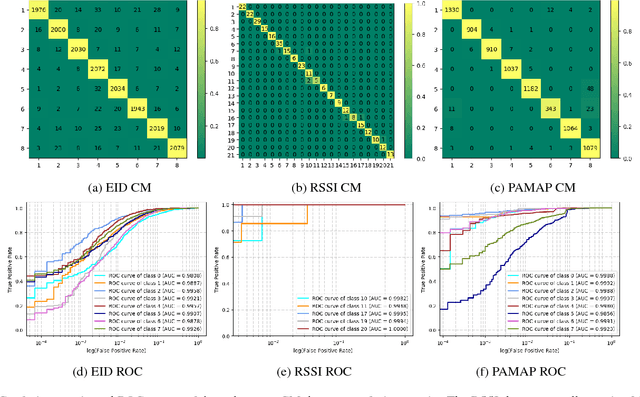

Abstract:Multimodal wearable sensor data classification plays an important role in ubiquitous computing and has a wide range of applications in scenarios from healthcare to entertainment. However, most existing work in this field employs domain-specific approaches and is thus ineffective in complex situations where multi-modality sensor data are collected. Moreover, the wearable sensor data are less informative than the conventional data such as texts or images. In this paper, to improve the adaptability of such classification methods across different application domains, we turn this classification task into a game and apply a deep reinforcement learning scheme to deal with complex situations dynamically. Additionally, we introduce a selective attention mechanism into the reinforcement learning scheme to focus on the crucial dimensions of the data. This mechanism helps to capture extra information from the signal and thus it is able to significantly improve the discriminative power of the classifier. We carry out several experiments on three wearable sensor datasets and demonstrate the competitive performance of the proposed approach compared to several state-of-the-art baselines.
