Multi-modality Sensor Data Classification with Selective Attention


May 01, 2018

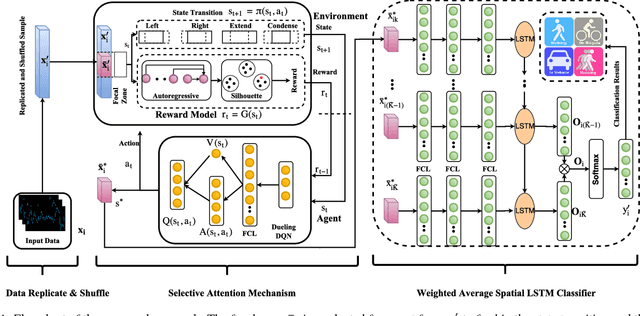

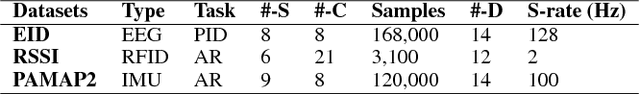

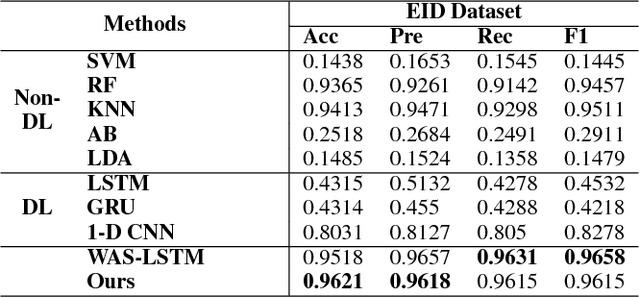

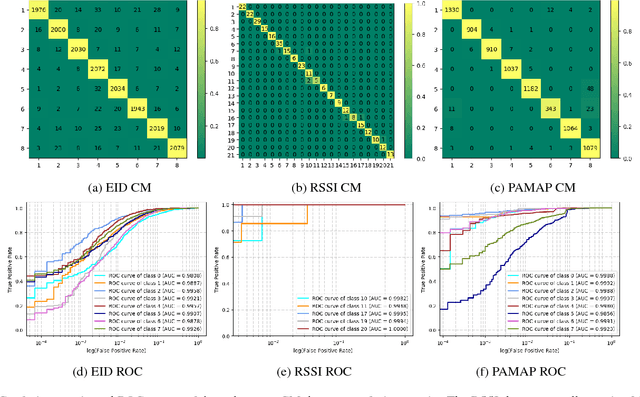

Multimodal wearable sensor data classification plays an important role in ubiquitous computing and has a wide range of applications, from healthcare to entertainment. However, most existing work in this field employs domain-specific approaches and is thus ineffective in complex situations where multi-modality sensor data are collected. Moreover, wearable sensor data are less informative than conventional data such as text or images. In this paper, to improve the adaptability of such classification methods across different application domains, we turn the classification task into a game and apply a deep reinforcement learning scheme to deal with complex situations dynamically. Additionally, we introduce a selective attention mechanism into the reinforcement learning scheme to focus on the crucial dimensions of the data. This mechanism helps to capture extra information from the signal and can thus significantly improve the discriminative power of the classifier. We carry out several experiments on three wearable sensor datasets and demonstrate the competitive performance of the proposed approach compared to several state-of-the-art baselines.
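The abstract does not spell out the state, actions, or reward of the "game", so the following is only a minimal sketch of the general idea under stated assumptions: the state is a contiguous window of feature dimensions (a hypothetical "attention zone"), the actions shift or resize that window, and the reward is the accuracy of a classifier restricted to the selected dimensions. Random exploration stands in for the deep reinforcement learning agent, a nearest-centroid classifier stands in for the paper's classifier, and the data are synthetic. All function names and parameters here are illustrative, not taken from the paper.

```python
import numpy as np

def make_toy_data(n=200, dims=12, informative=(4, 8), seed=0):
    """Synthetic two-class data; only dims in [informative) carry class signal."""
    rng = np.random.default_rng(seed)
    y = rng.integers(0, 2, size=n)
    X = rng.normal(size=(n, dims))
    lo, hi = informative
    X[:, lo:hi] += 1.0 * y[:, None]  # class-dependent shift in informative dims
    return X, y

def window_reward(X, y, start, end):
    """Reward: training accuracy of nearest-centroid on dims [start, end)."""
    Z = X[:, start:end]
    c0 = Z[y == 0].mean(axis=0)
    c1 = Z[y == 1].mean(axis=0)
    closer_to_c1 = np.linalg.norm(Z - c1, axis=1) < np.linalg.norm(Z - c0, axis=1)
    return float((closer_to_c1.astype(int) == y).mean())

# Assumed action set: each action moves the (start, end) boundaries by one.
ACTIONS = {
    0: (-1, -1),  # shift window left
    1: (1, 1),    # shift window right
    2: (-1, 1),   # extend window on both sides
    3: (1, -1),   # condense window on both sides
}

def step(state, action, dims):
    """Apply an action; keep the old state if the new window would be invalid."""
    start, end = state
    d_start, d_end = ACTIONS[action]
    new_start, new_end = start + d_start, end + d_end
    if new_start < 0 or new_end > dims or new_end - new_start < 1:
        return state
    return (new_start, new_end)

def search_window(X, y, episodes=50, horizon=20, seed=0):
    """Random exploration (a stand-in for a learned policy) over windows."""
    rng = np.random.default_rng(seed)
    dims = X.shape[1]
    best_state = (0, dims)
    best_reward = window_reward(X, y, 0, dims)
    for _ in range(episodes):
        state = (0, dims)  # every episode starts from the full window
        for _ in range(horizon):
            state = step(state, int(rng.integers(0, 4)), dims)
            reward = window_reward(X, y, *state)
            if reward > best_reward:
                best_state, best_reward = state, reward
    return best_state, best_reward

if __name__ == "__main__":
    X, y = make_toy_data()
    print("full window reward:", window_reward(X, y, 0, X.shape[1]))
    print("best window, reward:", search_window(X, y))
```

In this toy setup the reward is measured on the same data used to fit the centroids, which is acceptable for illustrating the loop but would overstate accuracy in practice; a held-out split would be the natural next step. A learned policy would replace the random action choice in `search_window`.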
