Bilel Benjdira

NTIRE 2025 Image Shadow Removal Challenge Report

Jun 18, 2025

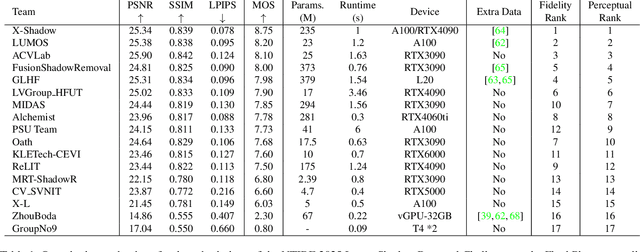

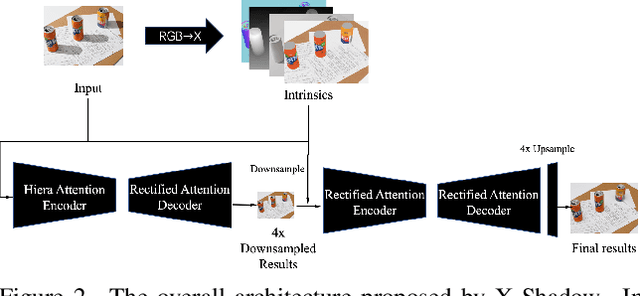

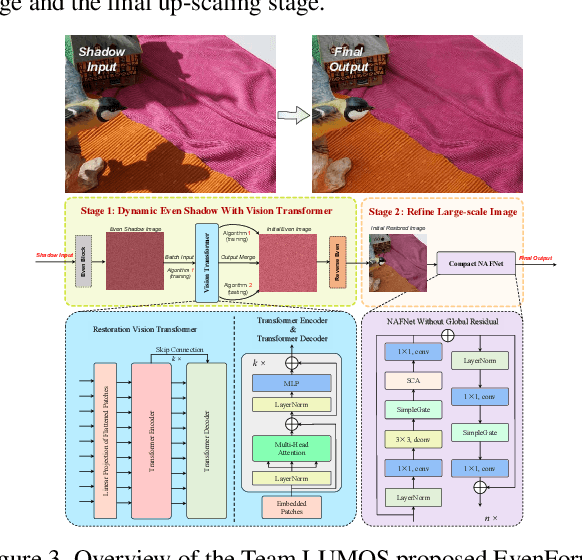

Abstract: This work examines the findings of the NTIRE 2025 Shadow Removal Challenge. A total of 306 participants registered, with 17 teams successfully submitting their solutions during the final evaluation phase. Following the last two editions, this challenge had two evaluation tracks: one focusing on reconstruction fidelity and the other on visual perception through a user study. Both tracks were evaluated with images from the WSRD+ dataset, simulating interactions between self- and cast-shadows with a large number of diverse objects, textures, and materials.

NTIRE 2025 Challenge on Image Super-Resolution ($\times$4): Methods and Results

Apr 20, 2025

Abstract: This paper presents the NTIRE 2025 image super-resolution ($\times$4) challenge, one of the associated competitions of the 10th NTIRE Workshop at CVPR 2025. The challenge aims to recover high-resolution (HR) images from low-resolution (LR) counterparts generated through bicubic downsampling with a $\times$4 scaling factor. The objective is to develop effective network designs or solutions that achieve state-of-the-art SR performance. To reflect the dual objectives of image SR research, the challenge includes two sub-tracks: (1) a restoration track, which emphasizes pixel-wise accuracy and ranks submissions based on PSNR; (2) a perceptual track, which focuses on visual realism and ranks results by a perceptual score. A total of 286 participants registered for the competition, with 25 teams submitting valid entries. This report summarizes the challenge design, datasets, evaluation protocol, main results, and the methods of each team. The challenge serves as a benchmark to advance the state of the art and foster progress in image SR.

The Tenth NTIRE 2025 Image Denoising Challenge Report

Apr 16, 2025

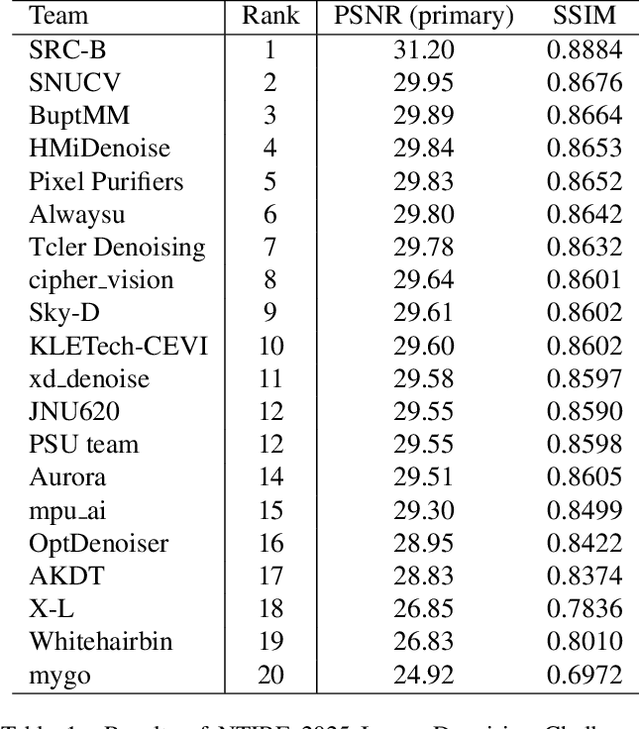

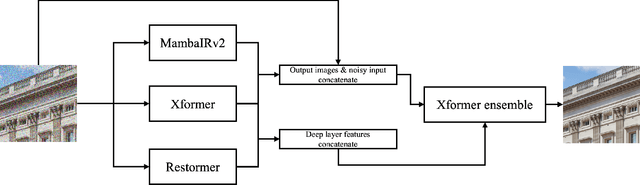

Abstract: This paper presents an overview of the NTIRE 2025 Image Denoising Challenge ($\sigma = 50$), highlighting the proposed methodologies and corresponding results. The primary objective is to develop a network architecture capable of achieving high-quality denoising performance, quantitatively evaluated using PSNR, without constraints on computational complexity or model size. The task assumes independent additive white Gaussian noise (AWGN) with a fixed noise level of 50. A total of 290 participants registered for the challenge, with 20 teams successfully submitting valid results, providing insights into the current state of the art in image denoising.
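The degradation and metric named in this abstract can be illustrated with a minimal sketch. It is not the challenge's evaluation code: the function names `add_awgn` and `psnr`, the flat gray test image, the 8-bit peak value of 255, and the choice not to clip noisy values are all assumptions made for illustration.

```python
import math
import random


def add_awgn(pixels, sigma=50.0, seed=0):
    """Add independent zero-mean Gaussian noise to each pixel (values are not clipped)."""
    rng = random.Random(seed)
    return [p + rng.gauss(0.0, sigma) for p in pixels]


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(reference) != len(estimate):
        raise ValueError("inputs must have the same number of pixels")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse)


# Hypothetical 64x64 flat gray image, flattened to a list of pixel values.
clean = [128.0] * (64 * 64)
noisy = add_awgn(clean, sigma=50.0)

# Without clipping, the expected MSE equals sigma^2, so the PSNR of the
# noisy input should be close to 10 * log10(255^2 / 50^2).
noisy_psnr = psnr(clean, noisy)
theoretical_psnr = 10.0 * math.log10(255.0 ** 2 / 50.0 ** 2)
```

Under these assumptions the unprocessed noisy input sits at roughly 14.15 dB, which is the baseline a denoiser has to improve on.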

Prediction of Arabic Legal Rulings using Large Language Models

Oct 16, 2023

Abstract: In the intricate field of legal studies, the analysis of court decisions is a cornerstone for the effective functioning of the judicial system. The ability to predict court outcomes helps judges during the decision-making process and equips lawyers with invaluable insights, enhancing their strategic approaches to cases. Despite its significance, the domain of Arabic court analysis remains under-explored. This paper pioneers a comprehensive predictive analysis of Arabic court decisions on a dataset of 10,813 real commercial court cases, leveraging the advanced capabilities of the current state-of-the-art large language models. Through a systematic exploration, we evaluate three prevalent foundational models (LLaMA-7b, JAIS-13b, and GPT-3.5-turbo) and three training paradigms: zero-shot, one-shot, and tailored fine-tuning. In addition, we assess the benefit of summarizing and/or translating the original Arabic input texts. This leads to a spectrum of 14 model variants, for which we offer a granular performance assessment with a series of different metrics (human assessment, GPT evaluation, ROUGE, and BLEU scores). We show that all variants of LLaMA models yield limited performance, whereas GPT-3.5-based models outperform all other models by a wide margin, surpassing the average score of the dedicated Arabic-centric JAIS model by 50%. Furthermore, we show that all scores except human evaluation are inconsistent and unreliable for assessing the performance of large language models on court decision predictions. This study paves the way for future research, bridging the gap between computational linguistics and Arabic legal analytics.

License Plate Super-Resolution Using Diffusion Models

Sep 21, 2023

Abstract: In surveillance, accurately recognizing license plates is hindered by their often low quality and small dimensions, compromising recognition precision. Despite advancements in AI-based image super-resolution, methods like Convolutional Neural Networks (CNNs) and Generative Adversarial Networks (GANs) still fall short in enhancing license plate images. This study leverages the cutting-edge diffusion model, which has consistently outperformed other deep learning techniques in image restoration. By training this model using a curated dataset of Saudi license plates, both in low and high resolutions, we discovered the diffusion model's superior efficacy. The method achieves a 12.55% and 37.32% improvement in Peak Signal-to-Noise Ratio (PSNR) over SwinIR and ESRGAN, respectively. Moreover, our method surpasses these techniques in terms of Structural Similarity Index (SSIM), registering a 4.89% and 17.66% improvement over SwinIR and ESRGAN, respectively. Furthermore, 92% of human evaluators preferred our images over those from other algorithms. In essence, this research presents a pioneering solution for license plate super-resolution, with tangible potential for surveillance systems.

Early Detection of Red Palm Weevil Infestations using Deep Learning Classification of Acoustic Signals

Aug 30, 2023

Abstract: The Red Palm Weevil (RPW), also known as the palm weevil, is considered among the world's most damaging insect pests of palms. Current detection techniques include the detection of symptoms of RPW using visual or sound inspection and chemical detection of volatile signatures generated by infested palm trees. However, efficient detection of RPW infestations at an early stage is considered one of the most challenging issues for cultivating date palms. In this paper, an efficient approach to the early detection of RPW is proposed. The proposed approach is based on recording and analyzing RPW sound activity. The first step involves the conversion of sound data into images based on a selected set of features. The second step involves the combination of images from the same sound file but computed by different features into a single image. The third step involves the application of different Deep Learning (DL) techniques to classify the resulting images into two classes: infested and not infested. Experimental results show good performance of the proposed approach for RPW detection using different DL techniques, namely MobileNetV2, ResNet50V2, ResNet152V2, VGG16, VGG19, DenseNet121, DenseNet201, Xception, and InceptionV3. The proposed approach outperformed existing techniques on public datasets.

ROSGPT_Vision: Commanding Robots Using Only Language Models' Prompts

Aug 23, 2023

Abstract: In this paper, we argue that the next generation of robots can be commanded using only Language Models' prompts. Every prompt separately interrogates a specific Robotic Modality via its Modality Language Model (MLM). A central Task Modality mediates the whole communication to execute the robotic mission via a Large Language Model (LLM). This paper names this new robotic design pattern Prompting Robotic Modalities (PRM). Moreover, this paper applies the PRM design pattern in building a new robotic framework named ROSGPT_Vision. ROSGPT_Vision allows the execution of a robotic task using only two prompts: a Visual Prompt and an LLM Prompt. The Visual Prompt extracts, in natural language, the visual semantic features related to the task under consideration (Visual Robotic Modality). Meanwhile, the LLM Prompt regulates the robotic reaction to the visual description (Task Modality). The framework automates all the mechanisms behind these two prompts. It enables the robot to address complex real-world scenarios by processing visual data, making informed decisions, and carrying out actions automatically. The framework comprises one generic vision module and two independent ROS nodes. As a test application, we used ROSGPT_Vision to develop CarMate, which monitors the driver's distraction on the road and gives real-time vocal notifications to the driver. We showed how ROSGPT_Vision significantly reduced the development cost compared to traditional methods. We demonstrated how to improve the quality of the application by optimizing the prompting strategies, without delving into technical details. ROSGPT_Vision is shared with the community (link: https://github.com/bilel-bj/ROSGPT_Vision) to advance robotic research in this direction and to build more robotic frameworks that implement the PRM design pattern and enable controlling robots using only prompts.

Streamlined Global and Local Features Combinator (SGLC) for High Resolution Image Dehazing

Apr 26, 2023

Abstract: Image Dehazing aims to remove atmospheric fog or haze from an image. Although Dehazing models have evolved considerably in recent years, few have specifically tackled the problem of High-Resolution hazy images. For this kind of image, the model needs to work on a downscaled version of the image or on cropped patches from it. In both cases, the accuracy will drop. This is primarily due to the inherent failure to combine global and local features when the image size increases. The Dehazing model requires global features to understand the general scene peculiarities and local features to work better with fine, pixel-level details. In this study, we propose the Streamlined Global and Local Features Combinator (SGLC) to solve these issues and to optimize the application of any Dehazing model to High-Resolution images. The SGLC contains two successive blocks. The first is the Global Features Generator (GFG), which generates the first version of the Dehazed image containing strong global features. The second block is the Local Features Enhancer (LFE), which improves the local feature details inside the previously generated image. When tested on the Uformer architecture for Dehazing, SGLC increased the PSNR metric by a significant margin. Any other model can be incorporated inside the SGLC process to improve its efficiency on High-Resolution input data.

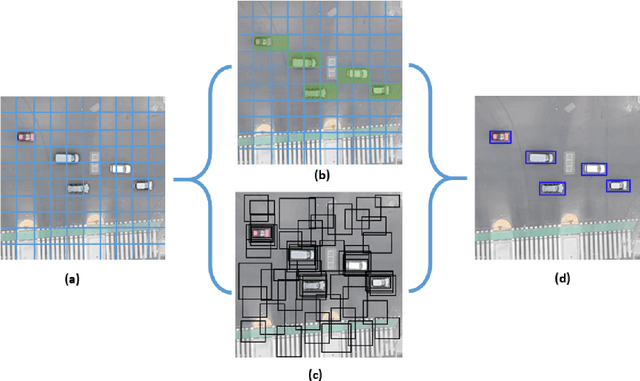

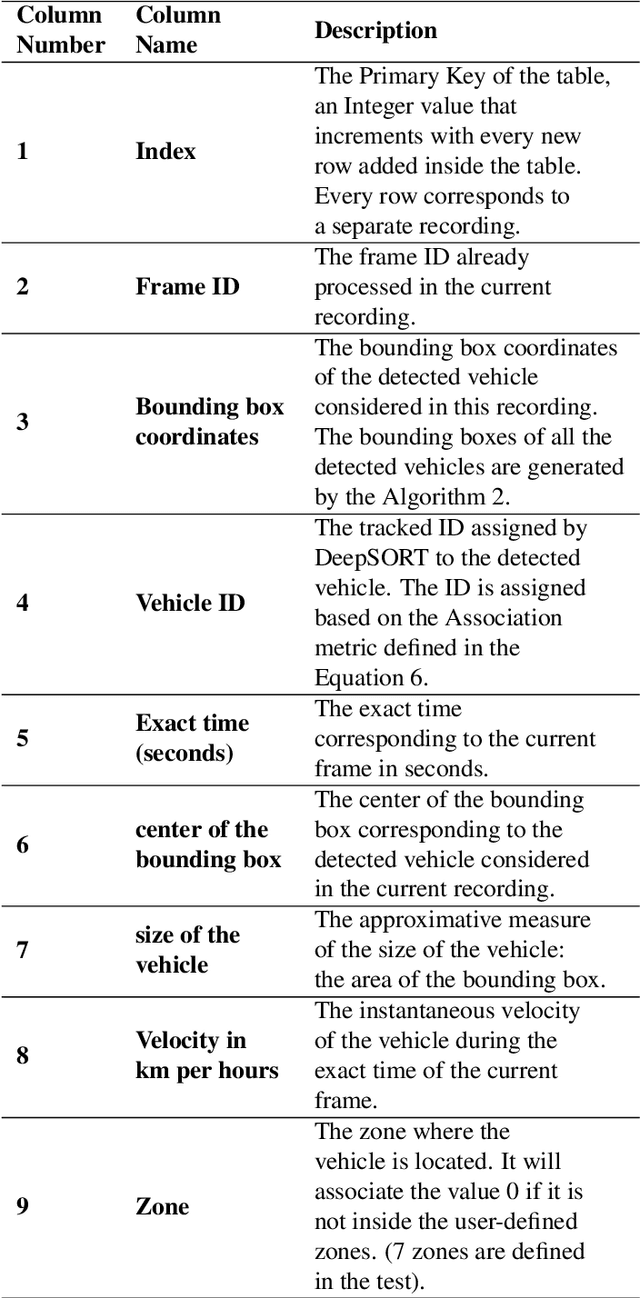

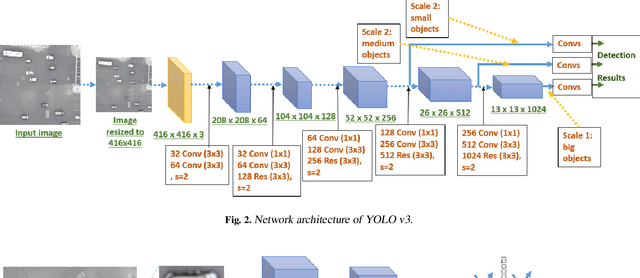

TAU: A Framework for Video-Based Traffic Analytics Leveraging Artificial Intelligence and Unmanned Aerial Systems

Mar 01, 2023

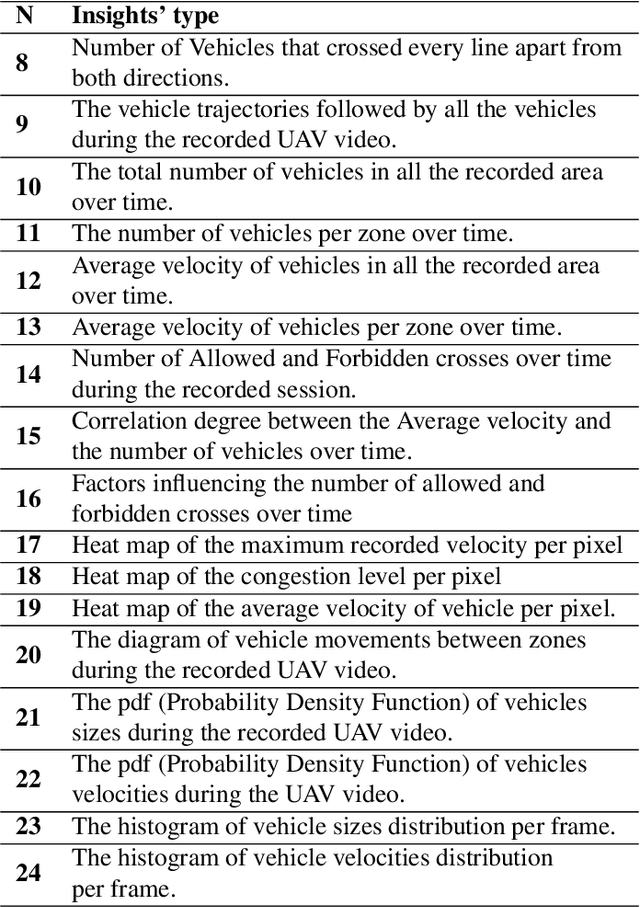

Abstract: Smart traffic engineering and intelligent transportation services are in increasing demand from governmental authorities to optimize traffic performance and thus reduce energy costs, increase drivers' safety and comfort, enforce traffic laws, and detect traffic violations. In this paper, we address this challenge by leveraging Artificial Intelligence (AI) and Unmanned Aerial Vehicles (UAVs) to develop an AI-integrated video analytics framework, called TAU (Traffic Analysis from UAVs), for automated traffic analytics and understanding. Unlike previous works on traffic video analytics, we propose an automated object detection and tracking pipeline from video processing to advanced traffic understanding using high-resolution UAV images. TAU combines six main contributions. First, it proposes a pre-processing algorithm to adapt the high-resolution UAV image as input to the object detector without lowering the resolution. This ensures excellent detection accuracy from high-quality features, particularly for the small objects detected in UAV images. Second, it introduces an algorithm for recalibrating the vehicle coordinates to ensure that vehicles are uniquely identified and tracked across the multiple crops of the same frame. Third, it presents a speed calculation algorithm based on accumulating information from successive frames. Fourth, TAU counts the number of vehicles per traffic zone based on the Ray Tracing algorithm. Fifth, TAU has a fully independent algorithm for crossroad arbitration based on the data gathered from the different zones surrounding it. Sixth, TAU introduces a set of algorithms for extracting twenty-four types of insights from the raw data collected. The code is shared here: https://github.com/bilel-bj/TAU. Video demonstrations are provided here: https://youtu.be/wXJV0H7LviU and here: https://youtu.be/kGv0gmtVEbI.

* This is the final proofread version submitted to Elsevier EAAI: please see the published version at: https://doi.org/10.1016/j.engappai.2022.105095
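The second and third contributions listed in the TAU abstract (recalibrating crop coordinates and computing speed from successive frames) can be sketched roughly as follows. This is not the code from the TAU repository: the helper names `crop_to_frame`, `box_center`, and `average_speed`, the `(x1, y1, x2, y2)` box layout, and the fixed `meters_per_pixel` scale are hypothetical choices made for illustration.

```python
import math


def crop_to_frame(box, crop_origin):
    """Shift a box (x1, y1, x2, y2) from crop coordinates to full-frame coordinates."""
    x1, y1, x2, y2 = box
    ox, oy = crop_origin  # top-left corner of the crop inside the full frame
    return (x1 + ox, y1 + oy, x2 + ox, y2 + oy)


def box_center(box):
    """Return the center point of a box (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def average_speed(centers, fps, meters_per_pixel):
    """Average speed in m/s from the per-frame centers of one tracked vehicle."""
    if len(centers) < 2:
        return 0.0
    # Accumulate the path length over all consecutive frame pairs.
    path_px = sum(math.dist(a, b) for a, b in zip(centers, centers[1:]))
    elapsed_s = (len(centers) - 1) / fps
    return path_px * meters_per_pixel / elapsed_s


# A detection at (10, 20, 30, 40) inside a crop whose top-left corner
# sits at (1000, 500) in the full-resolution frame.
frame_box = crop_to_frame((10, 20, 30, 40), (1000, 500))

# Three successive centers of one track, sampled at 2 frames per second,
# with an assumed ground resolution of 0.5 m per pixel.
speed = average_speed([(0, 0), (3, 4), (6, 8)], fps=2, meters_per_pixel=0.5)
```

Mapping every crop's detections into one shared frame coordinate system is what lets a tracker treat a vehicle seen in two adjacent crops as a single object; averaging over several frames, rather than one frame pair, smooths out per-frame detection jitter.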

Parking Analytics Framework using Deep Learning

Mar 15, 2022

Abstract: With the number of vehicles continuously increasing, parking monitoring and analysis are becoming a substantial feature of modern cities. In this study, we present a methodology to monitor car parking areas and to analyze their occupancy in real time. The solution is based on a combination of image analysis and deep learning techniques. It incorporates four building blocks arranged in a pipeline: vehicle detection, vehicle tracking, manual annotation of parking slots, and occupancy estimation using the Ray Tracing algorithm. The aim of this methodology is to optimize the use of parking areas and to reduce the time drivers waste every day looking for a suitable parking slot. It also helps to better manage the space of the parking areas and to discover cases of misuse. A demonstration of the provided solution is shown in the following video link: https://www.youtube.com/watch?v=KbAt8zT14Tc.
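The occupancy-estimation step can be illustrated with a small sketch. It assumes that the "Ray Tracing algorithm" mentioned in the abstract refers to the classic ray-casting (even-odd) point-in-polygon test, which the abstract does not confirm. The function names `point_in_polygon` and `slot_occupancy`, and the use of vehicle center points, are likewise hypothetical.

```python
def point_in_polygon(point, polygon):
    """Even-odd ray casting: count crossings of a horizontal ray going right from the point."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's height can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def slot_occupancy(slots, vehicle_centers):
    """Mark each annotated slot as occupied if any vehicle center lies inside it."""
    return {
        name: any(point_in_polygon(center, polygon) for center in vehicle_centers)
        for name, polygon in slots.items()
    }


# Two manually annotated rectangular slots (as polygons) and one detected vehicle.
slots = {
    "A": [(0, 0), (2, 0), (2, 4), (0, 4)],
    "B": [(3, 0), (5, 0), (5, 4), (3, 4)],
}
occupancy = slot_occupancy(slots, [(1, 2)])
```

Because the slots are stored as arbitrary polygons, the same test works for slanted or irregular slots as seen from an oblique camera, which is one plausible reason to prefer it over axis-aligned box overlap.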
