Ben Lengerich

FastCache: Fast Caching for Diffusion Transformer Through Learnable Linear Approximation

May 26, 2025

Abstract:Diffusion Transformers (DiT) are powerful generative models but remain computationally intensive due to their iterative structure and deep transformer stacks. To alleviate this inefficiency, we propose FastCache, a hidden-state-level caching and compression framework that accelerates DiT inference by exploiting redundancy within the model's internal representations. FastCache introduces a dual strategy: (1) a spatial-aware token selection mechanism that adaptively filters redundant tokens based on hidden-state saliency, and (2) a transformer-level cache that reuses latent activations across timesteps when changes are statistically insignificant. These modules work jointly to reduce unnecessary computation while preserving generation fidelity through learnable linear approximation. Theoretical analysis shows that FastCache maintains bounded approximation error under a hypothesis-testing-based decision rule. Empirical evaluations across multiple DiT variants demonstrate substantial reductions in latency and memory usage, with the best generation quality among the compared cache methods, as measured by FID and t-FID. The code for FastCache is available on GitHub at https://github.com/NoakLiu/FastCache-xDiT.
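The caching idea can be illustrated with a small sketch. It assumes a relative-change statistic compared against a fixed threshold `tau` (a hypothetical stand-in for the paper's hypothesis-test critical value) and fits the linear surrogate by ordinary least squares on previously computed (input, output) pairs. `LinearCacheBlock` and `select_salient_tokens` are illustrative names, not functions from the FastCache-xDiT repository.

```python
import numpy as np


class LinearCacheBlock:
    """Illustrative cache around an expensive block (hypothetical, not the FastCache code).

    If the block input barely changed since the previous timestep, return a
    learned linear approximation h @ W + b instead of recomputing the block.
    """

    def __init__(self, block, tau=0.05):
        self.block = block        # expensive callable, e.g. a transformer layer
        self.tau = tau            # assumed threshold on relative change (stand-in for a test's critical value)
        self.prev_input = None
        self.W = None
        self.b = None
        self.inputs, self.outputs = [], []

    def relative_change(self, h):
        # squared Frobenius norm of the change, normalized by the previous input
        diff = np.linalg.norm(h - self.prev_input) ** 2
        return diff / (np.linalg.norm(self.prev_input) ** 2 + 1e-12)

    def fit_surrogate(self):
        # least-squares fit of out ~ h @ W + b over every token row seen so far
        X = np.vstack(self.inputs)
        Y = np.vstack(self.outputs)
        X1 = np.hstack([X, np.ones((X.shape[0], 1))])
        theta, *_ = np.linalg.lstsq(X1, Y, rcond=None)
        self.W, self.b = theta[:-1], theta[-1]

    def __call__(self, h):
        """h has shape (num_tokens, hidden_dim); returns (output, cache_hit)."""
        if self.W is not None and self.relative_change(h) < self.tau:
            self.prev_input = h
            return h @ self.W + self.b, True   # cache hit: cheap linear path
        out = self.block(h)                    # cache miss: full computation
        self.inputs.append(h)
        self.outputs.append(out)
        self.fit_surrogate()
        self.prev_input = h
        return out, False


def select_salient_tokens(h, h_prev, keep_ratio=0.5):
    """Indices of the tokens whose hidden states moved most since the last step."""
    scores = np.linalg.norm(h - h_prev, axis=-1)
    k = max(1, int(round(keep_ratio * h.shape[0])))
    return np.sort(np.argsort(scores)[-k:])
```

The fixed threshold and the refit-on-every-miss strategy are simplifications chosen for brevity; token selection would restrict the full computation to the returned indices.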

Patient-Specific Models of Treatment Effects Explain Heterogeneity in Tuberculosis

Nov 16, 2024

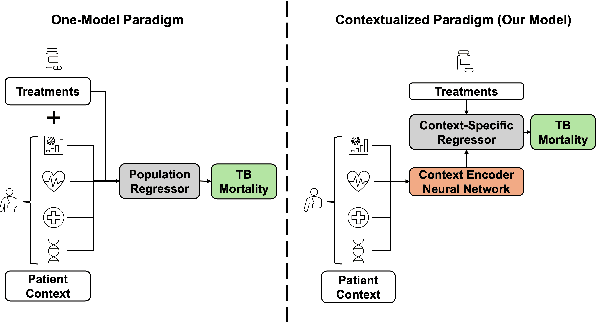

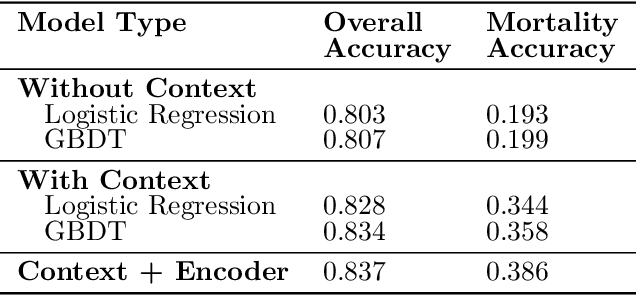

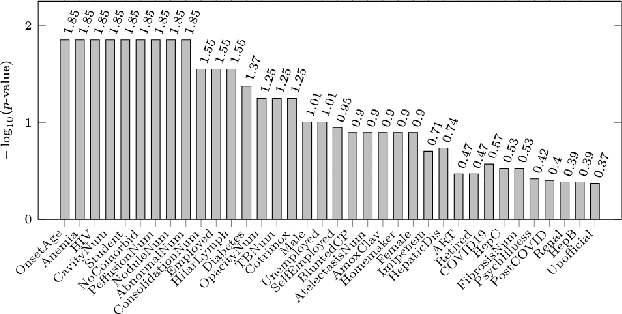

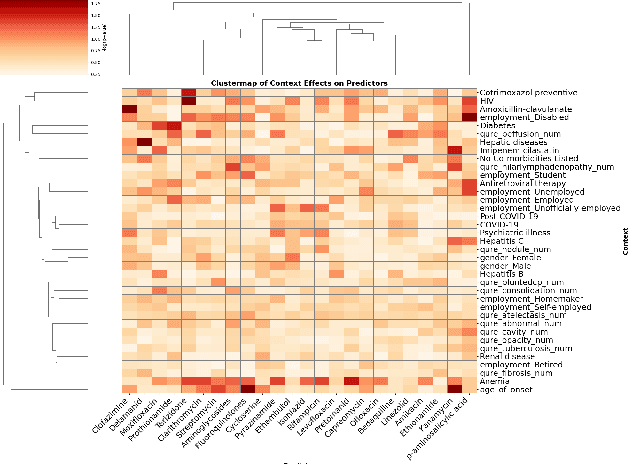

Abstract:Tuberculosis (TB) is a major global health challenge, compounded by co-morbidities such as HIV, diabetes, and anemia that complicate treatment outcomes and contribute to heterogeneous patient responses. Traditional models of TB often overlook this heterogeneity by focusing on broad, pre-defined patient groups, thereby missing the nuanced effects of individual patient contexts. We propose moving beyond coarse subgroup analyses by using contextualized modeling, a multi-task learning approach that encodes patient context into personalized models of treatment effects, revealing patient-specific treatment benefits. Applied to the TB Portals dataset with multi-modal measurements for over 3,000 TB patients, our model reveals structured interactions between co-morbidities, treatments, and patient outcomes, identifying anemia, age of onset, and HIV as influential for treatment efficacy. By enhancing predictive accuracy in heterogeneous populations and providing patient-specific insights, contextualized models promise to enable new approaches to personalized treatment.
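A minimal sketch of a contextualized linear model, assuming a linear context encoder beta(c) = A c + b (the published model may use a different, nonlinear encoder). Because the outcome y = x · (A c + b) is linear in A and b, the encoder can be fit by least squares on context-by-covariate interaction terms; the coefficient on a treatment indicator then gives a per-patient treatment effect. All function names are hypothetical.

```python
import numpy as np


def interaction_features(X, C):
    """Expand samples so y = sum_j x_j * (A[j] @ c + b[j]) becomes a linear regression.

    X: (n, p) patient covariates incl. treatment indicators; C: (n, k) patient context.
    """
    n = X.shape[0]
    XC = (X[:, :, None] * C[:, None, :]).reshape(n, -1)  # x_j * c_k terms, j-major
    return np.hstack([XC, X])                              # plus the x_j terms for b


def fit_contextualized_linear(X, C, y):
    """Least-squares estimate of A (p, k) and b (p,) for beta(c) = A @ c + b (assumed linear encoder)."""
    p, k = X.shape[1], C.shape[1]
    theta, *_ = np.linalg.lstsq(interaction_features(X, C), y, rcond=None)
    A = theta[: p * k].reshape(p, k)   # how context shifts each coefficient
    b = theta[p * k:]                  # baseline coefficients shared by all patients
    return A, b


def patient_coefficients(A, b, C):
    """Row i is the personalized coefficient vector beta(c_i) = A @ c_i + b."""
    return C @ A.T + b
```

If column 1 of `X` is a treatment indicator, `patient_coefficients(A, b, C)[:, 1]` is the estimated treatment effect for each patient, which is the kind of patient-level quantity a subgroup analysis cannot resolve.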

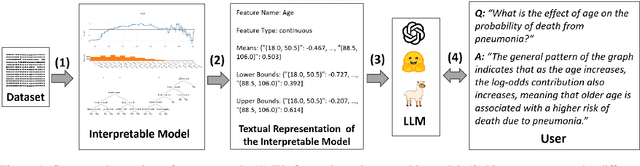

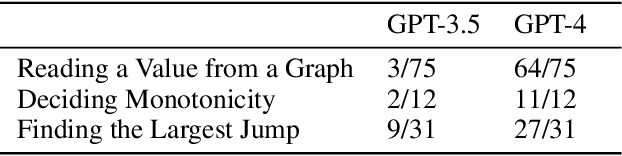

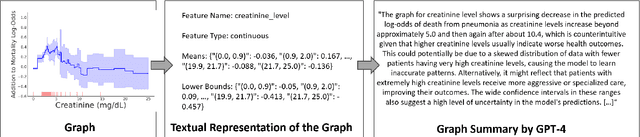

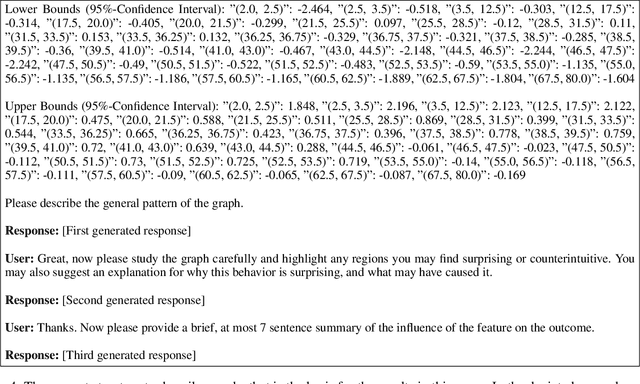

Data Science with LLMs and Interpretable Models

Feb 22, 2024

Abstract:Recent years have seen important advances in building interpretable models, machine learning models that are designed to be easily understood by humans. In this work, we show that large language models (LLMs) are remarkably good at working with interpretable models, too. In particular, we show that LLMs can describe, interpret, and debug Generalized Additive Models (GAMs). Combining the flexibility of LLMs with the breadth of statistical patterns accurately described by GAMs enables dataset summarization, question answering, and model critique. LLMs can also improve the interaction between domain experts and interpretable models, and generate hypotheses about the underlying phenomenon. We release https://github.com/interpretml/TalkToEBM as an open-source LLM-GAM interface.
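One way to let an LLM read a GAM is to serialize each shape function as text. The sketch below assumes a shape function stored as bin edges plus one additive score per bin; `describe_shape_function` is a hypothetical helper, not part of the TalkToEBM API.

```python
def describe_shape_function(feature, bin_edges, scores, precision=2):
    """Render one GAM shape function as plain text for an LLM prompt (hypothetical helper).

    bin_edges has one more entry than scores; scores[i] is the additive
    contribution to the model output for values in [bin_edges[i], bin_edges[i+1]).
    """
    if len(bin_edges) != len(scores) + 1:
        raise ValueError("need exactly one more bin edge than scores")
    lines = [f"Feature '{feature}': additive contribution to the model output by interval"]
    for lo, hi, s in zip(bin_edges[:-1], bin_edges[1:], scores):
        lines.append(f"  [{lo:.{precision}f}, {hi:.{precision}f}): {s:+.{precision}f}")
    peak = max(range(len(scores)), key=lambda i: scores[i])
    lines.append(f"Largest contribution: {scores[peak]:+.{precision}f} "
                 f"for {feature} in [{bin_edges[peak]:.{precision}f}, {bin_edges[peak + 1]:.{precision}f})")
    return "\n".join(lines)


# Example: wrap the description in a prompt asking the LLM to summarize and critique it.
prompt = (
    "Below is one component of a generalized additive model.\n"
    + describe_shape_function("age", [0, 40, 65, 100], [-0.5, 0.1, 0.8])
    + "\nSummarize this pattern and flag anything that looks implausible."
)
print(prompt)
```

Because each GAM term is a one-dimensional function, it can be described exactly in a few lines of text, which is what makes this kind of LLM interface possible.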

Executive Function: A Contrastive Value Policy for Resampling and Relabeling Perceptions via Hindsight Summarization?

Apr 27, 2022

Abstract:We develop the few-shot continual learning task from first principles and hypothesize an evolutionary motivation and mechanism of action for executive function as a contrastive value policy which resamples and relabels perception data via hindsight summarization to minimize attended prediction error, similar to an online prompt engineering problem. This is made feasible by a memory policy and a pretrained network with inductive biases for a grammar of learning, and the model is trained to maximize evolutionary survival. We show how this model of executive function can be used to implement hypothesis testing as a stream of consciousness and may explain observations of human few-shot learning and neuroanatomy.

NOTMAD: Estimating Bayesian Networks with Sample-Specific Structures and Parameters

Nov 01, 2021

Abstract:Context-specific Bayesian networks (i.e., directed acyclic graphs, DAGs) identify context-dependent relationships between variables, but the non-convexity induced by the acyclicity requirement makes it difficult to share information between context-specific estimators (e.g., with graph generator functions). For this reason, existing methods for inferring context-specific Bayesian networks have favored breaking datasets into subsamples, limiting statistical power and resolution, and preventing the use of multidimensional and latent contexts. To overcome this challenge, we propose NOTEARS-optimized Mixtures of Archetypal DAGs (NOTMAD). NOTMAD models context-specific Bayesian networks as the output of a function which learns to mix archetypal networks according to sample context. The archetypal networks are estimated jointly with the context-specific networks and do not require any prior knowledge. We encode the acyclicity constraint as a smooth regularization loss which is back-propagated to the mixing function; in this way, NOTMAD shares information between context-specific acyclic graphs, enabling the estimation of Bayesian network structures and parameters even at single-sample resolution. We demonstrate the utility of NOTMAD and sample-specific network inference through analysis and experiments, including patient-specific gene expression networks which correspond to morphological variation in cancer.
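The NOTEARS acyclicity function is h(W) = tr(exp(W ∘ W)) - d, which is zero exactly when the weighted adjacency matrix W describes a DAG. The sketch below truncates the exponential series at d terms (still zero if and only if the graph is acyclic, since any cycle among d nodes contains a simple cycle of length at most d) and mixes archetypal adjacency matrices with softmax weights from a linear map of the context. That mixing map and all function names are hypothetical simplifications of NOTMAD's learned mixing function.

```python
import numpy as np


def acyclicity(W):
    """NOTEARS-style penalty sum_{k=1..d} tr((W*W)^k) / k!; zero iff W has no directed cycle.

    Truncated at k = d (an assumption for this sketch; NOTEARS uses the full matrix exponential).
    """
    d = W.shape[0]
    A = W * W                    # elementwise square keeps entries non-negative
    term = np.eye(d)
    total = 0.0
    for k in range(1, d + 1):
        term = term @ A / k      # term now equals A^k / k!
        total += np.trace(term)
    return total


def softmax(z):
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()


def context_specific_dag(context, archetypes, mixing):
    """Mix archetypal adjacency matrices (K, d, d) with weights softmax(mixing @ context)."""
    weights = softmax(mixing @ context)               # one weight per archetype
    return np.tensordot(weights, archetypes, axes=1)  # sum_k w_k * W_k, shape (d, d)
```

Each archetype can be acyclic while the mixture is not, as the edges 0->1->2 and 2->0 show when mixed; this is why the penalty has to be applied to the mixed, context-specific graph and back-propagated to the mixing function.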

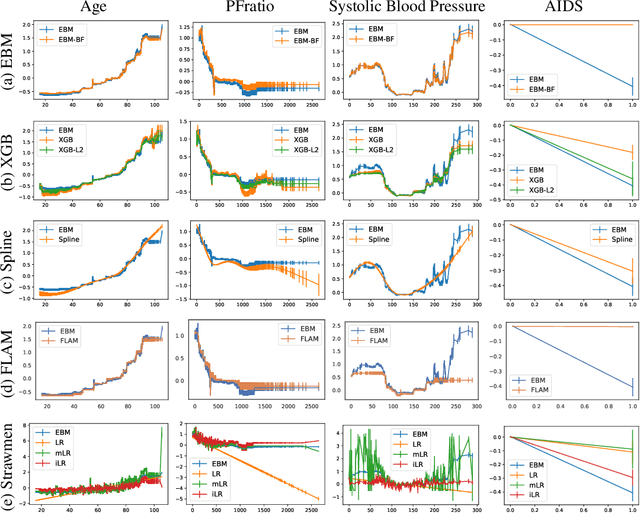

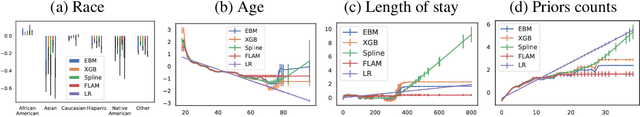

How Interpretable and Trustworthy are GAMs?

Jun 11, 2020

Abstract:Generalized additive models (GAMs) have become a leading model class for data bias discovery and model auditing. However, there are a variety of algorithms for training GAMs, and these do not always learn the same things. Statisticians originally used splines to train GAMs, but more recently GAMs are being trained with boosted decision trees. It is unclear which GAM model(s) to believe, particularly when their explanations are contradictory. In this paper, we investigate a variety of GAM algorithms both qualitatively and quantitatively on real and simulated datasets. Our results suggest that inductive bias plays a crucial role in model explanations and that tree-based GAMs are preferable for the kinds of problems and dataset sizes we worked with.
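Training a GAM with boosted trees can be sketched as cyclic gradient boosting: each round fits a one-split regression stump to the residuals on a single feature, so every feature accumulates its own shape function and the model stays additive. This is a simplified illustration under squared-error loss with hypothetical helper names, not the exact training procedure of any GAM library evaluated in the paper.

```python
import numpy as np


def fit_stump(x, r):
    """Best single-split regression stump on feature x for residuals r (squared error)."""
    n = len(x)
    if n < 2:
        return np.inf, r.mean(), r.mean()
    order = np.argsort(x)
    xs, rs = x[order], r[order]
    csum = np.cumsum(rs)
    sizes = np.arange(1, n)                           # left-child size for each split
    left = csum[:-1] / sizes
    right = (csum[-1] - csum[:-1]) / (n - sizes)
    gain = sizes * left**2 + (n - sizes) * right**2   # larger gain means lower SSE
    gain[xs[1:] == xs[:-1]] = -np.inf                 # cannot split between equal values
    if not np.isfinite(gain).any():
        return np.inf, r.mean(), r.mean()             # no valid split: constant stump
    j = int(np.argmax(gain))
    return (xs[j] + xs[j + 1]) / 2, left[j], right[j]


def fit_boosted_gam(X, y, n_rounds=100, lr=0.2):
    """Cyclic boosting: each round fits one shrunken stump per feature, in turn."""
    n, p = X.shape
    intercept = y.mean()
    stumps = [[] for _ in range(p)]                   # stumps[j] defines shape function f_j
    pred = np.full(n, intercept)
    for _ in range(n_rounds):
        for j in range(p):
            t, left, right = fit_stump(X[:, j], y - pred)
            stumps[j].append((t, lr * left, lr * right))
            pred += np.where(X[:, j] <= t, lr * left, lr * right)
    return intercept, stumps


def shape_function(stumps_j, x):
    """Additive contribution f_j(x): the sum of feature j's shrunken stumps."""
    return sum(np.where(x <= t, l, r) for t, l, r in stumps_j)


def predict(intercept, stumps, X):
    return intercept + sum(shape_function(s, X[:, j]) for j, s in enumerate(stumps))
```

Because each feature's shape function is a sum of step functions, a tree-based GAM can represent sharp jumps that a smoothing spline would blur, which is one concrete way the inductive bias of the training algorithm shows up in the explanation.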
