Balint Gyevnar

Objective Metrics for Human-Subjects Evaluation in Explainable Reinforcement Learning

Jan 31, 2025

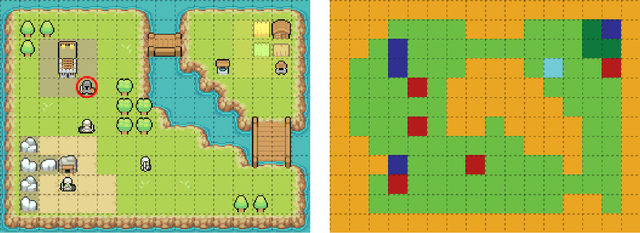

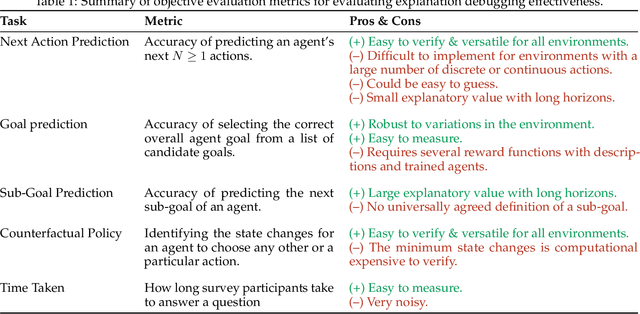

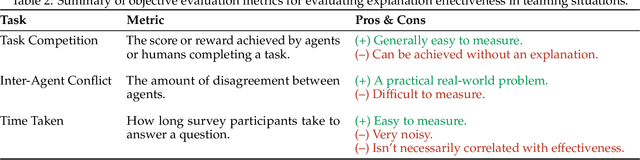

Abstract: Explanation is a fundamentally human process. Understanding the goal and audience of the explanation is vital, yet existing work on explainable reinforcement learning (XRL) routinely does not consult humans in their evaluations. Even when they do, they routinely resort to subjective metrics, such as confidence or understanding, that can only inform researchers of users' opinions, not their practical effectiveness for a given problem. This paper calls on researchers to use objective human metrics for explanation evaluations based on observable and actionable behaviour to build more reproducible, comparable, and epistemically grounded research. To this end, we curate, describe, and compare several objective evaluation methodologies for applying explanations to debugging agent behaviour and supporting human-agent teaming, illustrating our proposed methods using a novel grid-based environment. We discuss how subjective and objective metrics complement each other to provide holistic validation and how future work needs to utilise standardised benchmarks for testing to enable greater comparisons between research.

People Attribute Purpose to Autonomous Vehicles When Explaining Their Behavior

Mar 11, 2024

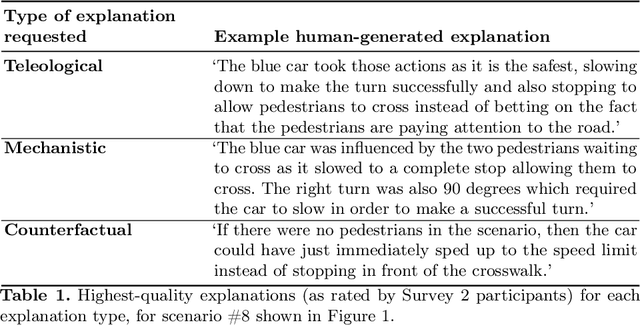

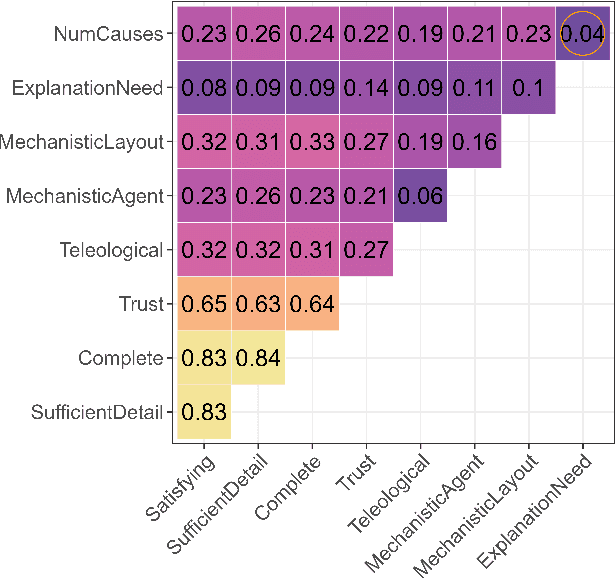

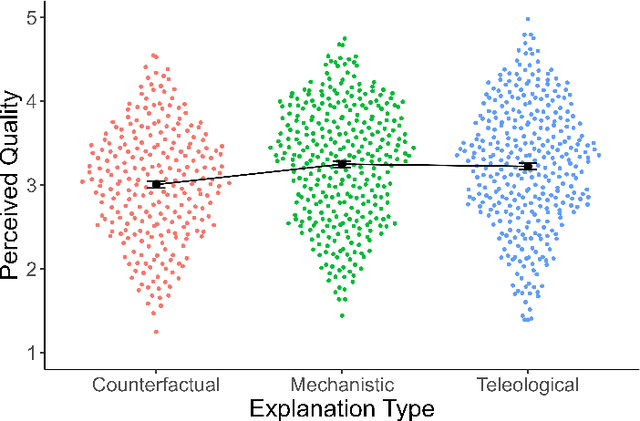

Abstract: A hallmark of a good XAI system is explanations that users can understand and act on. In many cases, this requires a system to offer causal or counterfactual explanations that are intelligible. Cognitive science can help us understand what kinds of explanations users might expect, and in which format to frame these explanations. We briefly review relevant literature from the cognitive science of explanation, particularly as it concerns teleology, the tendency to explain a decision in terms of the purpose it was meant to achieve. We then report empirical data on how people generate explanations for the behavior of autonomous vehicles, and how they evaluate these explanations. In a first survey, participants (n=54) were shown videos of a road scene and asked to generate either mechanistic, counterfactual, or teleological verbal explanations for a vehicle's actions. In the second survey, a different set of participants (n=356) rated these explanations along various metrics including quality, trustworthiness, and how much each explanatory mode was emphasized in the explanation. Participants deemed mechanistic and teleological explanations as significantly higher quality than counterfactual explanations. In addition, perceived teleology was the best predictor of perceived quality and trustworthiness. Neither perceived teleology nor quality ratings were affected by whether the car whose actions were being explained was an autonomous vehicle or was being driven by a person. The results show people use and value teleological concepts to evaluate information about both other people and autonomous vehicles, indicating they find the 'intentional stance' a convenient abstraction. We make our dataset of annotated video situations with explanations, called Human Explanations for Autonomous Driving Decisions (HEADD), publicly available, which we hope will prompt further research.

Explainable AI for Safe and Trustworthy Autonomous Driving: A Systematic Review

Feb 08, 2024

Abstract: Artificial Intelligence (AI) shows promising applications for the perception and planning tasks in autonomous driving (AD) due to its superior performance compared to conventional methods. However, inscrutable AI systems exacerbate the existing challenge of safety assurance of AD. One way to mitigate this challenge is to utilize explainable AI (XAI) techniques. To this end, we present the first comprehensive systematic literature review of explainable methods for safe and trustworthy AD. We begin by analyzing the requirements for AI in the context of AD, focusing on three key aspects: data, model, and agency. We find that XAI is fundamental to meeting these requirements. Based on this, we explain the sources of explanations in AI and describe a taxonomy of XAI. We then identify five key contributions of XAI for safe and trustworthy AI in AD, which are interpretable design, interpretable surrogate models, interpretable monitoring, auxiliary explanations, and interpretable validation. Finally, we propose a modular framework called SafeX to integrate these contributions, enabling explanation delivery to users while simultaneously ensuring the safety of AI models.

Aligning Explainable AI and the Law: The European Perspective

Feb 22, 2023

Abstract: The European Union has proposed the Artificial Intelligence Act intending to regulate AI systems, especially those used in high-risk, safety-critical applications such as healthcare. Among the Act's articles are detailed requirements for transparency and explainability. The field of explainable AI (XAI) offers technologies that could address many of these requirements. However, there are significant differences between the solutions offered by XAI and the requirements of the AI Act, for instance, the lack of an explicit definition of transparency. We argue that collaboration is essential between lawyers and XAI researchers to address these differences. To establish common ground, we give an overview of XAI and its legal relevance followed by a reading of the transparency and explainability requirements of the AI Act and the related General Data Protection Regulation (GDPR). We then discuss four main topics where the differences could induce issues. Specifically, the legal status of XAI, the lack of a definition of transparency, issues around conformity assessments, and the use of XAI for dataset-related transparency. We hope that increased clarity will promote interdisciplinary research between the law and XAI and support the creation of a sustainable regulation that fosters responsible innovation.

Causal Social Explanations for Stochastic Sequential Multi-Agent Decision-Making

Feb 21, 2023

Abstract: We present a novel framework to generate causal explanations for the decisions of agents in stochastic sequential multi-agent environments. Explanations are given via natural language conversations answering a wide range of user queries and requiring associative, interventionist, or counterfactual causal reasoning. Instead of assuming any specific causal graph, our method relies on a generative model of interactions to simulate counterfactual worlds which are used to identify the salient causes behind decisions. We implement our method for motion planning for autonomous driving and test it in simulated scenarios with coupled interactions. Our method correctly identifies and ranks the relevant causes and delivers concise explanations to the users' queries.

Deep Reinforcement Learning for Multi-Agent Interaction

Aug 02, 2022

Abstract: The development of autonomous agents which can interact with other agents to accomplish a given task is a core area of research in artificial intelligence and machine learning. Towards this goal, the Autonomous Agents Research Group develops novel machine learning algorithms for autonomous systems control, with a specific focus on deep reinforcement learning and multi-agent reinforcement learning. Research problems include scalable learning of coordinated agent policies and inter-agent communication; reasoning about the behaviours, goals, and composition of other agents from limited observations; and sample-efficient learning based on intrinsic motivation, curriculum learning, causal inference, and representation learning. This article provides a broad overview of the ongoing research portfolio of the group and discusses open problems for future directions.

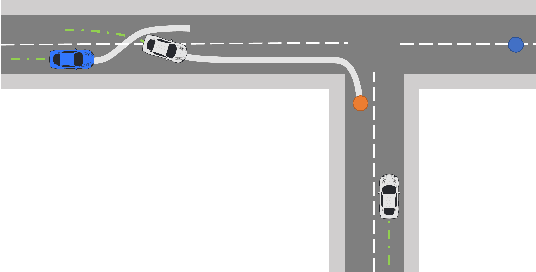

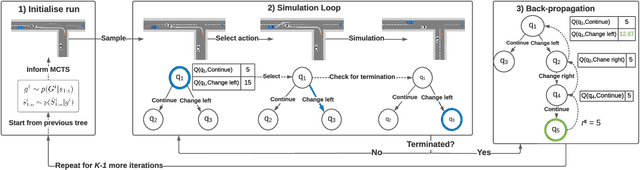

A Human-Centric Method for Generating Causal Explanations in Natural Language for Autonomous Vehicle Motion Planning

Jun 27, 2022

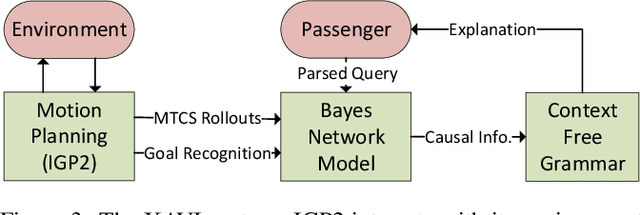

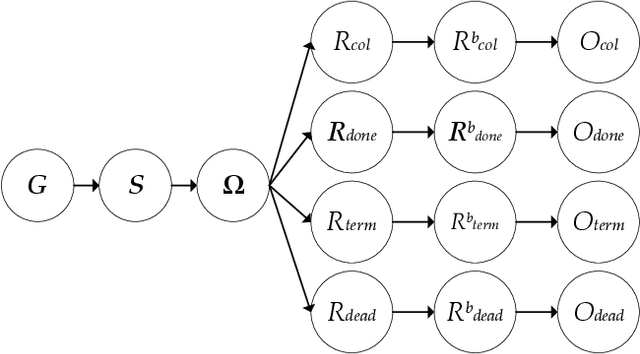

Abstract: Inscrutable AI systems are difficult to trust, especially if they operate in safety-critical settings like autonomous driving. Therefore, there is a need to build transparent and queryable systems to increase trust levels. We propose a transparent, human-centric explanation generation method for autonomous vehicle motion planning and prediction based on an existing white-box system called IGP2. Our method integrates Bayesian networks with context-free generative rules and can give causal natural language explanations for the high-level driving behaviour of autonomous vehicles. Preliminary testing on simulated scenarios shows that our method captures the causes behind the actions of autonomous vehicles and generates intelligible explanations with varying complexity.

GRIT: Verifiable Goal Recognition for Autonomous Driving using Decision Trees

Mar 10, 2021

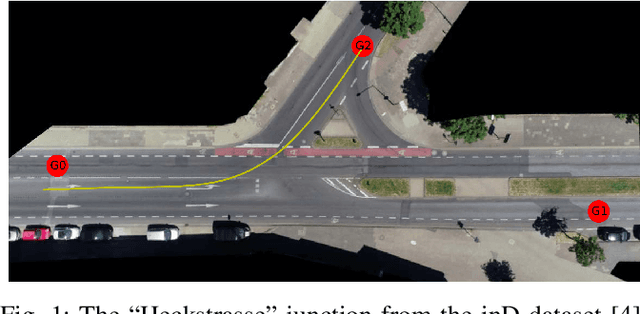

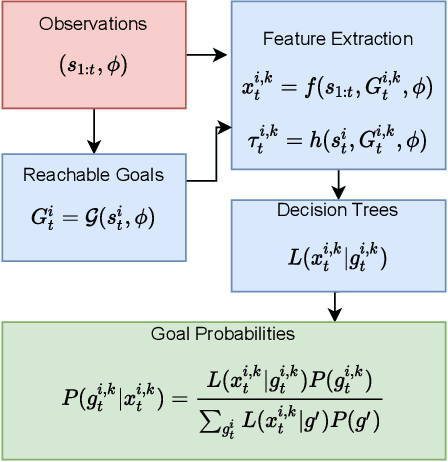

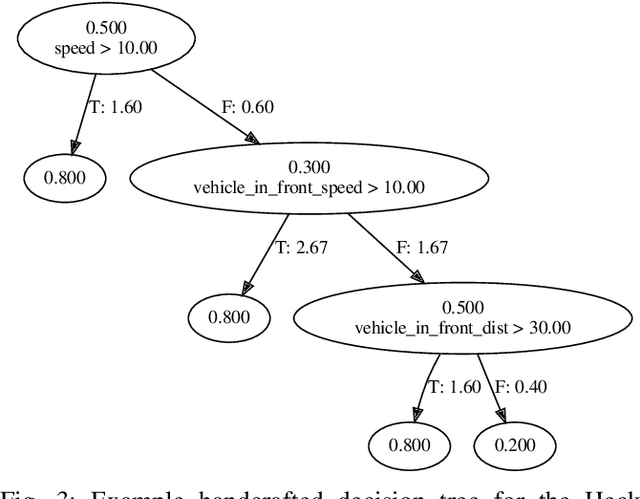

Abstract: It is useful for autonomous vehicles to have the ability to infer the goals of other vehicles (goal recognition), in order to safely interact with other vehicles and predict their future trajectories. Goal recognition methods must be fast to run in real time and make accurate inferences. As autonomous driving is safety-critical, it is important to have methods which are human interpretable and for which safety can be formally verified. Existing goal recognition methods for autonomous vehicles fail to satisfy all four objectives of being fast, accurate, interpretable and verifiable. We propose Goal Recognition with Interpretable Trees (GRIT), a goal recognition system for autonomous vehicles which achieves these objectives. GRIT makes use of decision trees trained on vehicle trajectory data. Evaluation on two vehicle trajectory datasets demonstrates the inference speed and accuracy of GRIT compared to an ablation and two deep learning baselines. We show that the learned trees are human interpretable and demonstrate how properties of GRIT can be formally verified using an SMT solver.
